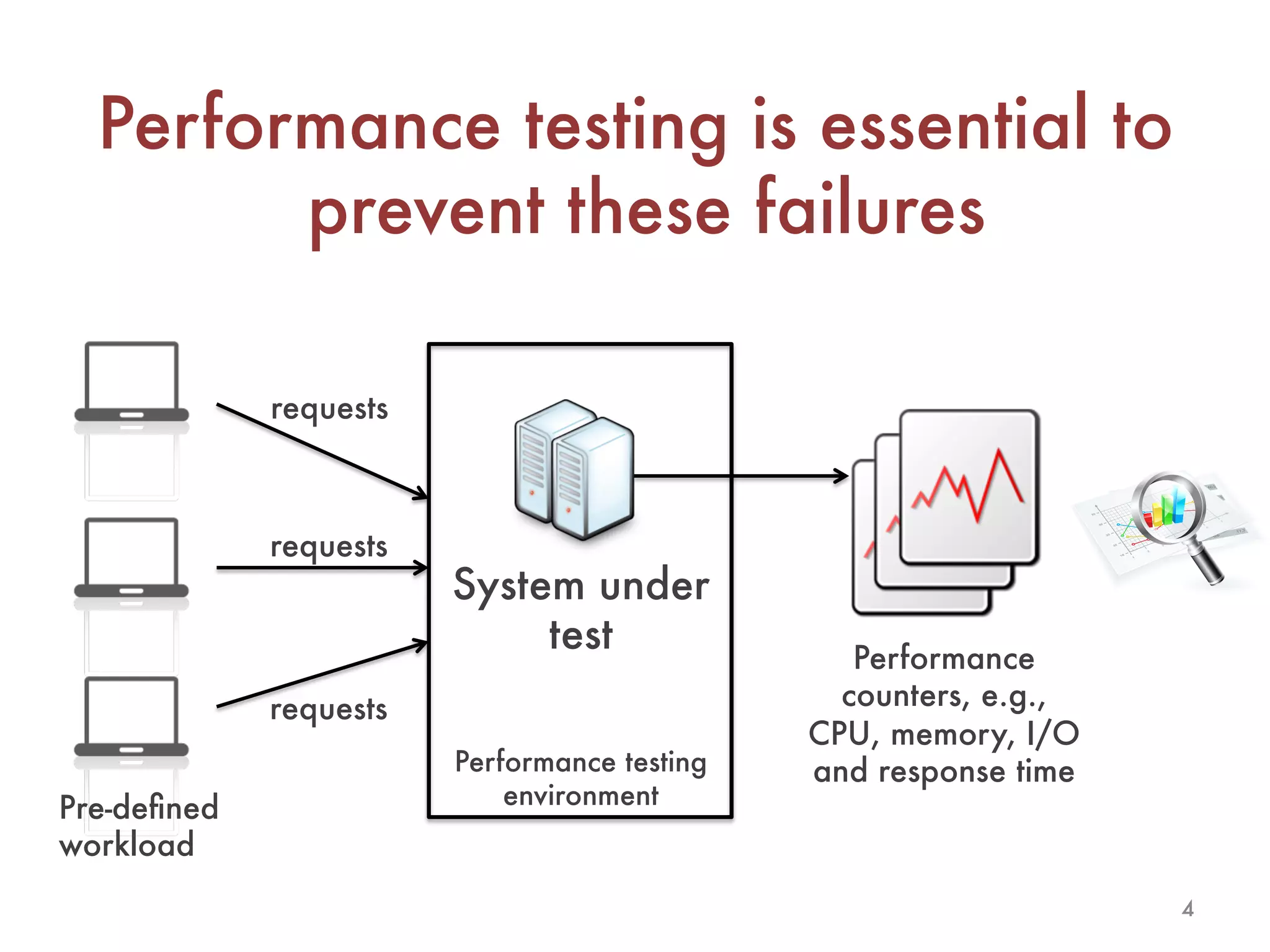

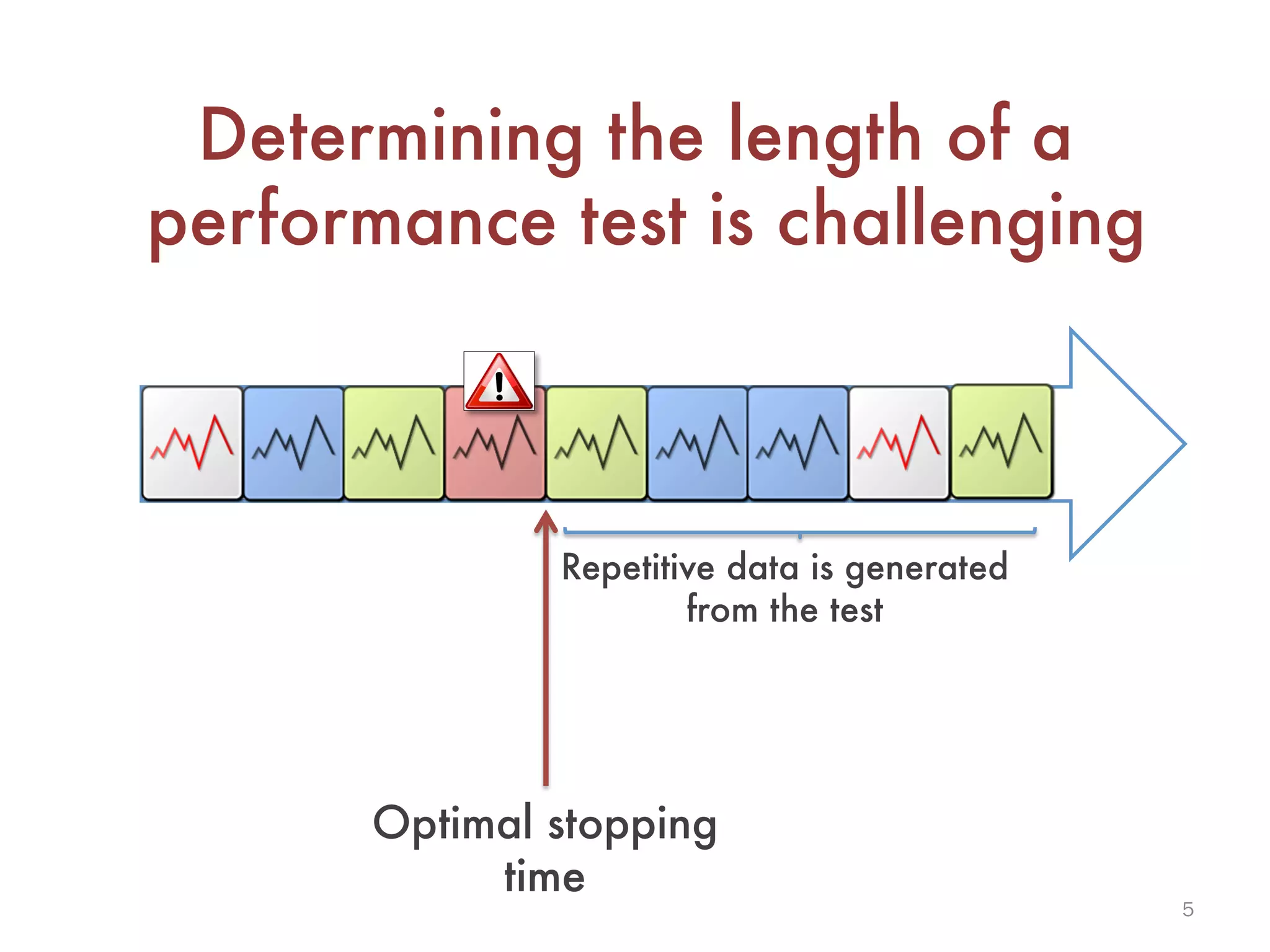

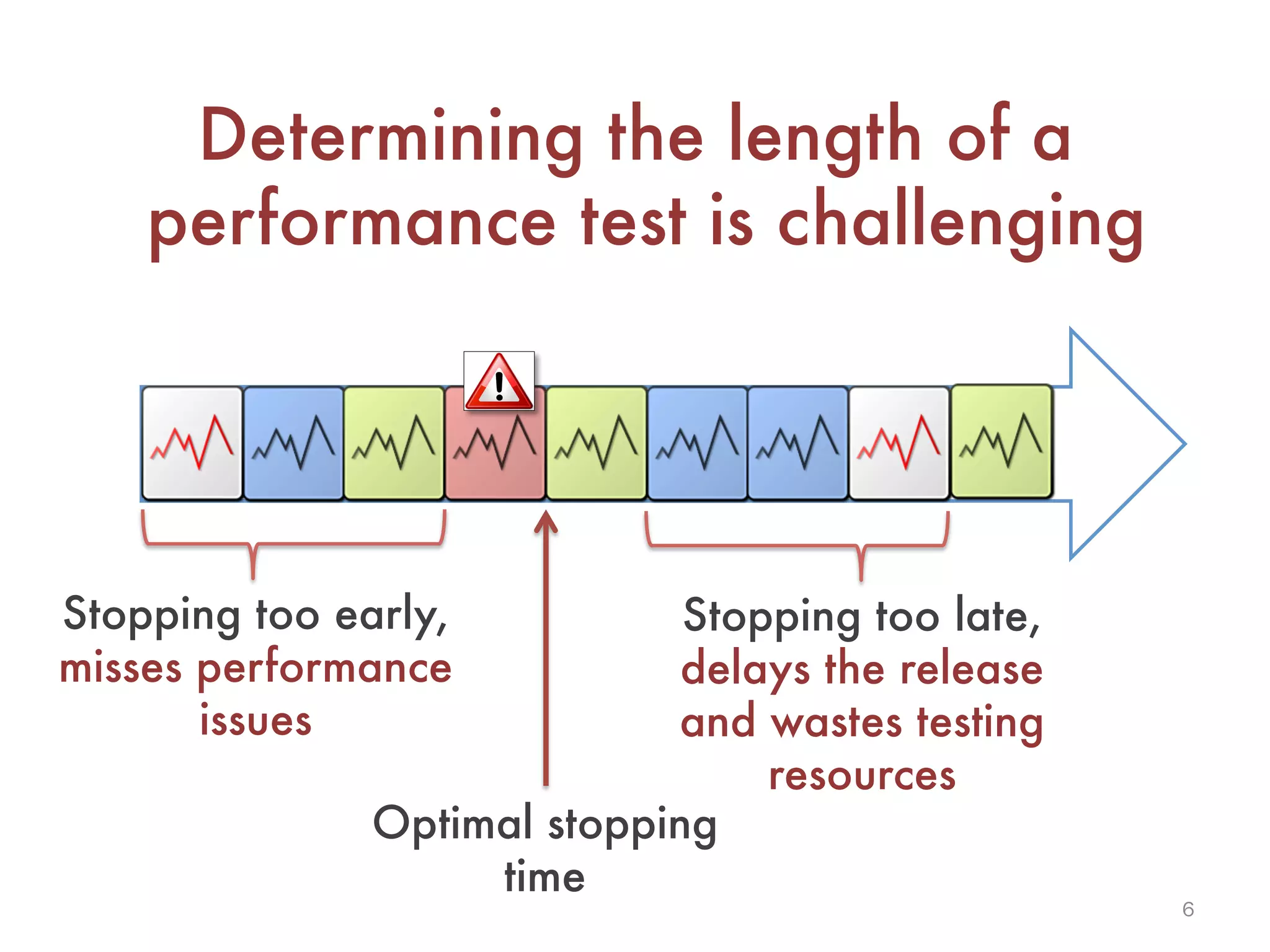

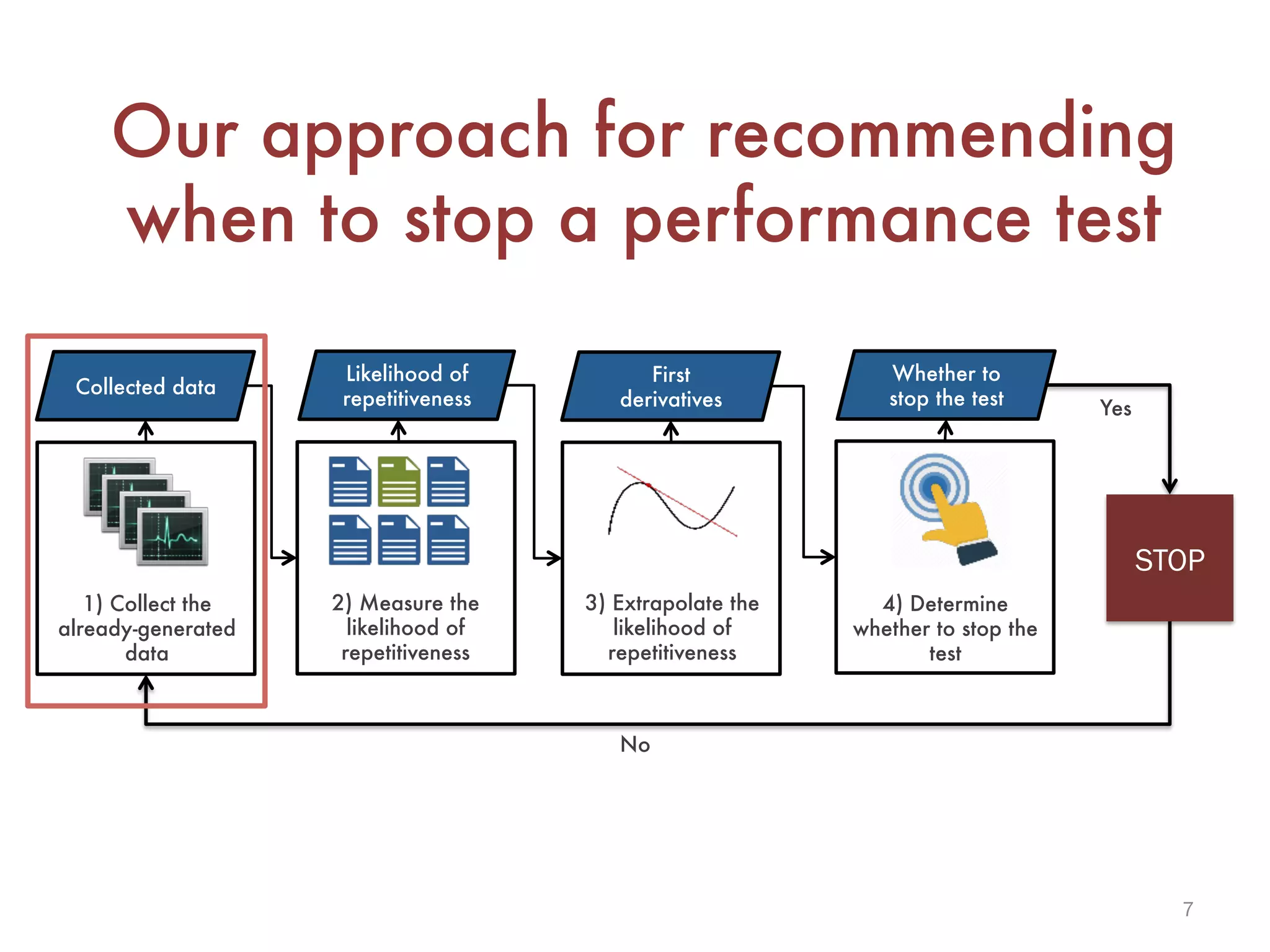

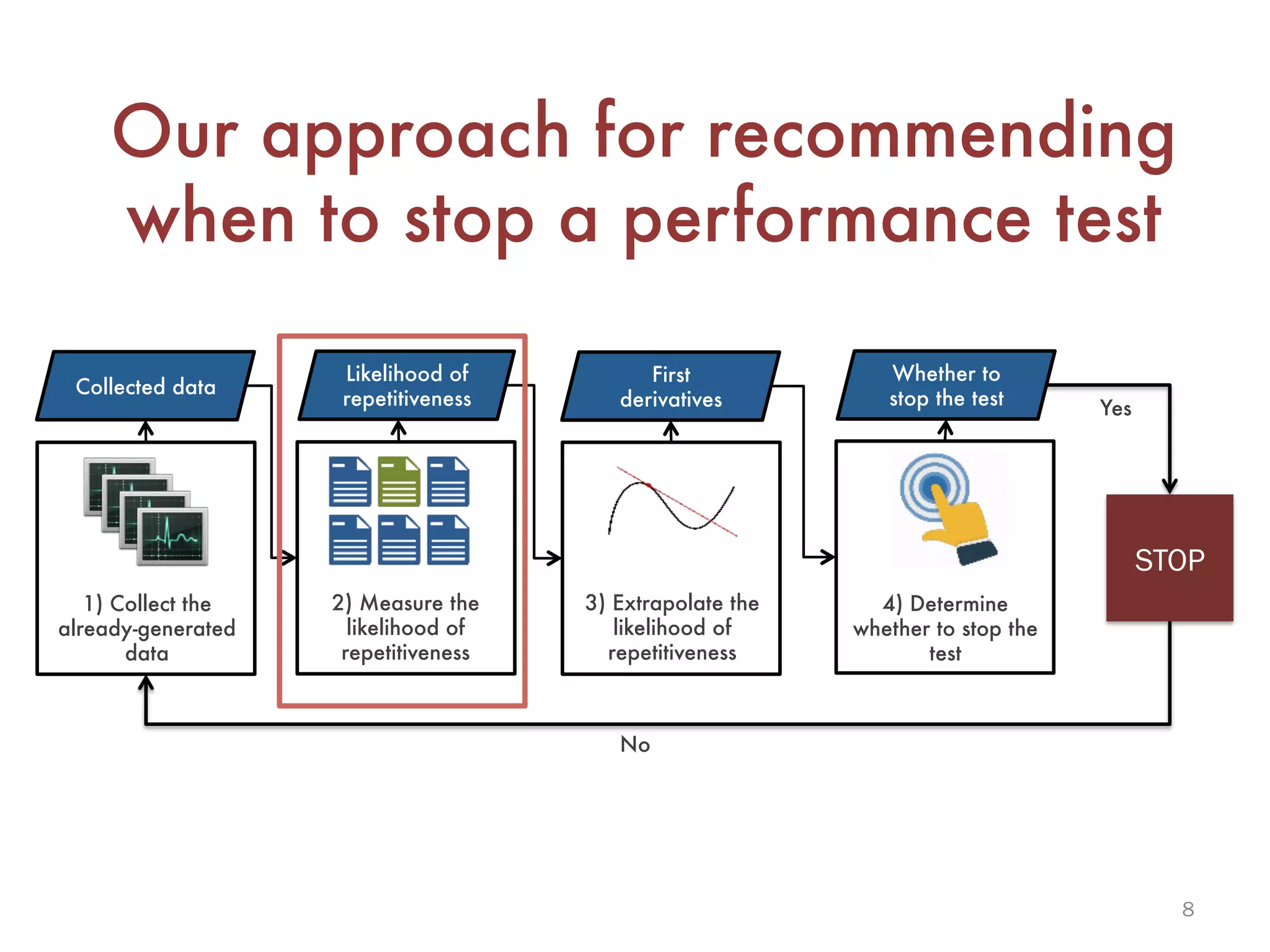

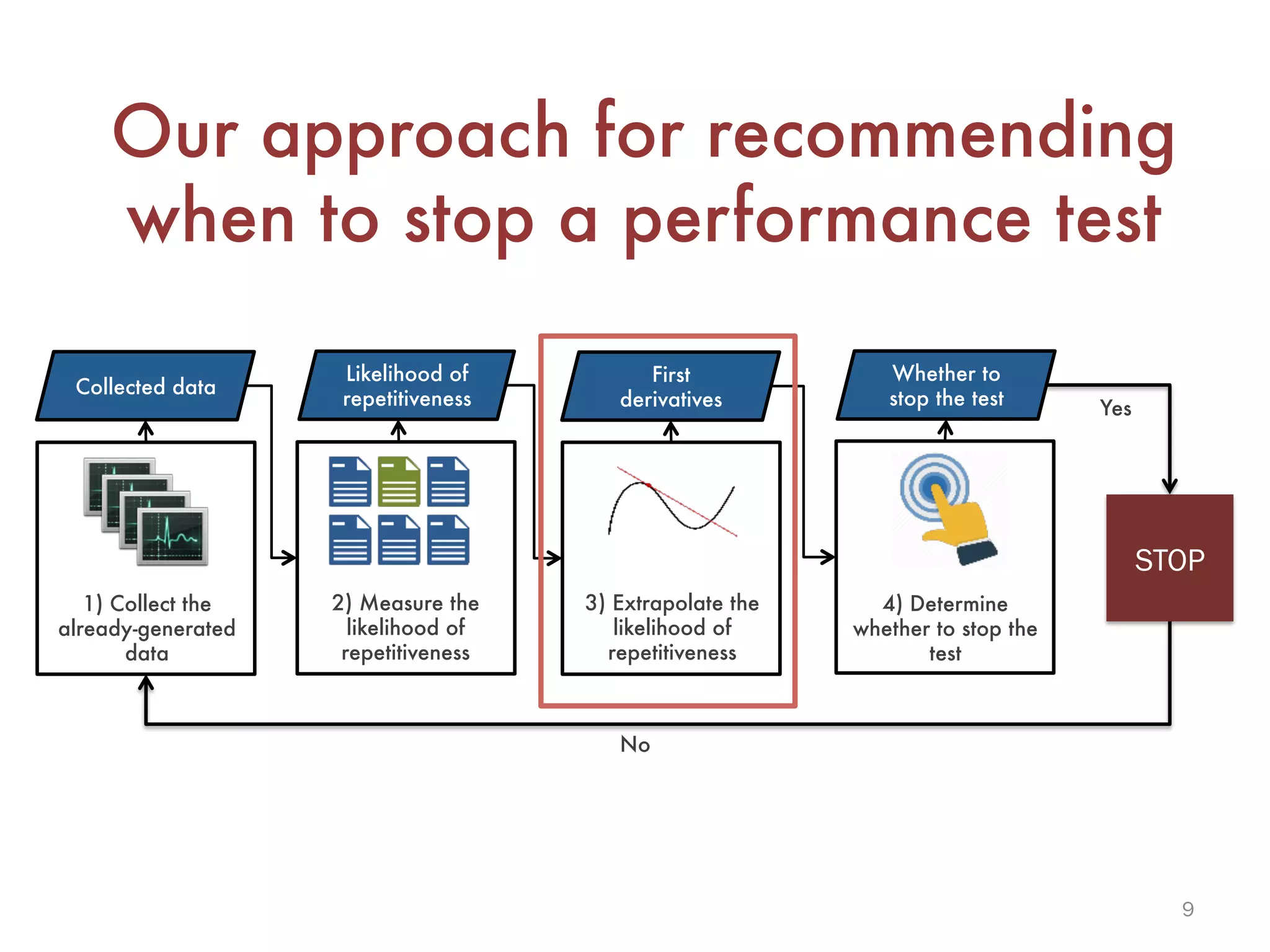

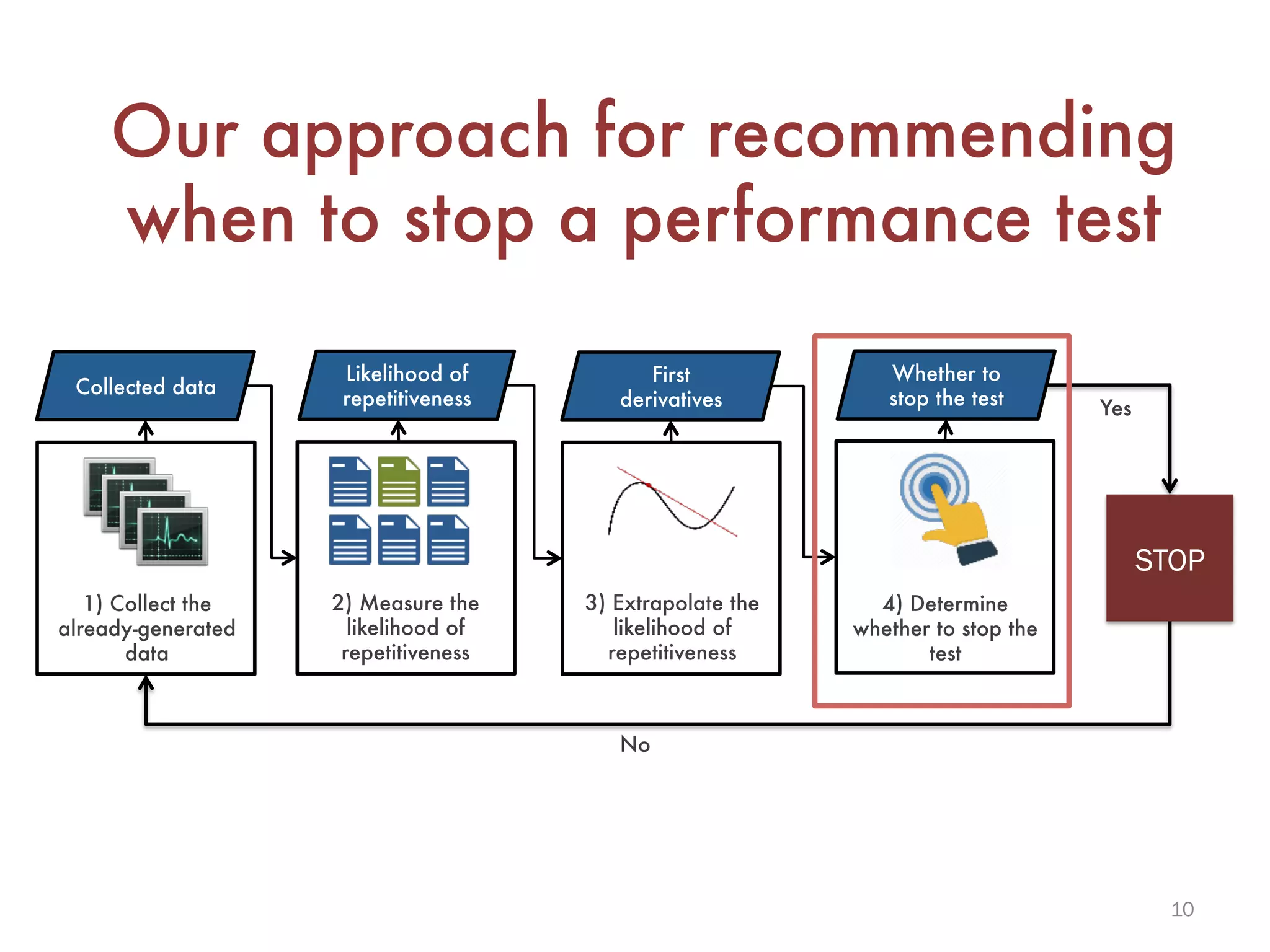

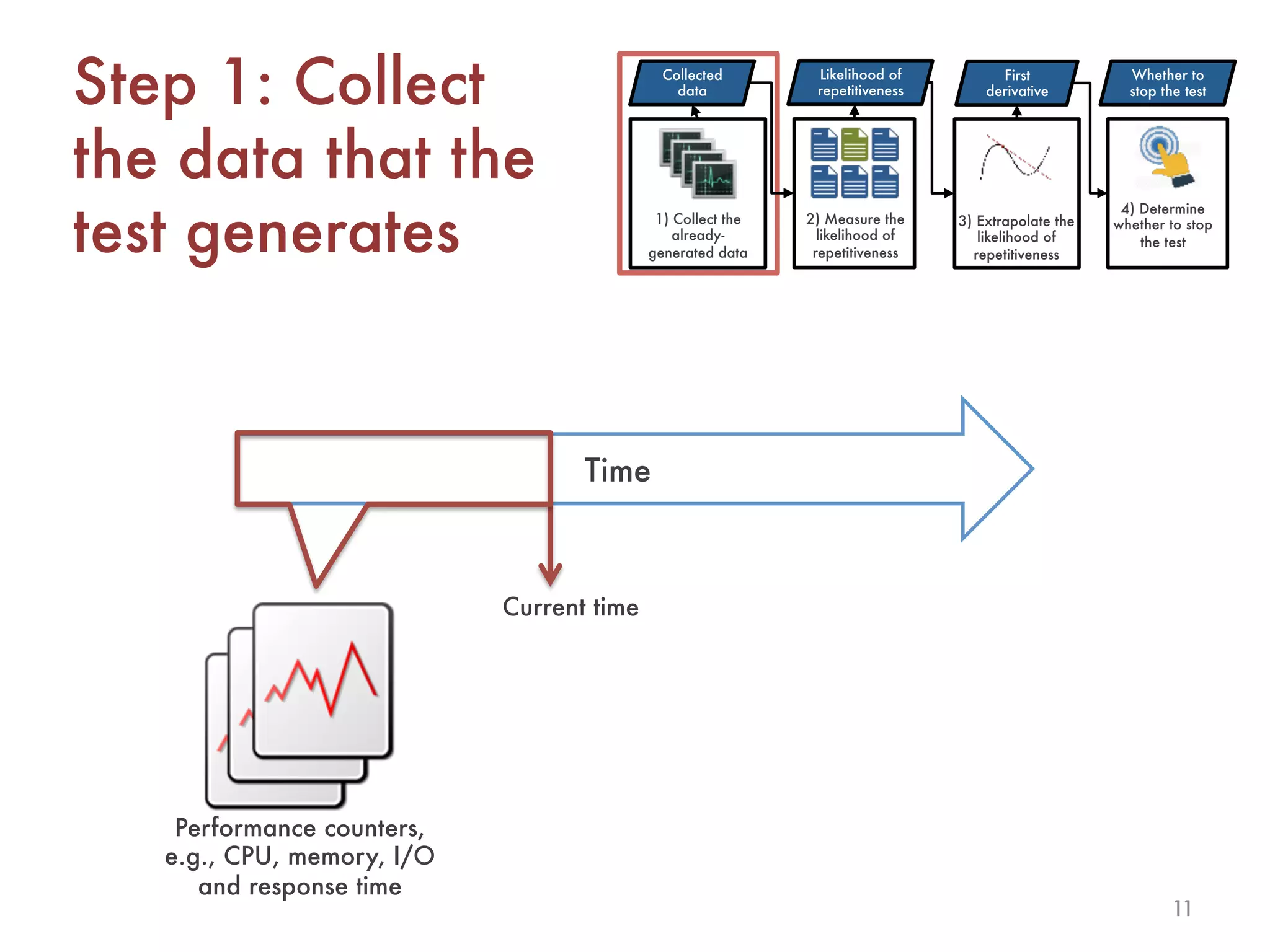

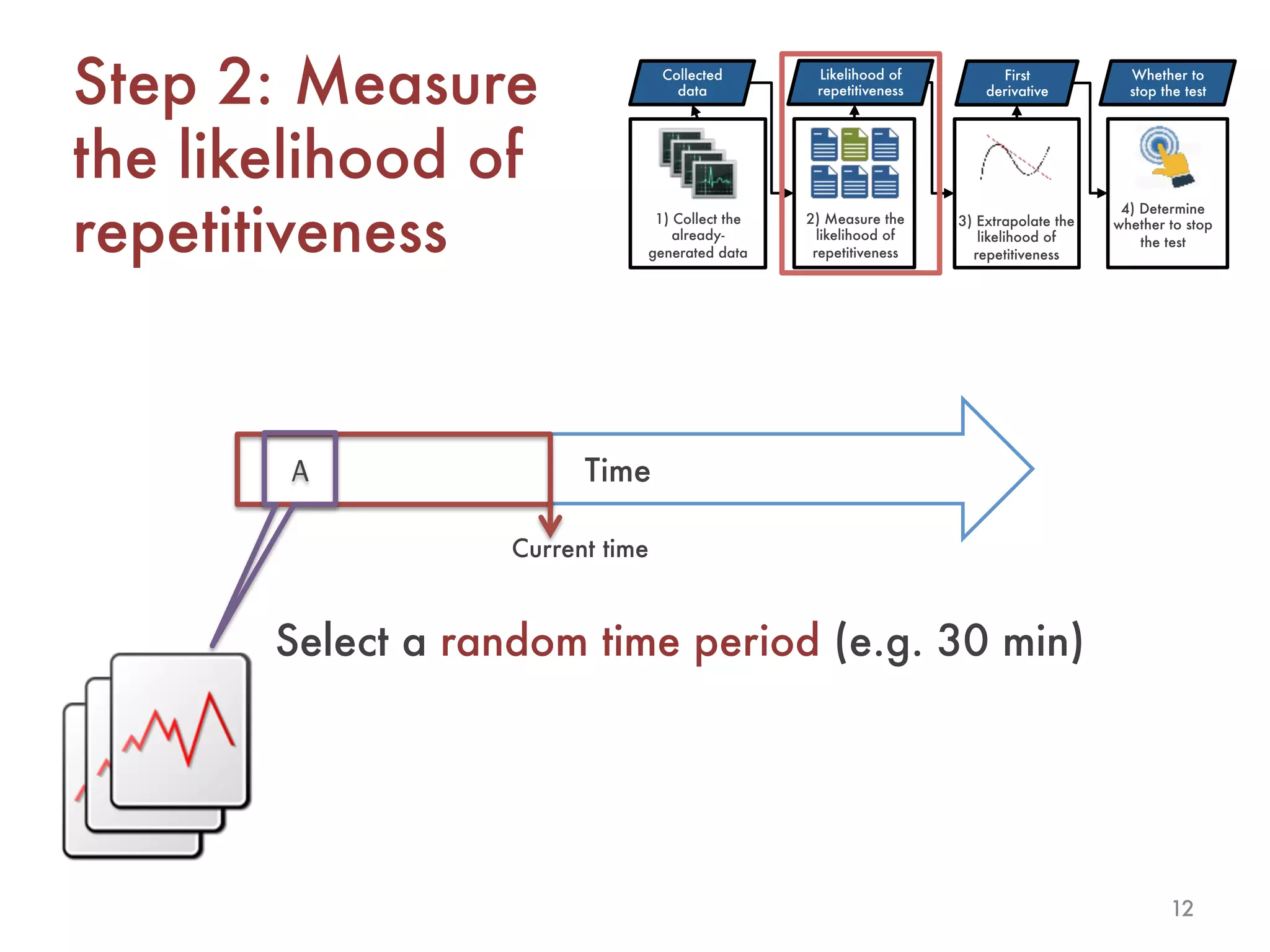

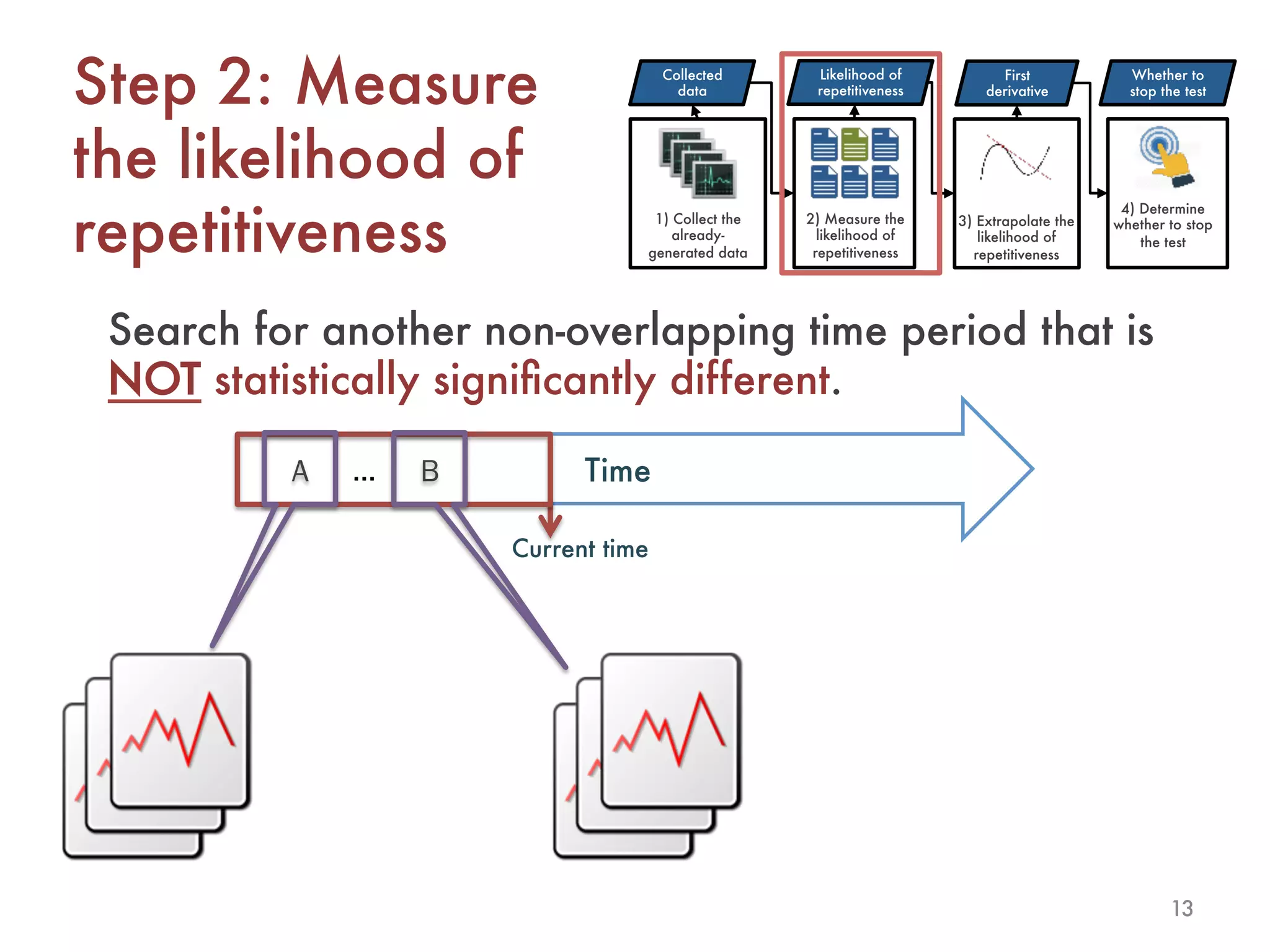

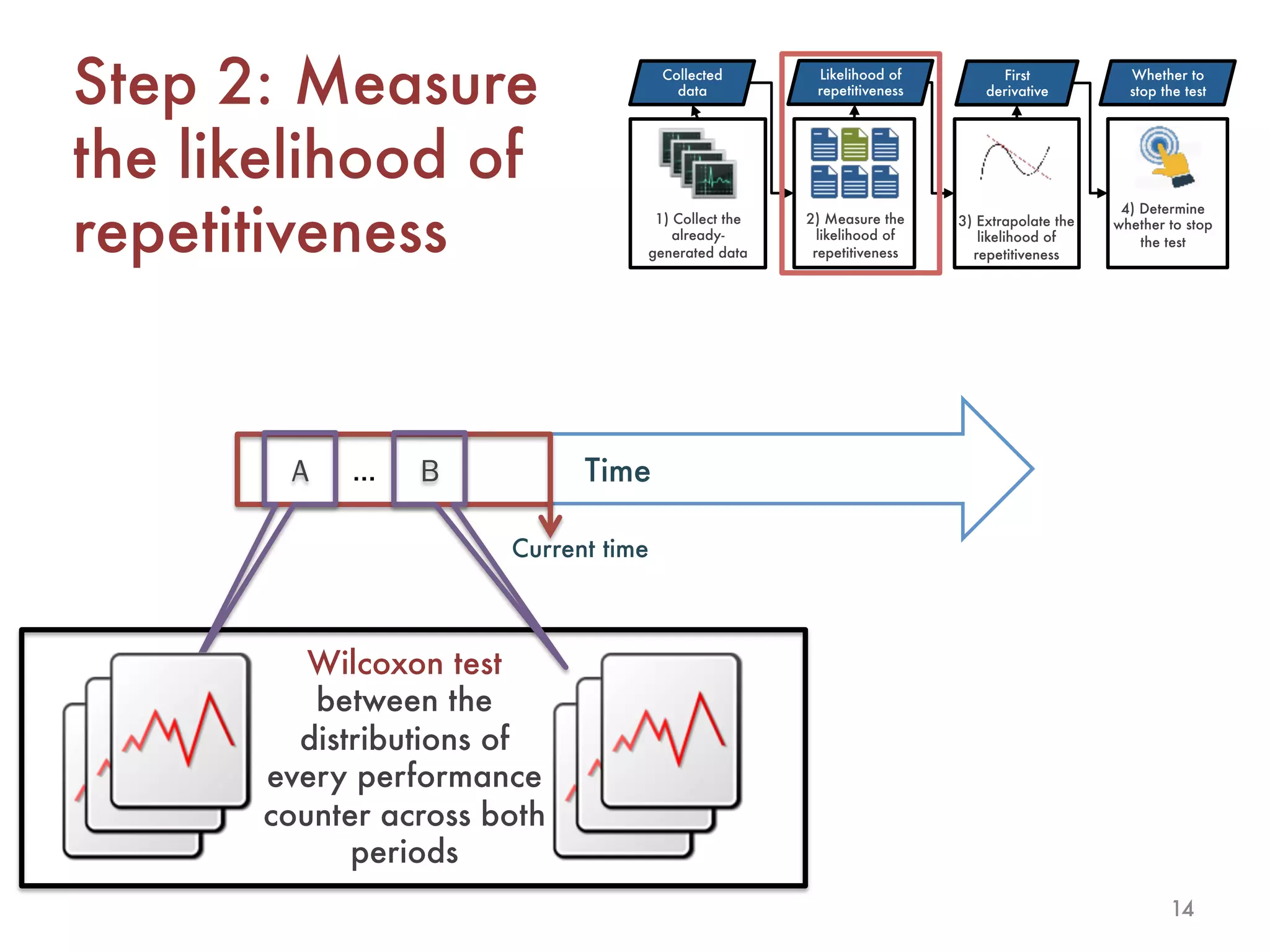

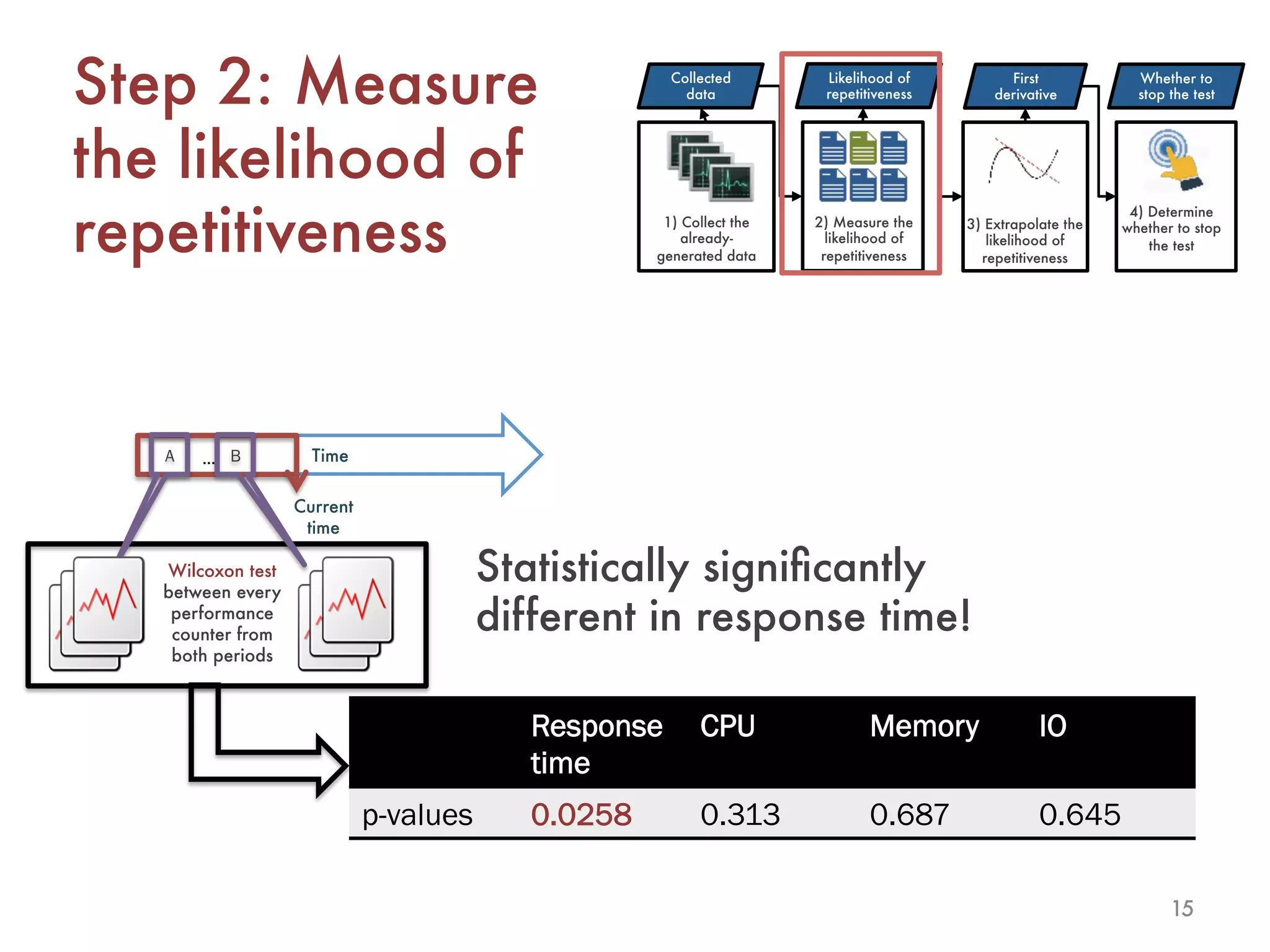

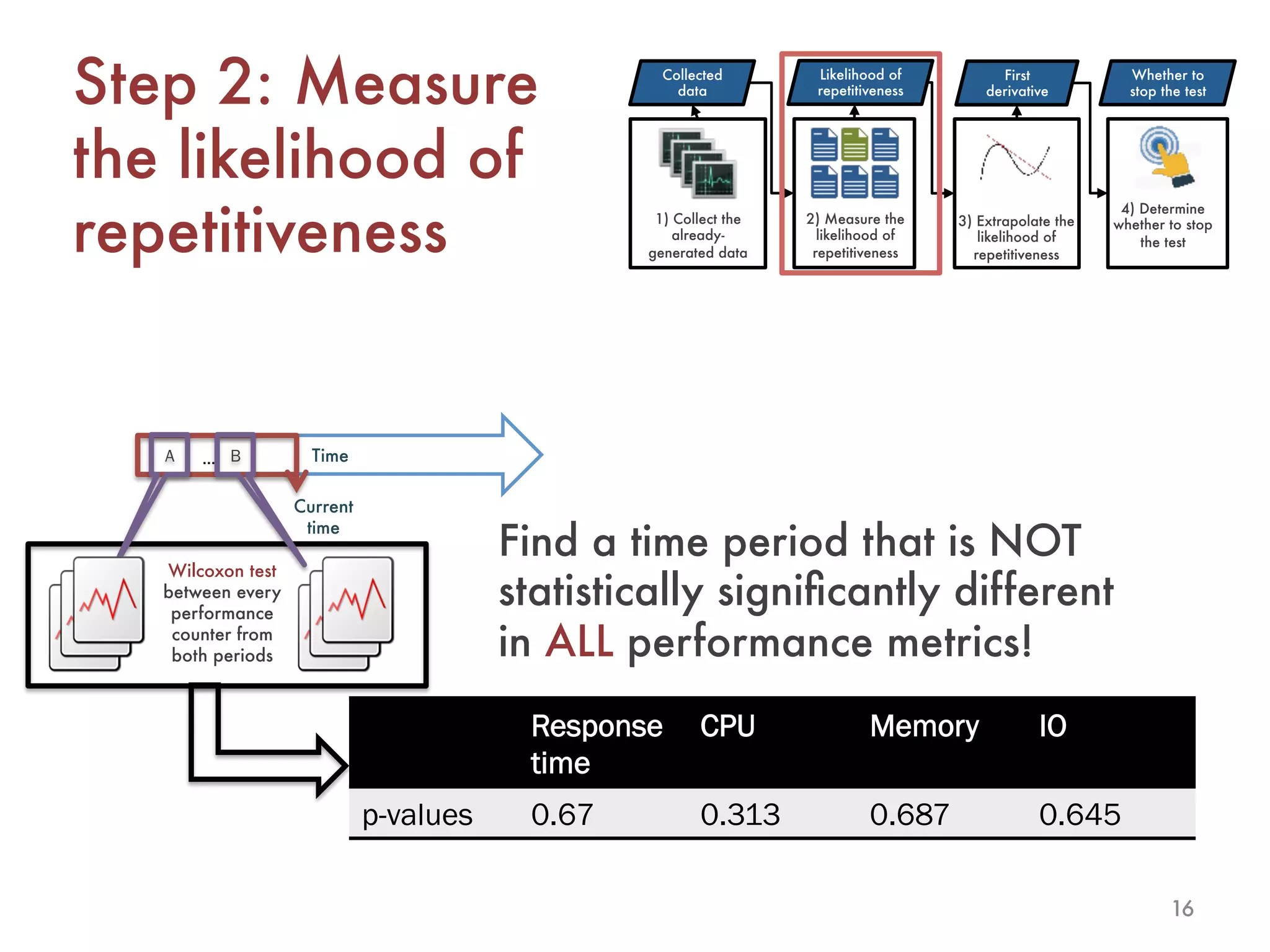

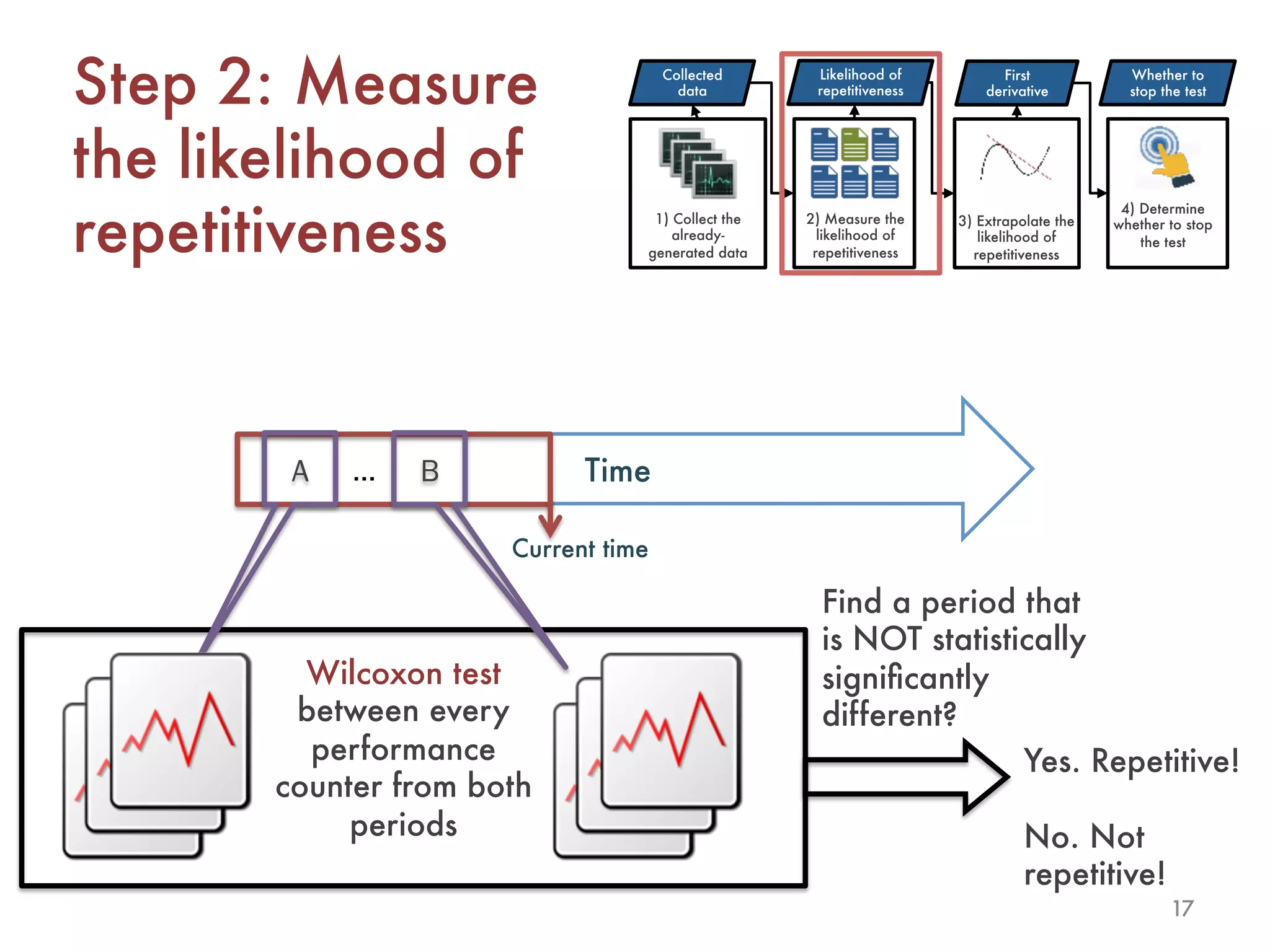

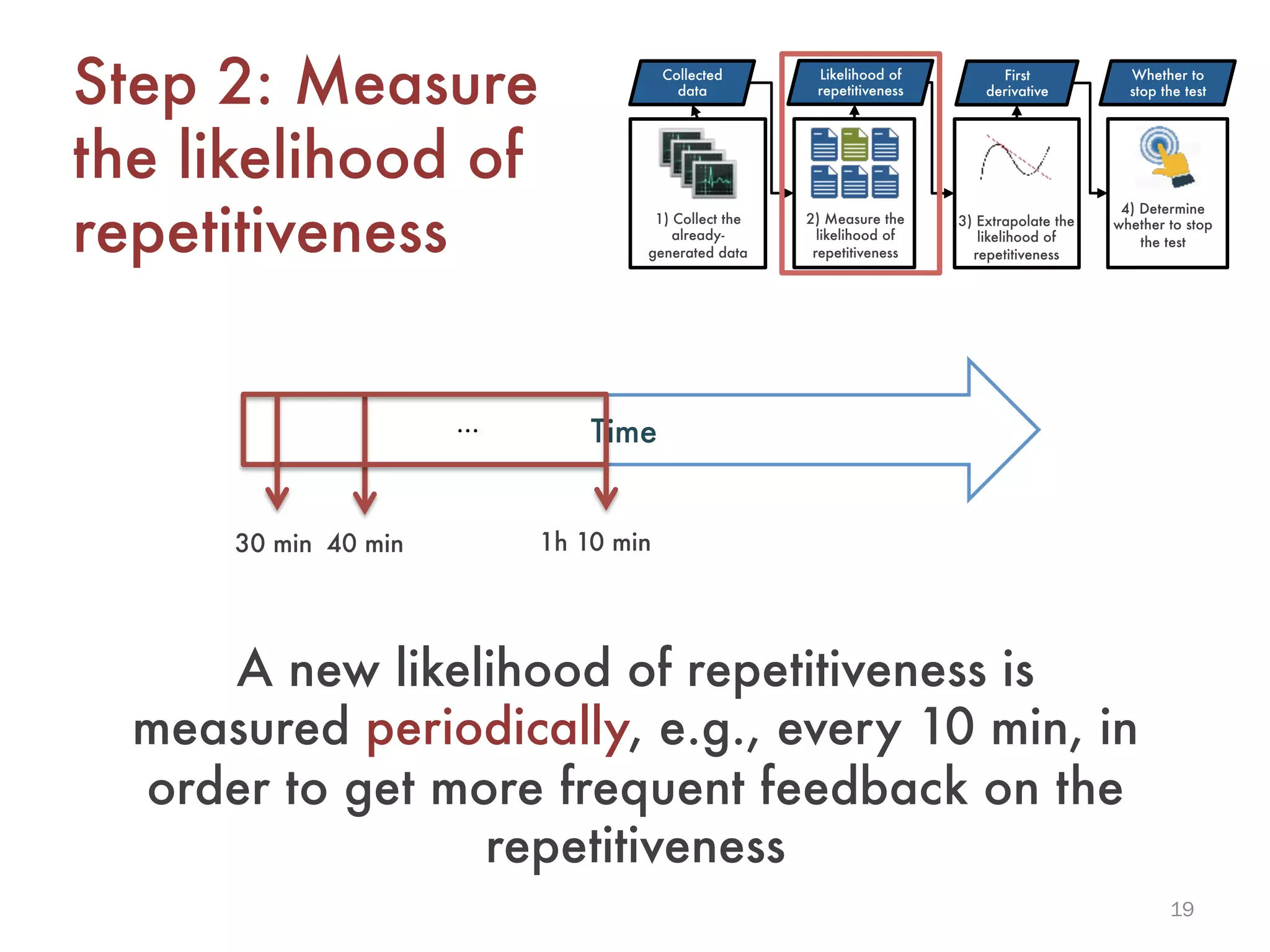

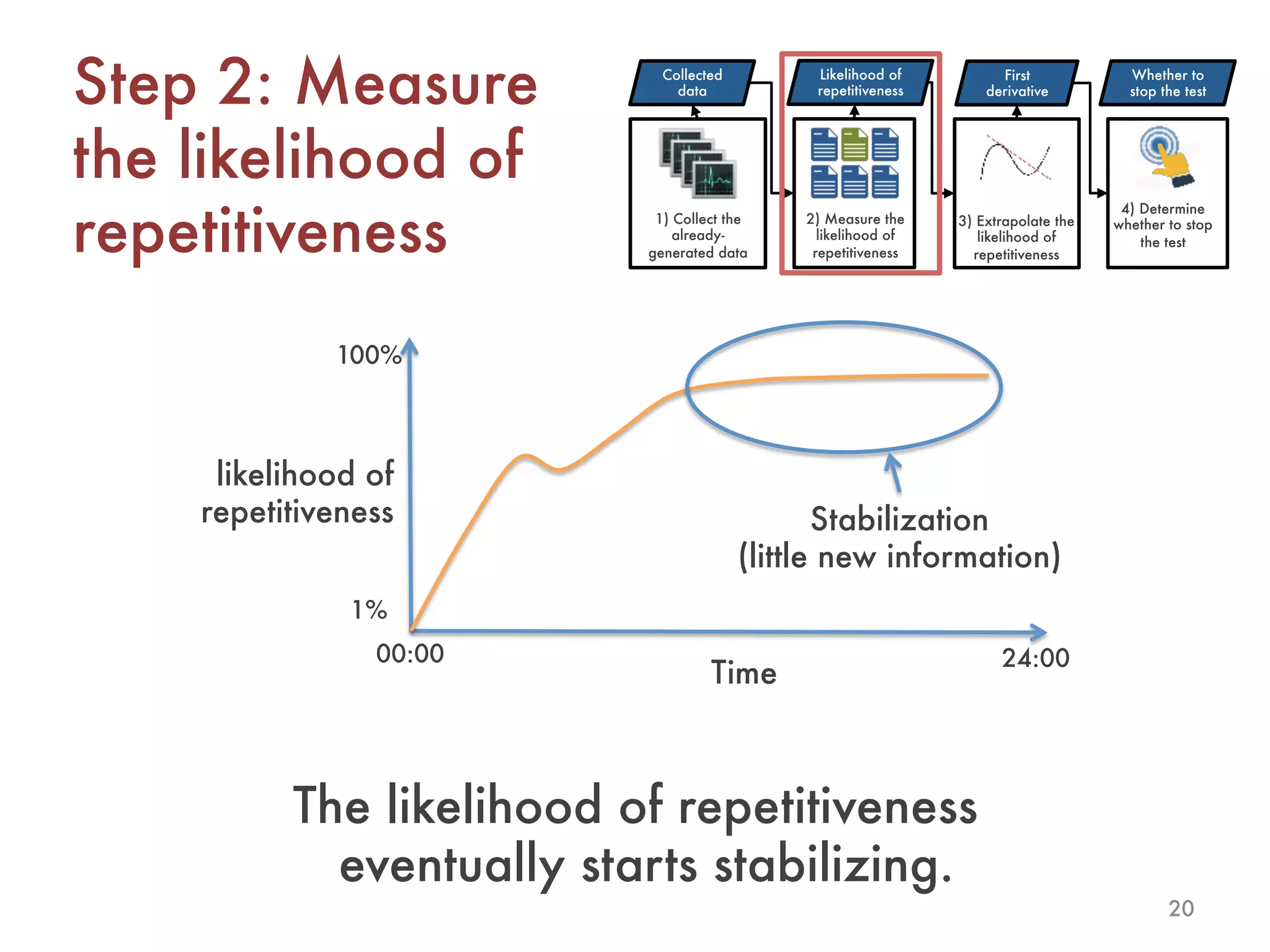

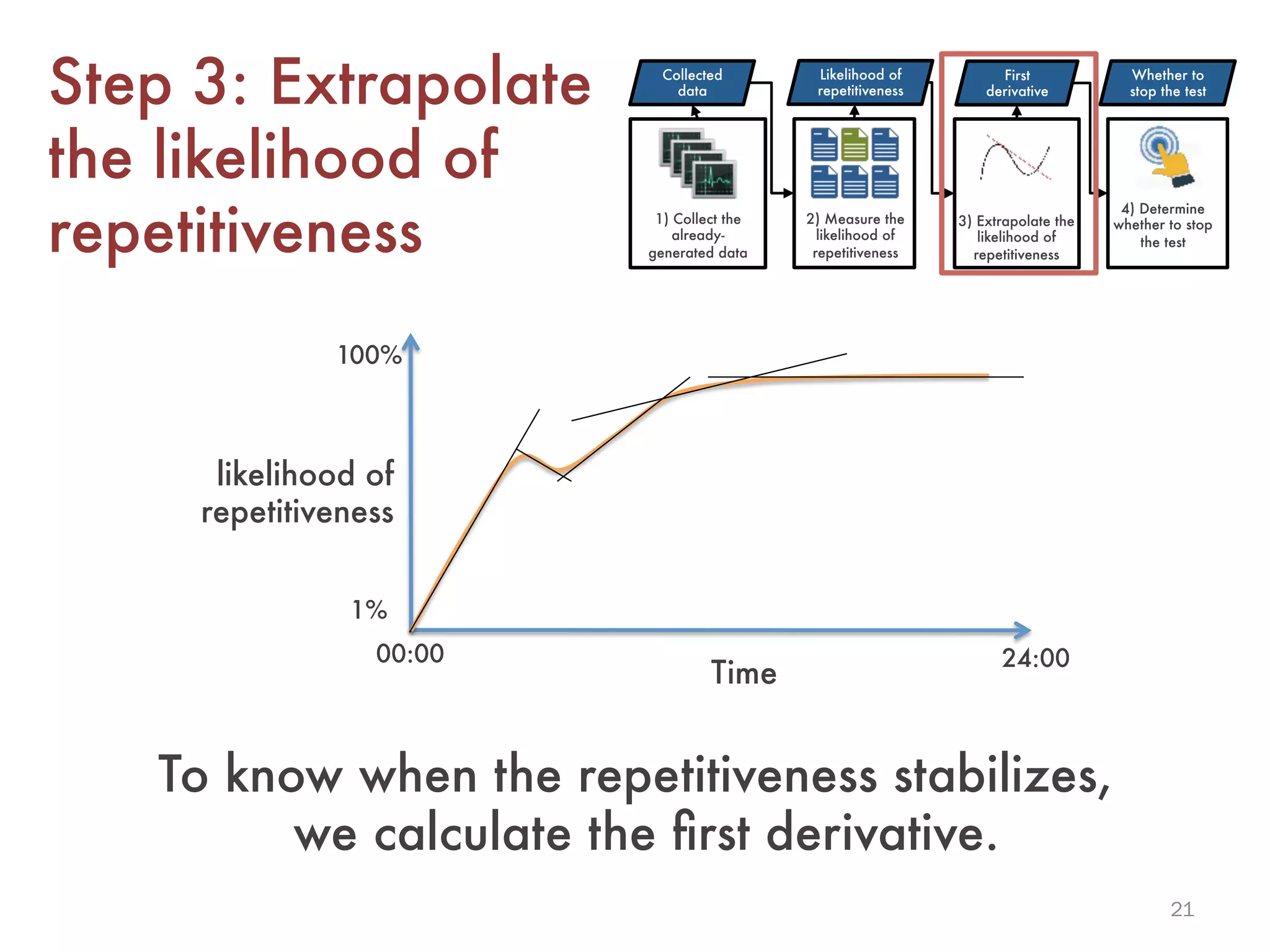

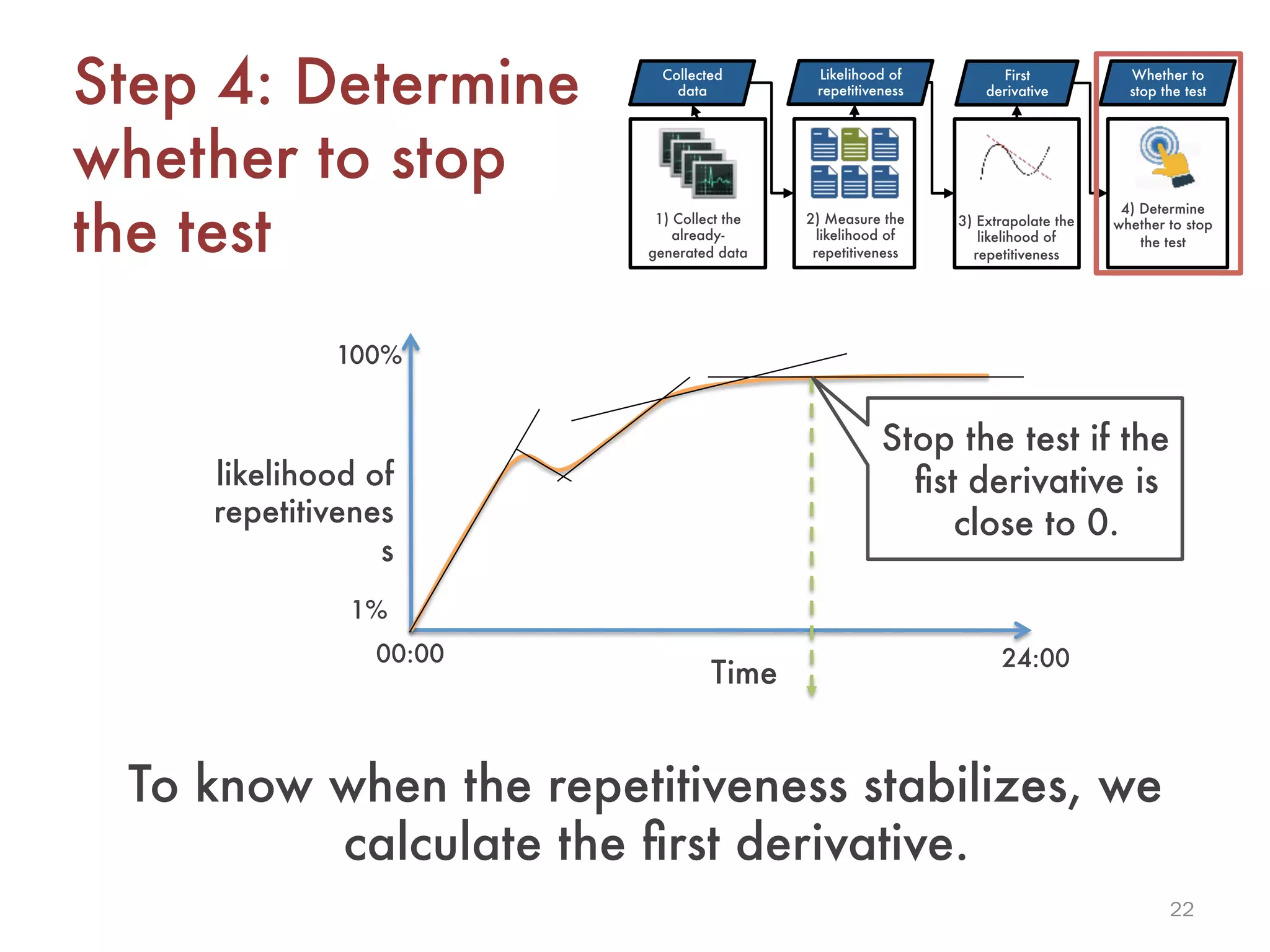

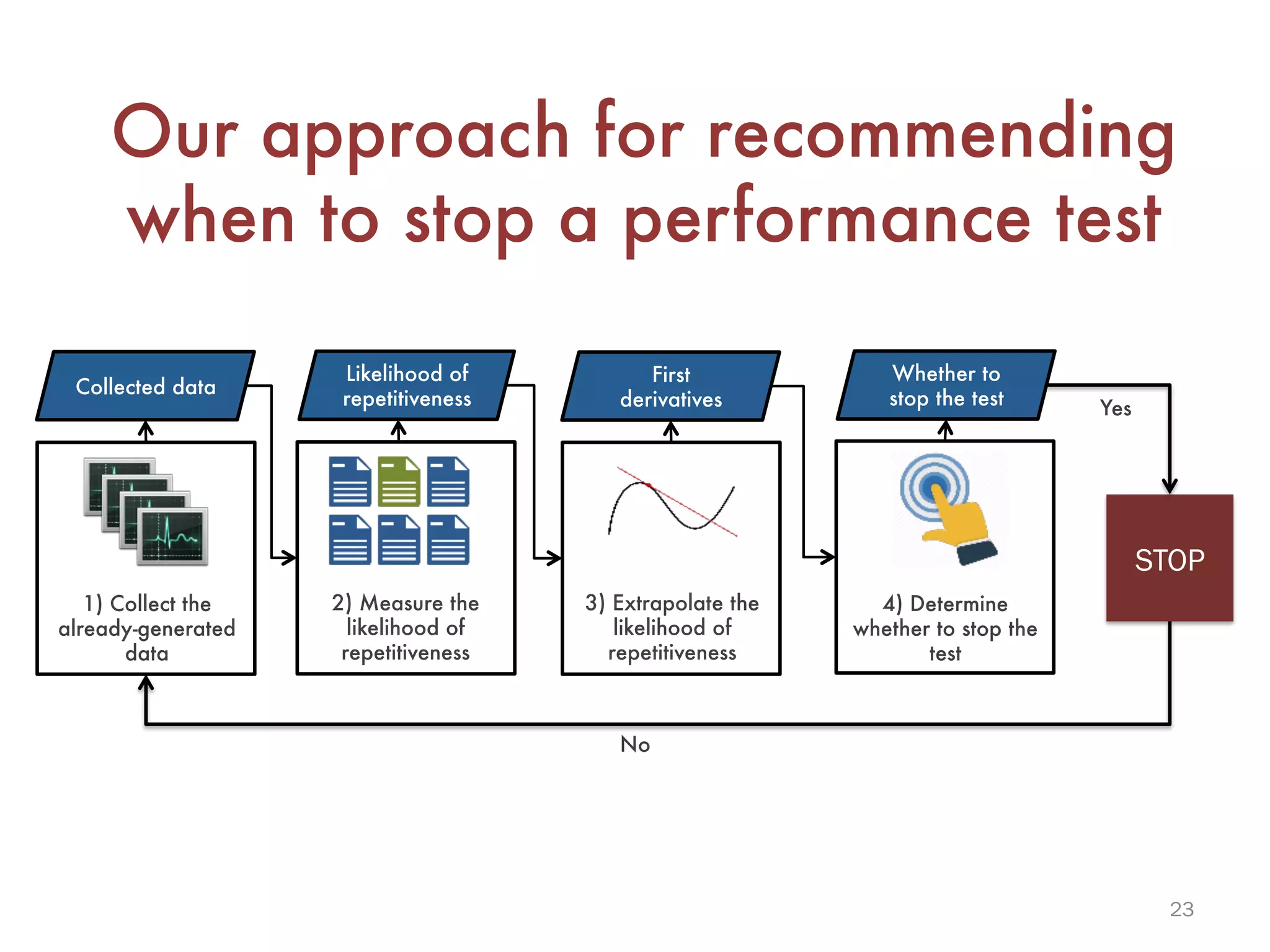

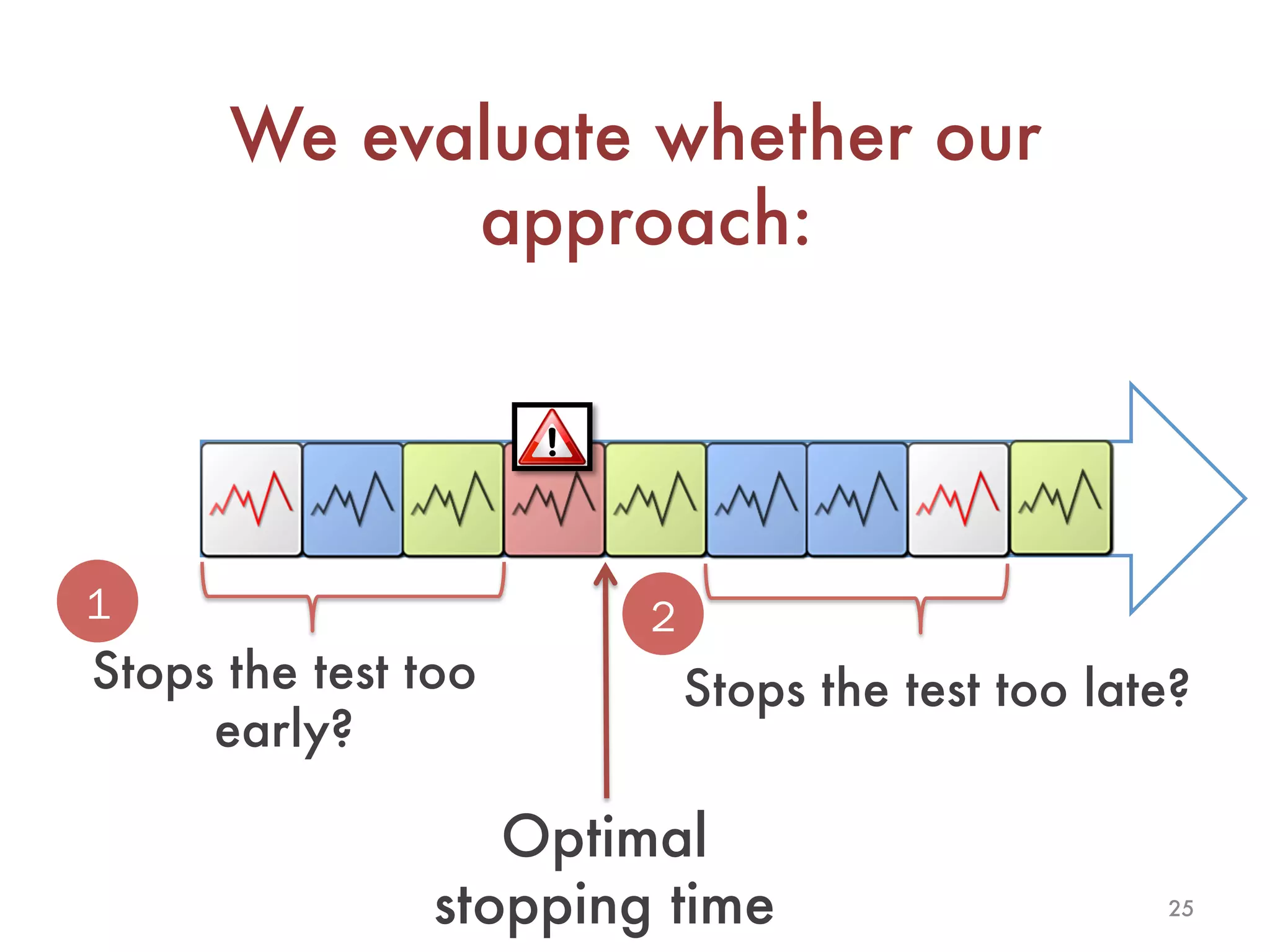

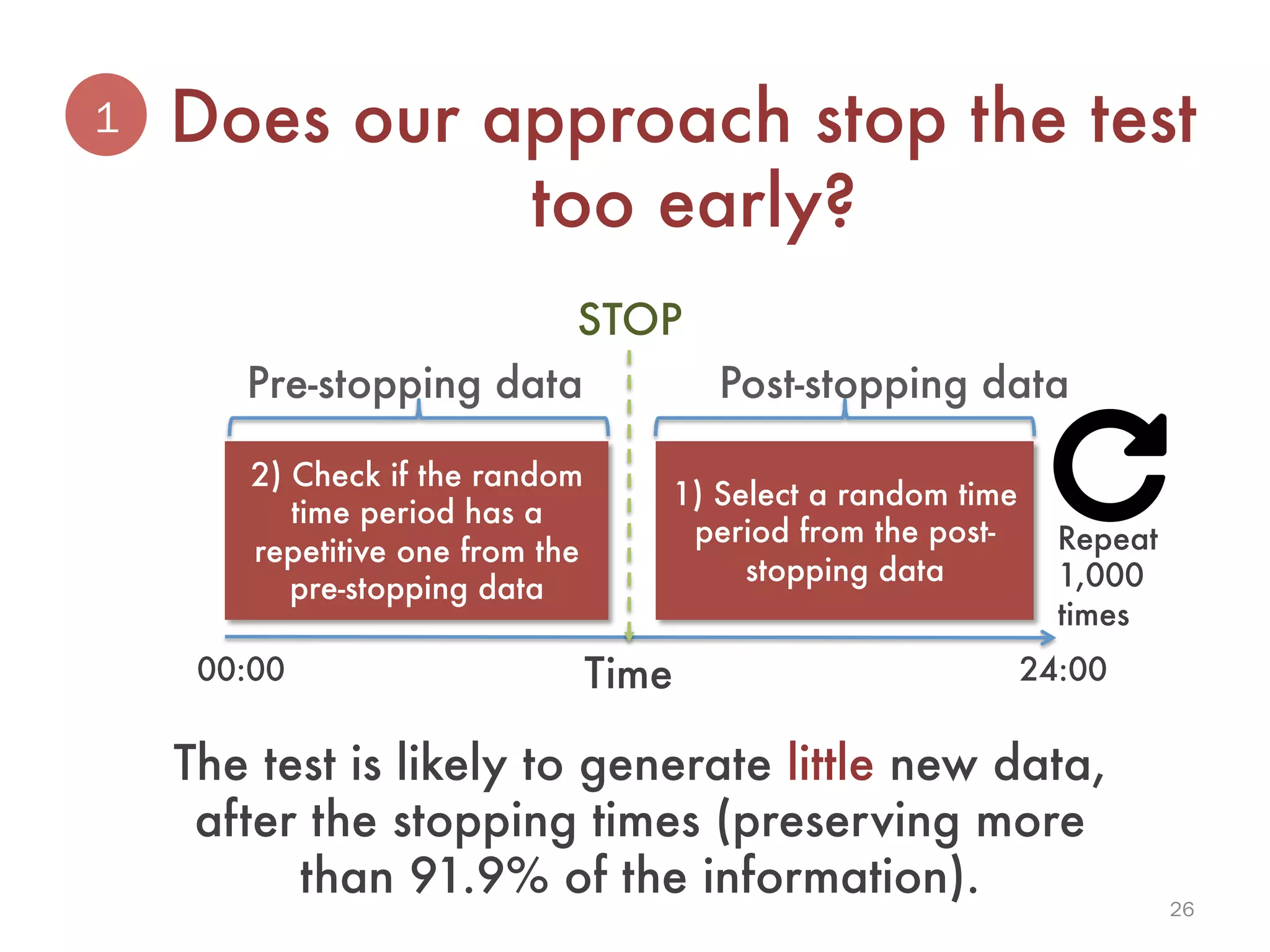

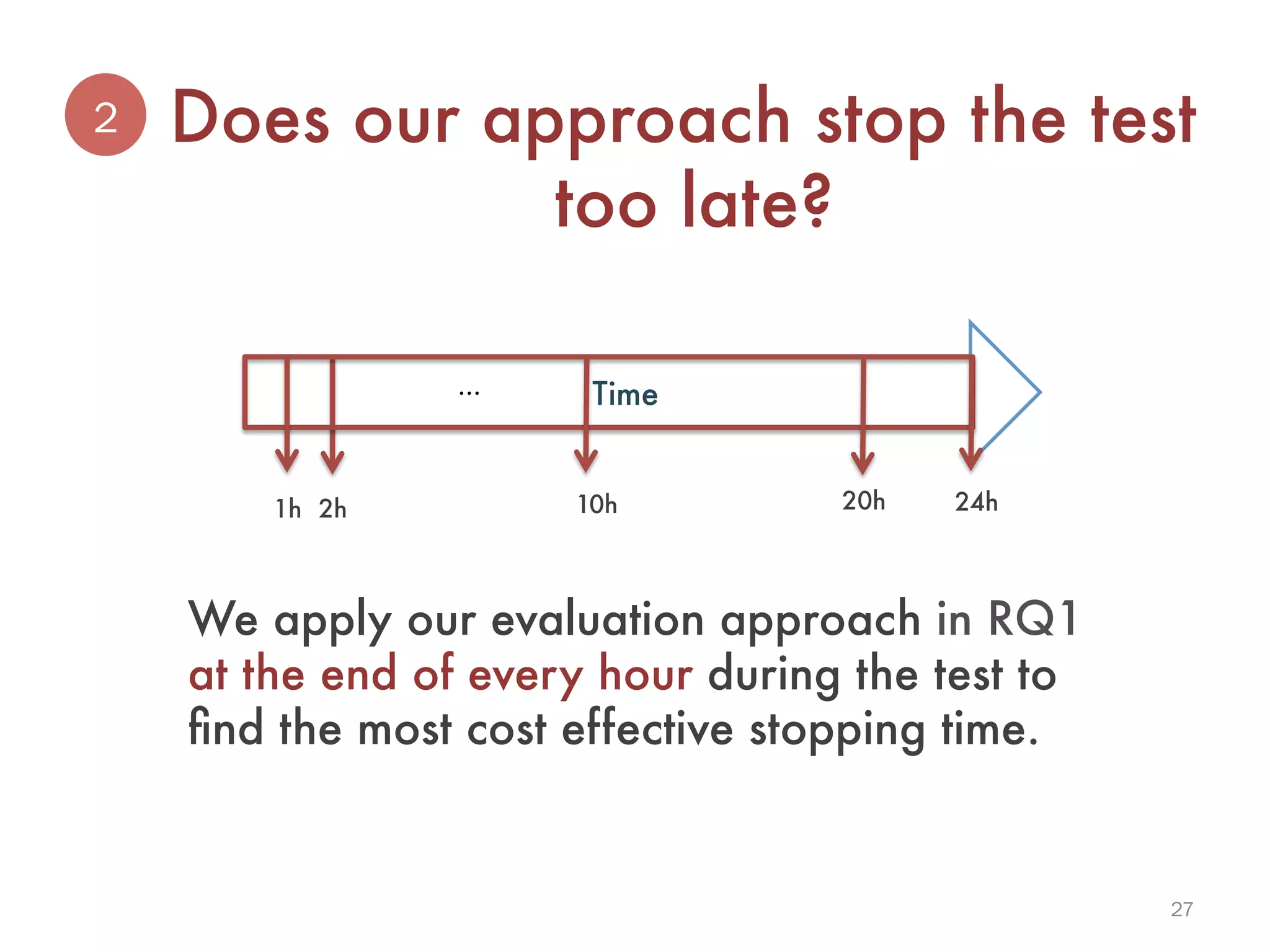

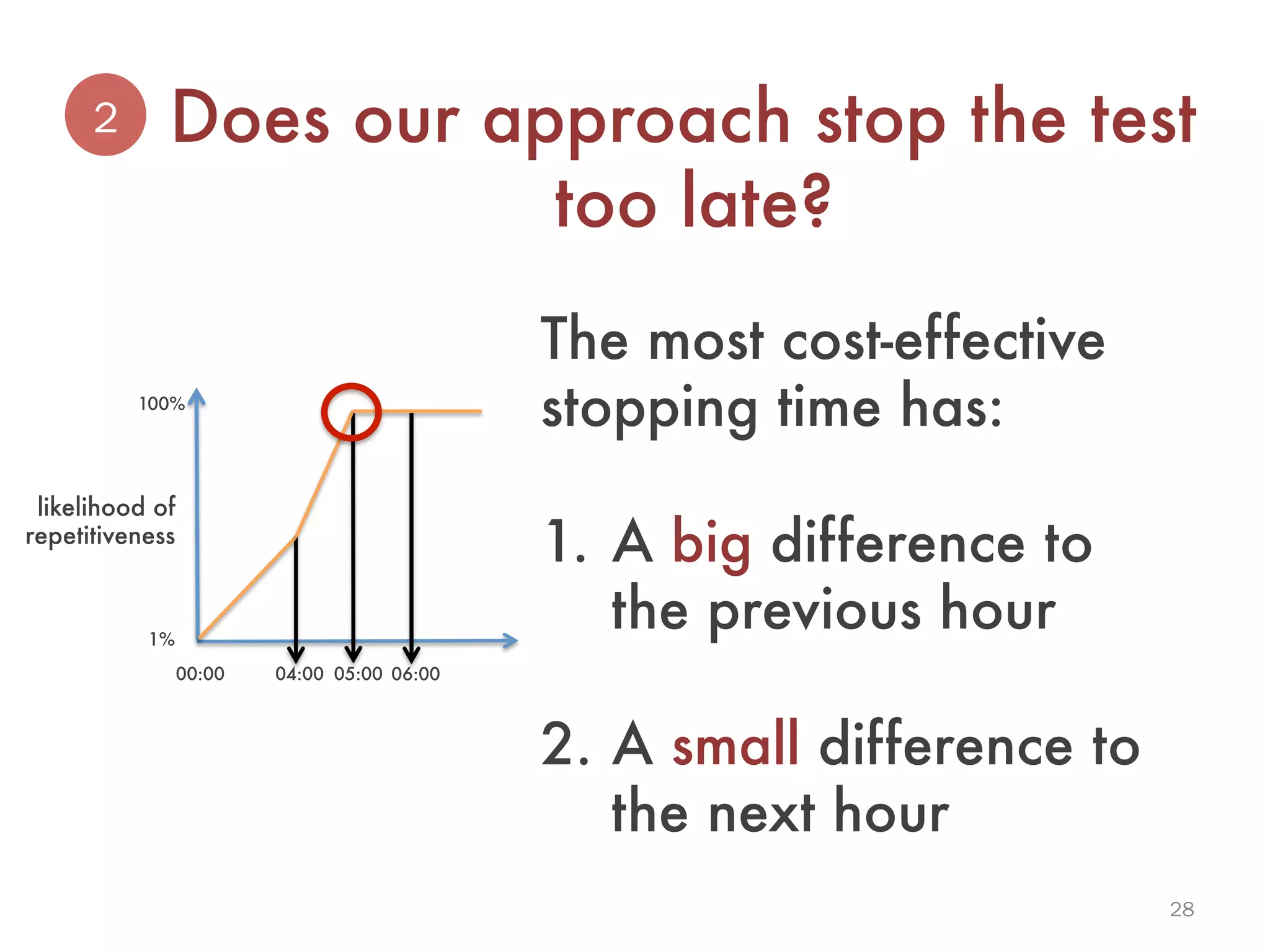

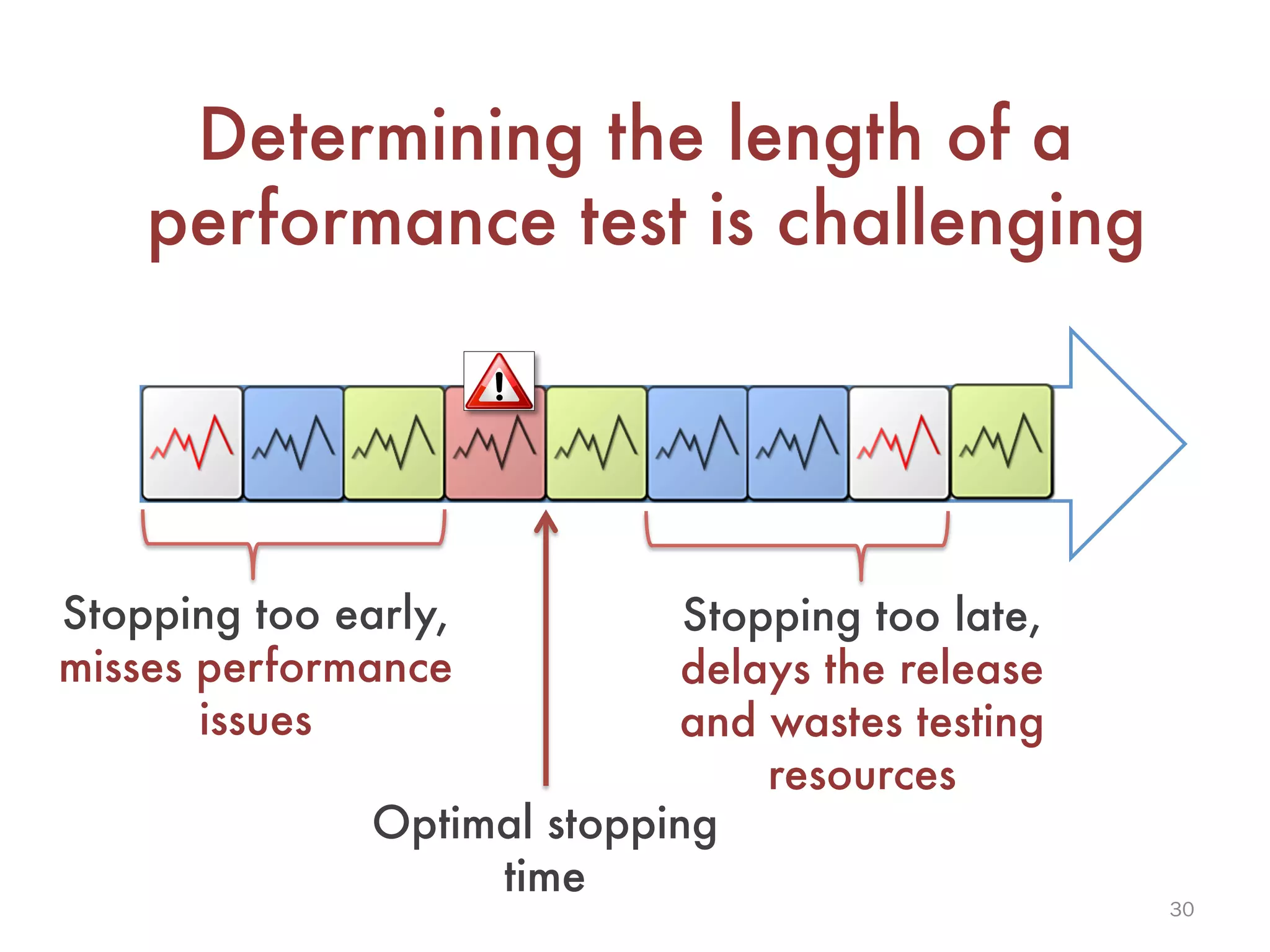

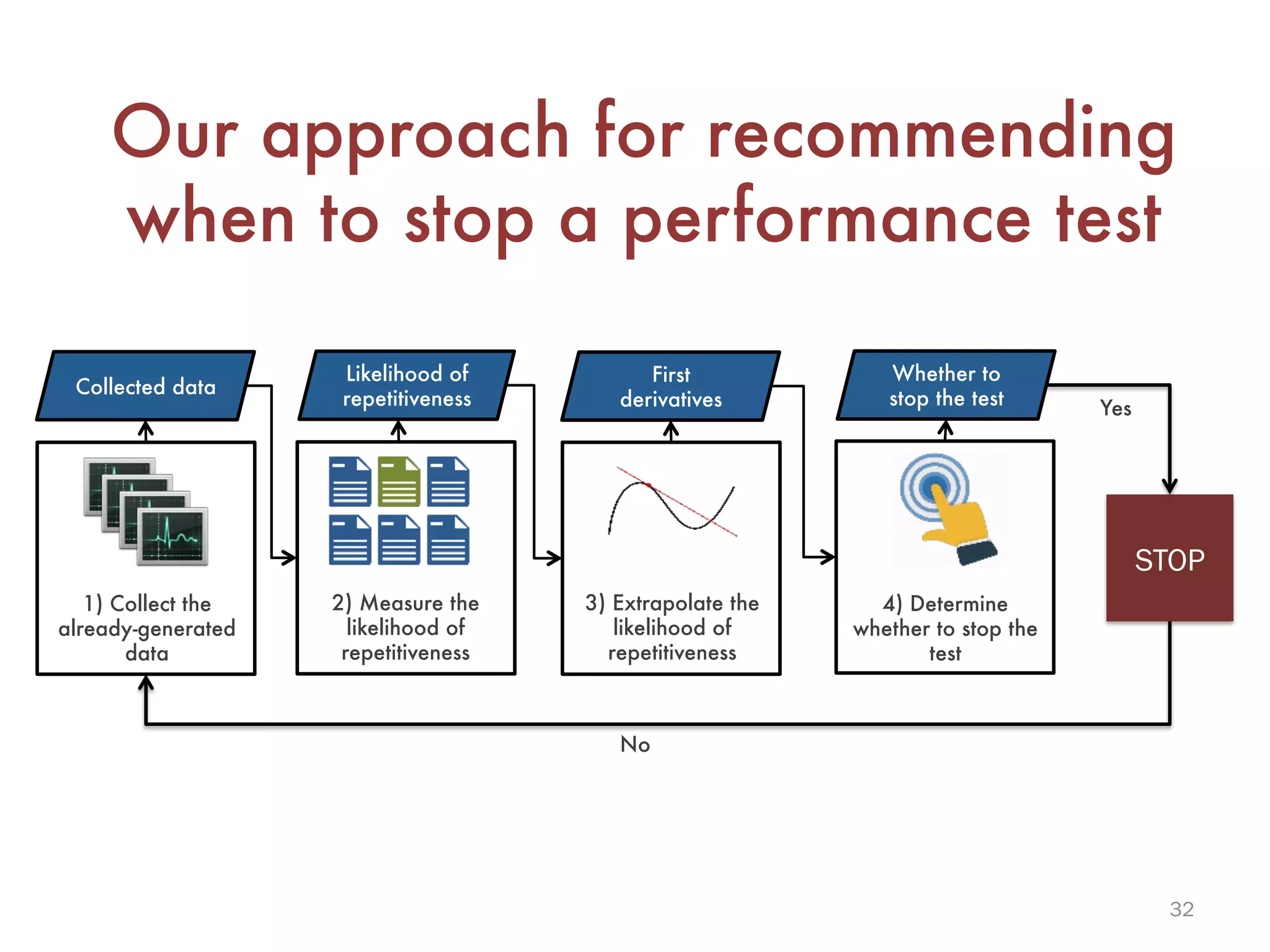

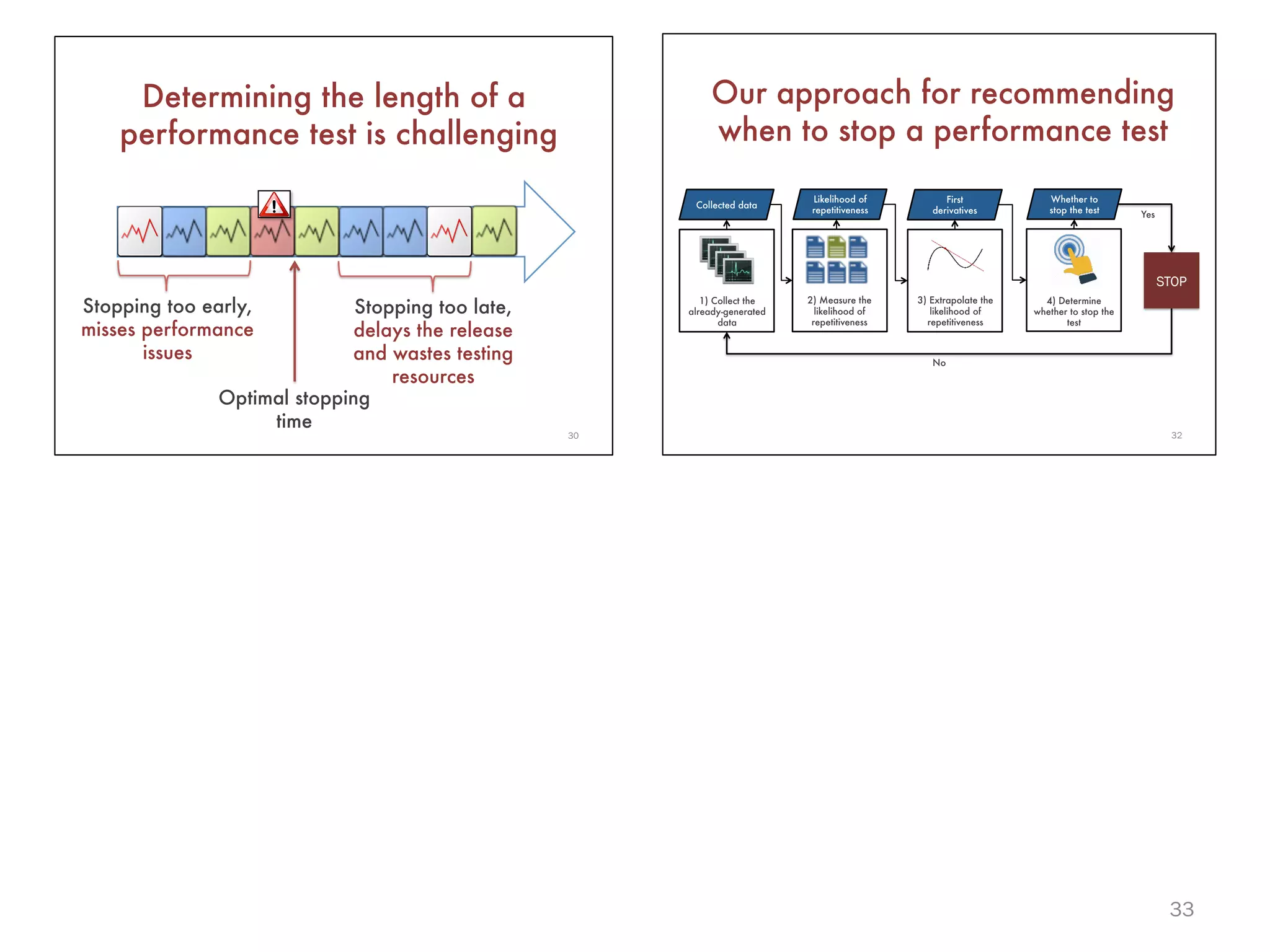

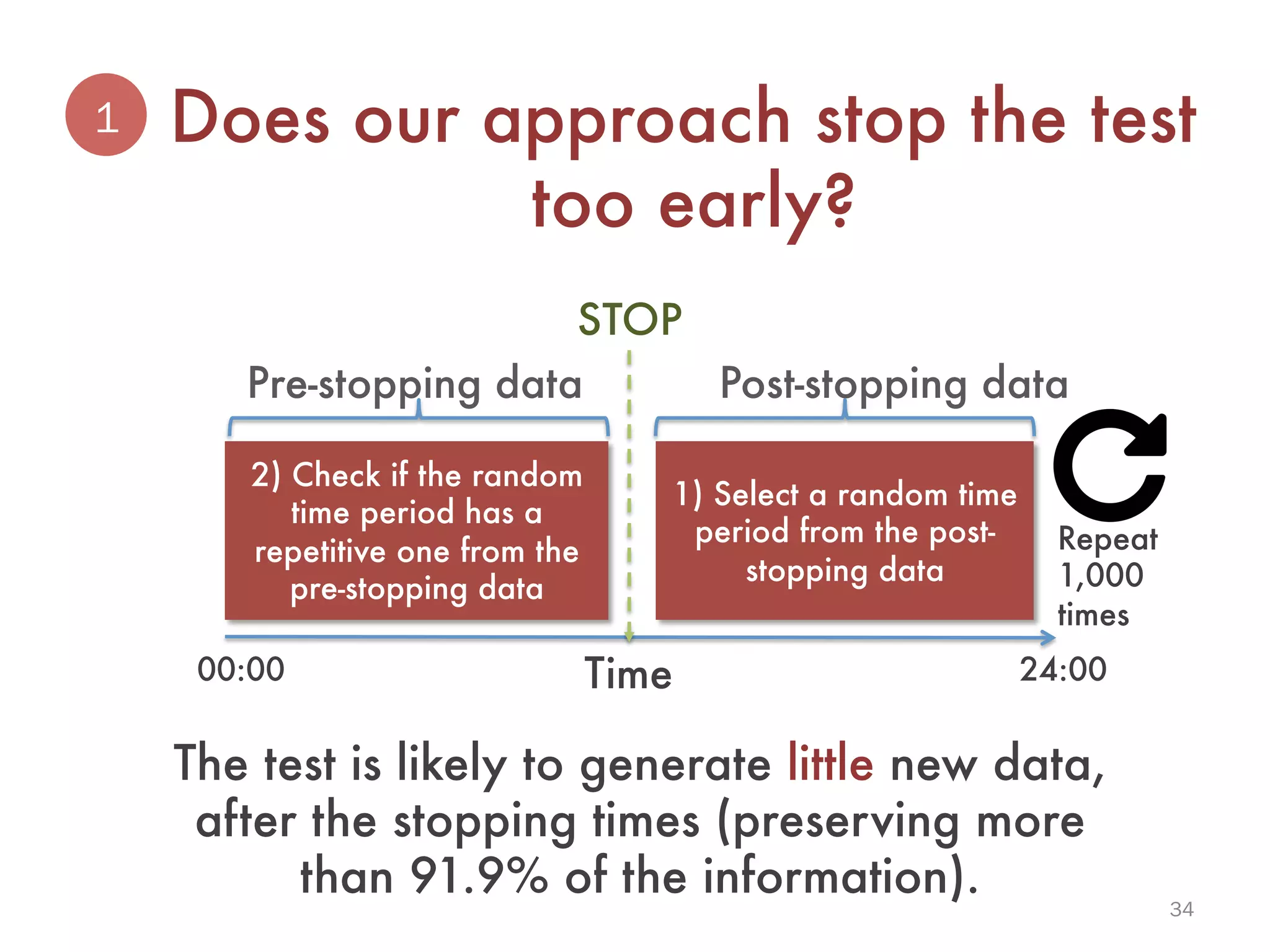

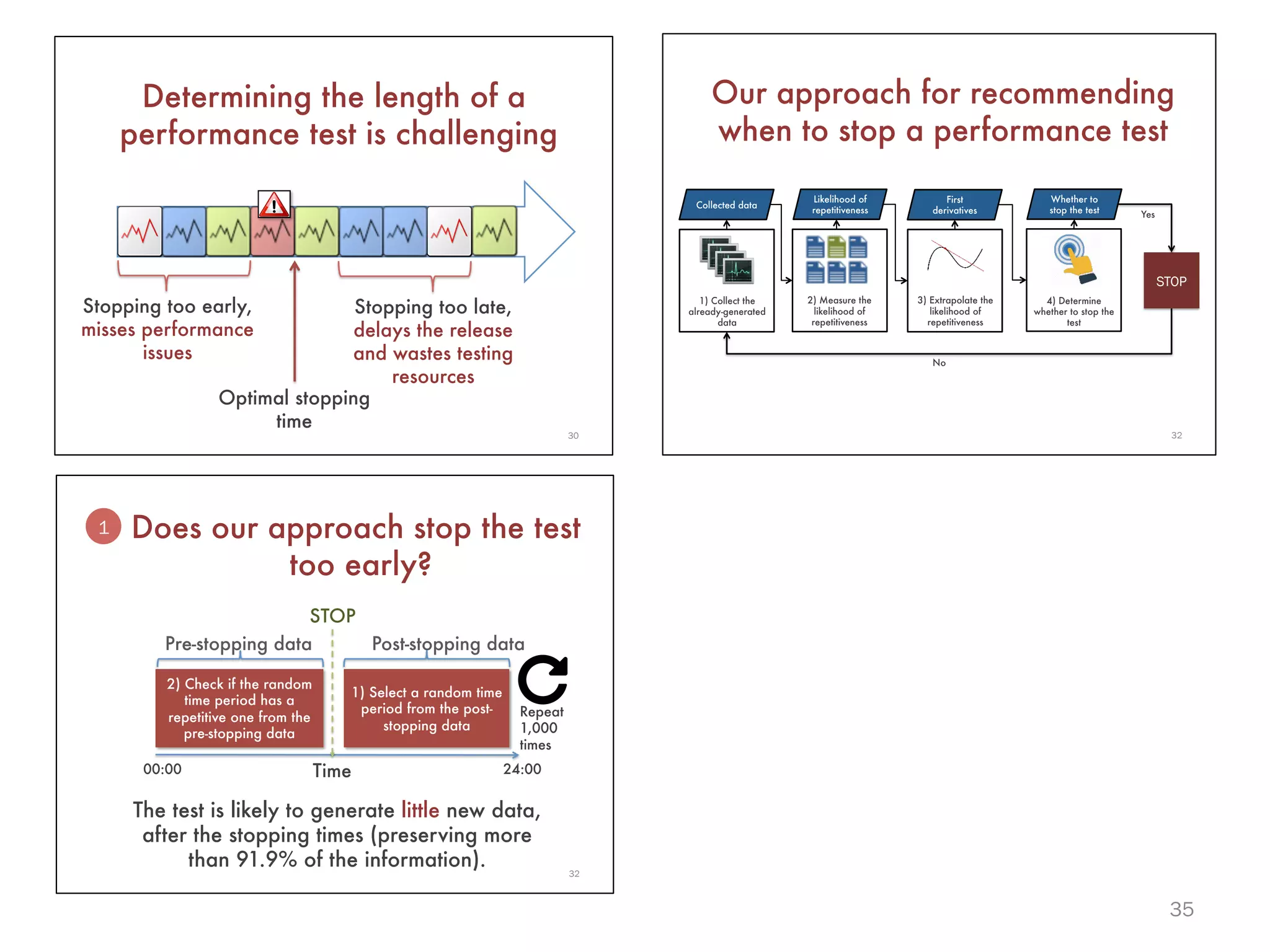

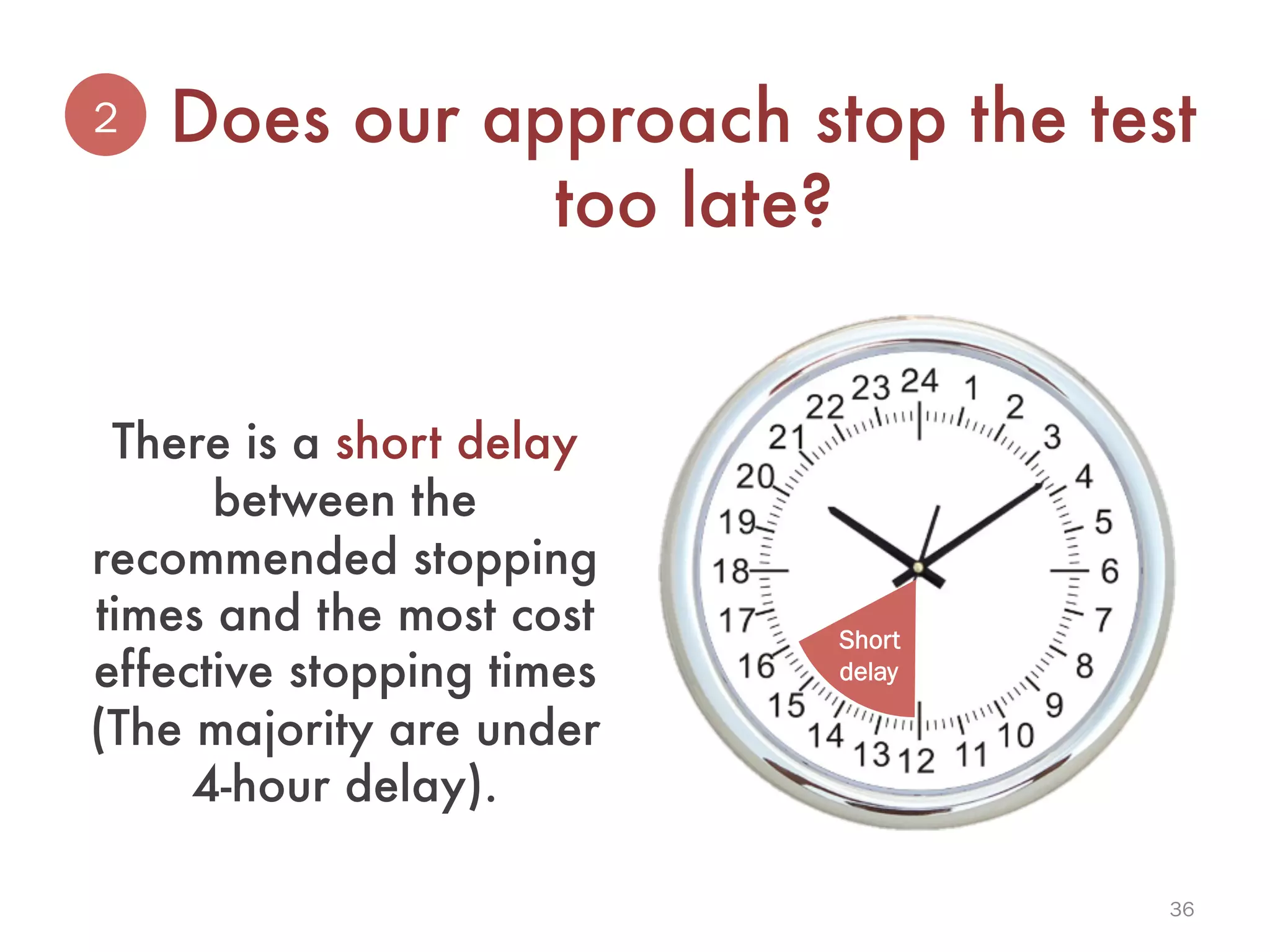

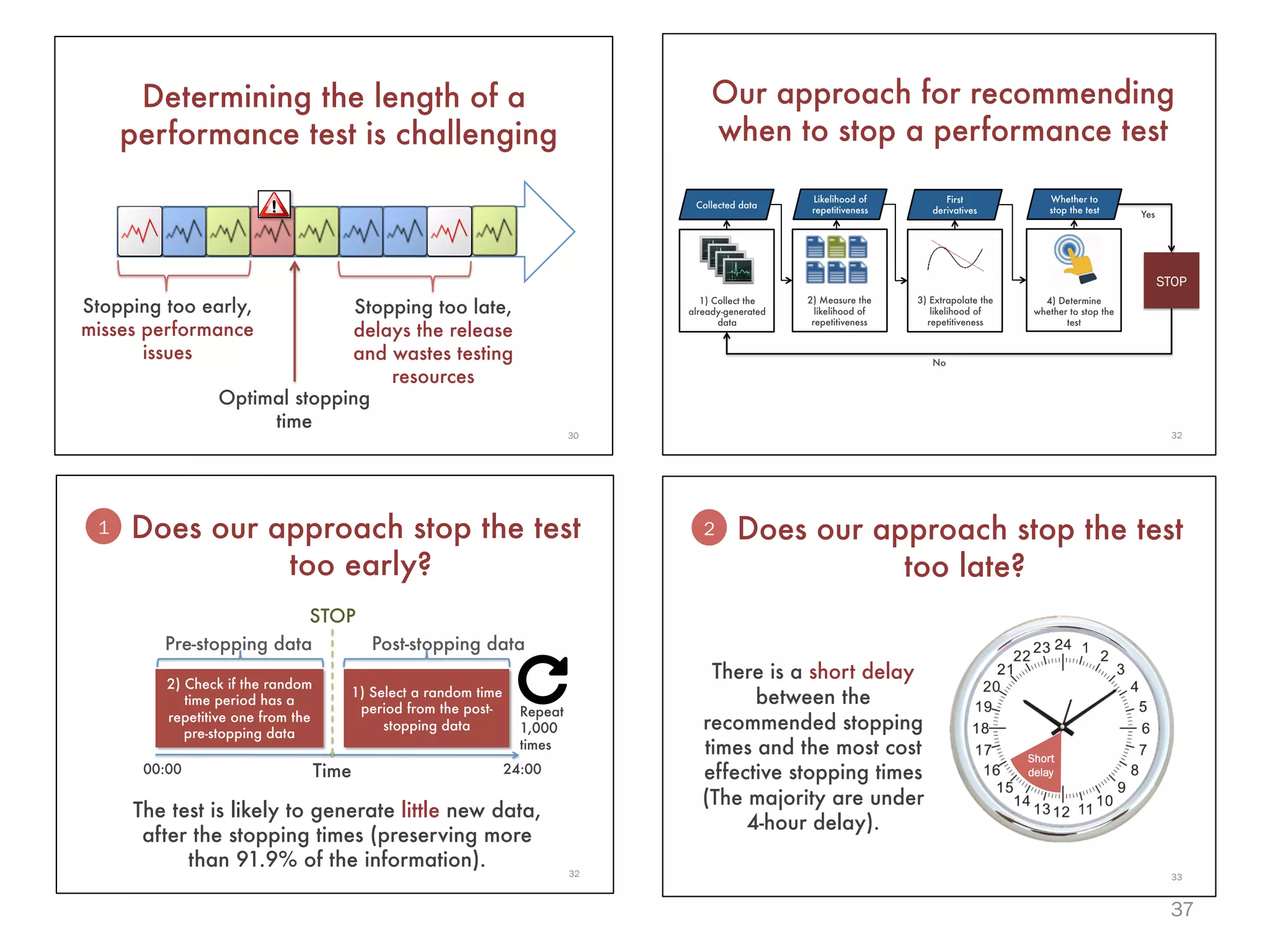

The document presents an automated method for deciding when to stop a performance test of an ultra-large-scale system. Stopping too early risks missing critical performance issues, while stopping too late wastes resources. The approach collects data, measures how likely that data is to be repetitive, and applies statistical methods to decide when to stop the test. The evaluation indicates that the method balances timely conclusions against cost: most recommended stopping times fall less than 4 hours after the ideal stopping point.
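The collect, measure, and decide loop summarized above can be illustrated with a minimal Python sketch. It is not the method from the slides. As a stand-in for the unspecified statistical test, it treats two time windows as "repeated" when their means differ by at most a tolerance, and it recommends stopping once the fraction of repeated windows stays above a threshold for several consecutive checks. All function names and parameters (`window_len`, `tolerance`, `threshold`, `patience`, `check_every`) are hypothetical choices made for illustration.

```python
import random
import statistics


def repetitiveness_likelihood(samples, window_len, trials, tolerance, rng):
    """Estimate how likely a randomly chosen window repeats elsewhere.

    Assumption: two windows count as "repeated" when their means differ
    by at most `tolerance`. This is a simple placeholder for a proper
    statistical comparison of the two windows.
    """
    n = len(samples)
    if n < 2 * window_len:
        return 0.0  # not enough data to compare two disjoint windows
    hits = 0
    for _ in range(trials):
        start = rng.randrange(0, n - window_len + 1)
        window_mean = statistics.fmean(samples[start:start + window_len])
        # Compare against every aligned window that does not overlap it.
        for other in range(0, n - window_len + 1, window_len):
            if abs(other - start) < window_len:
                continue  # overlapping windows would trivially match
            other_mean = statistics.fmean(samples[other:other + window_len])
            if abs(window_mean - other_mean) <= tolerance:
                hits += 1
                break
    return hits / trials


def recommend_stop(samples, window_len=10, check_every=10, trials=50,
                   tolerance=1.0, threshold=0.9, patience=3, seed=0):
    """Return the sample index at which stopping is recommended, or None.

    Hypothetical rule: stop once the likelihood has stayed at or above
    `threshold` for `patience` consecutive checks.
    """
    rng = random.Random(seed)
    streak = 0
    for t in range(2 * window_len, len(samples) + 1, check_every):
        likelihood = repetitiveness_likelihood(
            samples[:t], window_len, trials, tolerance, rng)
        if likelihood >= threshold:
            streak += 1
            if streak >= patience:
                return t
        else:
            streak = 0
    return None
```

In this sketch, a metric that cycles through the same values quickly yields a recommendation, while a steadily growing metric (for example, a memory leak) never does, because no later window resembles an earlier one. A real test harness would feed in measured counters and would likely replace the mean comparison with a proper statistical test.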