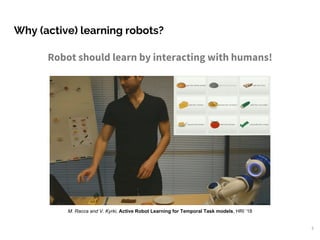

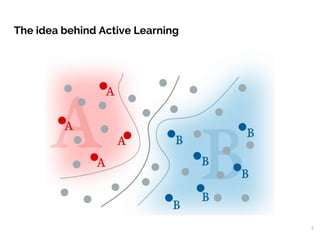

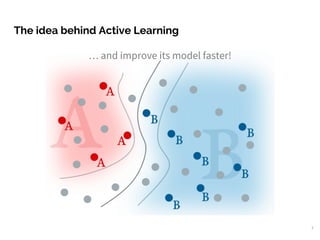

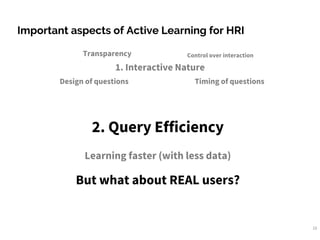

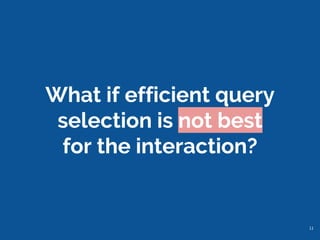

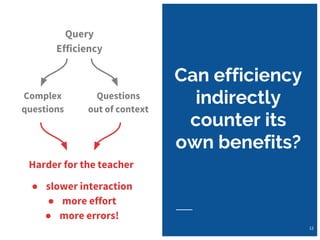

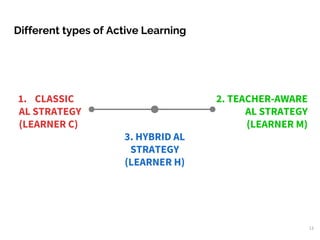

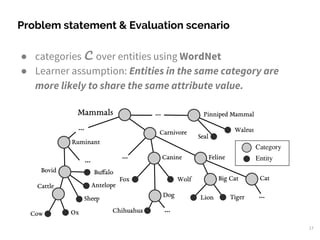

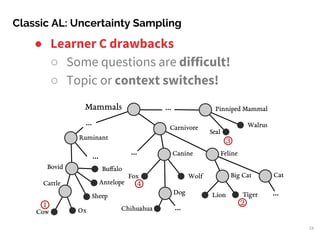

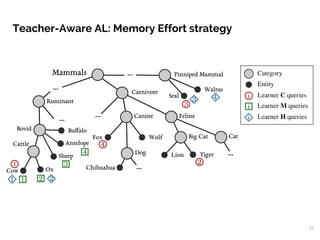

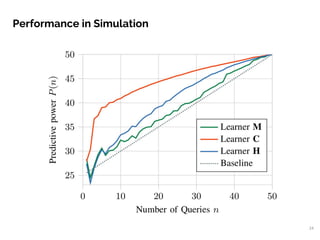

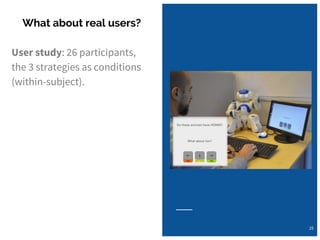

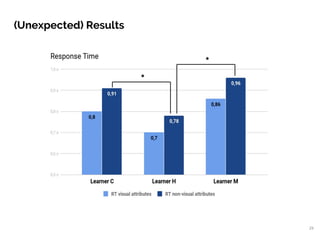

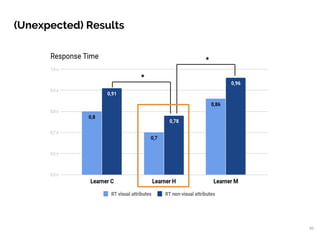

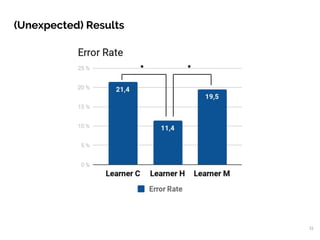

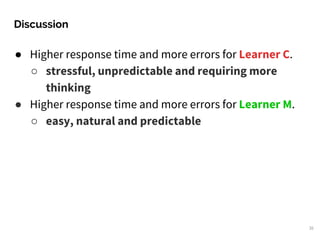

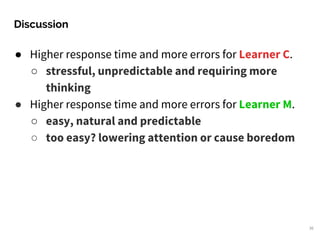

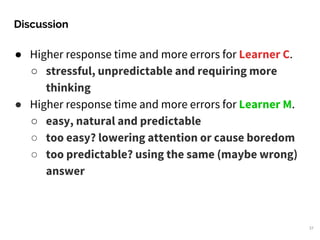

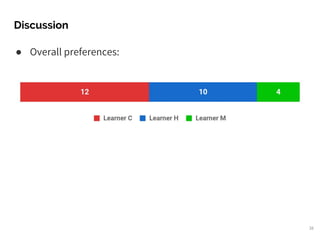

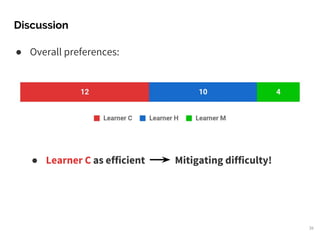

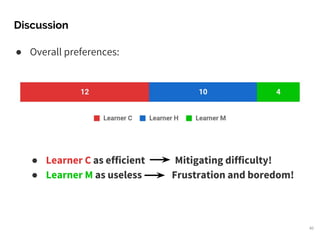

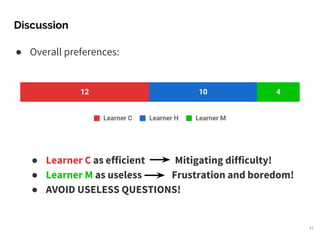

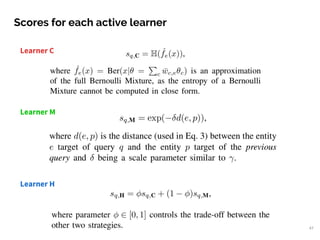

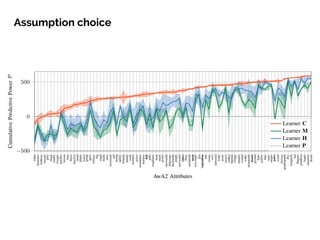

The document presents research on active learning strategies for robots that interact with human teachers. The study found that classic active learning, which optimizes for query efficiency, can increase task difficulty and lead to slower, less accurate responses from teachers than more teacher-aware strategies do. A hybrid strategy achieved intermediate results. The researchers conclude that active learning should take the human teacher's perspective into account, because optimizing for efficiency alone can undermine both the interaction and the learning.