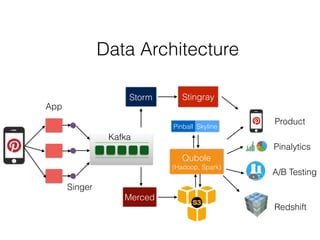

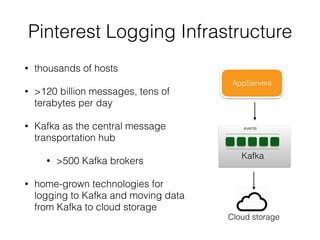

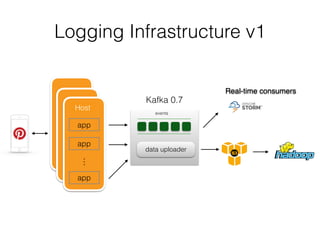

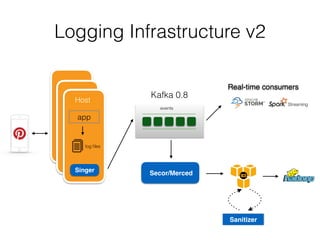

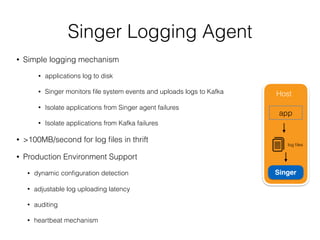

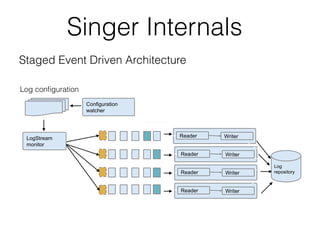

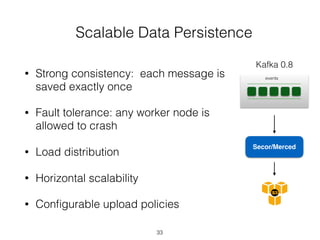

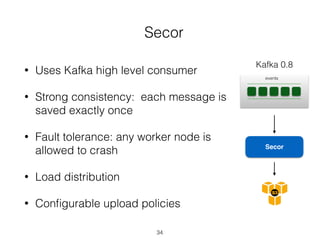

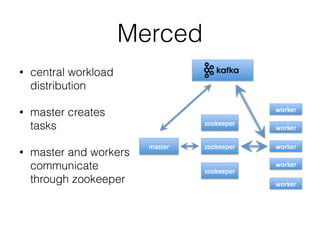

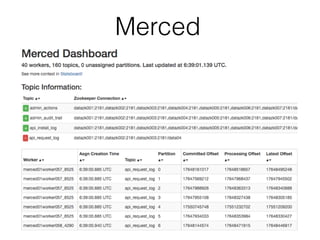

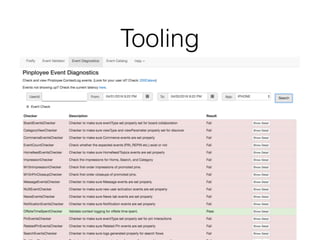

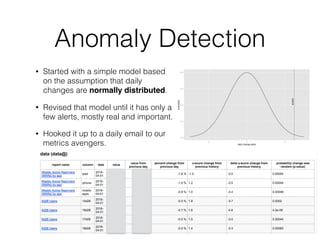

Pinterest uses Kafka as its central logging system, collecting more than 120 billion messages per day from thousands of hosts. To upload application logs to Kafka reliably and with low latency, Pinterest built Singer, a lightweight logging agent. Systems such as Secor and Merced then move the data from Kafka to cloud storage with exactly-once processing guarantees. Maintaining high log quality requires monitoring for anomalies, auditing new features, and using automated tooling to catch issues both before and after releases.
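
The summary does not say how exactly-once delivery to cloud storage is achieved. One common way to get that effect is to make uploads idempotent: name each output object after the offset of its first message, so a retry after a crash overwrites the same object instead of creating a duplicate. The sketch below illustrates only that idea and is not the actual Secor or Merced implementation. The in-memory `log` list and `store` dict stand in for a Kafka partition and a cloud bucket, and `BATCH_SIZE`, `object_key`, and `upload_batches` are hypothetical names.

```python
from typing import Dict, List

BATCH_SIZE = 3  # hypothetical number of messages per uploaded object


def object_key(topic: str, partition: int, start_offset: int) -> str:
    """Deterministic object name derived from the first offset in the batch."""
    return f"{topic}/{partition}/{start_offset:020d}"


def upload_batches(
    log: List[str],
    store: Dict[str, List[str]],
    topic: str,
    partition: int,
    committed_offset: int,
    crash_before_commit: bool = False,
) -> int:
    """Copy messages from `log` into `store`, starting at `committed_offset`.

    Returns the new committed offset. When `crash_before_commit` is True,
    the first upload happens but its offset is never committed, which
    simulates a process that dies between the upload and the commit.
    """
    offset = committed_offset
    while offset < len(log):
        batch = log[offset:offset + BATCH_SIZE]
        # Writing to a key derived from the offset makes the upload idempotent:
        # repeating it after a failure replaces the object rather than adding one.
        store[object_key(topic, partition, offset)] = list(batch)
        if crash_before_commit:
            return committed_offset  # upload done, commit lost
        offset += len(batch)
        committed_offset = offset  # commit only after the upload succeeded
    return committed_offset
```

Calling `upload_batches` once with `crash_before_commit=True` and then again from the returned offset leaves every message in the store exactly once, because the retried batch reuses the key written before the simulated crash.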