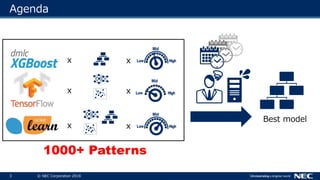

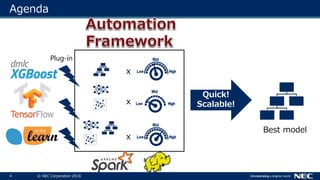

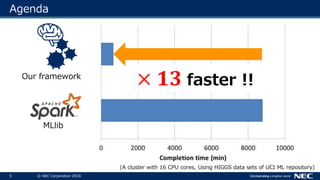

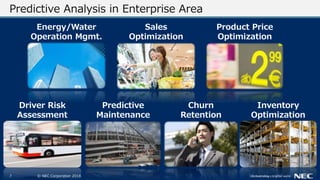

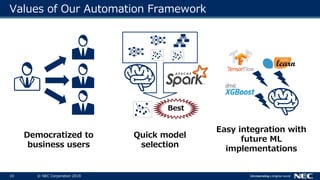

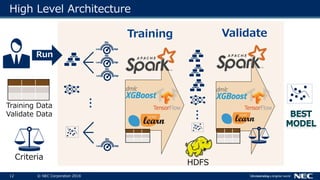

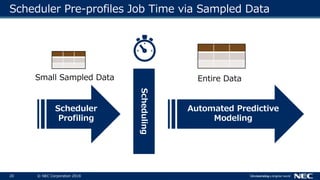

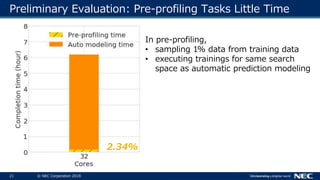

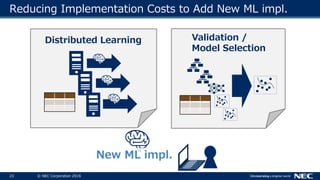

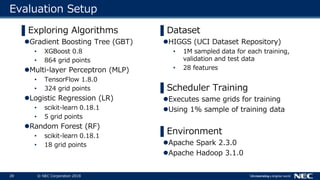

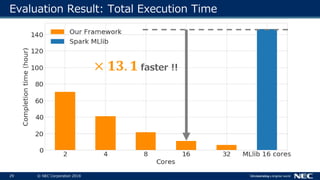

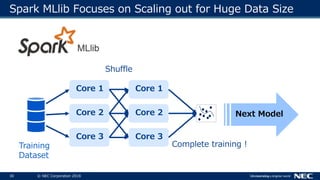

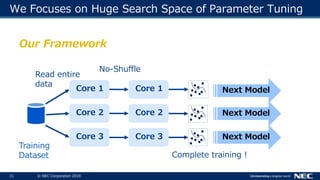

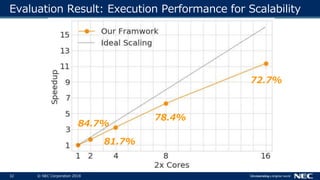

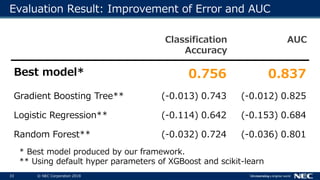

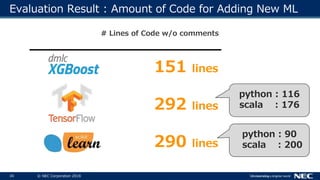

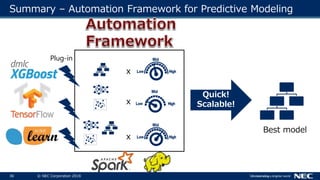

This deck presents a framework for automating predictive modeling on Apache Spark. The framework searches for predictive models across large parameter spaces and targets two challenges: scaling that search, and making new machine learning implementations easy to plug in. In the reported evaluation, the framework achieves a 13x speedup over Spark MLlib and reduces the amount of code needed to add a new machine learning algorithm.
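To make the idea of searching across a parameter space with pluggable learners concrete, here is a minimal single-process sketch. It is not the framework's API: the names `expand_grid`, `search`, `fit_line`, and `fit_constant` are hypothetical, the "models" are toy functions, and the candidates are evaluated sequentially, whereas the framework distributes this work on Spark.

```python
from itertools import product


def expand_grid(grid):
    """Yield one dict per combination of the parameter values in `grid`."""
    names = sorted(grid)
    for values in product(*(grid[n] for n in names)):
        yield dict(zip(names, values))


def search(learners, score):
    """Return (score, learner name, params) for the best-scoring candidate.

    `learners` maps a name to (fit_function, parameter_grid); `score`
    takes a fitted model and returns a number where higher is better.
    """
    best = None
    for name, (fit, grid) in learners.items():
        for params in expand_grid(grid):
            candidate = (score(fit(**params)), name, params)
            if best is None or candidate[0] > best[0]:
                best = candidate
    return best


# Toy stand-ins (hypothetical): a "model" is just a function x -> prediction.
def fit_line(slope, intercept):
    return lambda x: slope * x + intercept


def fit_constant(value):
    return lambda x: value


data = [(0, 1), (1, 3), (2, 5)]  # points on y = 2x + 1


def neg_squared_error(model):
    """Negated squared error, so that a larger score means a better fit."""
    return -sum((model(x) - y) ** 2 for x, y in data)


# Adding a learner means adding one entry here: a fit function and its grid.
learners = {
    "line": (fit_line, {"slope": [1, 2, 3], "intercept": [0, 1]}),
    "constant": (fit_constant, {"value": [1, 3, 5]}),
}
best_score, best_name, best_params = search(learners, neg_squared_error)
```

Registering a learner as a single dictionary entry is one simple way to picture the plug-and-play goal; the actual integration mechanism is described in the slides, not here.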

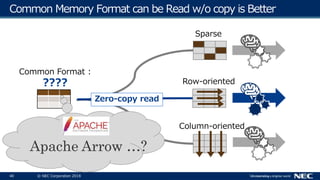

![Slide 39 (© NEC Corporation 2018). Future work - Convert Data Structure for Each ML impl. Common format: Double[][]. Sparse, column-oriented, row-oriented. Memory copy & convert.](https://image.slidesharecdn.com/quickquicknew-180622215414/85/Quick-Quick-Exploration-A-framework-for-searching-a-predictive-model-on-Apache-Spark-39-320.jpg)
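The slide names a common dense format (`Double[][]`) and three layouts (sparse, column-oriented, row-oriented) but does not show how the conversion is done. The sketch below is an assumption-laden illustration of why each conversion implies a memory copy: it treats the common format as a row-oriented list of lists, and the helper names `to_column_oriented` and `to_sparse_rows` are hypothetical.

```python
def to_column_oriented(rows):
    """Transpose a row-oriented dense matrix into a list of columns.

    Every value is copied into a new list, which is the "memory copy
    and convert" cost this sketch is meant to illustrate.
    """
    return [list(col) for col in zip(*rows)]


def to_sparse_rows(rows):
    """Convert each dense row into a {column_index: value} dict of non-zeros."""
    return [{j: v for j, v in enumerate(row) if v != 0.0} for row in rows]


# Assumed common format: a dense, row-oriented matrix of doubles.
dense = [
    [1.0, 0.0, 2.0],
    [0.0, 0.0, 3.0],
]

columns = to_column_oriented(dense)  # one inner list per feature column
sparse = to_sparse_rows(dense)       # one dict of non-zero entries per row
```

Which layout a given machine learning implementation expects depends on that implementation; the dictionary-per-row sparse form here is only one of several possible sparse encodings.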