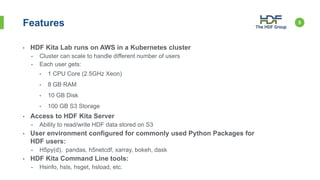

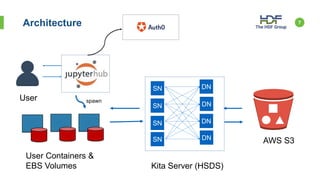

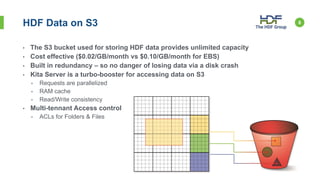

The HDF Group offers HDF Kita Lab, a hosted JupyterLab environment that gives users access to HDF data stored on AWS S3 through the HDF Kita Server. HDF Kita Lab extends JupyterLab with features such as automatic Kita Server configuration and HDF branding. It runs on a Kubernetes cluster in AWS that scales with the number of users. Each user receives computing resources and access to HDF data on S3, which can be analyzed with commonly used Python packages. Because the data lives on S3, storage is effectively unlimited and data can be shared between users.