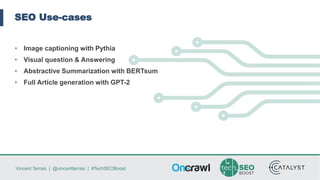

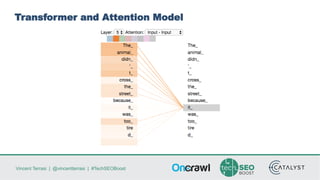

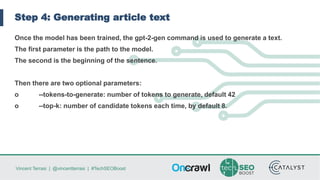

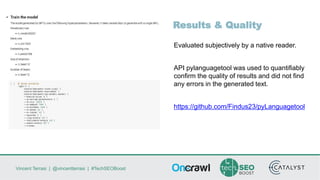

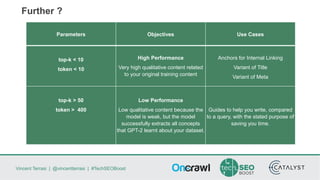

Vincent Terrasi discusses using AI models like GPT-2 and BERT to generate qualitative content in different languages. He outlines the steps to fine-tune a GPT-2 model for a new language using SentencePiece to compress the training data into byte-pair encodings. With sufficient training data in that language, the model can generate fluent multi-sentence texts that pass quality checks by a native speaker and language analysis tool. However, limited training data results in weaker performance. Terrasi provides resources to experiment with a French GPT-2 model and encourages adapting the approach for other languages.