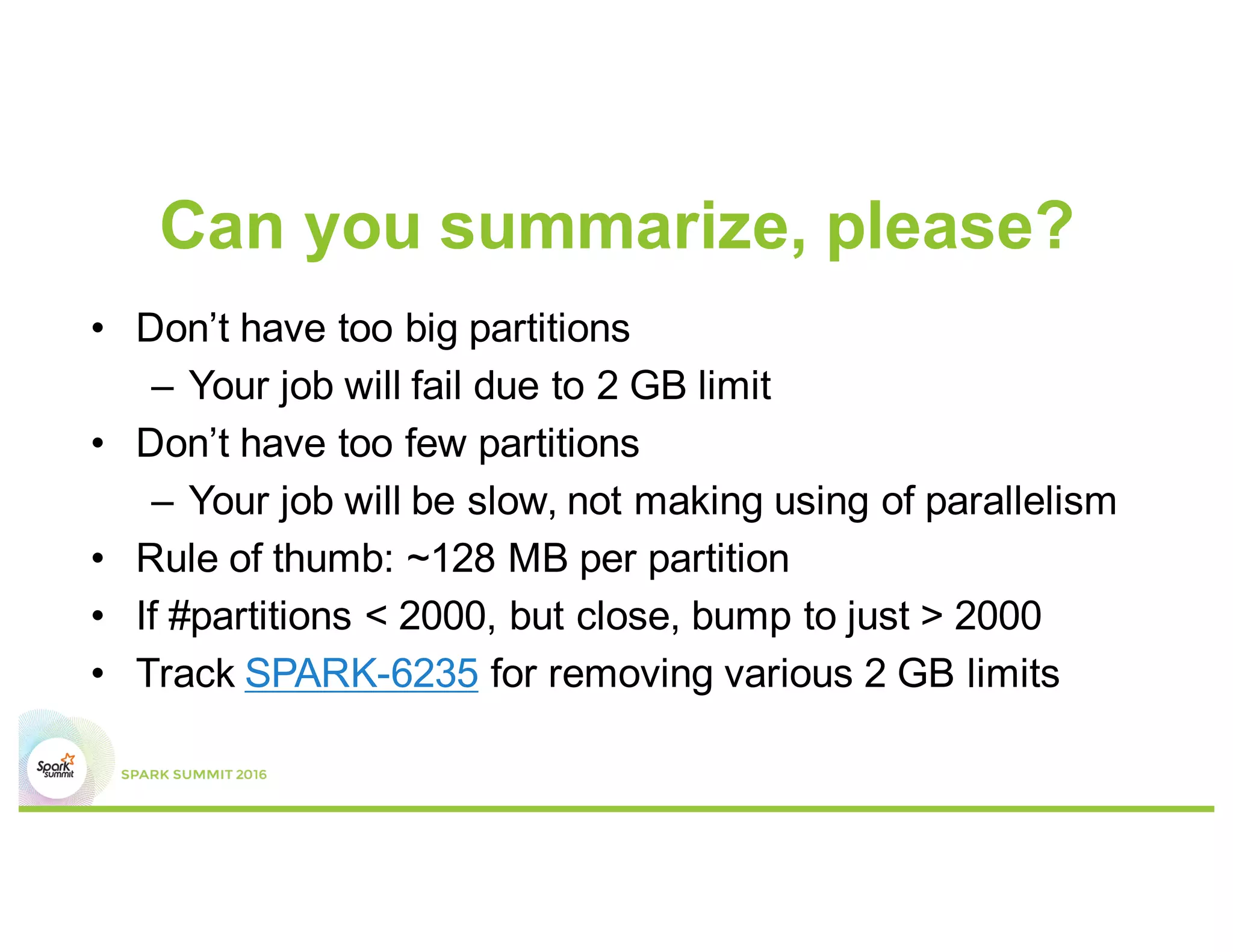

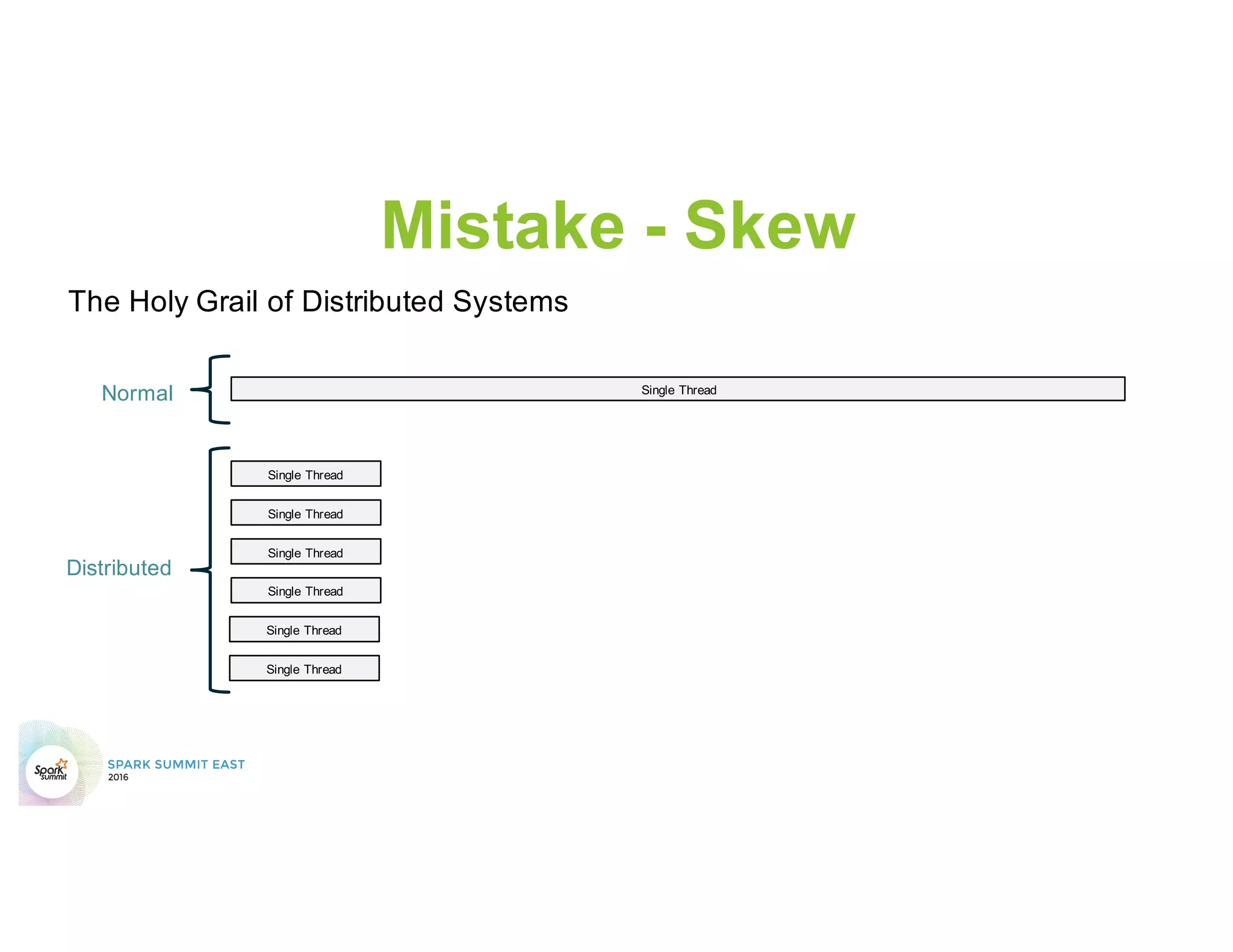

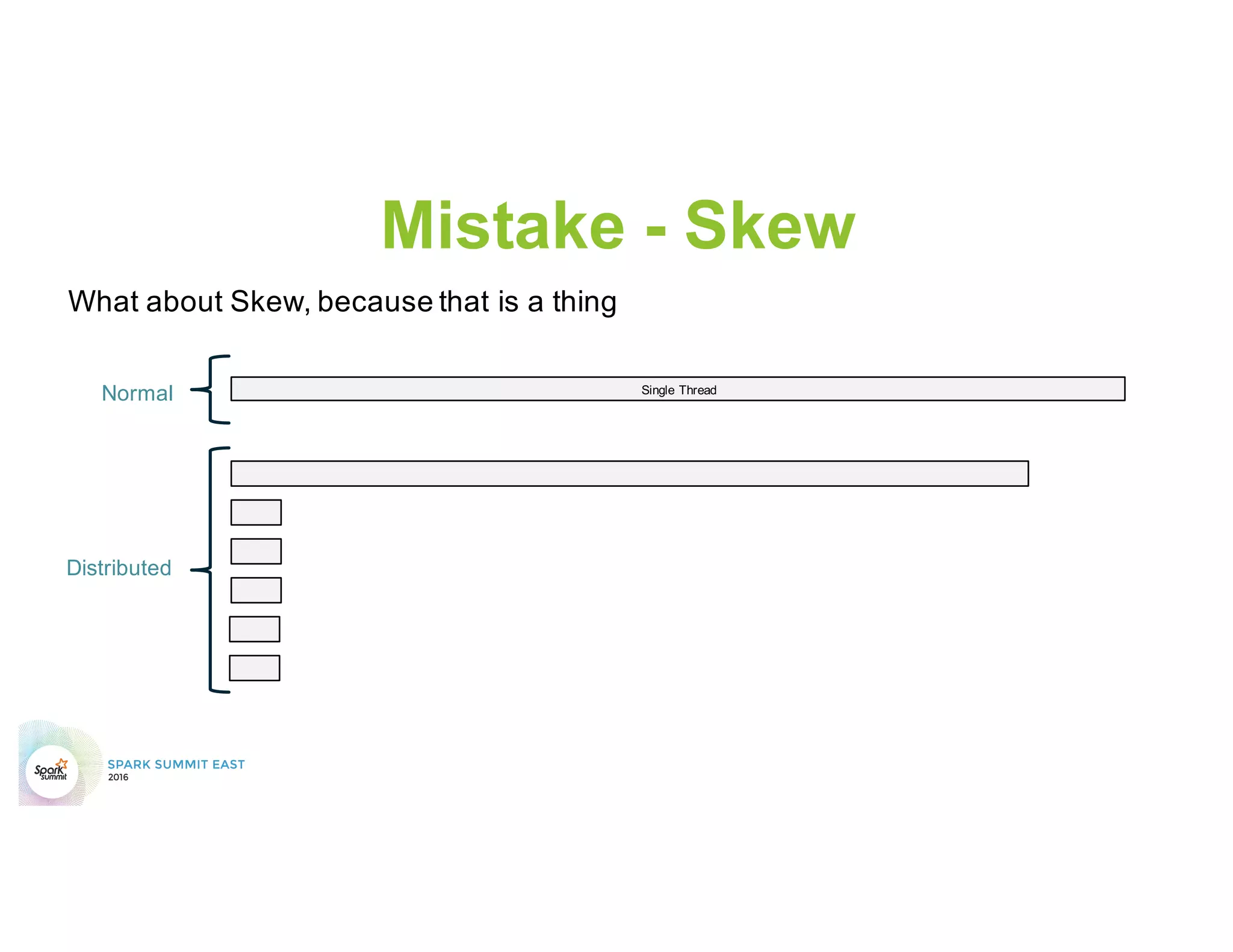

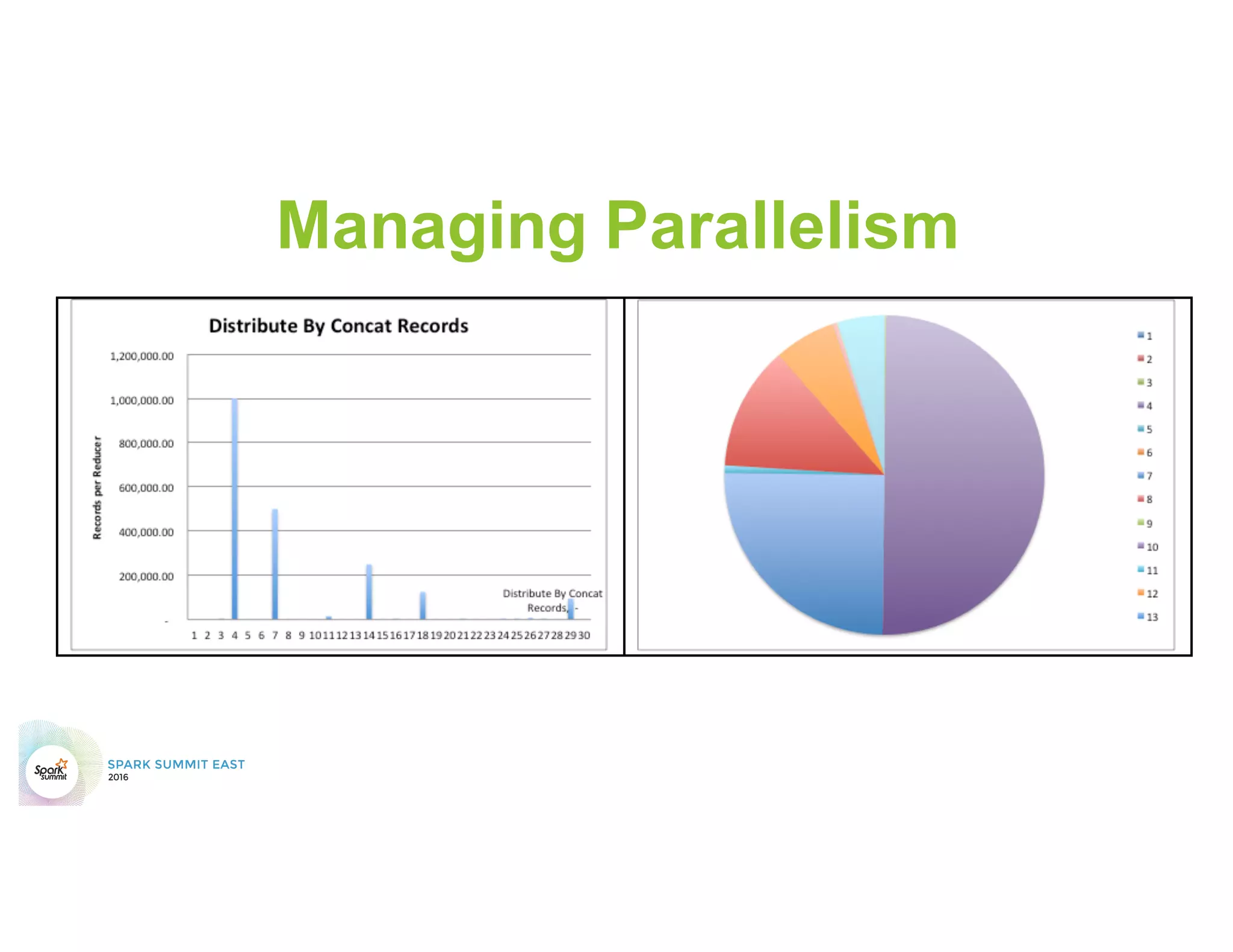

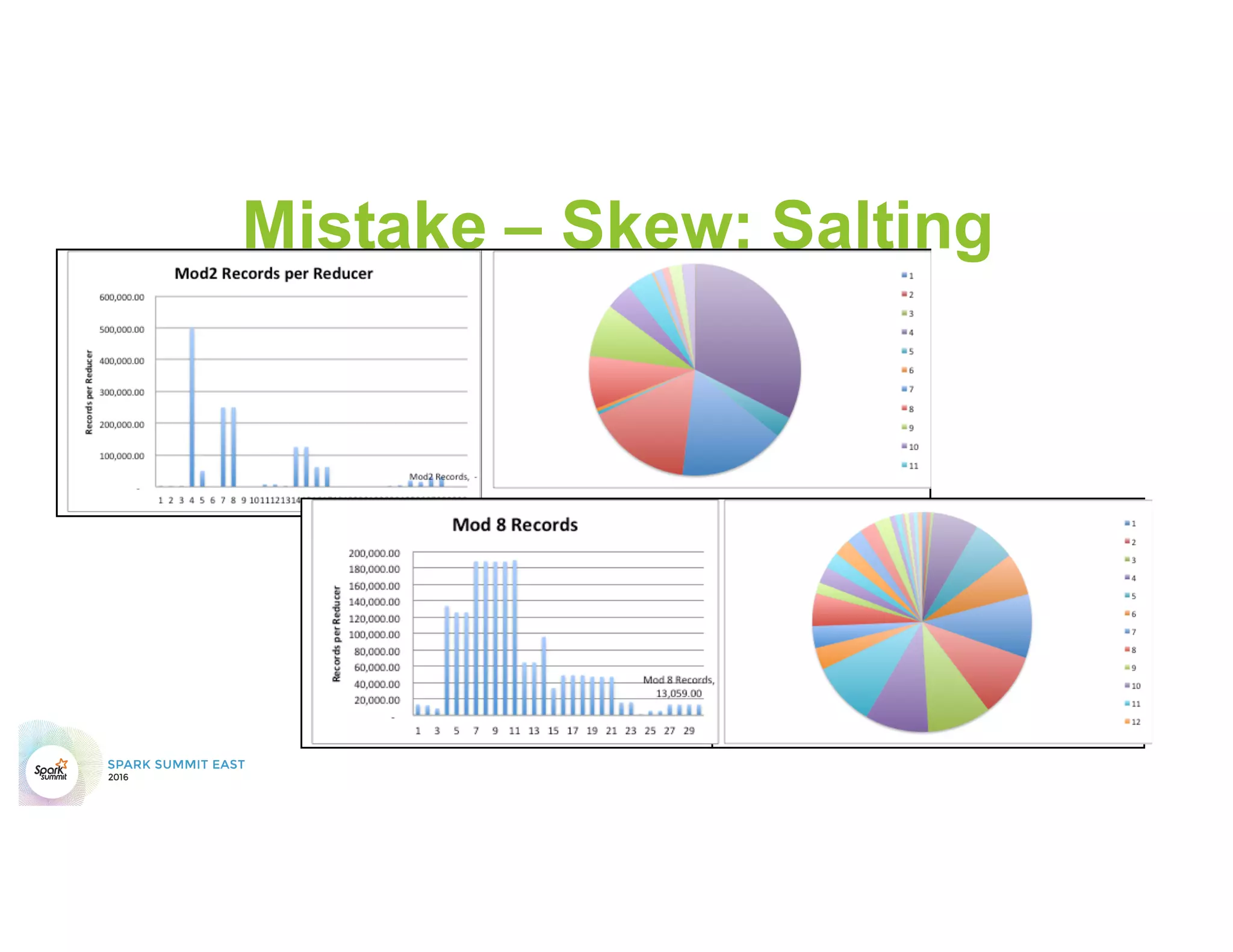

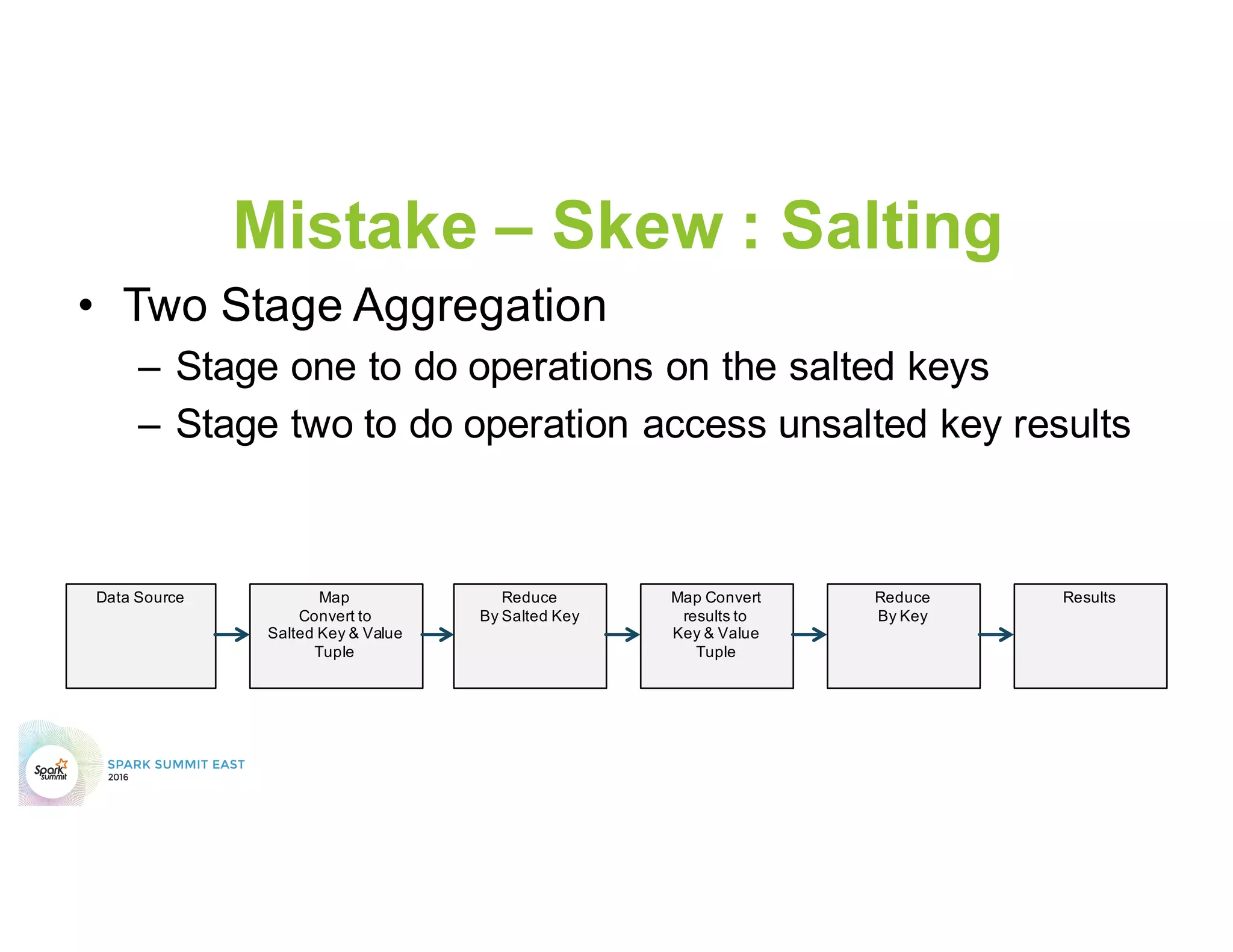

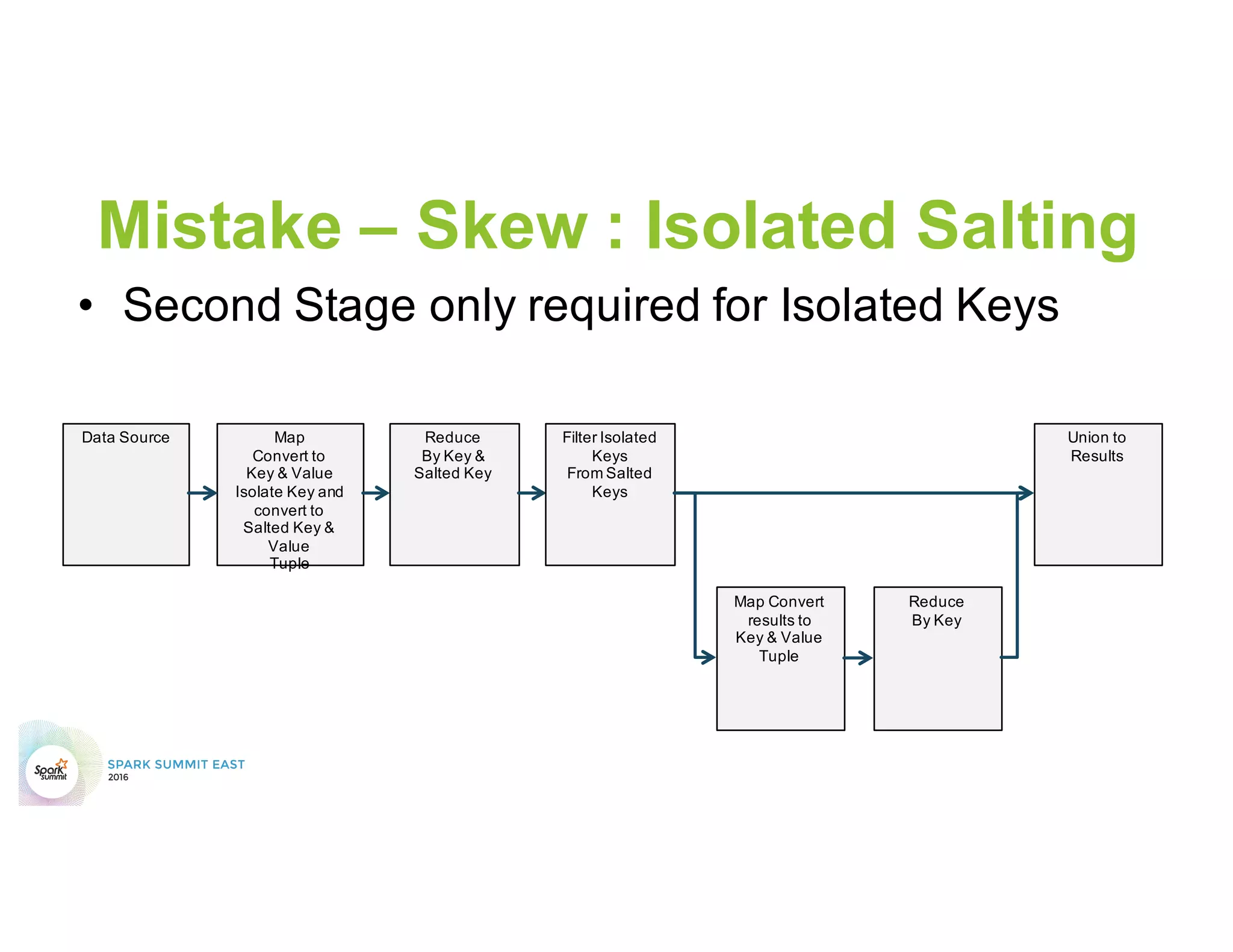

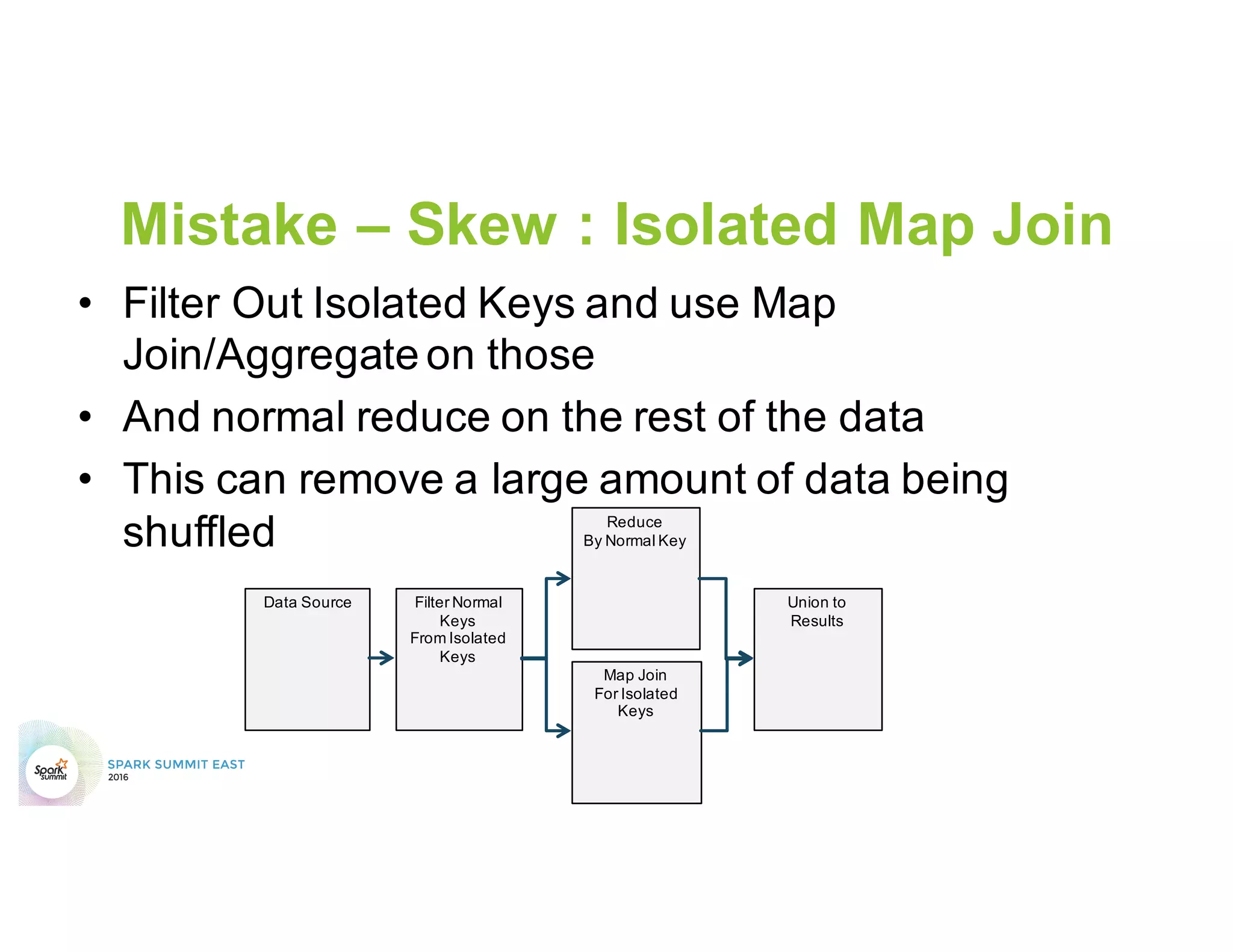

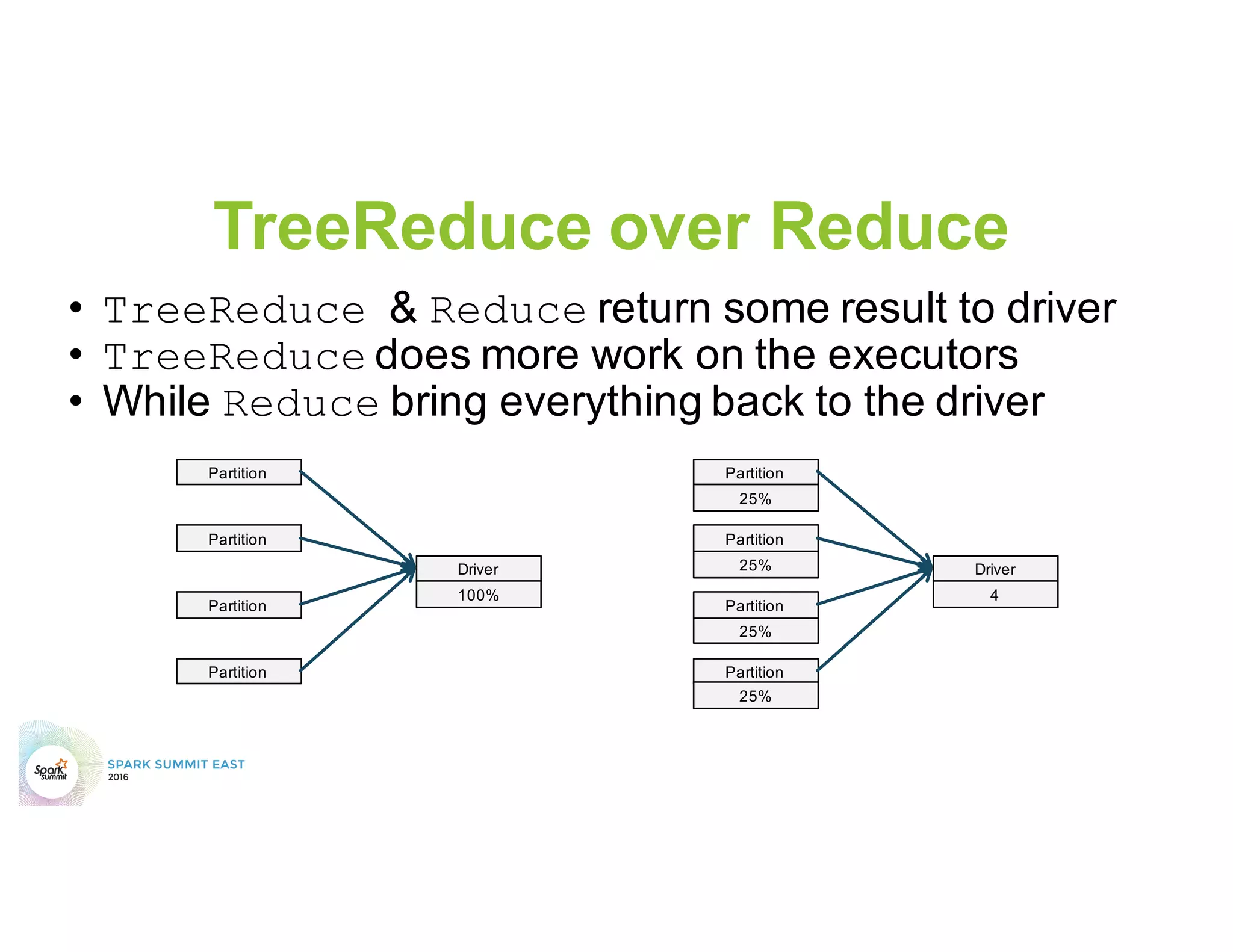

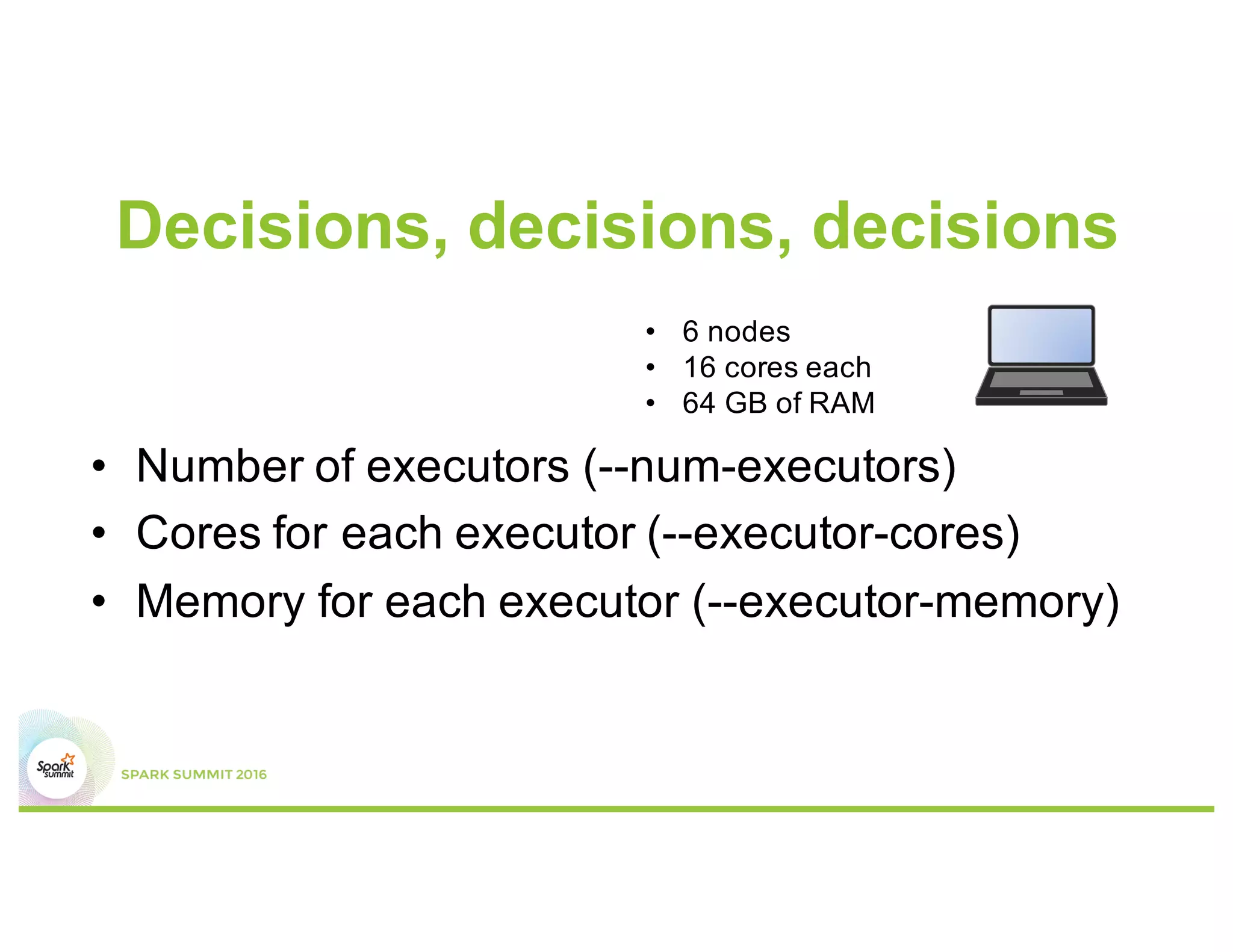

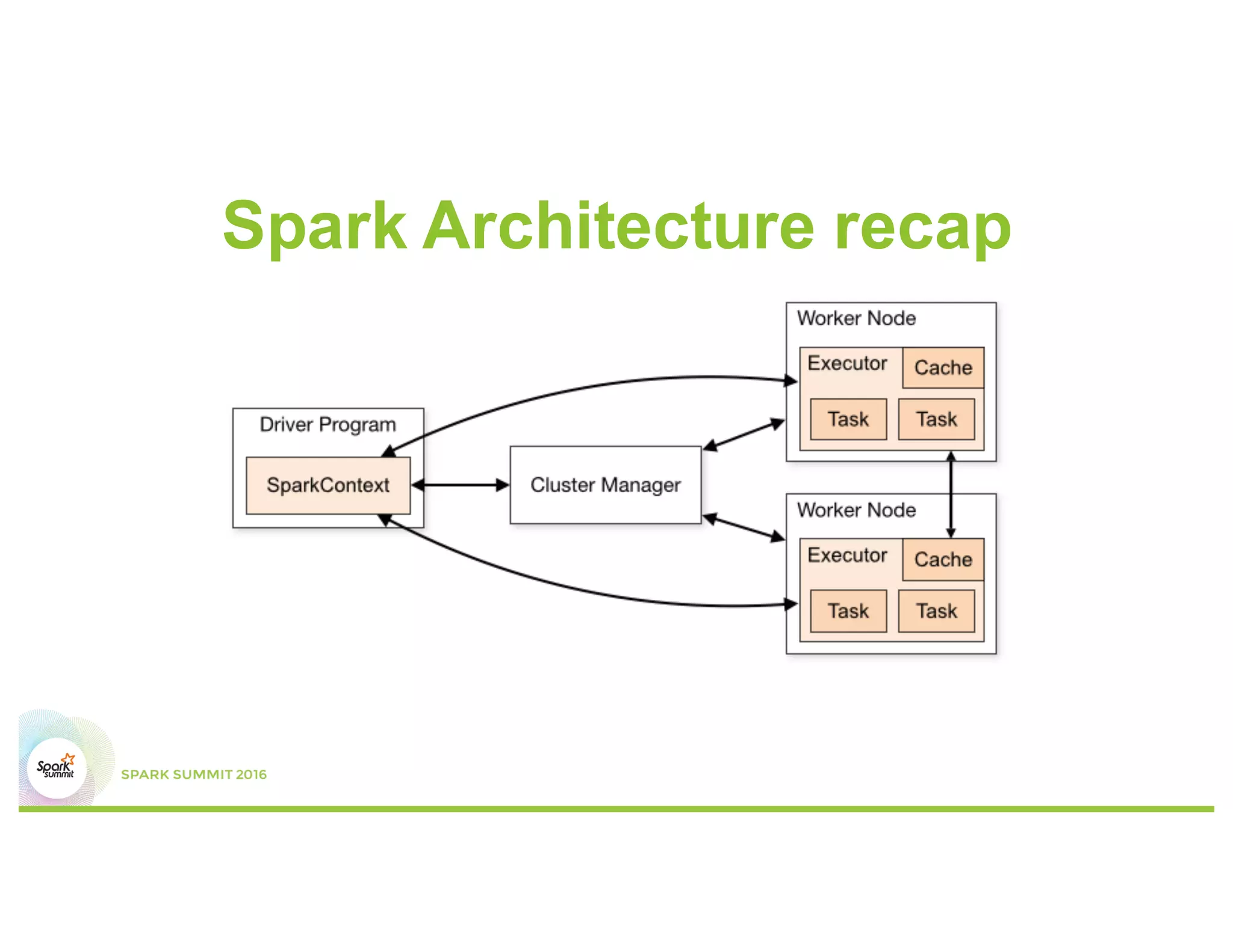

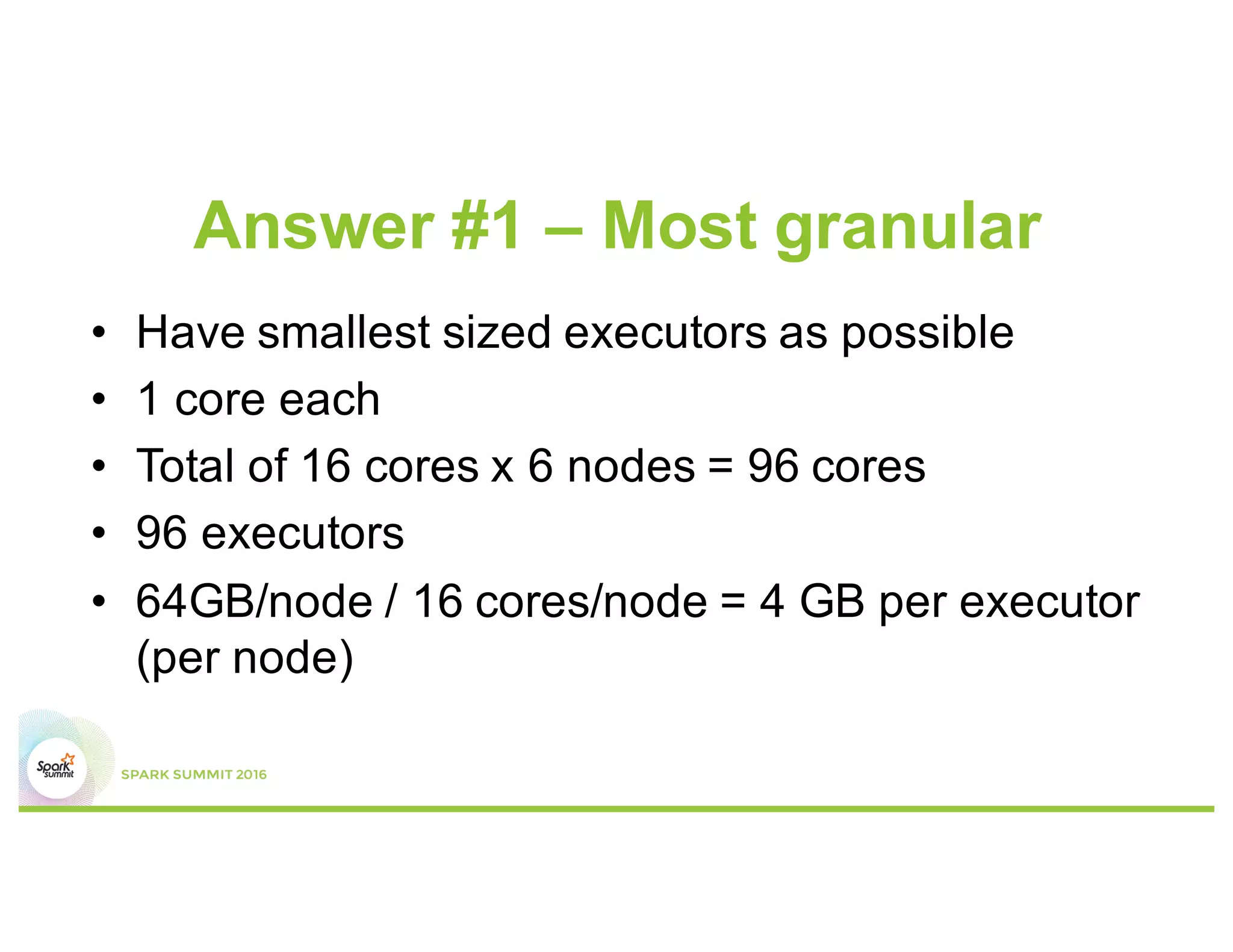

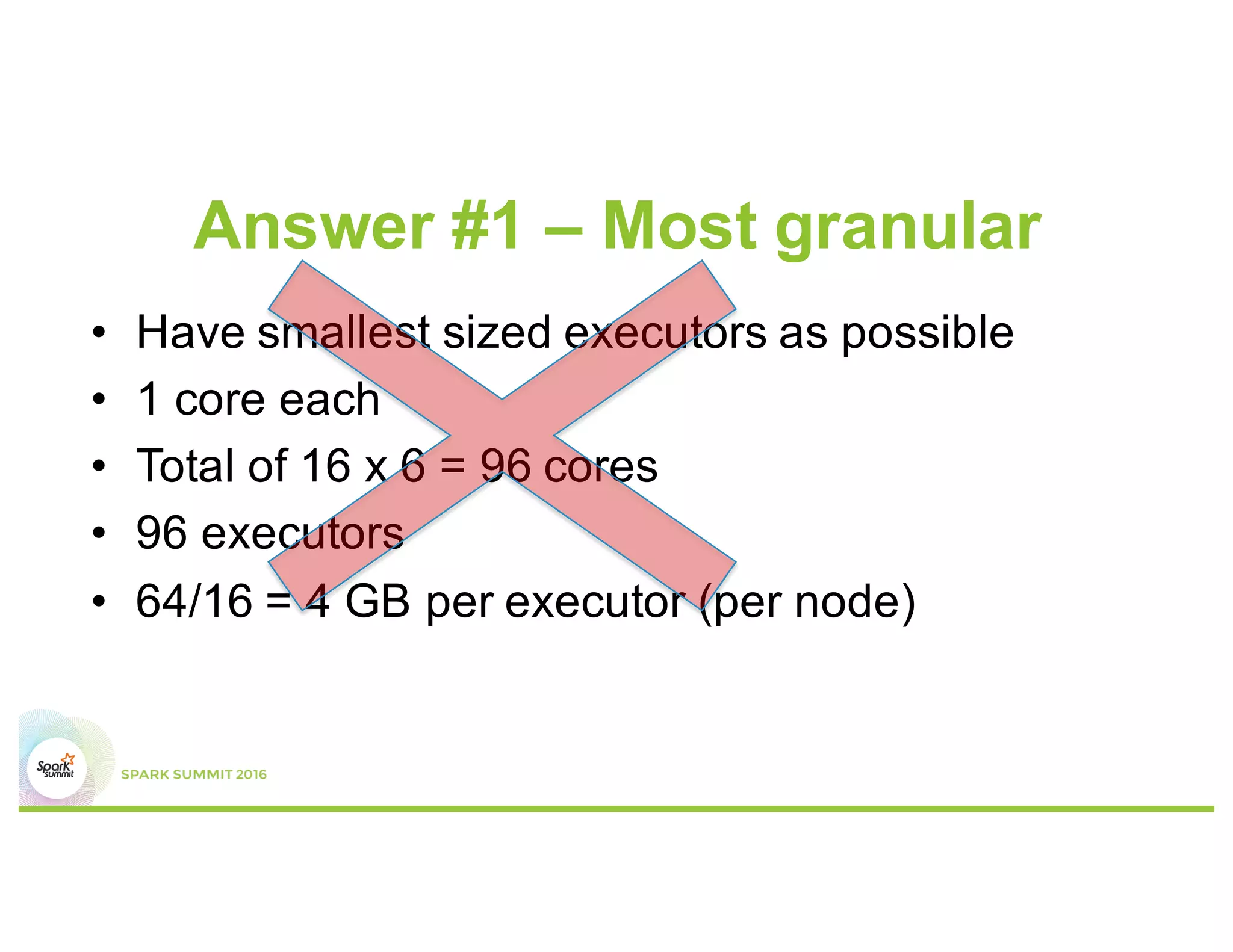

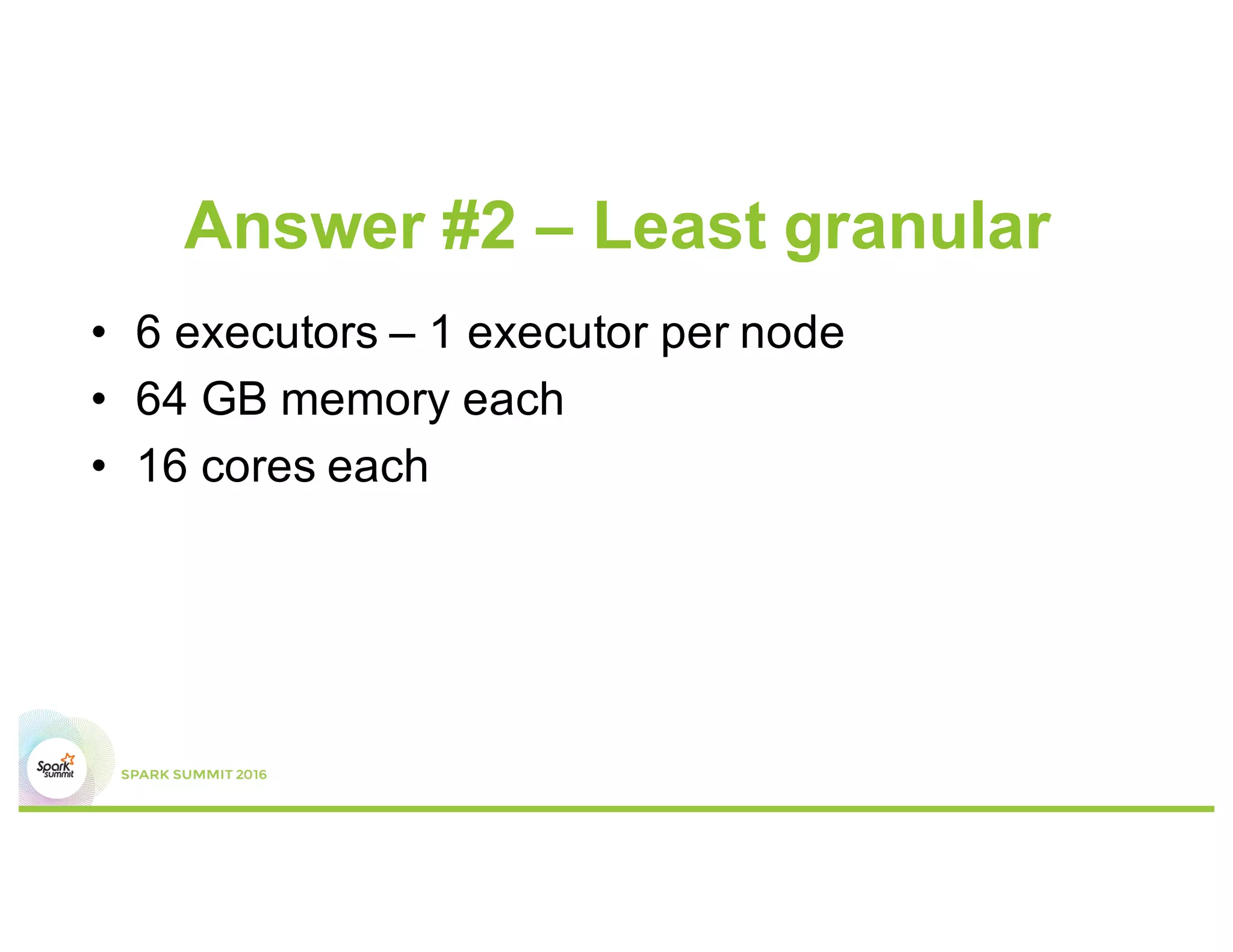

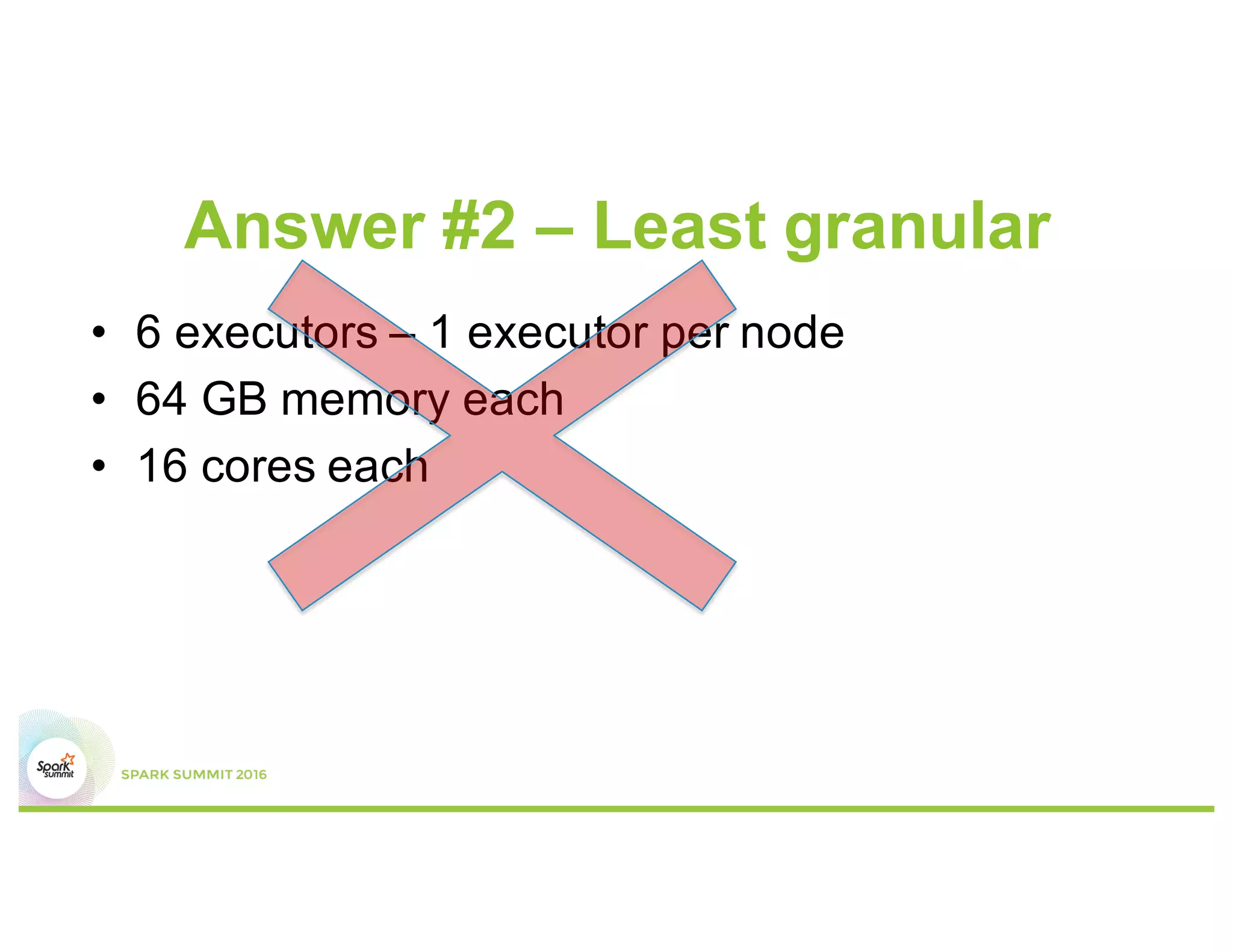

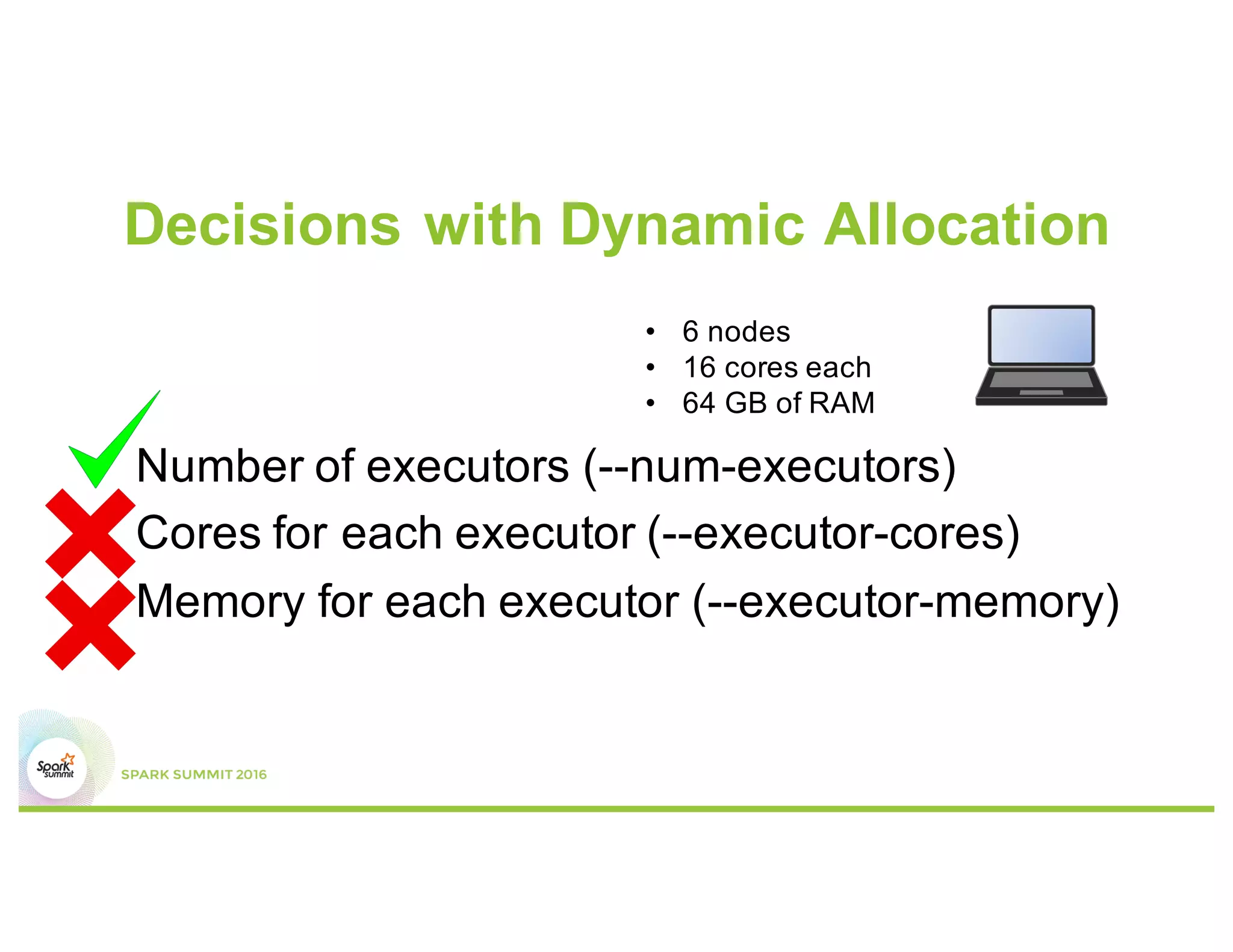

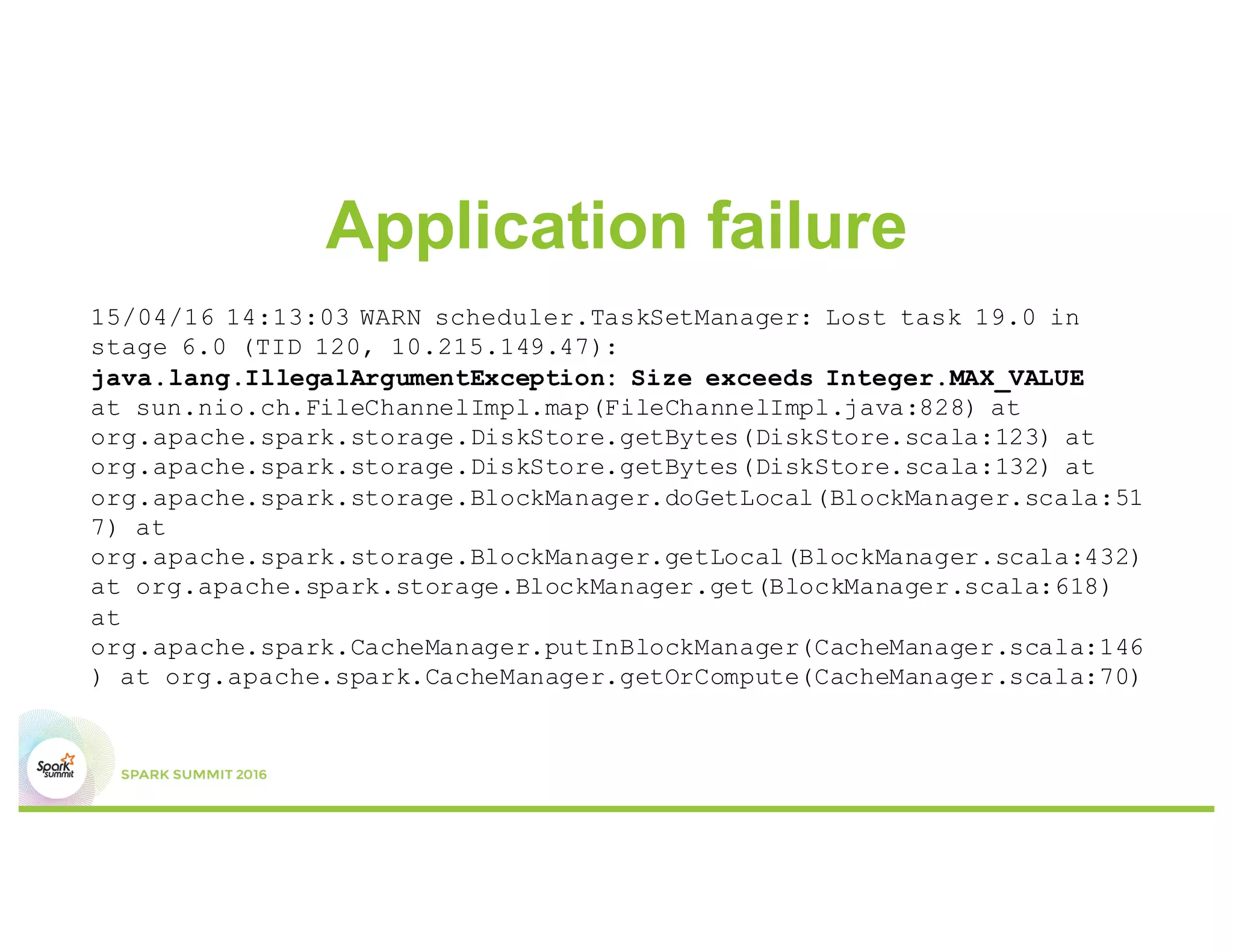

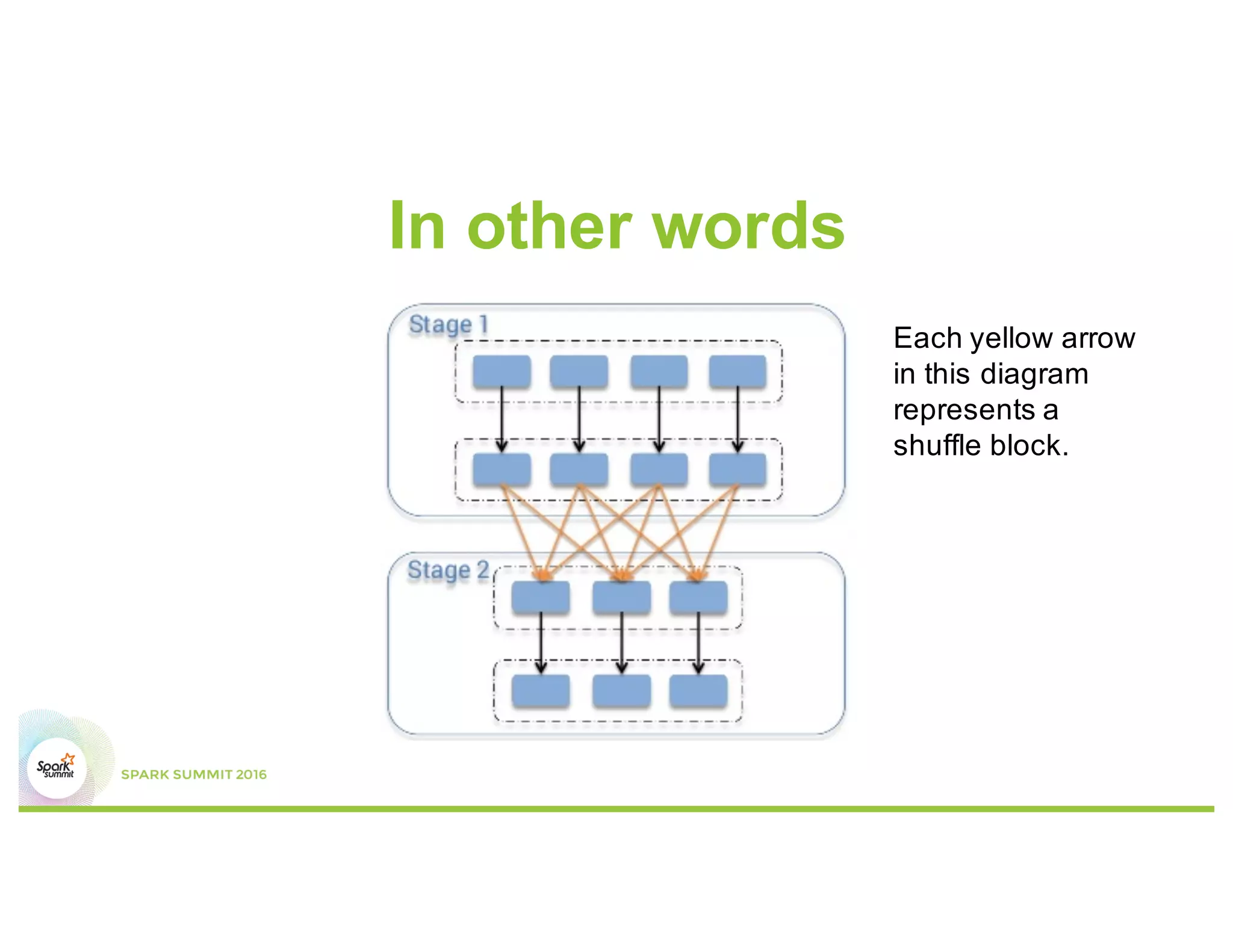

This document discusses common mistakes made when writing Spark applications and recommends how to avoid them. It covers executor sizing, application failures caused by shuffle blocks exceeding the 2 GB block size limit, slow jobs caused by data skew, and managing the DAG to avoid unnecessary shuffles and stages. Recommendations include using smaller executors, increasing the number of partitions, mitigating skew with techniques such as salting, and preferring `reduceByKey` over `groupByKey` and `treeReduce` over `reduce` to improve performance and resource usage.
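Salting, one of the skew remedies listed above, can be sketched as a two-stage aggregation: attach a salt to each key, aggregate per salted key, then strip the salt and aggregate again. The sketch below simulates this on local Scala collections (Scala 2.13+ for `groupMapReduce`) rather than on an RDD, so it only shows the shape of the technique. `SaltingSketch`, `saltedSum`, and `numSalts` are hypothetical names, and the position-based salt is an assumption chosen to keep the output deterministic; in Spark, each stage would roughly correspond to one `reduceByKey` call.

```scala
// Sketch of salted two-stage aggregation on local Scala collections (Scala 2.13+).
// SaltingSketch, saltedSum, and numSalts are hypothetical names for illustration.
object SaltingSketch {

  def saltedSum(records: Seq[(String, Int)], numSalts: Int): Map[String, Int] = {
    require(numSalts > 0, "numSalts must be positive")

    // Stage 1a: attach a salt in [0, numSalts) to every key. The salt is derived
    // from the record position to keep the sketch deterministic; a random salt
    // would spread the keys in the same way.
    val salted: Seq[((String, Int), Int)] =
      records.zipWithIndex.map { case ((key, value), i) => ((key, i % numSalts), value) }

    // Stage 1b: partial sums per (key, salt). In Spark this would be the first
    // reduceByKey, and a hot key's records would be split across up to
    // numSalts sub-keys instead of landing in a single task.
    val partial: Map[(String, Int), Int] =
      salted.groupMapReduce(_._1)(_._2)(_ + _)

    // Stage 2: drop the salt and combine the (at most numSalts) partial sums per key.
    partial.toSeq.groupMapReduce { case ((key, _), _) => key } { case (_, sum) => sum }(_ + _)
  }

  def main(args: Array[String]): Unit = {
    // Skewed input: "hot" appears far more often than any other key.
    val records = Seq.fill(1000)(("hot", 1)) ++ Seq(("a", 2), ("b", 3), ("a", 4))
    val result = saltedSum(records, numSalts = 8)
    println(result("hot")) // 1000
    println(result("a"))   // 6
  }
}
```

The result matches a direct per-key sum; what changes is how the work is distributed. The first stage splits a hot key across up to `numSalts` partial aggregations, and the second stage combines at most `numSalts` values per key, so a larger `numSalts` trades extra shuffle volume for less skew.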

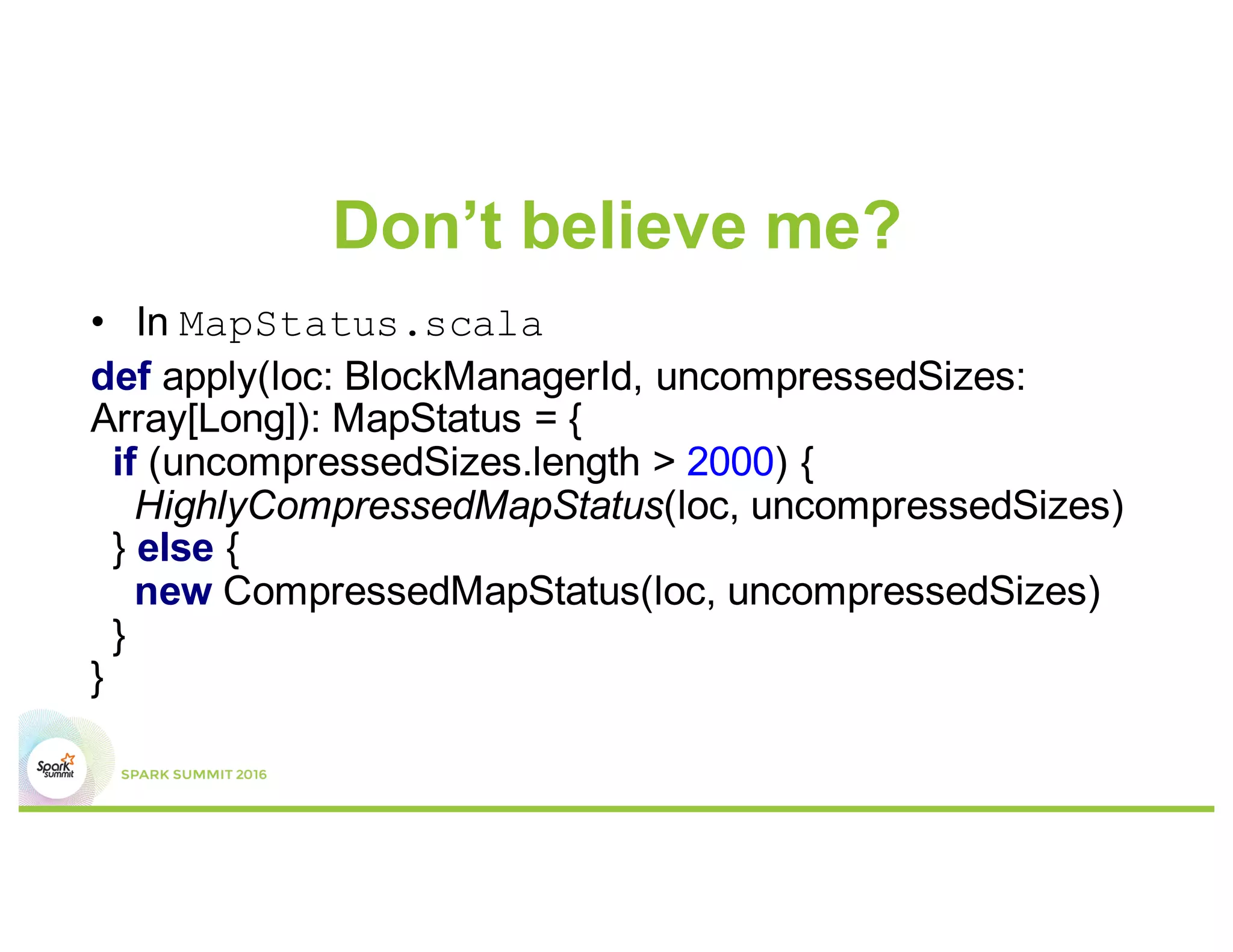

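![Don’t believe me? In MapStatus.scala](https://image.slidesharecdn.com/top5mistakesv4-160218171402/75/Top-5-mistakes-when-writing-Spark-applications-41-2048.jpg)

Don’t believe me? In `MapStatus.scala`:

```scala
def apply(loc: BlockManagerId, uncompressedSizes: Array[Long]): MapStatus = {
  if (uncompressedSizes.length > 2000) {
    // More than 2000 reduce partitions: keep only a compact summary of block sizes
    HighlyCompressedMapStatus(loc, uncompressedSizes)
  } else {
    // Otherwise: keep an approximate (compressed) size for every block
    new CompressedMapStatus(loc, uncompressedSizes)
  }
}
```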
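The excerpt shows Spark switching to `HighlyCompressedMapStatus` once a shuffle has more than 2000 reduce partitions. Combined with the per-block ceiling of about 2 GB (`Integer.MAX_VALUE` bytes) in the Spark versions this talk targets, that suggests a rough way to pick a partition count. The sketch below is a hypothetical helper, not a Spark API: `PartitionSizing` and `choosePartitions` are illustrative names, the 128 MB target per partition is an assumed rule of thumb, the "step past 2000 when within 10% below it" policy is an assumption, and the shuffle size estimate has to come from elsewhere (for example, the Spark UI).

```scala
// Hypothetical helper: sizes a shuffle from an estimated shuffle volume.
// None of these names are Spark APIs; the defaults are assumptions.
object PartitionSizing {

  // Per-block ceiling in older Spark versions: Integer.MAX_VALUE bytes (~2 GB).
  val MaxBlockBytes: Long = Int.MaxValue.toLong

  // Threshold from MapStatus.scala: more than this many partitions
  // switches Spark to HighlyCompressedMapStatus.
  val HighlyCompressedThreshold: Int = 2000

  // Assumed rule of thumb: aim for roughly 128 MB per partition.
  val DefaultTargetBytes: Long = 128L * 1024 * 1024

  def choosePartitions(shuffleBytes: Long, targetBytes: Long = DefaultTargetBytes): Int = {
    require(shuffleBytes >= 0, "shuffleBytes must be non-negative")
    require(targetBytes > 0 && targetBytes < MaxBlockBytes,
      "target must be positive and below the block size limit")

    // Ceiling division: the average partition size is then at most targetBytes.
    val byTarget = math.max(1L, (shuffleBytes + targetBytes - 1) / targetBytes)

    // Assumed policy: if the estimate lands just under the threshold
    // (within 10%), step over it so the compact map status is used.
    val nearThreshold =
      byTarget > HighlyCompressedThreshold * 9 / 10 && byTarget <= HighlyCompressedThreshold
    val n = if (nearThreshold) HighlyCompressedThreshold + 1L else byTarget

    require(n <= Int.MaxValue, "partition count does not fit in an Int")
    n.toInt
  }

  def main(args: Array[String]): Unit = {
    val gb = 1024L * 1024 * 1024
    println(choosePartitions(100 * gb)) // 800
    println(choosePartitions(250 * gb)) // 2001 (2000 stepped past the threshold)
  }
}
```

The result would then be passed wherever Spark accepts a partition count, such as `rdd.repartition(n)`, `reduceByKey(func, n)`, or the `spark.sql.shuffle.partitions` setting for DataFrame shuffles. Note that the ceiling division bounds only the average partition size; a skewed key can still produce an oversized block, which is where salting comes in.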