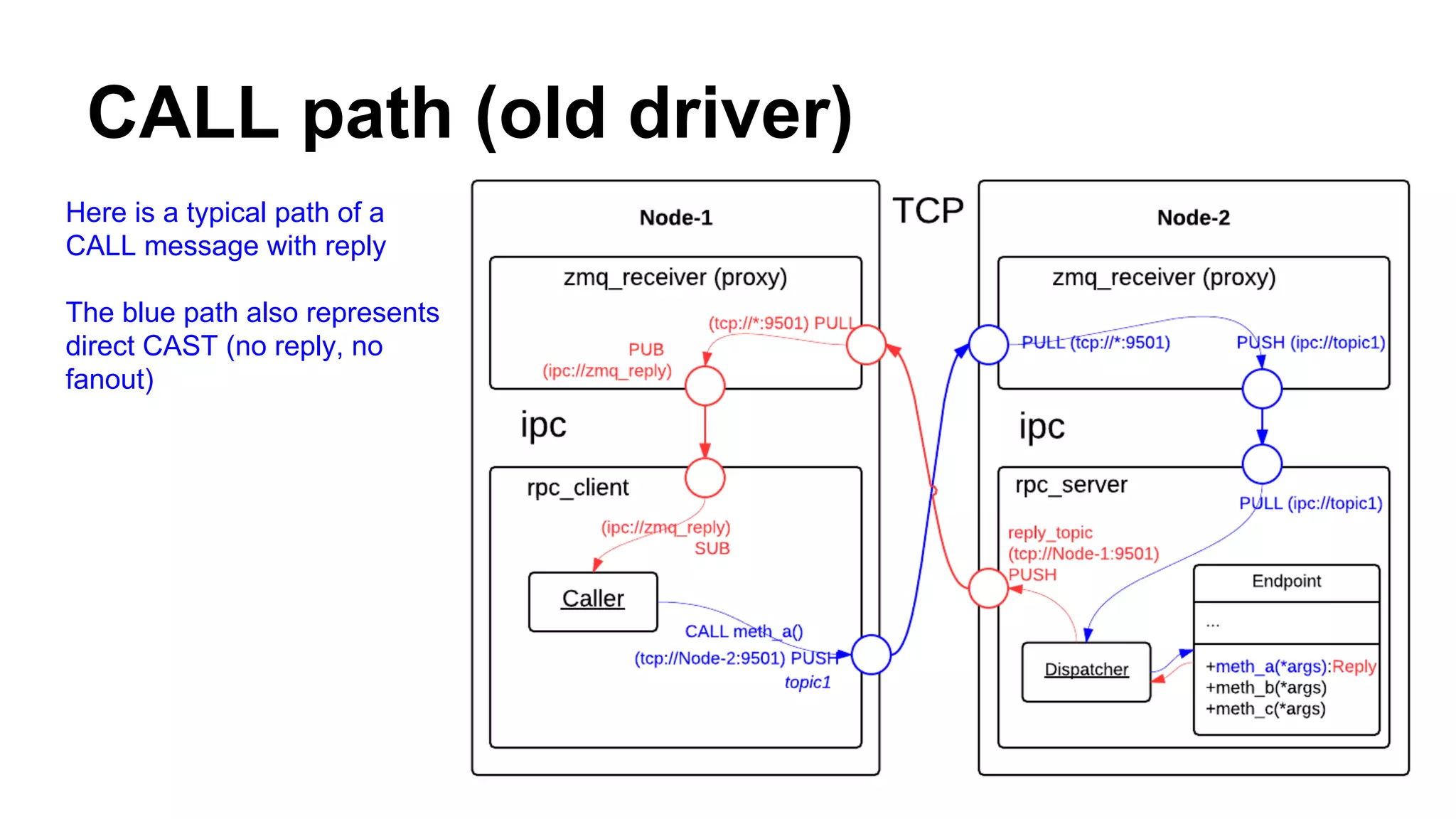

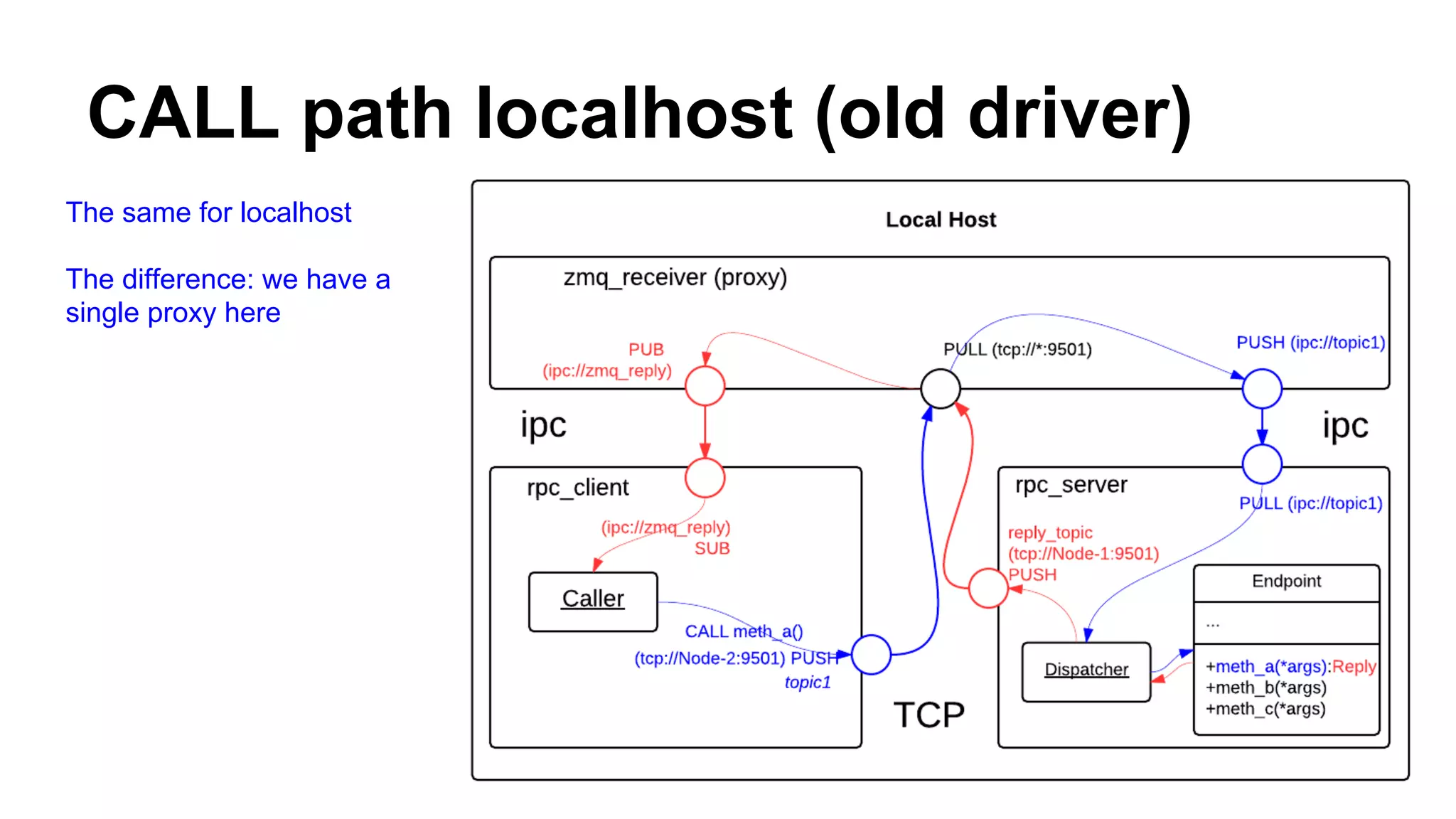

- The current zmq driver opens 6 sockets for each call. A new driver is proposed that simplifies this with a REQ/REP pattern, opening only 2 sockets per call.
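As a rough illustration of the REQ/REP idea, the sketch below uses pyzmq to make one call with exactly two sockets: a REQ socket on the caller side and a REP socket on the server side. This is not the proposed driver's code. The helper names (`serve_one`, `call`, `demo`), the JSON message layout, and the `inproc://rpc-demo` endpoint are all assumptions made for the example.

```python
import threading

import zmq


def serve_one(ctx, endpoint, ready):
    """Hypothetical server side: answer exactly one request on a REP socket."""
    rep = ctx.socket(zmq.REP)
    rep.bind(endpoint)
    ready.set()  # tell the caller the endpoint is bound
    request = rep.recv_json()
    # Toy dispatch: the only "method" this demo understands is addition.
    args = request["args"]
    rep.send_json({"result": args["x"] + args["y"]})
    rep.close()


def call(ctx, endpoint, method, **args):
    """Hypothetical client side: one REQ socket, one request, one reply."""
    req = ctx.socket(zmq.REQ)
    req.connect(endpoint)
    req.send_json({"method": method, "args": args})
    reply = req.recv_json()
    req.close()
    return reply["result"]


def demo():
    ctx = zmq.Context()
    endpoint = "inproc://rpc-demo"  # assumed endpoint; a real driver would use tcp://
    ready = threading.Event()
    server = threading.Thread(target=serve_one, args=(ctx, endpoint, ready))
    server.start()
    ready.wait()
    result = call(ctx, endpoint, "add", x=2, y=3)
    server.join()
    ctx.term()
    return result


if __name__ == "__main__":
    print(demo())
```

The REQ/REP pair enforces a strict send-then-receive order on both sides, which is what lets a single socket pair carry both the request and its reply.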

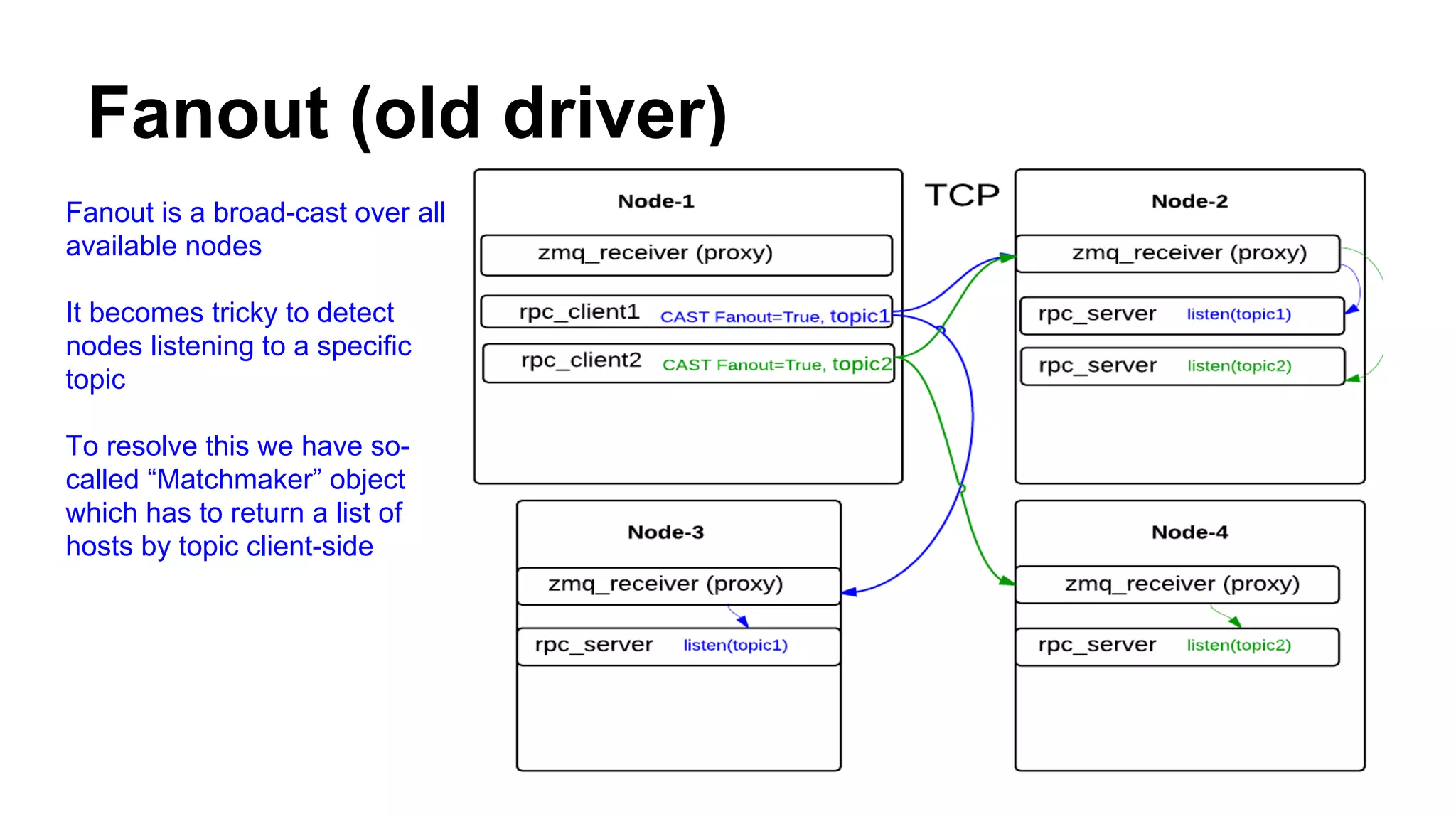

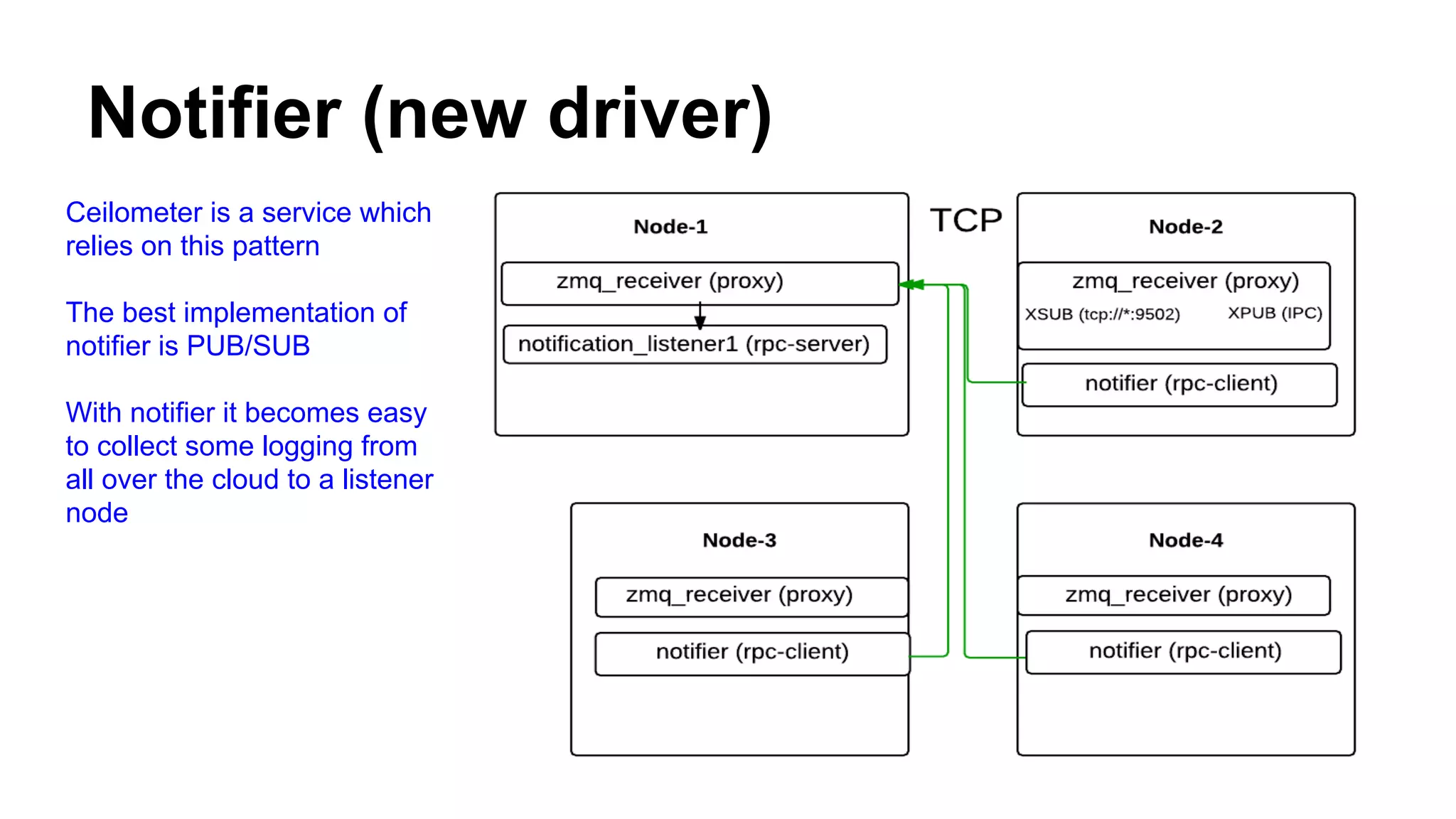

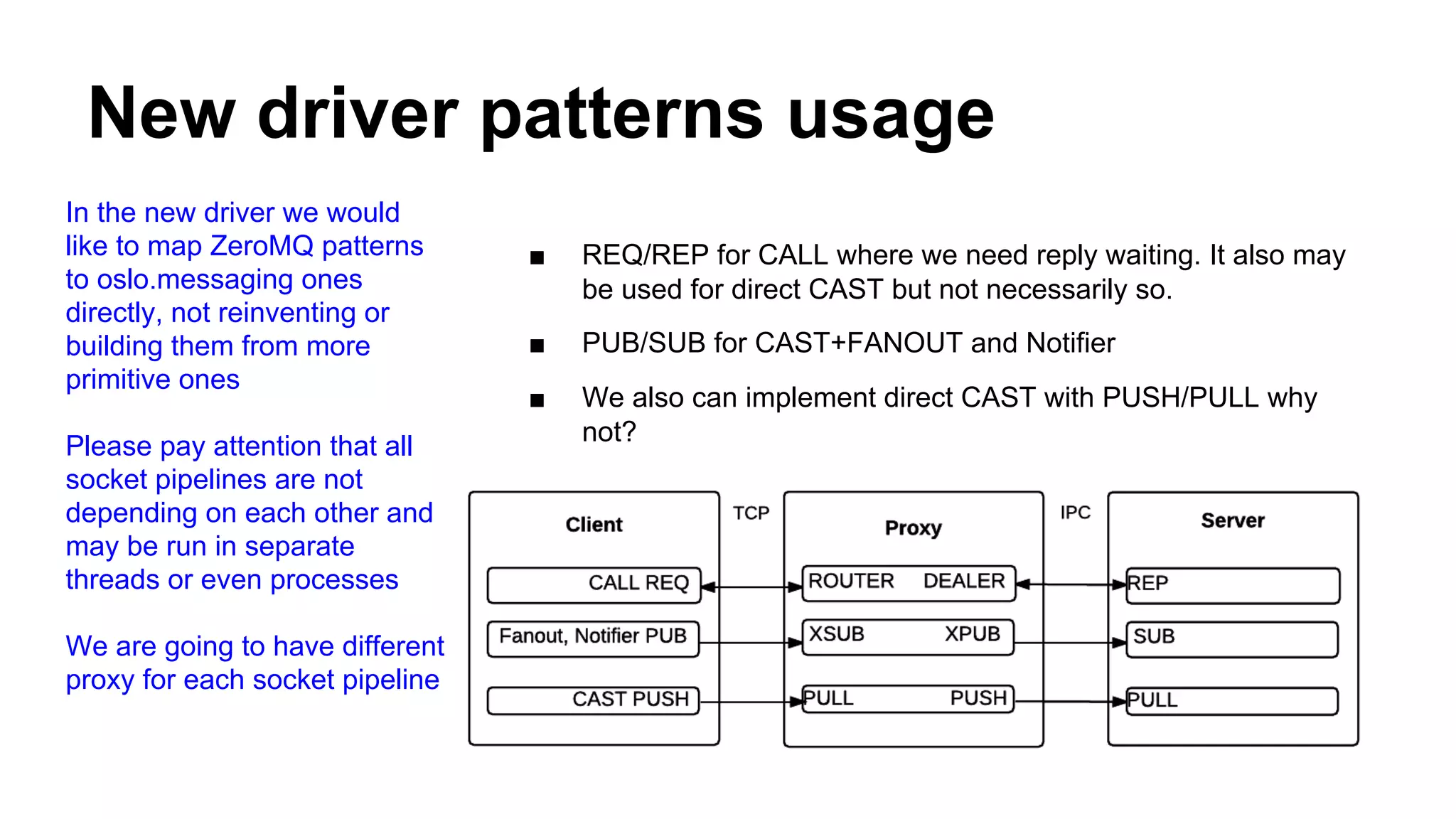

- The new driver would map zmq patterns such as PUB/SUB directly onto the oslo.messaging fanout and notification patterns, rather than building those patterns from more primitive sockets.
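To make the PUB/SUB mapping concrete, here is a minimal pyzmq sketch in which one PUB socket fans a message out to several SUB sockets. It only demonstrates the zmq pattern itself, not the driver's design. The `fanout` function, the topic name, and the `inproc://fanout-demo` endpoint are assumptions made for the example, and the resend loop is a demo-only workaround for the fact that a PUB socket drops messages for subscriptions it has not seen yet.

```python
import zmq


def fanout(topic, payload, n_subscribers=3, endpoint="inproc://fanout-demo"):
    """Hypothetical fanout: deliver one payload to every subscriber of a topic."""
    ctx = zmq.Context()
    pub = ctx.socket(zmq.PUB)
    pub.bind(endpoint)

    subs = []
    for _ in range(n_subscribers):
        sub = ctx.socket(zmq.SUB)
        sub.connect(endpoint)
        sub.setsockopt(zmq.SUBSCRIBE, topic)  # prefix match on the first frame
        subs.append(sub)

    received = [None] * n_subscribers
    # Demo-only: resend until every subscriber has seen the message at least once.
    for _ in range(50):
        pub.send_multipart([topic, payload])
        for i, sub in enumerate(subs):
            if received[i] is None and sub.poll(timeout=100):
                received[i] = sub.recv_multipart()[1]
        if all(item is not None for item in received):
            break

    # Discard anything still queued so that terminating the context cannot block.
    for sub in subs:
        sub.close(linger=0)
    pub.close(linger=0)
    ctx.term()
    return received


if __name__ == "__main__":
    print(fanout(b"notifications.info", b"hello"))
```

The publisher here never tracks its consumers individually; the socket type does the distribution, which is the simplification the bullet describes.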

- The new driver should also include advanced diagnostics, testing, logging, and documentation.