Docker Kubernetes Istio

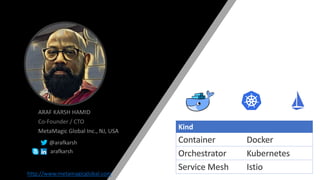

- 1. ARAF KARSH HAMID Co-Founder / CTO MetaMagic Global Inc., NJ, USA @arafkarsh arafkarsh http://www.metamagicglobal.com Kind

- 2. Docker / Kubernetes / Istio Containers Container Orchestration Service Mesh

- 3. Day 1 – Basic
  - Section 1, Docker: 12 Factor App Methodology, Docker Concepts, Images and Containers, Anatomy of a Dockerfile, Networking / Volume
  - Section 2, Kubernetes: Kubernetes Concepts, Namespace, Pods, ReplicaSet, Deployment, Service / Endpoints, Ingress, Rollout and Undo, Auto Scale
  - Section 3, Kubernetes – Container App Setup: Environment, Config Map, Pod Presets, Secrets
  - Section 4, Infrastructure Design Patterns: API Gateway, Load Balancer, Service Discovery, Config Server, Circuit Breaker, Service Aggregator
  - App 1 – HelloWorld (section 2): Hello World App, Multi Version Rollouts, Auto Scaling App

- 4. Day 2 – Kubernetes Advanced: Networking, Volumes, Logging & Helm Charts
  - Section 5, Kubernetes Networking – Packet Path: Docker / Kubernetes Networking, Pod to Pod Networking, Pod to Service Networking, Ingress and Egress – Internet
  - Section 6, Kubernetes Networking Advanced: Kubernetes IP Network; OSI | L2/3/7 | IP Tables | IPVS | BGP | VXLAN; Kube DNS | Proxy; LB, Cluster IP, Node Port; Ingress Controller
  - Section 11, Kubernetes Volumes: In-Tree & Out-of-Tree Volume Plugins, Container Storage Interface, CSI – Volume Life Cycle, Persistent Volume, Persistent Volume Claims, Storage Class
  - Section 13, Logging & Monitoring: Logging, Distributed Tracing, Jaeger / Grafana / Prometheus
  - Section 14, Helm Charts: Helm Charts Concepts, Package Charts, Install / Uninstall Charts, Manage Release Cycles
  - App 2 – Product App with Multiple Versions (section 6): Product App with Product Review Microservice

- 5. Day 3 – Network Security, Service Mesh and Best Practices
  - Section 7, Kubernetes Network Security Policies: Network Policy L3 / L4, Security Policy for Microservices, Weave / Calico / Cilium / Flannel
  - Section 8, Service Mesh – Istio: Istio Concepts / Sidecar Pattern, Envoy Proxy / Cilium Integration
  - Section 9, Istio Traffic Management: Gateway / Virtual Service, Destination Rule / Service Entry, AB Testing using Canary, Beta Testing using Canary
  - Section 10, Istio – Security and RBAC: Security, RBAC, Mesh Policy | Policy, Cluster RBAC Config, Service Role / Role Binding
  - Section 12, Kubernetes Advanced Concepts: Jobs / Cron Jobs, Quotas / Limits / QoS, Pod / Node Affinity, Pod Disruption Budget, Kubernetes Commands
  - Section 15, Best Practices: Docker Best Practices, Kubernetes Best Practices, Security Best Practices
  - App 3 – Shopping Portal (section 9): Shopping Portal App with 6 Microservices implementation

- 6. 12 Factor App Methodology (19-11-2019). Source: https://12factor.net/

  | # | Factor | Description |
  |---|---|---|
  | 1 | Codebase | One codebase tracked in revision control |
  | 2 | Dependencies | Explicitly declare dependencies |
  | 3 | Configuration | Configuration-driven apps |
  | 4 | Backing Services | Treat backing services like DB and cache as attached resources |
  | 5 | Build, Release, Run | Separate build and run stages |
  | 6 | Process | Execute the app as one or more stateless processes |
  | 7 | Port Binding | Export services with specific port binding |
  | 8 | Concurrency | Scale out via the process model |
  | 9 | Disposability | Maximize robustness with fast startup and graceful exit |
  | 10 | Dev / Prod Parity | Keep development, staging and production as similar as possible |
  | 11 | Logs | Treat logs as event streams |
  | 12 | Admin Process | Run admin tasks as one-off processes |

- 7. High Level Objectives: from creating a Docker container to deploying the container in a production Kubernetes cluster. All other activities revolve around the 8 points below.
  - Docker
    1. Create Docker images.
    2. Run Docker containers for testing.
    3. Push the images to a registry.
    4. Make the Docker image part of your code pipeline process.
  - Kubernetes
    1. Create Pods (containers) with Deployments.
    2. Create Services.
    3. Create traffic rules (Ingress / Gateway / Virtual Service / Destination Rules).
    4. Create External Services.
  - Slide No's: #22, #22, #22, #40-54, #57, #136-144, #55, #145

- 8. Docker Containers: Understanding Containers, Docker Images / Containers, Docker Networking

- 9. What's a Container? It looks like, walks like and runs like a Virtual Machine. Containers are a sandbox inside the Linux kernel: they share the kernel but each has a separate network stack, process stack, IPC stack, etc.

- 10. Servers / Virtual Machines / Containers (each stack listed bottom to top)
  - Server: Hardware, OS, Bins / Lib, then App 1, App 2, App 3
  - Type 1 Hypervisor: Hardware, Hypervisor, then one Guest OS with its own Bins / Lib per app
  - Type 2 Hypervisor: Hardware, Host OS, Hypervisor, then one Guest OS with its own Bins / Lib per app
  - Container: Hardware, Host OS, then Bins / Lib per app (no Guest OS)

- 11. Docker containers are Linux Containers (docker run / lxc-start)
  - cgroups: a kernel feature that groups processes and controls resource allocation (CPU, CPU sets, memory, disk, block I/O).
  - Namespaces: the real magic behind containers. They create barriers between processes. Different namespaces: PID, Net, IPC, MNT. Linux kernel namespaces were introduced between kernel 2.6.15 and 2.6.26.
  - Copy on Write (images): not a file system, not a VHD, basically a tar file. Has a hierarchy of arbitrary depth and fits into the Docker Registry.
  - Reference: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/6/html/resource_management_guide/ch01

- 12. Docker Container – Linux and Windows. Namespaces are the building blocks of containers.
  - Linux: Control Groups (cgroups); Namespaces (pid, net, ipc, mnt, uts); Layer Capabilities (union file systems: AUFS, btrfs, vfs)
  - Windows: Control Groups (Job Objects); Namespaces (Object Namespace, Process Table, Networking); Layer Capabilities (Registry, UFS-like extensions)

- 13. Docker Key Concepts
  - Docker images
    - A Docker image is a read-only template.
    - For example, an image could contain an Ubuntu operating system with Apache and your web application installed.
    - Images are used to create Docker containers.
    - Docker provides a simple way to build new images or update existing images, or you can download Docker images that other people have already created.
    - Docker images are the build component of Docker.
  - Docker containers
    - Docker containers are similar to a directory.
    - A Docker container holds everything that is needed for an application to run.
    - Each container is created from a Docker image.
    - Docker containers can be run, started, stopped, moved, and deleted.
    - Each container is an isolated and secure application platform.
    - Docker containers are the run component of Docker.
  - Docker registries
    - Docker registries hold images.
    - These are public or private stores to which you upload and from which you download images.
    - The public Docker registry is called Docker Hub.
    - It provides a huge collection of existing images for your use.
    - These can be images you create yourself, or you can use images that others have previously created.
    - Docker registries are the distribution component of Docker.

- 14. How Docker works (Docker Client, Docker Daemon, Docker Hub, Images, Containers)
  - Client commands: `docker search`, `docker build`, `docker container create`, `docker container run`, `docker container start`, `docker container stop`, `docker container ls`, `docker push`, `docker swarm`
  - Flow:
    1. Search for the container image.
    2. The Docker daemon sends the request to Docker Hub.
    3. The daemon downloads the image.
    4. The container is run from the image.

- 15. Linux Kernel: the host OS (Ubuntu) runs the Docker client and the Docker daemon, and the CentOS, Alpine and Debian containers all run on the host Linux kernel. All the containers have the same host OS kernel. If you require a specific kernel version, the host kernel needs to be updated.

- 16. Windows Kernel: the host OS (Windows 10) runs the Docker client and the Docker daemon, and the Nano Server and Server Core containers all run on the host Windows kernel. All the containers have the same host OS kernel. If you require a specific kernel version, the host kernel needs to be updated.

- 17. Docker Image structure
  - Images are read-only.
  - Multiple image layers stacked together give the final container.
  - Layers can be shared.
  - Layers are portable.
  - Example layers, bottom to top: Debian base image, Emacs, Apache, writable container layer.

- 18. Running a Docker Container
  - `docker pull ubuntu`: Docker pulls the image from the Docker Registry.
  - `ID=$(docker container run -d ubuntu /bin/bash -c "while true; do date; sleep 1; done")`: creates a container from the Ubuntu image, runs it in the background, and executes a bash script in it. The container ID is stored in `ID`.
  - `docker container logs $ID`: shows the output of the bash script running in the container.
  - `docker container ls`: lists the running containers.

- 19. Anatomy of a Dockerfile

  | Command | Description | Example |
  |---|---|---|
  | FROM | Sets the base image for subsequent instructions. A valid Dockerfile must have FROM as its first instruction. The image can be any valid image; it is especially easy to start by pulling an image from the public repositories. | `FROM ubuntu`, `FROM alpine` |
  | MAINTAINER | Sets the Author field of the generated images. | `MAINTAINER johndoe` |
  | LABEL | Adds metadata to an image. A LABEL is a key-value pair. To include spaces within a LABEL value, use quotes and backslashes as you would in command-line parsing. | `LABEL version="1.0"`, `LABEL vendor="M2"` |
  | RUN | Executes any commands in a new layer on top of the current image and commits the results. The resulting committed image is used for the next step in the Dockerfile. | `RUN apt-get install -y curl` |
  | ADD | Copies new files, directories or remote file URLs from `<src>` and adds them to the filesystem of the container at the path `<dest>`. | `ADD hom* /mydir/`, `ADD hom?.txt /mydir/` |
  | COPY | Copies new files or directories from `<src>` and adds them to the filesystem of the container at the path `<dest>`. | `COPY hom* /mydir/`, `COPY hom?.txt /mydir/` |
  | ENV | Sets the environment variable `<key>` to the value `<value>`. This value will be in the environment of all "descendant" Dockerfile commands and can be replaced inline in many as well. | `ENV JAVA_HOME /JDK8`, `ENV JRE_HOME /JRE8` |

- 20. Anatomy of a Dockerfile

  | Command | Description | Example |
  |---|---|---|
  | VOLUME | Creates a mount point with the specified name and marks it as holding externally mounted volumes from the native host or other containers. The value can be a JSON array, `VOLUME ["/var/log/"]`, or a plain string with multiple arguments, such as `VOLUME /var/log` or `VOLUME /var/log /var/db`. | `VOLUME /data/webapps` |
  | USER | Sets the user name or UID to use when running the image and for any RUN, CMD and ENTRYPOINT instructions that follow it in the Dockerfile. | `USER johndoe` |
  | WORKDIR | Sets the working directory for any RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow it in the Dockerfile. | `WORKDIR /home/user` |
  | CMD | There can only be one CMD instruction in a Dockerfile. If you list more than one CMD, only the last one takes effect. The main purpose of a CMD is to provide defaults for an executing container. These defaults can include an executable, or they can omit the executable, in which case you must specify an ENTRYPOINT instruction as well. | `CMD echo "This is a test." \| wc -` |
  | EXPOSE | Informs Docker that the container will listen on the specified network ports at runtime. Docker uses this information to interconnect containers using links and to determine which ports to expose to the host when using the `-P` flag with the docker client. | `EXPOSE 8080` |
  | ENTRYPOINT | Configures a container that will run as an executable. Command line arguments to `docker run <image>` are appended after all elements in an exec form ENTRYPOINT and override all elements specified using CMD. This allows arguments to be passed to the entry point, i.e., `docker run <image> -d` passes the `-d` argument to the entry point. You can override the ENTRYPOINT instruction using the `docker run --entrypoint` flag. | `ENTRYPOINT ["top", "-b"]` |

- 21. Docker Image: Dockerfile, Docker Container Management, Docker Images

- 22. Build Docker Containers as easy as 1-2-3
  1. Create Dockerfile
  2. Build Image
  3. Run Container

- 23. Build a Docker Java image
  1. Create your Dockerfile using FROM, RUN, ADD, WORKDIR, USER and ENTRYPOINT.
  2. Build the Docker image: `docker build -t org/java:8 .`
  3. Run the container: `docker container run -it org/java:8`

- 24. Docker Container Management
  - `ID=$(docker container run -d ubuntu /bin/bash)`: start the container and store its ID in the `ID` variable.
  - `docker container stop $ID`: stop the container using the container ID.
  - `docker container stop $(docker container ls -aq)`: stops all the containers.
  - `docker container rm $ID`: remove the container.
  - `docker container rm $(docker container ls -aq)`: remove all the containers (in Exited status).
  - `docker container prune`: remove all stopped containers.
  - `docker container run --restart=Policy -d -it ubuntu /bin/sh`: run with a restart policy. Policies = no / on-failure / always.
  - `docker container run --restart=on-failure:3 -d -it ubuntu /bin/sh`: restarts the container at most 3 times if a failure happens.
  - `docker container start $ID`: start the container.

- 25. Docker Container Management
  - `ID=$(docker container run -d -i ubuntu)`, then `docker container exec -it $ID /bin/bash`: start the container and store its ID in the `ID` variable, then inject a process into the running container.
  - `ID=$(docker container run -d -i ubuntu)`, then `docker container inspect $ID`: start the container and store its ID in the `ID` variable, then read the container's metadata.
  - Docker Commit
    - `docker container run -it ubuntu /bin/bash`: start the Ubuntu container.
    - `apt-get update` and `apt-get install -y apache2` (inside the container): install Apache.
    - `exit`: exit the container.
    - `docker container ls -a`: get the container ID (Ubuntu).
    - `docker container commit --author="name" --message="Ubuntu / Apache2" containerId apache2`: commit the container with a new name.
  - `docker container run --cap-drop=chown -it ubuntu /bin/sh`: prevents chown inside the container.
  - Source: https://github.com/meta-magic/kubernetes_workshop

- 26. Docker Image Commands
  - `docker login ….`: log into Docker Hub to push images.
  - `docker push image-name`: push the image to Docker Hub.
  - `docker image history image-name`: get the history of the Docker image.
  - `docker image inspect image-name`: get the Docker image details.
  - `docker image save --output=file.tar image-name`: save the Docker image as a tarball.
  - `docker container export --output=file.tar c79aa23dd2`: export the container to a file.
  - `docker image rm image-name`: remove the Docker image.
  - `docker rmi $(docker images | grep '^<none>' | tr -s " " | cut -d " " -f 3)`: remove the images whose repository is listed as `<none>`.

- 27. Build Docker Apache image
  1. Create your Dockerfile using FROM alpine, RUN, COPY, EXPOSE and ENTRYPOINT.
  2. Build the Docker image: `docker build -t org/apache2 .`
  3. Run the container and test it: `docker container run -d -p 80:80 org/apache2`, then `curl localhost`

- 28. Build Docker Tomcat image
  1. Create your Dockerfile using FROM alpine, RUN, COPY, EXPOSE and ENTRYPOINT.
  2. Build the Docker image: `docker build -t org/tomcat .`
  3. Run the container and test it: `docker container run -d -p 8080:8080 org/tomcat`, then `curl localhost:8080`

- 29. Docker Images in the GitHub Workshop (Ubuntu based)
  - Images shown: Ubuntu, JRE 8, JRE 11, Tomcat 8, Tomcat 9, Spring Boot, My App 1, My App 2, My App 3, My App 4
  - Build chain:
    - From Ubuntu build My Ubuntu
    - From My Ubuntu build My JRE8
    - From My Ubuntu build My JRE11
    - From My JRE8 build My TC8
    - From My TC8 build My App 1
    - From My JRE 11 build My Boot
    - From My Boot build My App 4

- 30. Docker Images in the GitHub Workshop (Alpine Linux based)
  - Images shown: Alpine Linux, JRE 8, JRE 11, Tomcat 8, Tomcat 9, Spring Boot, My App 1, My App 2, My App 3, My App 4
  - Build chain:
    - From Alpine build My Alpine
    - From My Alpine build My JRE8
    - From My Alpine build My JRE11
    - From My JRE8 build My TC8
    - From My TC8 build My App 1
    - From My JRE 11 build My Boot
    - From My Boot build My App 4

- 31. Docker Networking
  - Docker Networking – Bridge / Host / None
  - Docker Container sharing IP Address
  - Docker Communication – Node to Node
  - Docker Volumes

- 32. Docker Networking – Bridge / Host / None
  - `docker network ls`
  - `docker container run --rm --network=host alpine brctl show`
  - `docker network create tenSubnet --subnet 10.1.0.0/16`

- 33. Docker Networking – Bridge / Host / None (https://docs.docker.com/network/#network-drivers)
  - Bridge (default): `docker container run --rm alpine ip address`
  - Host: `docker container run --rm --net=host alpine ip address`
  - None (no network stack): `docker container run --rm --net=none alpine ip address`

- 34. Docker Containers Sharing IP Address: an IP container holds the network stack, and the Service 1, Service 2 and Service 3 containers share its IP address.
  - `docker container run --name ipctr -itd alpine`
  - `docker container run --rm --net container:ipctr alpine ip address`
  - `docker container exec ipctr ip address`

- 35. Docker Networking: Node to Node. The containers get the same IP addresses on different nodes, so node-to-node communication requires NAT.
  - Node 1: eth0 10.130.1.101/24, IP tables rules, Docker0 bridge 172.17.3.1/16 with Veth0 to Veth3
  - Node 2: eth0 10.130.1.102/24, IP tables rules, Docker0 bridge 172.17.3.1/16 with Veth0 to Veth3
  - Containers on each node (each with Veth: eth0):
    - Container 1: 172.17.3.2, Web Server, port 8080
    - Container 2: 172.17.3.3, Microservice, port 9002
    - Container 3: 172.17.3.4, Microservice, port 9003
    - Container 4: 172.17.3.5, Microservice, port 9004
  - Veth pairs connect each container (eth0) to the bridge (Veth0 to Veth3).

- 36. Docker Volumes. Data volumes are special directories on the Docker host.
  - `docker volume create hostvolume`
  - `docker volume ls`
  - `docker container run -it --rm -v hostvolume:/data alpine`
  - Inside the container: `echo "This is a test from the Container" > /data/data.txt`

- 37. Docker Volumes
  - `docker container run --rm -v $HOME/data:/data alpine`: mount a specific file path.

- 39. Kubernetes Architecture
  - Master Node (Control Plane)
    - API Server: port 443, RESTful, accepts yaml / json from `$ kubectl ….`
    - Scheduler
    - Controller Manager: Node Controller, End Point Controller, Deployment Controller, Pod Controller, ….
    - Cloud Controller: for the cloud providers to manage nodes, services, routes, volumes etc.
    - Cluster Store: etcd key-value store. Using yaml or json, declare the desired state of the app. State is stored in the cluster store. Self healing is done by Kubernetes using watch loops if the desired state is changed.
  - Worker Node 1
    - Kubelet (Node Manager): port 10255
    - Container Runtime Interface: gRPC / ProtoBuf. Allows multiple implementations of containers from v1.7.
    - Kube-Proxy (Network Proxy): TCP / UDP forwarding, IPTABLES / IPVS
  - Objects
    - Pod: the Pod itself is a Linux container, and the Docker container runs inside the Pod. Pods with single or multiple containers (Sidecar Pattern) share the Cgroup, Volumes and Namespaces of the Pod.
    - ReplicaSet (R): self healing, scalability, desired state
    - Deployment (D): updates and rollbacks, canary release
    - Service: a Pod IP address is dynamic, so communication should be based on a Service, which has a routable IP and DNS name. Example: Service with labels BE, 1.2, IP 15.1.2.100 and DNS a.b.com. Its Endpoints (EP) list the Pod IPs ...34, ...35, ...36.
    - Labels: labels (BE, 1.2) play a critical role in ReplicaSet, Deployment, Services etc. A Label Selector selects pods based on the labels. Example: three Pods labelled BE, 1.2 with IPs 10.1.2.34, 10.1.2.35 and 10.1.2.36.
    - Service types: Cluster IP, Node Port, Load Balancer, External Name. Traffic from the Internet passes the Firewall and enters the K8s cluster through Ingress.
    - Namespaces (Namespace1, Namespace2), Annotations, Names
  - Key Aspects: Declarative Model, Desired State
  - Declarative Model fields: apiVersion, kind, metadata, spec
  - Kind (Kubernetes): Pods, ReplicaSet, Deployment, Service, Endpoints, StatefulSet, Namespace, Resource Quota, Limit Range, Persistent Volume, Secrets
  - Kind (including Istio): Pod, ReplicaSet, Service, Deployment, Virtual Service, Gateway, SE, DR, Policy, MeshPolicy, RbacConfig, Prometheus, Rule, ListChecker …

- 40. Focus on the Declarative Model

- 41. Kubernetes Setup – Minikube: Ubuntu Installation (https://kubernetes.io/docs/tasks/tools/install-kubectl/)
  - `sudo snap install kubectl --classic`: install kubectl using the Snap package manager.
  - `kubectl version`: shows the current version of kubectl.
  - `curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.30.0/minikube-linux-amd64`, then `chmod +x minikube && sudo mv minikube /usr/local/bin/`: download and install Minikube.
  - Minikube provides a developer environment with a master and a single node installed inside Minikube, with all necessary add-ons such as DNS and an Ingress controller.
  - In a real-world production environment you will have the master installed (with a failover) and 'n' number of nodes in the cluster.
  - If you go with a cloud provider like Amazon EKS, the nodes will be created automatically based on the load.
  - Minikube is available for Linux, Mac OS and Windows.

- 42. Kubernetes Setup – Minikube: Windows and Mac OS Installation (https://kubernetes.io/docs/tasks/tools/install-kubectl/)
  - Windows
    - `C:\> choco install kubernetes-cli`: install kubectl using the Choco package manager.
    - `C:\> kubectl version`: shows the current version of kubectl.
    - `C:\> cd C:\users\youraccount`, then `C:\> mkdir .kube`: create the .kube directory.
    - `C:\> minikube-installer.exe`: install Minikube using the Minikube installer.
  - Mac OS
    - `brew install kubernetes-cli`: install kubectl using the brew package manager.
    - `kubectl version`: shows the current version of kubectl.
    - `brew update; brew cask install minikube`: install Minikube using Homebrew, or
    - `curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-darwin-amd64`, then `chmod +x minikube && sudo mv minikube /usr/local/bin/`: install Minikube using curl.

- 43. Kubernetes Minikube – Commands. All workshop examples source code: https://github.com/meta-magic/kubernetes_workshop
  - `minikube status`: shows the status of the minikube installation.
  - `minikube start`: start minikube.
  - `minikube stop`: stop minikube.
  - `minikube ip`: shows the minikube IP address.
  - `minikube addons list`: shows all the addons.
  - `minikube addons enable ingress`: enable ingress in minikube.
  - `minikube start --memory=8192 --cpus=4 --kubernetes-version=1.14.2`: start with 8 GB RAM and 4 cores.
  - `minikube dashboard`: access the Kubernetes Dashboard in minikube.
  - With the Cilium network driver:
    - `minikube start --network-plugin=cni --extra-config=kubelet.network-plugin=cni --memory=5120`
    - `kubectl create -n kube-system -f https://raw.githubusercontent.com/cilium/cilium/v1.3/examples/kubernetes/addons/etcd/standalone-etcd.yaml`
    - `kubectl create -f https://raw.githubusercontent.com/cilium/cilium/v1.3/examples/kubernetes/1.12/cilium.yaml`

- 44. K8s Setup – Master / Nodes: On Premise
  - Step 1, Cluster Machine Setup: run the cluster setup script, `k8s-1-cluster-machine-setup.sh`, to install Docker and Kubernetes on all the machines (master and worker nodes).
    1. Switch off swap
    2. Set a static IP on the network interface
    3. Add the IP to the hosts file
    4. Install Docker
    5. Install Kubernetes
  - Step 2, Master Setup: set up the Kubernetes master with a pod network.
    1. kubeadm init
    2. Install the CNI driver
    - Scripts: `k8s-2-master-setup.sh`, `k8s-3-cni-driver-install.sh`, and `k8s-3-cni-driver-uninstall.sh` (uninstalls the driver)
    - `kubectl get po --all-namespaces`: check the driver Pods.
  - Step 3, Node Setup
    - `n1$ kubeadm join --token t IP:Port`: add the worker node to the Kubernetes master.
    - `kubectl get nodes`: check all the nodes.
    - `kubectl get events -n namespace`: check events from the namespace.
  - Only if the firewall is blocking your Pod: `sudo ufw enable`, `sudo ufw allow 31100`, `sudo ufw allow 443`
  - All the above-mentioned shell scripts are available in the source code repository: https://github.com/meta-magic/metallb-baremetal-example

- 45. Kubernetes Setup – Master / Nodes
  - `kubeadm init`
  - `node1$ kubeadm join --token enter-token-from-kubeadm-cmd Node-IP:Port`: adds a Node.
  - `kubectl get nodes`: list all Nodes.
  - `kubectl cluster-info`: shows the cluster details.
  - `kubectl get namespace`: shows all the namespaces.
  - `kubectl config current-context`: shows the current context.
  - Create a set of Pods for the Hello World app with an external IP address (Imperative Model):
    - `kubectl run hello-world --replicas=7 --labels="run=load-balancer-example" --image=metamagic/hello:1.0 --port=8080`: creates a Deployment object and a ReplicaSet object with 7 replicas of the Hello-World Pod running on port 8080.
    - `kubectl expose deployment hello-world --type=LoadBalancer --name=hello-world-service`: creates a Service object that exposes the Deployment (Hello-World) with an external IP address.
    - `kubectl get deployments hello-world`: list all the Hello-World Deployments.
    - `kubectl describe deployments hello-world`: describe the Hello-World Deployments.
    - `kubectl get replicasets`: list all the ReplicaSets.
    - `kubectl describe replicasets`: describe the ReplicaSet.
    - `kubectl get services hello-world-service`: list the Service Hello-World-Service with Cluster IP and External IP.
    - `kubectl describe services hello-world-service`: describe the Service Hello-World-Service.
    - `kubectl get pods -o wide`: list all the Pods with internal IP addresses.
    - `kubectl delete services hello-world-service`: delete the Service Hello-World-Service.
    - `kubectl delete deployment hello-world`: delete the Hello-World Deployment.

- 46. 3 Fundamental Concepts
  1. Desired State
  2. Current State
  3. Declarative Model

- 47. Kubernetes Workload Portability (Cloud and On-Premise)
  - Goals
    1. Abstract away infrastructure details.
    2. Decouple the app deployment from the infrastructure (on-premise or cloud).
  - To help developers
    1. Write once, run anywhere (workload portability).
    2. Avoid vendor lock-in.

- 48. Kubernetes Getting Started
  - Namespace
  - Pods / ReplicaSet / Deployment
  - Service / Endpoints
  - Ingress
  - Rollout / Undo
  - Auto Scale

- 49. Kubernetes Commands – Namespace (Declarative Model)
  - Namespaces are used to group your teams and software into logical business groups.
  - A Service definition adds an entry in DNS with respect to its Namespace.
  - Not all objects live in a Namespace, e.g. Nodes, Persistent Volumes etc.
  - `kubectl get namespace`: list all the Namespaces.
  - `kubectl describe ns ns-name`: describe the Namespace.
  - `kubectl create -f app-ns.yml`: create the Namespace.
  - `kubectl apply -f app-ns.yml`: apply the changes to the Namespace.
  - `kubectl get pods --namespace=ns-name`: list the Pods from your namespace.
  - `kubectl config set-context $(kubectl config current-context) --namespace=your-ns`: switches the current context to your namespace (your-ns).
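
The namespace commands above read their definition from `app-ns.yml`. A minimal sketch of what such a file could contain, assuming the namespace is named `your-ns` as in the set-context command; the `team` label is a hypothetical illustration and is not taken from the workshop repository.

```yaml
# app-ns.yml (illustrative sketch; the actual workshop file may differ)
apiVersion: v1
kind: Namespace
metadata:
  name: your-ns            # the namespace name used with --namespace=your-ns
  labels:
    team: your-team        # hypothetical label for grouping by team
```

Creating it with `kubectl apply -f app-ns.yml` should make `your-ns` appear in `kubectl get namespace`.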

- 50. Kubernetes Pods
  - A Pod is a shared environment for one or more containers.
  - Every Pod in a Kubernetes cluster has a unique IP address, even Pods on the same Node.
  - The Pod is a pause container.
  - Atomic unit, from small to big: Container, Pod, Virtual Server.
  - `kubectl create -f app1-pod.yml`
  - `kubectl get pods`
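
As a rough illustration of the declarative model for a single-container Pod, the sketch below shows what `app1-pod.yml` might look like. The image `metamagic/hello:1.0` and port 8080 come from the imperative example earlier in the deck; the Pod name, container name and `app: hello` label are assumptions made for this example.

```yaml
# app1-pod.yml (illustrative sketch; names and labels are assumptions)
apiVersion: v1
kind: Pod
metadata:
  name: app1-pod
  labels:
    app: hello                     # hypothetical label, usable by selectors
spec:
  containers:
    - name: hello                  # hypothetical container name
      image: metamagic/hello:1.0   # image used in the imperative example
      ports:
        - containerPort: 8080      # port the app listens on
```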

- 51. Kubernetes Commands – Pods (Declarative Model)
  - `kubectl get pods`: list all the Pods.
  - `kubectl describe pods pod-name`: describe the Pod details.
  - `kubectl get pods -o json pod-name`: list the Pod details in JSON format.
  - `kubectl get pods -o wide`: list all the Pods with Pod IP addresses.
  - `kubectl describe pods -l app=name`: describe the Pod based on the label value.
  - `kubectl create -f app-pod.yml`: create the Pod.
  - `kubectl apply -f app-pod.yml`: apply the changes to the Pod.
  - `kubectl replace -f app-pod.yml`: replace the existing config of the Pod.
  - `kubectl exec pod-name ps aux`: execute commands in the first container in the Pod.
  - `kubectl exec -it pod-name sh`: log into the container shell.
  - `kubectl exec -it --container container-name pod-name sh`: log into the shell of a specific container.
  - `kubectl logs pod-name container-name`
  - By default kubectl executes commands in the first container in the Pod. If you are running multiple containers (Sidecar Pattern), pass the `--container` flag with the name of the container in the Pod. You can see the ordering of the containers and their names using the describe command.
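
To make the `--container` flag concrete, here is a hedged sketch of a two-container Pod following the Sidecar Pattern. Everything except the `metamagic/hello:1.0` image is an assumption for illustration: the container names, the `busybox` sidecar, the shared `emptyDir` volume and the log path are hypothetical.

```yaml
# app-pod.yml (illustrative sidecar sketch; names and paths are assumptions)
apiVersion: v1
kind: Pod
metadata:
  name: app-pod
  labels:
    app: hello
spec:
  volumes:
    - name: logs
      emptyDir: {}                 # scratch volume shared by both containers
  containers:
    - name: app                    # first container: the default target of kubectl exec
      image: metamagic/hello:1.0
      volumeMounts:
        - name: logs
          mountPath: /var/log/app  # hypothetical log directory
    - name: log-sidecar            # second container: needs --container to be targeted
      image: busybox
      command: ["sh", "-c", "touch /var/log/app/app.log; tail -f /var/log/app/app.log"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
```

With this Pod, `kubectl exec -it app-pod --container log-sidecar -- sh` would open a shell in the sidecar, while omitting `--container` targets `app`.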

- 52. Kubernetes ReplicaSet
  - Pods wrap around containers with benefits like shared location, secrets, networking etc.
  - A ReplicaSet wraps around Pods and brings in the replication requirements of the Pod.
  - A ReplicaSet defines 2 things:
    - Pod template
    - Desired number of replicas
  - What we want is the Desired State. Game On!

- 53. Kubernetes Commands – ReplicaSet (Declarative Model)
  - `kubectl get rs`: list all the ReplicaSets.
  - `kubectl describe rs rs-name`: describe the ReplicaSet details.
  - `kubectl get rs/rs-name`: get the ReplicaSet status.
  - `kubectl create -f app-rs.yml`: create the ReplicaSet, which will automatically create all the Pods.
  - `kubectl apply -f app-rs.yml`: applies new changes to the ReplicaSet, for example scaling the replicas from x to x + new value.
  - `kubectl delete rs/app-rs --cascade=false`: deletes the ReplicaSet. With `--cascade=true` all the Pods are deleted as well; with `--cascade=false` the Pods keep running and ONLY the ReplicaSet is deleted.
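
A ReplicaSet definition carries the two things named earlier: a Pod template and the desired number of replicas. The sketch below is one possible `app-rs.yml`, assuming the name `app-rs` from the delete command, a replica count of 3 chosen only for illustration, and a hypothetical `app: hello` label.

```yaml
# app-rs.yml (illustrative sketch; replica count and labels are assumptions)
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: app-rs
spec:
  replicas: 3                      # desired number of replicas
  selector:
    matchLabels:
      app: hello                   # must match the labels in the Pod template
  template:                        # Pod template
    metadata:
      labels:
        app: hello
    spec:
      containers:
        - name: hello
          image: metamagic/hello:1.0
          ports:
            - containerPort: 8080
```

Changing `replicas` and running `kubectl apply -f app-rs.yml` is the declarative way to scale.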

- 54. Kubernetes Commands – Deployment (Declarative Model)
  - Deployments manage ReplicaSets, and ReplicaSets manage Pods.
  - A Deployment is all about rolling updates, rollbacks and canary deployments.

- 55. Kubernetes Commands – Deployment (Declarative Model)
  - `kubectl get deploy app-deploy`: list the Deployment.
  - `kubectl describe deploy app-deploy`: describe the Deployment details.
  - `kubectl rollout status deployment app-deploy`: show the rollout status of the Deployment.
  - `kubectl rollout history deployment app-deploy`: show the rollout history of the Deployment.
  - `kubectl create -f app-deploy.yml`: creates the Deployment. A Deployment contains the Pod definition and its replica information. Based on the Pod info, the Deployment starts downloading the containers (Docker) and installs them based on the replication factor.
  - `kubectl apply -f app-deploy.yml --record`: updates the existing Deployment.
  - `kubectl rollout undo deployment app-deploy --to-revision=1` or `kubectl rollout undo deployment app-deploy --to-revision=2`: rolls back or forward to a specific version number of your app.
  - `kubectl scale deployment app-deploy --replicas=6`: scale up the Pods to 6 from the initial 2 Pods.
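
The Deployment commands above operate on `app-deploy.yml`. The following is a hedged sketch of such a manifest with 2 initial replicas, as mentioned in the scale example. The rolling update settings, the `app: hello` label and the CPU request are assumptions for illustration, not values from the workshop repository.

```yaml
# app-deploy.yml (illustrative sketch; strategy values and labels are assumptions)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-deploy
spec:
  replicas: 2                      # initial number of Pods
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                  # at most one extra Pod during an update
      maxUnavailable: 0            # keep all desired Pods available while updating
  selector:
    matchLabels:
      app: hello
  template:
    metadata:
      labels:
        app: hello
    spec:
      containers:
        - name: hello
          image: metamagic/hello:1.0   # change the tag and re-apply to trigger a rollout
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: 100m            # a CPU request is needed for CPU-based auto scaling
```

Editing the image tag and re-applying the file creates a new revision, which is what `kubectl rollout history` and `kubectl rollout undo` then work with.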

- 56. Kubernetes Services
  - Why do we need Services?
    - Accessing Pods from inside the cluster
    - Accessing Pods from outside
    - Autoscale brings Pods with new IP addresses or removes existing Pods.
    - Pod IP addresses are dynamic. A Service has a stable IP address.
  - A Service uses Labels to associate with a set of Pods.
  - Service Types
    1. Cluster IP (default)
    2. Node Port
    3. Load Balancer
    4. External Name

- 57. Kubernetes Commands – Service / Endpoints (Declarative Model)
  - `kubectl get svc`: list all the Services.
  - `kubectl describe svc app-service`: describe the Service details.
  - `kubectl get ep app-service`: list the status of the Endpoints.
  - `kubectl describe ep app-service`: describe the Endpoint details.
  - `kubectl create -f app-service.yml`: create a Service for the Pods. The Service creates a routable IP address and DNS name for the Pods selected by the labels defined in the Service. Endpoints are created automatically based on the labels in the selector.
  - `kubectl delete svc app-service`: deletes the Service.
  - Service types
    - Cluster IP (default): exposes the Service on an internal IP in the cluster. This type makes the Service reachable only from within the cluster.
    - Node Port: exposes the Service on the same port of each selected Node in the cluster using NAT. Makes a Service accessible from outside the cluster using `<NodeIP>:<NodePort>`. Superset of ClusterIP.
    - Load Balancer: creates an external load balancer in the current cloud (if supported) and assigns a fixed, external IP to the Service. Superset of NodePort.
    - External Name: exposes the Service using an arbitrary name (specified by externalName in the spec) by returning a CNAME record with the name. No proxy is used. This type requires v1.7 or higher of kube-dns.
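
A sketch of how a Service selects Pods by label, assuming the Service name `app-service` from the commands above and Pods that carry a hypothetical `app: hello` label and listen on port 8080. The Service port 80 is also an assumption.

```yaml
# app-service.yml (illustrative sketch; selector label and ports are assumptions)
apiVersion: v1
kind: Service
metadata:
  name: app-service
spec:
  type: ClusterIP                  # default type; NodePort or LoadBalancer expose it externally
  selector:
    app: hello                     # Pods with this label become the Endpoints
  ports:
    - port: 80                     # stable port on the Service IP
      targetPort: 8080             # container port on the selected Pods
```

After creating it, `kubectl get ep app-service` should list the IP addresses of the Pods that match the selector.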

- 58. Kubernetes Ingress (Declarative Model) An Ingress is a collection of rules that allow inbound connections to reach the cluster Services. At the time of this deck (November 2019) Ingress was still a beta feature in Kubernetes. Ingress Controllers are pluggable; the Ingress Controller in AWS is linked to the AWS Load Balancer. Source: https://kubernetes.io/docs/concepts/services-networking/ingress/#ingress-controllers

- 60. Kubernetes Auto Scaling Pods (Declarative Model) • You can declare the auto scaling requirements for every Deployment (Microservice). • Kubernetes automatically adds Pods based on CPU utilization. • On cloud infrastructure, Kubernetes automatically adds Nodes when the existing Nodes run out of capacity. In the example the CPU utilization target is kept at 10% to demonstrate the auto scaling feature. Ideally it should be around 80% - 90%.

- 61. Kubernetes Horizontal Pod Auto Scaler • Deploy your app with auto scaling parameters: $ kubectl autoscale deployment appname --cpu-percent=50 --min=1 --max=10 and check it with $ kubectl get hpa • Generate load to see auto scaling in action: $ kubectl run -it podshell --image=metamagicglobal/podshell (hit enter for the command prompt), then $ while true; do wget -q -O- http://yourapp.default.svc.cluster.local; done • To attach to the running container: $ kubectl attach podshell-name -c podshell -it
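
The scaling decision behind `--cpu-percent=50 --min=1 --max=10` can be approximated with the ratio rule given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric), clamped to the min and max. The sketch below is a simplification under that assumption; it ignores the tolerance band, stabilization windows and Pod readiness that the real controller applies, and `desired_replicas` is a hypothetical helper name.

```python
import math


def desired_replicas(current_replicas: int,
                     current_cpu_percent: float,
                     target_cpu_percent: float = 50,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Ratio-based replica count, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# 2 Pods averaging 90% CPU against a 50% target scale up to 4 Pods.
print(desired_replicas(2, 90))
# A heavy spike is capped by --max=10.
print(desired_replicas(4, 200))
# Low load scales down, but never below --min=1.
print(desired_replicas(3, 10))
```

This also shows why the demo uses a very low target: with a 10% target even the small `wget` loop pushes the ratio above 1 and triggers a scale-up quickly.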

- 62. Auto Scaling - Advanced (Declarative Model) CPU utilization kept at 10% to demonstrate the auto scaling feature. Ideally it should be around 80% - 90%.

- 63. Kubernetes App Setup • Environment • Config Map • Pod Preset • Secrets

- 64. Container App Setup • Config Map: Detach the configuration information of the App from the container image. Config Map lets you create multiple profiles for your Dev, QA and Prod environments. • Secret: All the database configurations like passwords, certificates, OAuth tokens, etc., can be stored in Secrets. • Pod Preset: Helps you create common configuration which can be injected into a Pod based on a criteria (selected using labels). For example, SMTP config, SMS config. • Environment: The Environment option lets you pass any info to the Pod through environment variables.

- 65. Kubernetes Pod Environment Variables Source: https://github.com/meta-magic/kubernetes_workshop

- 66. Kubernetes Adding Config to Pod Config Maps allow you to decouple configuration artifacts from image content to keep containerized applications portable. Source: https://kubernetes.io/docs/tasks/configure-pod-container/configure-pod-configmap/

- 67. Kubernetes Pod Presets A Pod Preset is an API resource for injecting additional runtime requirements into a Pod at creation time. You use label selectors to specify the Pods to which a given Pod Preset applies. Using a Pod Preset allows pod template authors to not have to explicitly provide all information for every pod. This way, authors of pod templates consuming a specific service do not need to know all the details about that service. Source: https://kubernetes.io/docs/concepts/workloads/pods/podpreset/

- 68. Kubernetes Pod Secrets Objects of type Secret are intended to hold sensitive information, such as passwords, OAuth tokens, and ssh keys. Putting this information in a Secret is safer and more flexible than putting it verbatim in a pod definition or in a docker image. Source: https://kubernetes.io/docs/concepts/configuration/secret/

- 69. Infrastructure Design Patterns • API Gateway • Load balancer • Service discovery • Circuit breaker • Service Aggregator • Let-it-crash pattern

- 70. API Gateway Design Pattern – Software Stack [Diagram: on the left, a monolithic app (UI Layer, Web Services, Business Logic, Database Layer over a single Database, with Shopping Cart, Order, Customer and Product modules) behind a Firewall; users access the monolithic app directly. On the right, users reach an API Gateway through the Firewall. Each microservice has its own Load Balancer, Circuit Breaker, UI Layer, Web Services, Business Logic and Database Layer: the Product Microservice (Product SE, MySQL DB) on a 4 node cluster and the Customer Microservice (Redis DB) on a 2 node cluster.] The API Gateway (Reverse Proxy Server) routes the traffic to the appropriate Microservices (Load Balancers).

- 71. API Gateway – Kubernetes Implementation [Diagram: Users, Firewall, Load Balancer, then the API Gateway (Ingress) routing /customer, /product, /cart and /order, with routing based on Layer 3, 4 and 7. Kubernetes objects: the Customer, Product and Review Services each act as an internal load balancer over their EndPoints to three Pods (Deployment / Replica / Pod) spread across Nodes N1 to N4. Service-to-service calls are resolved through Kube DNS. Data stores: MySQL DB, Redis DB, Mongo DB.]

- 72. API Gateway – Kubernetes / Istio [Diagram: Users, Firewall, Load Balancer, then the API Gateway (Istio Virtual Service) routing /customer, /product, /auth and /order. Istio objects: Virtual Service, Destination Rules, the Istio Control Plane (P, M, C) and an Istio Sidecar (Envoy) next to every Pod. Kubernetes objects: the Customer, Product and Review Services each act as an internal load balancer over their EndPoints to three Pods (Deployment / Replica / Pod) spread across Nodes N1 to N4. Service-to-service calls are resolved through Kube DNS. Data stores: MySQL Pod, Redis DB, Mongo DB.]

- 73. Load Balancer Design Pattern [Diagram: Users, Firewall, API Gateway, then one Load Balancer and Circuit Breaker (CB = Hystrix) in front of each microservice stack (UI Layer, Web Services, Business Logic, Database Layer): the Product Microservice (Product SE, MySQL DB) on a 4 node cluster and the Customer Microservice (Redis DB) on a 2 node cluster.] The API Gateway (Reverse Proxy Server) routes the traffic to the appropriate Microservices (Load Balancers). Load Balancer Rules 1. Round Robin 2. Based on Availability 3. Based on Response Time
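
The three load balancer rules can be sketched as follows. This is an illustrative toy, not the algorithm of any particular load balancer: the `RoundRobin` class, the `pick_fastest` helper and the backend names are hypothetical.

```python
import itertools


class RoundRobin:
    """Rule 1: hand out backends in a fixed rotation."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)


def pick_fastest(response_times_ms: dict, healthy: set) -> str:
    """Rules 2 and 3: among available backends, choose the lowest response time."""
    candidates = {b: t for b, t in response_times_ms.items() if b in healthy}
    if not candidates:
        raise RuntimeError("no healthy backend available")
    return min(candidates, key=candidates.get)


rr = RoundRobin(["product-1", "product-2", "product-3"])
print([rr.pick() for _ in range(4)])

# product-3 is the fastest but is marked unavailable, so product-2 wins.
print(pick_fastest({"product-1": 120, "product-2": 40, "product-3": 15},
                   healthy={"product-1", "product-2"}))
```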

- 74. Ingress Load Balancer – Kubernetes Model [Diagram: Users, Firewall, Load Balancer, then the API Gateway (Ingress) routing /customer, /product and /cart. Kubernetes objects: the Product Service and the Customer Service each act as an internal load balancer over their EndPoints to three Pods (Product 1 to 3 on Nodes N4, N3, N1; Customer 1 to 3 on Nodes N1, N2, N2), each with its own DB.] • The Load Balancer receives the (request) packet from the user and picks a Virtual Machine in the cluster to do the internal load balancing. • Kube Proxy, using IP Tables, redirects the packet according to the internal load balancing rules. • The packet enters the Kubernetes cluster and reaches the Node of that specific Pod, and the Node hands the packet over to the Pod.

- 75. Service Discovery – Netflix Network Stack Model [Diagram: Users, Firewall, API Gateway, then a Load Balancer and Circuit Breaker in front of the Product Microservice (MySQL DB, 4 node cluster) and the Customer Microservice (UI Layer, Web Services, Business Logic, Database Layer, Redis DB, 2 node cluster), all connected to a Service Discovery server.] • In this model developers write code in every Microservice to register with the Netflix Eureka Service Discovery Server. • The Load Balancers and the API Gateway also register with Service Discovery. • Service Discovery informs the Load Balancers about the instance details (IP addresses).

- 76. Ingress Service Discovery – Kubernetes Model [Diagram: Users, Firewall, then the API Gateway (Ingress) routing /customer, /product and /cart. Kubernetes objects: the Product Service and the Customer Service each act as an internal load balancer over their EndPoints to three Pods (Product 1 to 3 on Nodes N4, N3, N1; Customer 1 to 3 on Nodes N1, N2, N2), each with its own DB. Service calls are resolved through Kube DNS.] Service definition from the Kubernetes perspective: • The API Gateway (Reverse Proxy Server) doesn't know the instances (IP addresses) of the individual Pods. It knows the IP address of the Service defined for each Microservice (Customer / Product etc.). • Services handle the dynamic IP addresses of the Pods. The Service Endpoints automatically discover new Pods based on labels.

- 77. Circuit Breaker Pattern [Diagram: Users, Firewall, then the Reverse Proxy Server (Ingress) routing /ui and /productms, with routing based on Layer 3, 4 and 7. Kubernetes objects: the UI, Product and Review Services each act as an internal load balancer over their EndPoints to three Pods (Deployment / Replica / Pod) spread across Nodes N1 to N4, plus a MySQL Pod. Service calls are resolved through Kube DNS.] If Product Review is not available, the Product service returns the product details with the message "review not available".
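
The fallback described on this slide can be sketched as a small circuit breaker. This is a minimal illustration of the pattern, not how Hystrix or Istio implement it: the `CircuitBreaker` class, its thresholds, `fetch_reviews` and `product_details` are all hypothetical names and values.

```python
import time


class CircuitBreaker:
    """Open the circuit after max_failures errors; retry after reset_after seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()      # open: fail fast, do not call the service
            self.opened_at = None      # half-open: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0              # success closes the circuit again
        return result


def fetch_reviews():
    # Stand-in for a call to the Review service that is currently down.
    raise ConnectionError("review service unavailable")


def product_details(breaker):
    reviews = breaker.call(fetch_reviews, lambda: "review not available")
    return {"product": "sample product", "reviews": reviews}


print(product_details(CircuitBreaker()))
```

Once the breaker is open, the Product service stops waiting on the failing Review service and answers immediately with the fallback message.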

- 78. Service Aggregator Pattern [Diagram: Users, Firewall, then the Reverse Proxy Server (Ingress) routing /newservice to the News Service, whose three News Pods (Nodes N4, N3, N2) call the National, Politics and Sports Services through Kube DNS. Each downstream Service acts as an internal load balancer over its EndPoints to three Pods (Deployment / Replica / Pod) and has its own DB.] News Service Portal • News category wise Microservices • Aggregator Microservice to aggregate all categories of news. Auto Scaling • Sports events (IPL / NBA) spike the traffic for the Sports Microservice. • Auto scaling happens for both the News and Sports Microservices.

- 80. Service Aggregator Pattern [Diagram: Users, Firewall, then the Reverse Proxy Server (Ingress) routing /artist to the Artist Service, whose three Artist Pods (Nodes N4, N3, N2) call the Discography, Play Count and Playlist Services through Kube DNS. Each downstream Service acts as an internal load balancer over its EndPoints to three Pods (Deployment / Replica / Pod) and has its own DB.] Spotify Microservices • The Artist Microservice combines all the details from Discography, Play Count and Playlists. Auto Scaling • The Artist Microservice and its downstream Microservices scale automatically depending on the load factor.

- 81. Config Store – Spring Config Server [Diagram: Users, Firewall, API Gateway, then a Load Balancer and Circuit Breaker in front of the Product Microservice (MySQL DB, 4 node cluster) and the Customer Microservice (UI Layer, Web Services, Business Logic, Database Layer, Redis DB, 2 node cluster), all connected to a Config Server.] • In this model developers write code in every Microservice to download the required configuration from a central server (e.g. Spring Config Server in the Java world). • This creates an explicit dependency: the order in which the services come up becomes critical.

- 82. Software Network Stack Vs Network Stack

  | # | Pattern | Software Stack Java | Software Stack .NET | Kubernetes |
  |---|---|---|---|---|
  | 1 | API Gateway | Zuul Server | SteelToe | Istio Envoy |
  | 2 | Service Discovery | Eureka Server | SteelToe | Kube DNS |
  | 3 | Load Balancer | Ribbon Server | SteelToe | Istio Envoy |
  | 4 | Circuit Breaker | Hystrix | SteelToe | Istio |
  | 5 | Config Server | Spring Config | SteelToe | Secrets, Env - K8s Master |
  | | Web Site | https://netflix.github.io/ | https://steeltoe.io/ | https://kubernetes.io/ |

  Software Stack: the developer needs to write code to integrate with the Software Stack (programming language specific). For example, every microservice needs to subscribe to Service Discovery when the Microservice boots up.

  Kubernetes: Service Discovery in Kubernetes is based on the labels assigned to Pods and Services, and the Endpoints (IP addresses) are dynamically mapped (DNS) based on the labels.

- 83. Let-it-Crash Design Pattern – Erlang Philosophy • The Erlang view of the world is that everything is a process and that processes can interact only by exchanging messages. • A typical Erlang program might have hundreds, thousands, or even millions of processes. • Letting processes crash is central to Erlang. It’s the equivalent of unplugging your router and plugging it back in – as long as you can get back to a known state, this turns out to be a very good strategy. • To make that happen, you build supervision trees. • A supervisor will decide how to deal with a crashed process. It will restart the process, or possibly kill some other processes, or crash and let someone else deal with it. • Two models of concurrency: Shared State Concurrency and Message Passing Concurrency. The programming world went one way (toward shared state). The Erlang community went the other way. • Languages such as C, Java, C++, and so on, have the notion that there is this stuff called state and that we can change it. The moment you share something, you need to bring in a Mutex, a locking mechanism. • Erlang has no mutable data structures (that’s not quite true, but it’s true enough). No mutable data structures = No locks. No mutable data structures = Easy to parallelize.

- 84. Let-it-Crash Design Pattern 1. The idea of Messages as the first class citizens of a system has been rediscovered by the Event Sourcing / CQRS community, along with a strong focus on domain models. 2. Event Sourced Aggregates are a way to model the Processes and NOT things. 3. Each component MUST tolerate a crash and restart at any point in time. 4. All interaction between the components must tolerate that peers can crash. This means ubiquitous use of timeouts and Circuit Breakers. 5. Each component must be strongly encapsulated so that failures are fully contained and cannot spread. 6. All requests sent to a component MUST be as self-describing as is practical so that processing can resume with as little recovery cost as possible after a restart.
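
The supervisor idea (let the worker crash, restart it, and escalate when restarts do not help) can be sketched as below. This is a toy in Python, not Erlang/OTP semantics and not how Kubernetes restarts Pods; `supervise`, `make_flaky_worker` and the restart limit are hypothetical.

```python
def supervise(worker, max_restarts=3):
    """Run worker; on a crash restart it, up to max_restarts times.

    Returns (result, restarts). If the limit is exceeded, the exception is
    re-raised so that a parent supervisor can deal with it.
    """
    restarts = 0
    while True:
        try:
            return worker(), restarts
        except Exception:
            if restarts >= max_restarts:
                raise              # escalate: crash and let someone else handle it
            restarts += 1          # let it crash, then start it again


def make_flaky_worker(crashes_before_success):
    """Build a worker that crashes a given number of times, then succeeds."""
    state = {"remaining": crashes_before_success}

    def worker():
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise RuntimeError("worker crashed")
        return "done"

    return worker


# The worker crashes twice; the supervisor restarts it both times.
print(supervise(make_flaky_worker(2)))
```

Note that the worker contains no defensive error handling at all; recovery lives entirely in the supervisor, which is the division of labour the slide describes.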

- 85. Let-it-Crash: Comparison Erlang Vs. Microservices Vs. Monolithic Apps

  | # | Aspect | Erlang Philosophy | Micro Services Architecture | Monolithic Apps (Java, C++, C#, Node JS ...) |
  |---|---|---|---|---|
  | 1 | Perspective | Everything is a Process | Event Sourced Aggregates are a way to model the Process and NOT things. | Things (defined as Objects) and Behaviors |
  | 2 | Crash Recovery | Supervisor will decide how to handle the crashed process | Kubernetes Manager monitors all the Pods (Microservices) and their Readiness and Health. K8s terminates the Pod if the health is bad and spawns a new Pod. The Circuit Breaker Pattern is used to handle the fallback mechanism. | Not available. Most monolithic Apps are stateful, crash recovery needs to be handled manually, and all languages other than Erlang focus on defensive programming. |
  | 3 | Concurrency | Message Passing Concurrency | Domain Events for state changes within a Bounded Context & Integration Events for external Systems. | Mostly Shared State Concurrency |
  | 4 | State | Stateless: mostly immutable structures | Immutability is handled through Event Sourcing along with Domain Events and Integration Events. | Predominantly stateful with mutable structures and Mutex as a locking mechanism |
  | 5 | Citizen | Messages | Messages are 1st class citizens through the Event Sourcing / CQRS pattern with a strong focus on Domain Models | Mutable Objects and a strong focus on Domain Models and synchronous communication. |

- 86. Day 1 - Summary Setup 1. Setting up Kubernetes Cluster • 1 Master and • 2 Worker nodes Getting Started 1. Create Pods 2. Create ReplicaSets 3. Create Deployments 4. Rollouts and Rollbacks 5. Create Service 6. Create Ingress 7. App Auto Scaling App Setup 1. Secrets 2. Environments 3. ConfigMap 4. PodPresets On Premise Setup 1. Setting up External Load Balancer using Metal LB 2. Setting up nginx Ingress Controller Infrastructure Design Patterns 1. API Gateway 2. Service Discovery 3. Load Balancer 4. Config Server 5. Circuit Breaker 6. Service Aggregator Pattern 7. Let It Crash Pattern Running Shopping Portal App 1. UI 2. Product Service 3. Product Review Service 4. MySQL Database

- 87. K8s Packet Path • Kubernetes Networking • Compare Docker and Kubernetes Networking • Pod to Pod Networking within the same Node • Pod to Pod Networking across the Node • Pod to Service Networking • Ingress - Internet to Service Networking • Egress – Pod to Internet Networking

- 88. Kubernetes Networking Mandatory requirements for Network implementation 1. All Pods can communicate with all other Pods without using Network Address Translation (NAT). 2. All Nodes can communicate with all the Pods without NAT. 3. The IP that is assigned to a Pod is the same IP the Pod sees itself as, and the same IP all other Pods in the cluster see it as.

- 89. Docker Networking Vs. Kubernetes Networking [Diagram, Docker: Node 1 (eth0 10.130.1.101/24) and Node 2 (eth0 10.130.1.102/24) each run a Docker0 Bridge on 172.17.3.1/16 with IP tables rules and Veth0 to Veth3. On both nodes the containers get the same addresses: Container 1 172.17.3.2 (Web Server, 8080), Container 2 172.17.3.3 (Microservice, 9002), Container 3 172.17.3.4 (Microservice, 9003), Container 4 172.17.3.5 (Microservice, 9004). Same IP range, NAT required.] [Diagram, Kubernetes: the same two nodes run an L2 Bridge with IP tables rules and Veth0 to Veth3. Pods on Node 1 use 172.17.3.2 to 172.17.3.5 and Pods on Node 2 use 172.17.3.6 to 172.17.3.9, with the same Web Server / Microservice roles and ports. Unique IP range, netfilter, IP Tables / IPVS, no NAT required.]

- 90. Kubernetes Networking 3 Networks Networks 1. Physical Network 2. Pod Network 3. Service Network CIDR Ranges (RFC 1918) 1. 10.0.0.0/8 (one Class A network) 2. 172.16.0.0/12 (16 contiguous Class B networks) 3. 192.168.0.0/16 (256 contiguous Class C networks) Best practice: keep the address ranges of the three networks separate.
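
The "keep the address ranges separate" advice can be checked with Python's standard `ipaddress` module. The sketch below assumes the three RFC 1918 private blocks (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16); the helper names and the sample physical / Pod / Service ranges are illustrative, loosely based on the addresses used in the diagrams of this deck.

```python
import ipaddress

# The three private address blocks defined by RFC 1918.
RFC1918 = [ipaddress.ip_network(cidr)
           for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def is_private_rfc1918(addr: str) -> bool:
    """True if addr falls inside one of the RFC 1918 blocks."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in RFC1918)


def ranges_overlap(*cidrs: str) -> bool:
    """True if any two of the given CIDR ranges overlap."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    return any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])


print(is_private_rfc1918("172.17.4.1"))   # inside 172.16.0.0/12
print(is_private_rfc1918("172.0.0.1"))    # outside every RFC 1918 block

# Physical, Pod and Service networks kept apart:
print(ranges_overlap("10.130.1.0/24", "172.17.0.0/16", "192.168.0.0/16"))
# A Pod range carved out of the physical range would collide:
print(ranges_overlap("10.0.0.0/8", "10.130.1.0/24"))
```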

- 91. Kubernetes Networking 3 Networks 1. Physical Network 2. Pod Network 3. Service Network [Diagram: Physical network: Node 1 (eth0 10.130.1.102/24), Node 2 (eth0 10.130.1.103/24), Node 3 (eth0 10.130.1.104/24). Pod network: Node 1 hosts Pod 1 (172.17.4.1) and Pod 2 (172.17.4.2) behind veth0 / veth1, Node 2 hosts Pod 1 (172.17.5.1), Node 3 hosts Pod 1 (172.17.6.1). Service network: one Service with VIP 192.168.1.2/16 and three End Points (EP).] End Points handle the dynamic IP addresses of the Pods selected by a Service based on Pod labels. The Virtual IP doesn’t have any physical network card or system attached.

- 92. Kubernetes: Pod to Pod Networking inside a Node By default Linux has a single network namespace, and all the processes in that namespace share the network stack. If you create a new namespace, all the processes running in that namespace get their own network stack, routes, firewall rules, etc. • Create a namespace: $ ip netns add namespace1 (a mount point for namespace1 is created under /var/run/netns) • List namespaces: $ ip netns [Diagram: Node 1 (eth0 10.130.1.101/24) with a root network namespace containing an L2 Bridge (10.17.3.1/16), forwarding tables, Kube Proxy and veth0 / veth1, connected to eth0 of Pod 1 (Container 1 and Container 2, 10.17.3.2) and eth0 of Pod 2 (Container 1, 10.17.3.3). The Bridge implements ARP to discover the link-layer MAC address.] 1. Pod 1 sends the packet to its eth0, which is connected to veth0. 2. The Bridge resolves the destination with the ARP protocol. 3. The Bridge sends the packet to veth1. 4. veth1 forwards the packet directly to Pod 2 through its eth0. This entire communication happens inside the host, so the data transfer speed is NOT limited by the Ethernet card speed.

- 93. Kubernetes: Pod to Pod Networking Across Node [Diagram: Node 1 (eth0 10.130.1.101/24, root network namespace, L2 Bridge 10.17.3.1/16, veth0 / veth1, forwarding tables, Kube Proxy) hosts Pod 1 (10.17.3.2) and Pod 2 (10.17.3.3). Node 2 (eth0 10.130.1.102/24, root network namespace, L2 Bridge 10.17.4.1/16, veth0, Kube Proxy) hosts Pod 3 (10.17.4.1). Packet: Src-IP:Port Pod1:17711, Dst-IP:Port Pod3:80.] 1. Pod 1 sends the packet to its eth0, which is connected to veth0. 2. The Bridge tries to resolve the destination with the ARP protocol, and ARP fails because no device on this bridge has that IP. 3. On failure, the Bridge sends the packet to eth0 of Node 1. 4. The packet leaves eth0 and enters the network, which routes it to Node 2. 5. The packet enters the root namespace of Node 2 and is routed to the L2 Bridge. 6. veth0 forwards the packet to eth0 of Pod 3.

- 94. Kubernetes: Pod to Service to Pod – Load Balancer [Diagram: Node 1 (eth0 10.130.1.101/24, root network namespace, L2 Bridge 10.17.3.1/16, veth0 / veth1, forwarding tables, Kube Proxy) hosts Pod 1 (10.17.3.2) and Pod 2 (10.17.3.3). Node 2 (eth0 10.130.1.102/24, root network namespace, L2 Bridge 10.17.4.1/16, veth0, Kube Proxy) hosts Pod 3 (10.17.4.1). Packet before rewrite: Src-IP:Port Pod1:17711, Dst-IP:Port Service1:80. Packet after rewrite: Src-IP:Port Pod1:17711, Dst-IP:Port Pod3:80.] 1. Pod 1 sends the packet to its eth0, which is connected to veth0. 2. The Bridge tries to resolve the destination with the ARP protocol, and ARP fails because no device on this bridge has that IP. 3. On failure, the Bridge gives the packet to Kube Proxy. 4. The packet goes through the IP Tables rules installed by Kube Proxy, which rewrite the Dst-IP with the Pod 3 IP. IPVS does the cluster load balancing directly on the node, and the packet is given to eth0 of Node 1. 5. The packet leaves eth0 of Node 1 and enters the network, which routes it to Node 2. 6. The packet enters the root namespace of Node 2 and is routed to the L2 Bridge. 7. veth0 forwards the packet to eth0 of Pod 3.

- 95. Kubernetes Pod to Service to Pod – Return Journey [Diagram: Node 1 (eth0 10.130.1.101/24, root network namespace, L2 Bridge 10.17.3.1/16, veth0 / veth1, forwarding tables, Kube Proxy) hosts Pod 1 (10.17.3.2) and Pod 2 (10.17.3.3). Node 2 (eth0 10.130.1.102/24, root network namespace, L2 Bridge 10.17.4.1/16, veth0, Kube Proxy) hosts Pod 3 (10.17.4.1). Packet before rewrite: Src-IP:Port Pod3:80, Dst-IP:Port Pod1:17711. Packet after rewrite: Src-IP:Port Service1:80, Dst-IP:Port Pod1:17711.] 1. Pod 3 receives the data from Pod 1 and sends the reply back with Source as Pod 3 and Destination as Pod 1. 2. The Bridge tries to resolve the destination with the ARP protocol, and ARP fails because no device on this bridge has that IP. 3. On failure, the Bridge gives the packet to eth0 of Node 2. 4. The packet leaves eth0 of Node 2 and enters the network, which routes it to Node 1 (Dst = Pod 1). 5. The packet goes through the IP Tables rules installed by Kube Proxy, which rewrite the Src-IP with the Service IP. Kube Proxy gives the packet to the L2 Bridge. 6. The L2 Bridge makes the ARP call and hands the packet over to veth0. 7. veth0 forwards the packet to eth0 of Pod 1.
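
The forward rewrite (Service IP replaced by a Pod IP) and the return rewrite (Pod IP replaced by the Service IP) can be modelled as a small connection-tracking table. This is only a conceptual sketch of DNAT with conntrack, not the actual iptables or IPVS machinery; the `ServiceNat` class is hypothetical, and the addresses are the ones used in the diagrams (Pod 1 10.17.3.2, Pod 3 10.17.4.1, Service VIP 192.168.1.2).

```python
class ServiceNat:
    """Toy model of the destination rewrite done on the client's node."""

    def __init__(self, service, backends):
        self.service = service        # (ip, port) of the Service virtual IP
        self.backends = backends      # list of (ip, port) Pod endpoints
        self.conntrack = {}           # (client, backend) -> original destination
        self._next = 0

    def outbound(self, src, dst):
        """Forward path: replace the Service address with a chosen Pod address."""
        if dst != self.service:
            return src, dst
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        self.conntrack[(src, backend)] = dst   # remember how to undo the rewrite
        return src, backend

    def inbound(self, src, dst):
        """Return path: restore the Service address as the source."""
        original = self.conntrack.get((dst, src))
        return (original, dst) if original else (src, dst)


pod1 = ("10.17.3.2", 17711)
pod3 = ("10.17.4.1", 80)
service1 = ("192.168.1.2", 80)

nat = ServiceNat(service1, [pod3])
print(nat.outbound(pod1, service1))   # destination becomes Pod 3
print(nat.inbound(pod3, pod1))        # source becomes the Service again
```

From Pod 1's point of view the reply comes from the Service address it originally called, so the client never needs to know which Pod served the request.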

- 96. Kubernetes: Internet to Pod [Diagram: Client / User, then the Ingress Load Balancer in front of several VMs. Node X (eth0 10.130.1.102/24, root network namespace, L2 Bridge 10.17.4.1/16, veth0, Kube Proxy) hosts Pod 8 (Container 1, 10.17.4.1). Packet rewrites: Src Client IP, Dst App Dst; then Src Client IP, Dst VM-IP; then Src Client IP, Dst Pod IP.] 1. The client connects to the published domain of the App. 2. Once the Ingress Load Balancer receives the packet, it picks a VM (K8s Node). 3. Once inside the VM, IP Tables knows how to redirect the packet to the Pod using the internal load balancing rules installed into the cluster by Kube Proxy. 4. The traffic enters the Kubernetes cluster and reaches Node X (10.130.1.102). 5. Node X gives the packet to the L2 Bridge. 6. The L2 Bridge makes the ARP call and hands the packet over to veth0. 7. veth0 forwards the packet to eth0 of Pod 8.

- 97. Kubernetes: Pod to Internet [Diagram: Node 1 (VM, eth0 10.130.1.101/24, root network namespace, L2 Bridge 10.17.3.1/16, veth0 / veth1, forwarding tables, Kube Proxy) hosts Pod 1 (10.17.3.2) and Pod 2 (10.17.3.3); beyond the node sit the Gateway and Google. Packet rewrites: Src Pod1, Dst Google; then Src VM-IP, Dst Google; then Src Ex-IP, Dst Google.] 1. Pod 1 sends the packet to its eth0, which is connected to veth0. 2. The Bridge tries to resolve the destination with the ARP protocol, and ARP fails because no device on this bridge has that IP. 3. On failure, the Bridge gives the packet to IP Tables. 4. The Gateway would reject the Pod IP because it recognizes only the VM IP, so the source IP is replaced with the VM-IP. 5. The packet enters the network and is routed to the Internet Gateway. 6. The packet reaches the Gateway, which replaces the VM-IP (internal) with an external IP. 7. The packet reaches the external site (Google). On the way back the packet follows the same path, and every source IP rewrite is undone, so each layer sees the address it understands: the VM-IP on the network and the Pod IP within the Pod namespace.

- 98. Kubernetes Networking Advanced • Kubernetes IP Network • OSI Layer | L2 | L3 | L4 | L7 | • IP Tables | IPVS | BGP | VXLAN • Kubernetes DNS • Kubernetes Proxy • Kubernetes Load Balancer, Cluster IP, Node Port • Kubernetes Ingress • Kubernetes Ingress – Amazon Load Balancer • Kubernetes Ingress – Metal LB (On Premise)

- 99. Kubernetes Network Requirements 1. IPAM (IP Address Management) & life cycle management of network devices 2. Connectivity and Container Network 3. Route Advertisement

- 101. Networking Glossary • Netfilter (Packet Filtering in Linux): Software that does packet filtering, NAT and other packet mangling. • IP Tables: Allows the admin to configure netfilter for managing IP traffic. • ConnTrack: Built on top of netfilter to handle connection tracking. • IPVS (IP Virtual Server): Implements transport layer load balancing as part of the Linux kernel. It is similar to IP Tables, is based on the netfilter hook function, and uses a hash table for the lookup. • Border Gateway Protocol: BGP is a standardized exterior gateway protocol designed to exchange routing and reachability information among autonomous systems (AS) on the Internet. The protocol is often classified as a path vector protocol but is sometimes also classed as a distance-vector routing protocol. Some of the well known & mandatory attributes are AS Path, Next Hop and Origin. • L2 Bridge (Software Switch): Network devices called switches (or bridges) are responsible for connecting several network links to each other, creating a LAN. Major components of a network switch are a set of network ports, a control plane, a forwarding plane, and a MAC learning database. The set of ports is used to forward traffic between other switches and end-hosts in the network. The control plane of a switch is typically used to run the Spanning Tree Protocol, which calculates a minimum spanning tree for the LAN, preventing physical loops from crashing the network. The forwarding plane is responsible for processing input frames from the network ports and making a forwarding decision on which network port or ports the input frame is forwarded to.
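
The interplay between the MAC learning database and the forwarding plane of an L2 bridge can be sketched as follows. This is a teaching toy (no ageing, no VLANs, no Spanning Tree); the `LearningBridge` class, the port names and the short MAC strings are hypothetical.

```python
class LearningBridge:
    """Minimal learning switch: learn source MACs, forward or flood frames."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}                     # MAC learning database: MAC -> port

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is forwarded to."""
        self.mac_table[src_mac] = in_port       # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            return sorted(self.ports - {in_port})   # unknown destination: flood
        return [] if out_port == in_port else [out_port]


bridge = LearningBridge(["veth0", "veth1", "eth0"])
print(bridge.receive("veth0", "aa", "bb"))   # bb unknown: flooded to the other ports
print(bridge.receive("veth1", "bb", "aa"))   # aa was learned on veth0
print(bridge.receive("veth0", "aa", "bb"))   # bb is now known on veth1
```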

- 102. Networking Glossary • Layer 2 Networking: Layer 2 is the Data Link Layer (OSI Model) providing node to node data transfer. Layer 2 deals with delivery of frames between 2 adjacent nodes on a network. Ethernet is an example of Layer 2 networking, with MAC represented as a sub layer. Flannel uses L3 with VXLAN (L2) networking. • Layer 3 Networking: Layer 3’s primary concern involves routing packets between hosts on top of the layer 2 connections. IPv4, IPv6, and ICMP are examples of Layer 3 networking protocols. Calico uses L3 networking. • Layer 4 Networking: The transport layer controls the reliability of a given link through flow control. • Layer 7 Networking: Application layer networking (HTTP, FTP etc.). This is the closest layer to the end user. The Kubernetes Ingress Controller is an L7 Load Balancer. • VXLAN Networking: Virtual Extensible LAN, used to help large cloud deployments by encapsulating L2 frames within UDP datagrams. VXLAN is similar to VLAN (which has a limitation of 4K network IDs). VXLAN is an encapsulation and overlay protocol that runs on top of existing underlay networks. VXLAN can have 16 million network IDs. • Overlay Networking: An overlay network is a virtual, logical network built on top of an existing network. Overlay networks are often used to provide useful abstractions on top of existing networks and to separate and secure different logical networks. • Source Network Address Translation: SNAT refers to a NAT procedure that modifies the source address of an IP packet. • Destination Network Address Translation: DNAT refers to a NAT procedure that modifies the destination address of an IP packet.

- 103. VXLAN Encapsulation: Underlay Network [Diagram: Node / Server 1 (eth0 10.130.1.102, subnet 10.130.1.0/24) and Node / Server 2 (eth0 10.130.2.187, subnet 10.130.2.0/24) are connected through Switch, Router, Switch. Each node runs a VSWITCH (Virtual Switch) serving Customer 1 and Customer 2, with 172.17.4.1 on Node 1 and 172.17.5.1 on Node 2.]

- 104. VXLAN Encapsulation: Overlay Network [Diagram: the same two nodes; each VSWITCH (Virtual Switch) now has a VTEP (Virtual Tunnel End Point).] VXLAN encapsulates L2 frames into UDP packets and tunnels them over L3. This means no specialized hardware is required, so overlay networks can be created purely in software. VLAN = 4094 networks (2 reserved). VNI = 16 million networks (24-bit ID).

- 105. VXLAN Encapsulation: Overlay Network, ARP [Diagram: the same two nodes with VSWITCH and VTEP. Labels on the paths: ARP Broadcast on each node, Multicast between the VTEPs, ARP Unicast on the way back.]

- 106. VXLAN Encapsulation: Overlay Network, Node 1 to Node 2 with VNI 100 [Diagram: on each node the two tenant interfaces carry MACs B1 / Y1 (Node 1, 172.17.4.1) and B2 / Y2 (Node 2, 172.17.5.1). Inner frame: Src 172.17.4.1 (B1 MAC), Dst 172.17.5.1 (B2 MAC). Outer packet between the VTEPs: Src 10.130.1.102, Dst 10.130.2.187, Src UDP Port dynamic, Dst UDP Port 4789, VNI 100. The inner frame is unchanged before and after the tunnel.] VNI: Virtual Network Identifier.

- 107. VXLAN Encapsulation: Overlay Network, Node 2 to Node 1 with VNI 100 [Diagram: the return path. Inner frame: Src 172.17.5.1 (B2 MAC), Dst 172.17.4.1 (B1 MAC). Outer packet between the VTEPs: Src 10.130.2.187, Dst 10.130.1.102, Src UDP Port dynamic, Dst UDP Port 4789, VNI 100.]

- 108. VXLAN Encapsulation: Overlay Network, Node 1 to Node 2 with VNI 200 [Diagram: the second tenant. Inner frame: Src 172.17.4.1 (Y1 MAC), Dst 172.17.5.1 (Y2 MAC). Outer packet between the VTEPs: Src 10.130.1.102, Dst 10.130.2.187, Src UDP Port dynamic, Dst UDP Port 4789, VNI 200.]

- 109. VXLAN Encapsulation: Overlay Network [Diagram: both tenant networks side by side over the same pair of VTEPs, separated by VNI 100 and VNI 200.]
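
The VXLAN slides above show an inner L2 frame carried inside an outer UDP packet to port 4789 and tagged with a 24-bit VNI. The 8-byte VXLAN header itself, as laid out in RFC 7348 (a flags byte with the I bit set, 24 reserved bits, the 24-bit VNI, 8 reserved bits), can be sketched with Python's `struct` module. The helper names are hypothetical, the inner frame is a placeholder byte string, and the outer Ethernet / IP / UDP headers are left out.

```python
import struct

VXLAN_UDP_PORT = 4789      # destination UDP port shown in the diagrams
VNI_BITS = 24


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for the given Virtual Network Identifier."""
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08                                   # I flag: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def parse_vni(packet: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    word0, word1 = struct.unpack("!II", packet[:8])
    if not (word0 >> 24) & 0x08:
        raise ValueError("VNI flag not set")
    return word1 >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix the inner L2 frame with the VXLAN header (outer UDP/IP omitted)."""
    return vxlan_header(vni) + inner_frame


payload = encapsulate(b"inner-ethernet-frame", vni=100)
print(vxlan_header(100).hex())      # 0800000000006400
print(parse_vni(payload))           # 100
print(2 ** VNI_BITS)                # 16777216 possible network IDs
```

The last line is where the "16 million networks" figure comes from, compared with the 12-bit VLAN ID space of 4096 values.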

- 110. Kubernetes Network Support (19-11-2019)

  | Features | L2 | L3 | Overlay | Cloud |
  |---|---|---|---|---|
  | Description | Pods communicate using L2 Bridge | Pod traffic is routed in the underlay network | Pod traffic is encapsulated and uses the underlay for reachability | Pod traffic is routed in the Cloud Virtual Network |
  | Technology | Linux L2 Bridge, L2 ARP | Routing Protocol, BGP | VXLAN | Amazon EKS, Google GKE |
  | Encapsulation | No | No | Yes | No |
  | Example | Cilium | Calico, Cilium | Flannel, Weave, Cilium | AWS EKS, Google GKE, Microsoft ACS |

  Source: https://github.com/meta-magic/kubernetes_workshop

- 111. Kubernetes Networking: 3 Networks
  1. Physical Network: Node 1 eth0 10.130.1.102/24, Node 2 eth0 10.130.1.103/24, Node 3 eth0 10.130.1.104/24.
  2. Pod Network (Virtual Network: L2 / L3 / Overlay / Cloud): Node 1 hosts Pod 1 (172.17.4.1) and Pod 2 (172.17.4.2), Node 2 hosts Pod 1 (172.17.5.1), Node 3 hosts Pod 1 (172.17.6.1). Each Pod's eth0 is connected to the Node through a veth interface.
  3. Service Network: the Service has a VIP 192.168.1.2/16 and one Endpoint (EP) per Pod.

  Endpoints handle the dynamic IP addresses of the Pods selected by a Service based on Pod Labels. The Virtual IP doesn't have any physical network card or system attached.

  Source: https://github.com/meta-magic/kubernetes_workshop
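
To make the three address spaces concrete, here is a small sketch that classifies an IP address by network. The Node and Service prefixes are taken from the slide; the Pod prefix 172.17.0.0/16 is an assumption that merely covers the Pod addresses shown, and `classify` is an illustrative helper, not a Kubernetes API:

```python
import ipaddress

NETWORKS = {
    "physical": ipaddress.ip_network("10.130.1.0/24"),   # Node eth0 addresses
    "pod": ipaddress.ip_network("172.17.0.0/16"),        # assumed Pod CIDR
    "service": ipaddress.ip_network("192.168.0.0/16"),   # Service VIP range
}


def classify(address: str) -> str:
    """Return which of the three networks an address belongs to."""
    ip = ipaddress.ip_address(address)
    for name, network in NETWORKS.items():
        if ip in network:
            return name
    return "unknown"
```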

- 112. Kubernetes DNS / Core DNS (v1.11 onwards)

  Kubernetes DNS avoids hard-coding IP addresses in the configuration or application codebase. It configures the Kubelet running on each Node so that containers use the DNS Service IP to resolve names to IP addresses.

  A DNS Pod consists of three separate containers:
  1. Kube DNS: watches the Kubernetes Master for changes in Services and Endpoints.
  2. DNS Masq: adds DNS caching to improve performance.
  3. Sidecar: provides a single health check endpoint to perform health checks for Kube DNS and DNS Masq.

  - The DNS Pod is exposed as a Kubernetes Service with a Cluster IP.
  - DNS state is stored in etcd.
  - Kube DNS uses a library that converts etcd name-value pairs into DNS records.
  - Core DNS is similar to Kube DNS but has a plugin architecture. From v1.11, Core DNS is the default DNS server.

  Source: https://github.com/meta-magic/kubernetes_workshop
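
As an illustration of the names that cluster DNS resolves, the sketch below builds the conventional Service name `<service>.<namespace>.svc.<cluster-domain>` and the candidates a Pod would try for a short name. It assumes the default cluster domain `cluster.local` and the usual search path; the service and namespace names used with it are hypothetical:

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_candidates(name: str, pod_namespace: str,
                      cluster_domain: str = "cluster.local") -> list:
    """Names tried, in order, when a Pod looks up a short name."""
    suffixes = [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]
    return [f"{name}.{suffix}" for suffix in suffixes]
```

With this, application code can refer to `product-review` (a hypothetical Service name) instead of an IP address, and the first candidate resolves to a Service in the Pod's own namespace.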

- 113. Kube Proxy

  From a design perspective, Kube-proxy comes closest to the reverse proxy model. It can also work as a load balancer for the Service's Pods. It can do simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding across a set of backends.

  - When a Service of type "ClusterIP" is created, the system assigns a virtual IP to it; there is no network interface or MAC address associated with it.
  - Kube-Proxy uses netfilter and iptables in the Linux kernel for routing, including the VIP.

  Proxy types:
  - A tunnelling proxy passes unmodified requests from clients to servers on some network. It works as a gateway that lets packets from one network reach servers on another network.
  - A forward proxy is an Internet-facing proxy that mediates client connections to web resources / servers on the Internet.
  - A reverse proxy is an internal-facing proxy. It takes incoming requests and redirects them to some internal server without the client knowing which one it is accessing.

  Kube-Proxy can work in 3 modes:
  1. User space
  2. IPTABLES
  3. IPVS

  The modes differ in how Kube-Proxy interacts with user space and kernel space when routing traffic to a Service and then load balancing across its Pods.

  Load balancing between backend Pods is done by the round-robin algorithm by default. Other supported algorithms (IPVS scheduler names):
  1. lc: least connection
  2. dh: destination hashing
  3. sh: source hashing
  4. sed: shortest expected delay
  5. nq: never queue
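
The difference between the default round-robin choice and the "lc" (least connection) choice can be sketched in a few lines. This is only an illustration of the two selection rules, not kube-proxy or IPVS code; the class names and backend labels are made up for the example:

```python
from itertools import cycle


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = cycle(list(backends))

    def pick(self):
        return next(self._cycle)


class LeastConnection:
    """Pick the backend that currently has the fewest open connections."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def pick(self):
        # Ties go to the backend listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        """Call when a connection to the backend closes."""
        self.active[backend] -= 1
```

Round robin ignores load entirely, while least connection needs the proxy to track connection state per backend.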

- 114. Kubernetes Cluster IP, Load Balancer, & Node Port
  - Cluster IP: the default Service type, used when access is required only within the cluster. Use it to expose a Service to other Pods in the same cluster. The Service is accessed through the Kubernetes proxy.
  - NodePort: opens a port on the Node when a Pod needs to be accessed from outside the cluster. It has a few limitations, so NodePort is not advised:
    - Only one Service per port
    - Ports must be between 30,000 and 32,767
    - HTTP traffic is exposed on a non-standard port
    - Changing the Node / VM IP is difficult
  - LoadBalancer: the standard way to expose a Service to the internet. It assigns an external IP that acts as a load balancer for the Service. All traffic on the port is forwarded to the Service, with no filtering and no routing. LoadBalancer uses a cloud service, or MetalLB on-premise.
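
Two of the NodePort limitations listed above (the fixed port range and one Service per port) can be expressed as a small check. `NodePortAllocator` is a hypothetical helper written for this illustration, not part of any Kubernetes client library:

```python
NODE_PORT_RANGE = range(30000, 32768)  # 30,000 to 32,767 inclusive


class NodePortAllocator:
    """Track which Service owns which NodePort."""

    def __init__(self):
        self._owners = {}

    def claim(self, port: int, service: str) -> int:
        if port not in NODE_PORT_RANGE:
            raise ValueError(f"port {port} is outside the NodePort range")
        owner = self._owners.setdefault(port, service)
        if owner != service:
            raise ValueError(f"port {port} is already used by {owner}")
        return port
```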

- 115. K8s Cluster IP, Node Port, Load Balancer and Ingress (diagram summary)
  - Cluster IP: Traffic reaches the Service and its Pods through Kube Proxy inside the Kubernetes Cluster.
  - Node Port: Traffic enters through port NP: 30000, opened on every VM, and is forwarded to the Service and its Pods.
  - Load Balancer: Traffic enters through a Load Balancer and is forwarded to the Service and its Pods.
  - Ingress (does smart routing): Traffic enters through the Ingress / Load Balancer, which routes /order to the Order Pods and /product to the Product Pods. Review Pods run in the same Kubernetes Cluster.

- 116. Ingress

  An Ingress can be configured to give Services:
  1. Externally-reachable URLs
  2. Load balanced traffic
  3. SSL / TLS termination
  4. Name based virtual hosting

  An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional frontends to help handle the traffic.

  An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a Service of type Service.Type=NodePort or Service.Type=LoadBalancer.

  Source: https://kubernetes.io/docs/concepts/services-networking/ingress/

- 117. Ingress Rules
  1. Optional Host: if a Host is specified, the rules apply only to that host.
  2. Paths: each path under a host can be routed to a specific backend Service.
  3. Backend: a combination of a Service and a Service port.

  Source: https://kubernetes.io/docs/concepts/services-networking/ingress/
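
The three parts of an Ingress rule (optional host, path, backend) can be sketched as a small routing table. This is an illustration of the matching idea only, not an Ingress controller; the host name `shop.example.com`, the Service names and the port 80 are assumptions made for the example, with `/order` and `/product` taken from the slide diagrams:

```python
from typing import NamedTuple, Optional


class Backend(NamedTuple):
    service: str
    port: int


class Rule(NamedTuple):
    host: Optional[str]   # None means the rule applies to any host
    path: str
    backend: Backend


RULES = [
    Rule("shop.example.com", "/order", Backend("order", 80)),
    Rule(None, "/product", Backend("product", 80)),
]


def route(host: str, path: str, rules=RULES) -> Optional[Backend]:
    """Return the backend of the longest matching path prefix, if any."""
    matches = [
        rule for rule in rules
        if rule.host in (None, host)
        and (path == rule.path or path.startswith(rule.path.rstrip("/") + "/"))
    ]
    if not matches:
        return None
    return max(matches, key=lambda rule: len(rule.path)).backend
```

Matching is done per path element, so `/orders` does not match the `/order` rule.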

- 119. Ingress: Name based Virtual Hosting. Source: https://kubernetes.io/docs/concepts/services-networking/ingress/

- 120. Ingress – TLS. Source: https://kubernetes.io/docs/concepts/services-networking/ingress/

- 121. Kubernetes Ingress & Amazon Load Balancer (alb)

- 122. Kubernetes Network Security Policy
  - Kubernetes Network Policy – L3 / L4
  - Kubernetes Security Policy for Microservices
  - Cilium Network / Security Policy
  - Berkeley Packet Filter (BPF)
  - Express Data Path (XDP)
  - Compare Weave | Calico | Romana | Cilium | Flannel
  - Cilium Architecture
  - Cilium Features

- 123. K8s Network Policies L3 / L4

  A Kubernetes Network Policy blocks the Product UI from accessing the Database or Product Review directly. You can create Network Policies across namespaces, services, etc., for both incoming (Ingress) and outgoing (Egress) traffic.

  Diagram: Product UI Pods, Product Pods, Review Pods, a MySQL Pod and a Mongo Pod on one side; Order UI Pods, Order Pods and an Oracle Pod on the other. Direct access from the UI Pods to the backing Pods is marked "Blocks Access".
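
A minimal sketch of how such an L3 / L4 policy decision could be modelled: policies select the protected Pods by label and list which peer labels may connect on which port. The label values and port numbers are assumptions chosen to mirror the Product example, and the functions are illustrative rather than a real Kubernetes API:

```python
from dataclasses import dataclass


@dataclass
class IngressRule:
    target: dict        # labels of the Pods the policy protects
    allowed_from: dict  # labels a peer Pod must carry
    port: int


POLICIES = [
    IngressRule({"app": "product"}, {"app": "product-ui"}, 80),
    IngressRule({"app": "review"}, {"app": "product"}, 80),
    IngressRule({"app": "mysql"}, {"app": "product"}, 3306),
]


def selects(selector: dict, labels: dict) -> bool:
    """True if every key / value in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def allowed(src_labels: dict, dst_labels: dict, port: int, policies=POLICIES) -> bool:
    applicable = [p for p in policies if selects(p.target, dst_labels)]
    if not applicable:
        return True  # Pods not selected by any policy accept all traffic
    return any(selects(p.allowed_from, src_labels) and p.port == port
               for p in applicable)
```

The decision only looks at labels and ports, which is why every HTTP method on an allowed port stays reachable.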

- 124. K8s Network Policies – L3 / L4. Source: https://github.com/meta-magic/kubernetes_workshop

- 125. Network Security Policy for Microservices (L3 / L4)
  - The Product Microservice (172.27.1.2) calls GET /reviews/192351 on the Product Review Microservice.
  - Product Review L7 API: GET /live, GET /ready, GET /reviews/{id}, POST /reviews, PUT /reviews/{id}, DELETE /reviews/{id}.
  - Product Review can be accessed ONLY by Product. IP Tables enforces this rule: `iptables -A INPUT -s 172.27.1.2 -p tcp --dport 80 -j ACCEPT`
  - Because the rule works at L3 / L4, all other method calls are also exposed to the Product Microservice.

- 126. Network Security Policy for Microservices (L7)
  - Product Review L7 API: GET /live, GET /ready, GET /reviews/{id}, POST /reviews, PUT /reviews/{id}, DELETE /reviews/{id}.
  - Rules are implemented by BPF (Berkeley Packet Filter) at the Linux kernel level.
  - From the Product Microservice only GET /reviews/{id} is allowed, for example GET /reviews/192351. All other calls are blocked for the Product Microservice.
  - BPF / XDP performance is much superior to IPVS.

- 127. Cilium Network Policy
  1. Cilium Network Policy works in sync with Istio in the Kubernetes world.
  2. In the Docker world Cilium works as a network driver, and you can apply the policy using the `cilium` CLI.

  In the previous example, the Kubernetes Network Policy allows access to Product Review from the Product Microservice. However, that makes all the API calls of Product Review accessible to the Product Microservice. With the new policy only GET /reviews/{id} is allowed. These network policies are executed in the Linux kernel using BPF.

  - Product Microservice can access ONLY GET /reviews from the Product Review Microservice.
  - User Microservice can access GET /reviews & POST /reviews from the Product Review Microservice.
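
The effect of an L7 policy can be sketched as an allow-list of (method, path) pairs per calling service. This is a plain Python illustration of the decision, not Cilium's policy format or its BPF implementation; the source names `product` and `user` and the regular expressions are assumptions based on the slide text:

```python
import re

L7_POLICY = {
    "product": [("GET", r"/reviews/[^/]+")],                 # GET /reviews/{id}
    "user": [("GET", r"/reviews"), ("POST", r"/reviews")],
}


def l7_allowed(source: str, method: str, path: str, policy=L7_POLICY) -> bool:
    """Allow a call only if the caller has a matching method and path rule."""
    return any(method == allowed_method and re.fullmatch(pattern, path)
               for allowed_method, pattern in policy.get(source, []))
```

Compared with an L3 / L4 rule, the same caller and port can now be allowed for one API call and denied for another.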

- 128. BPF / XDP (eXpress Data Path)
  - Regular BPF (Berkeley Packet Filter) mode: Network Card, Network Driver, Software Stack, with BPF running in the software stack.
  - XDP mode: XDP allows a BPF program to run inside the network driver with access to the DMA buffer.

  Berkeley Packet Filters (BPF) provide a powerful tool for intrusion detection analysis. Use BPF filtering to quickly reduce large packet captures to a smaller set of results by filtering on a specific type of traffic.

  Source: https://www.ibm.com/support/knowledgecenter/en/SS42VS_7.3.2/com.ibm.qradar.doc/c_forensics_bpf.html

- 129. XDP (eXpress Data Path)
  - Drop: a BPF program can drop millions of packets per second during a DDoS attack, before they reach the software stack.
  - LB & Tx: BPF can perform load balancing and transmit the data out to the wire again.

  Source: http://www.brendangregg.com/ebpf.html

- 130. Kubernetes Container Network Interface

  The Container Runtime talks to the Container Network Interface, which is implemented by Weave, Calico, Romana, Cilium and Flannel.
  - Calico (Project Calico): Layer 3, BGP, BGP Route Reflector, Network Policies, IP Tables, stores data in Etcd.
  - Flannel (https://coreos.com/): Layer 3, VXLAN (No Encryption), IPSec, Overlay Network, Host-GW (L2), stores data in Etcd.
  - Weave (https://www.weave.works/): Layer 3, IPSec, Network Policies, Multi Cloud NW, stores data in Etcd.
  - Romana (https://romana.io/): Layer 3, L3 + BGP & L2 + VXLAN, IPSec, Network Policies, IP Tables, stores data in Etcd.
  - Cilium (https://cilium.io/): Layer 3 / 7, BPF / XDP, L7 Filtering using BPF, Network Policies, L2 VXLAN, API Aware (HTTP, gRPC, Kafka, Cassandra…), Multi Cluster Support.

  BPF (Berkeley Packet Filter) runs inside the Linux Kernel.

  On-Premise Ingress Load Balancer (per plugin, in slide order): Mostly | Mostly | Yes | Yes | Yes

- 131. Cilium Architecture

  Components: Plugins, Cilium Agent, CLI, Monitor, Policy, and the BPF programs loaded into the kernel.
  1. The Cilium Agent can compile and deploy BPF code (based on the labels of the container) in the kernel when a container is started.
  2. When the 2nd container is deployed, Cilium generates the 2nd BPF program and deploys that rule in the kernel.
  3. To provide network connectivity, Cilium compiles the BPF program and attaches it to the network device.

- 132. Day 2 - Summary
  - Networking – Packet Routing
    1. Compare Docker and Kubernetes Networking
    2. Pod to Pod Networking within the same Node
    3. Pod to Pod Networking across the Node
    4. Pod to Service Networking
    5. Ingress – Internet to Service Networking
    6. Egress – Pod to Internet Networking
  - Networking – Components
    1. Kubernetes IP Network
    2. Kubernetes DNS
    3. Kubernetes Proxy
    4. Created Service (with Cluster IP)
    5. Created Ingress
  - Kubernetes Volume
    - Installed NFS server in the cluster
    - Created Persistent Volume
    - Created Persistent Volume Claim
    - Linked Persistent Volume Claim to Pod
  - Network Policies
    1. Kubernetes Network Policy – L3 / L4
    2. Created Network Policies within the same Namespace and across Namespaces
  - Helm Charts
    1. Helm Chart Installation
    2. Helm Chart Search
    3. Create Helm Charts
    4. Install Helm Charts
  - Logging and Monitoring
    1. Jaeger UI for Request Monitoring
  - Best Practices
    1. Docker Best Practices
    2. Kubernetes Best Practices

- 133. Service Mesh: Istio
  - Service Discovery, Traffic Routing, Security
  - Gateway, Virtual Service, Destination Rule, Service Entry

- 134. Istio Components
  - Control Plane
    - Mixer: enforces access control and usage policies across the service mesh, collects telemetry data from Envoy and other services, and includes a flexible plugin model.
    - Pilot: provides Service Discovery, Traffic Management, Routing, and Resiliency (Timeouts, Circuit Breakers, etc.).
    - Citadel: provides strong service-to-service and end-user authentication with built-in identity and credential management. It can enforce policies based on Service identity rather than network controls.
    - Galley: provides the Configuration Injection, Processing and Distribution component of Istio.
  - Data Plane
    - Envoy is deployed as a Sidecar in the same K8S Pod. It provides Dynamic Service Discovery, Load Balancing, TLS Termination, HTTP/2 and gRPC Proxies, Circuit Breakers, Health Checks, Staged Rollouts with % based traffic split, Fault Injection, and Rich Metrics.
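
The "% based traffic split" used for staged rollouts can be illustrated with a tiny weighted picker. This is a sketch of the idea only, not Envoy or Istio code; `pick_version`, the version names and the 90 / 10 weights are hypothetical:

```python
def pick_version(weights: dict, roll: int) -> str:
    """Map a roll in [0, 100) to a version according to percentage weights."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    if not 0 <= roll < 100:
        raise ValueError("roll must be in the range 0..99")
    upper = 0
    for version, weight in weights.items():
        upper += weight
        if roll < upper:
            return version
    raise AssertionError("unreachable when weights add up to 100")
```

A caller would pass `random.randrange(100)` as the roll for each request, so with weights `{"v1": 90, "v2": 10}` roughly one request in ten reaches the new version.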