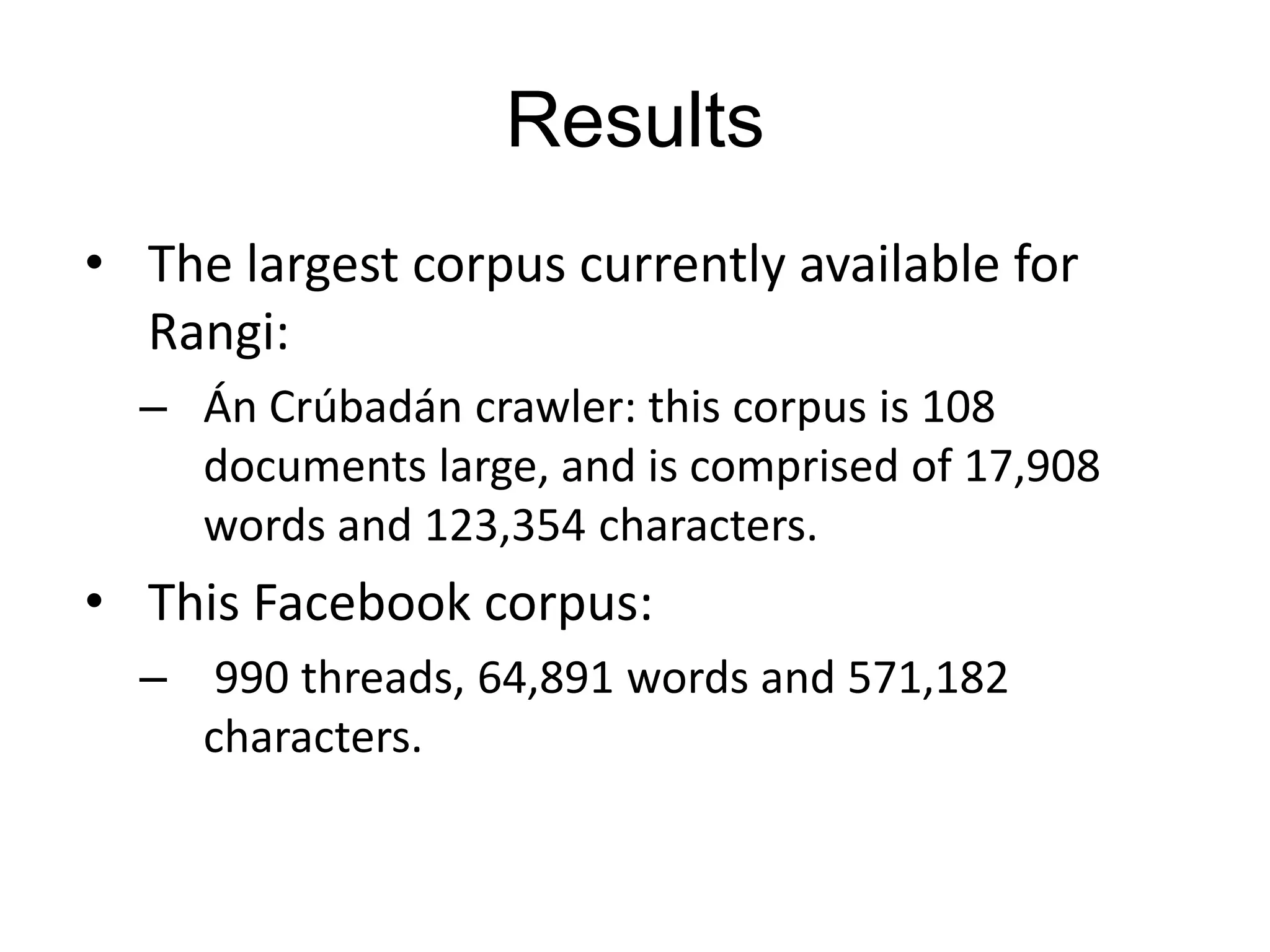

The document discusses why corpora for 'low resource' languages are necessary and why they are challenging to create, focusing on the Bantu language tʉlʉʉsɨke kɨlaangi. It gives a brief overview of the language's speaker community and of the role a Facebook group plays in generating the corpus. It then reviews previous work on social media data collection, the legal issues surrounding automated data collection, and privacy considerations. Finally, it outlines the technical process of corpus creation and describes the data obtained from the Facebook group, with the aim of eventually making the corpus publicly available.