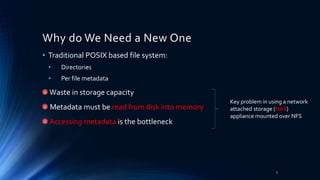

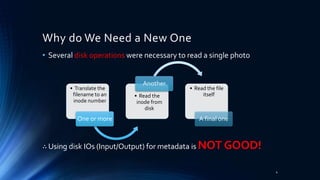

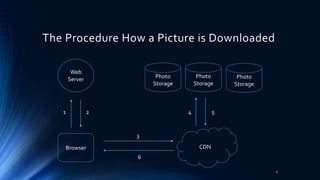

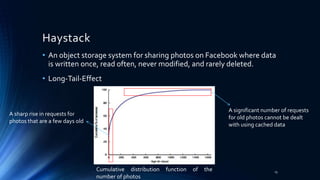

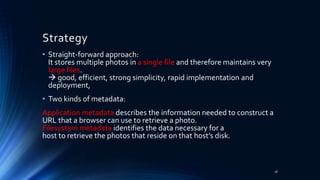

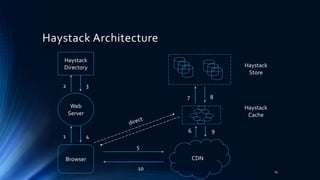

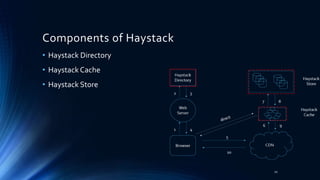

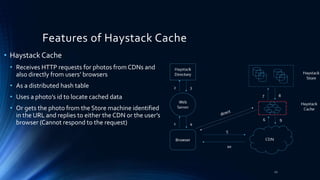

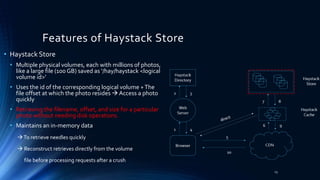

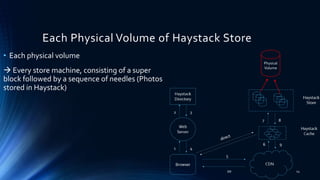

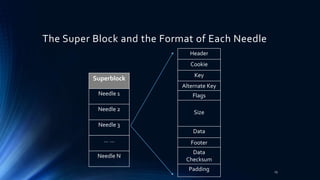

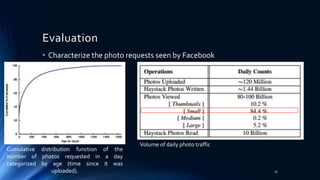

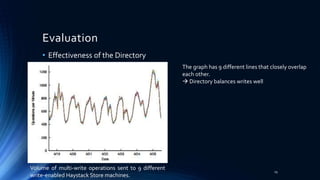

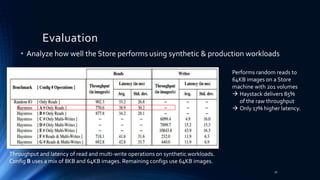

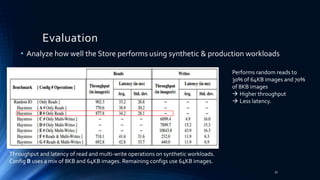

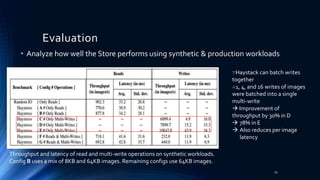

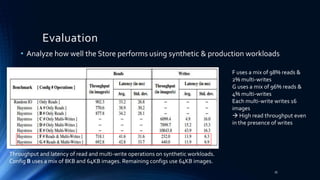

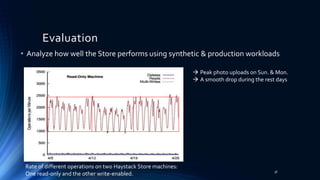

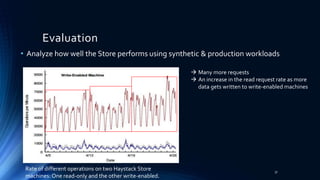

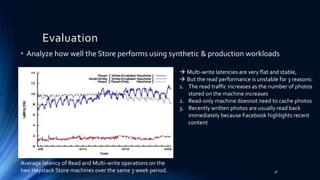

Haystack is Facebook's object storage system, optimized for storing and retrieving billions of photos. It aims for high throughput and low latency while remaining fault-tolerant and cost-effective. Haystack packs many photos into a small number of large files on physical volumes and keeps enough per-photo metadata in main memory to locate each photo, so a read typically needs only a single disk operation. In the evaluation, the Directory balances writes well across volumes and the Cache achieves an 80% hit rate. Synthetic benchmarks show that Haystack delivers 85% of the raw throughput of the storage device with only slightly higher latency.
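The core storage idea can be illustrated with a minimal Python sketch. It assumes a simplified, hypothetical needle format (photo key, one flags byte, payload size, then the photo bytes). Real Haystack needles also carry fields such as a cookie, an alternate key and a checksum, and the separate index file and compaction are omitted. The names `Volume`, `write`, `read`, `delete` and `recover` are illustrative only. The sketch shows photos appended to one large file, an in-memory map from key to (offset, size) so that a read is one seek plus one read, deletion as an in-place flag, and index recovery by scanning the file.

```python
from __future__ import annotations

import io
import struct
from dataclasses import dataclass
from typing import BinaryIO, Optional

# Simplified, hypothetical needle header: 8-byte photo key, 1-byte flags,
# 4-byte payload size. Real Haystack needles carry more fields
# (magic numbers, cookie, alternate key, checksum, padding).
HEADER = struct.Struct("<QBI")
FLAGS_OFFSET = 8  # byte position of the flags field inside HEADER
DELETED = 0x1


@dataclass
class IndexEntry:
    offset: int  # where the needle header starts in the volume file
    size: int    # length of the photo payload in bytes


class Volume:
    """Append-only volume: many photos in one file, located via an in-memory index."""

    def __init__(self, f: BinaryIO) -> None:
        self.f = f
        self.index: dict[int, IndexEntry] = {}

    def write(self, key: int, data: bytes) -> None:
        """Append a needle; rewriting a key just points the index at the newest copy."""
        self.f.seek(0, io.SEEK_END)
        offset = self.f.tell()
        self.f.write(HEADER.pack(key, 0, len(data)) + data)
        self.index[key] = IndexEntry(offset, len(data))

    def read(self, key: int) -> Optional[bytes]:
        """Fetch a photo with one seek and one read, using only in-memory metadata."""
        entry = self.index.get(key)
        if entry is None:
            return None
        self.f.seek(entry.offset)
        raw = self.f.read(HEADER.size + entry.size)
        stored_key, flags, size = HEADER.unpack_from(raw)
        if stored_key != key or size != entry.size or flags & DELETED:
            raise OSError(f"corrupt or stale needle for key {key}")
        return raw[HEADER.size:]

    def delete(self, key: int) -> None:
        """Flag the needle as deleted in place; space would be reclaimed by compaction (not shown)."""
        entry = self.index.pop(key, None)
        if entry is not None:
            self.f.seek(entry.offset + FLAGS_OFFSET)
            self.f.write(bytes([DELETED]))

    @classmethod
    def recover(cls, f: BinaryIO) -> Volume:
        """Rebuild the in-memory index by scanning every needle from the start of the file."""
        vol = cls(f)
        f.seek(0)
        offset = 0
        while True:
            header = f.read(HEADER.size)
            if len(header) < HEADER.size:
                break
            key, flags, size = HEADER.unpack(header)
            if flags & DELETED:
                vol.index.pop(key, None)
            else:
                vol.index[key] = IndexEntry(offset, size)  # later needles win
            f.seek(size, io.SEEK_CUR)
            offset += HEADER.size + size
        return vol
```

For experimentation, `Volume(io.BytesIO())` serves as an in-memory stand-in for a volume file. The design choice the sketch highlights is that each index entry holds only an offset and a size, which is what keeps per-photo metadata small enough to stay in memory and avoids filesystem metadata lookups on the read path.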