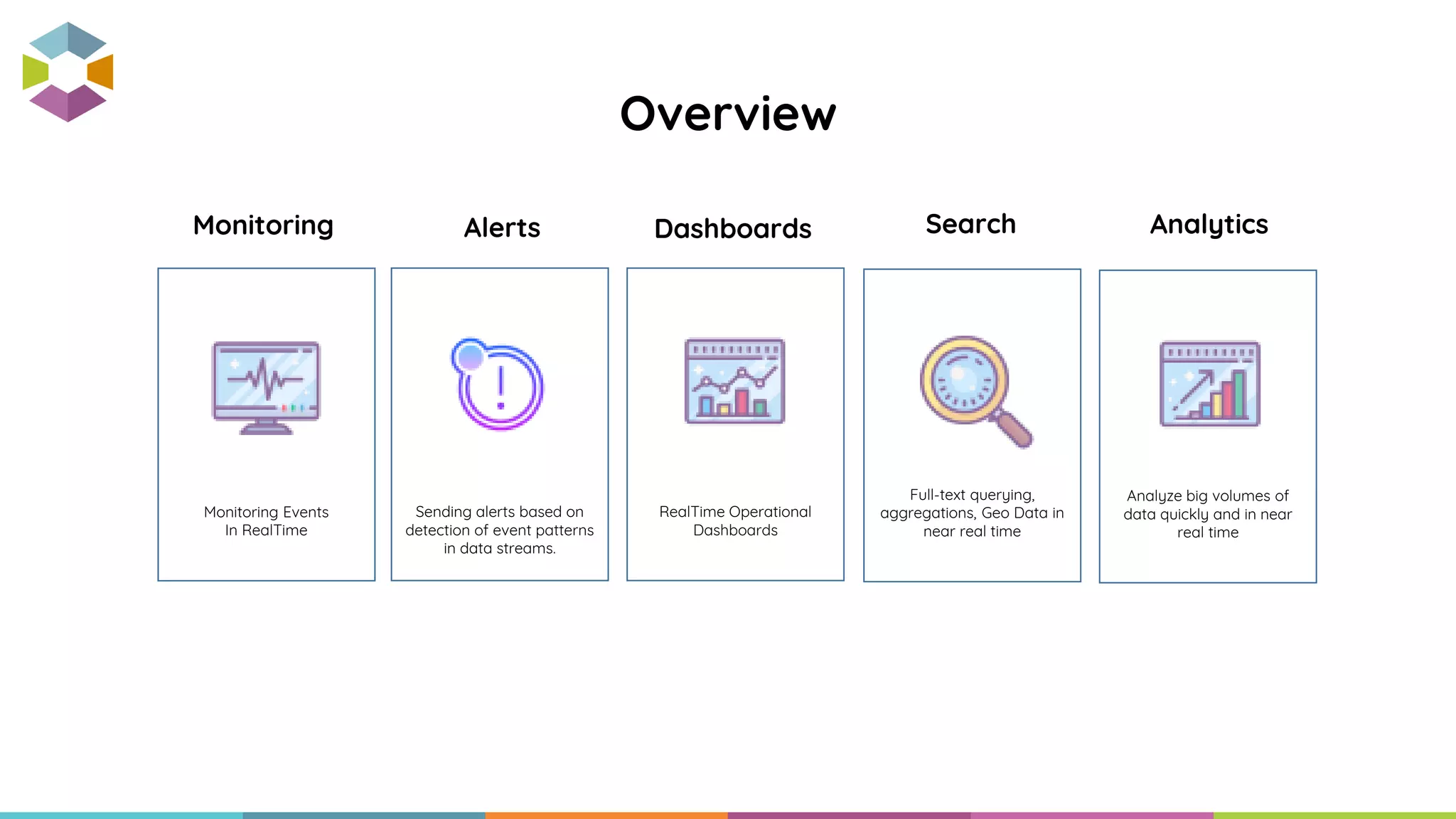

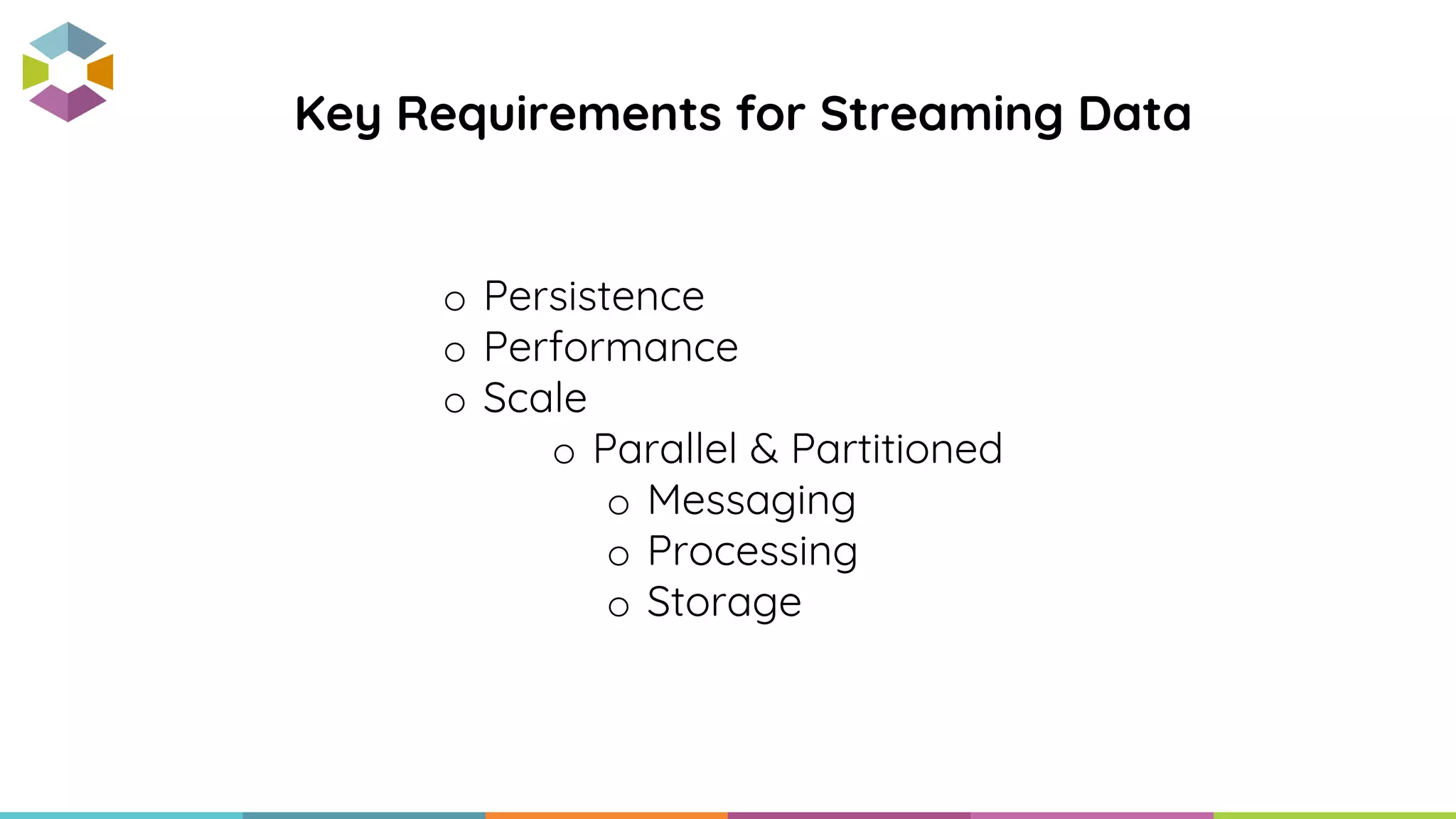

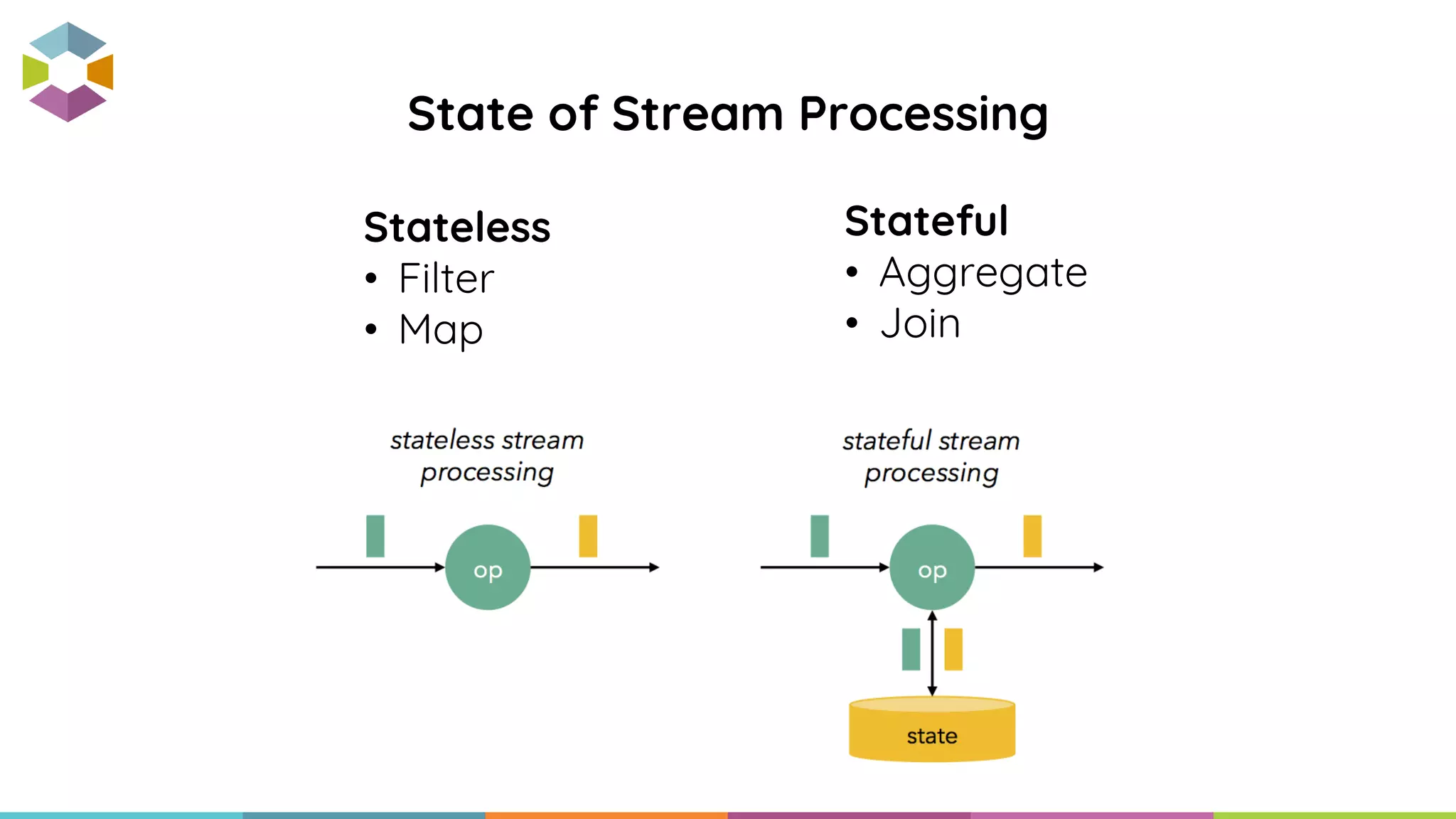

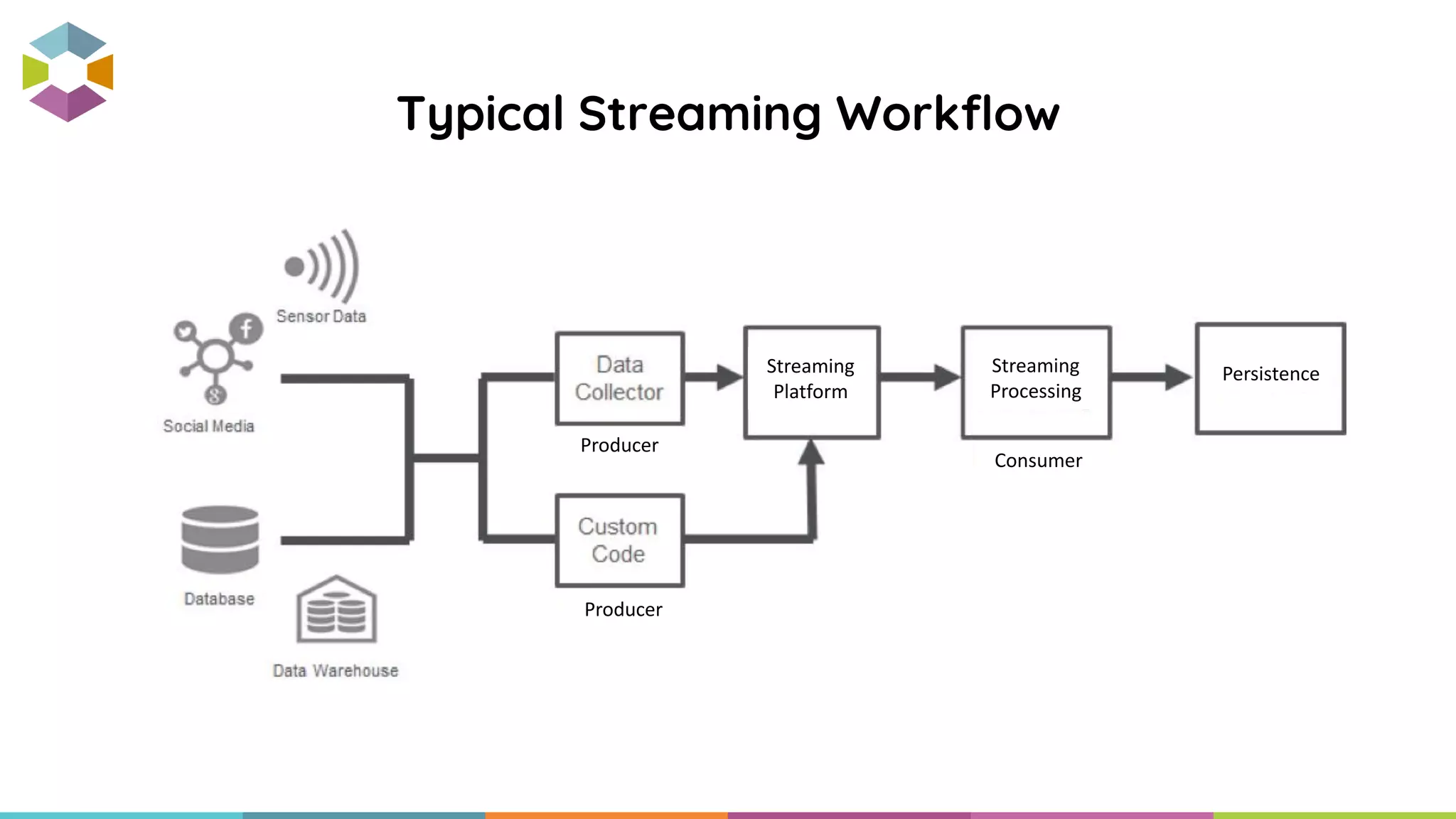

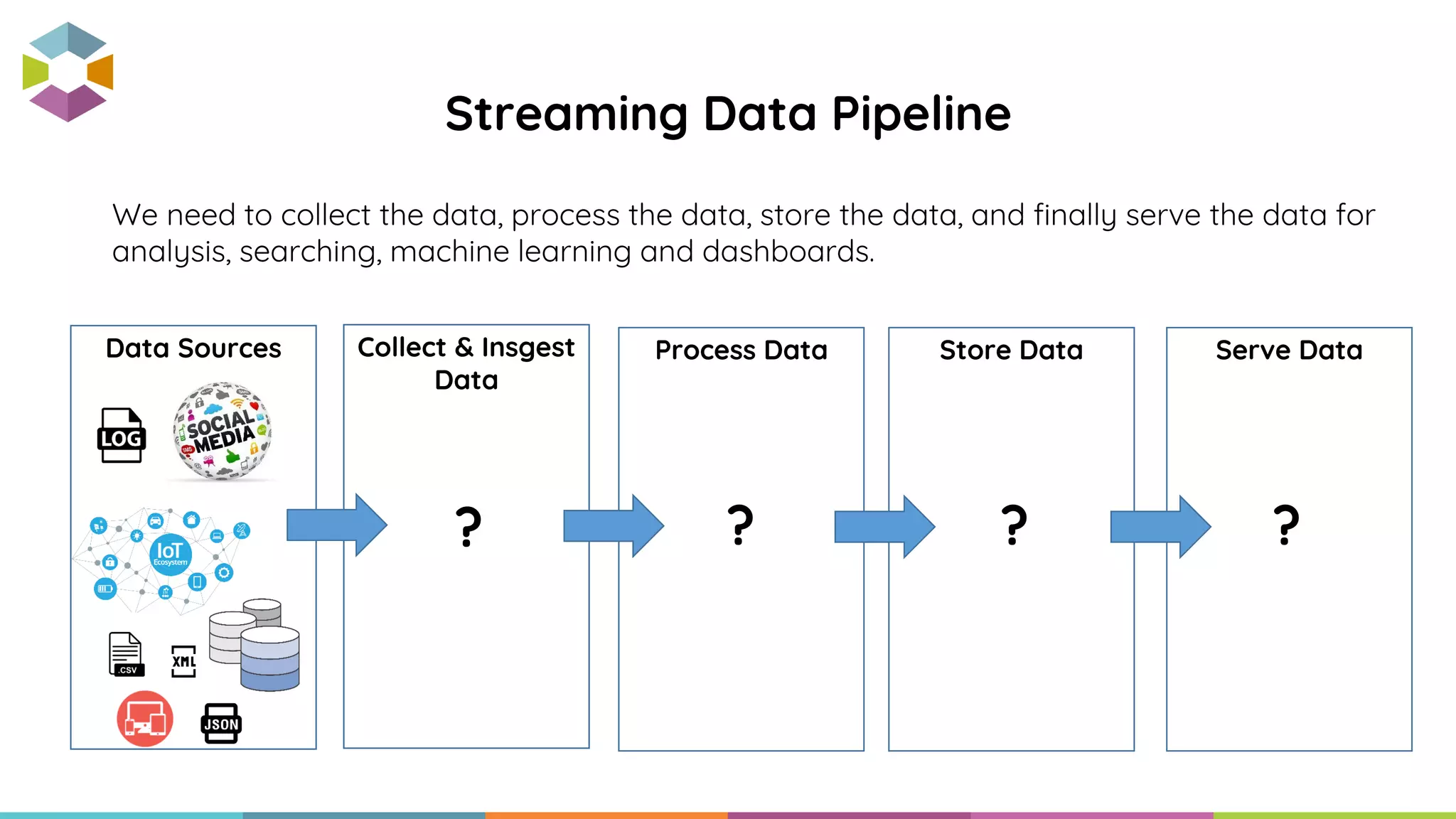

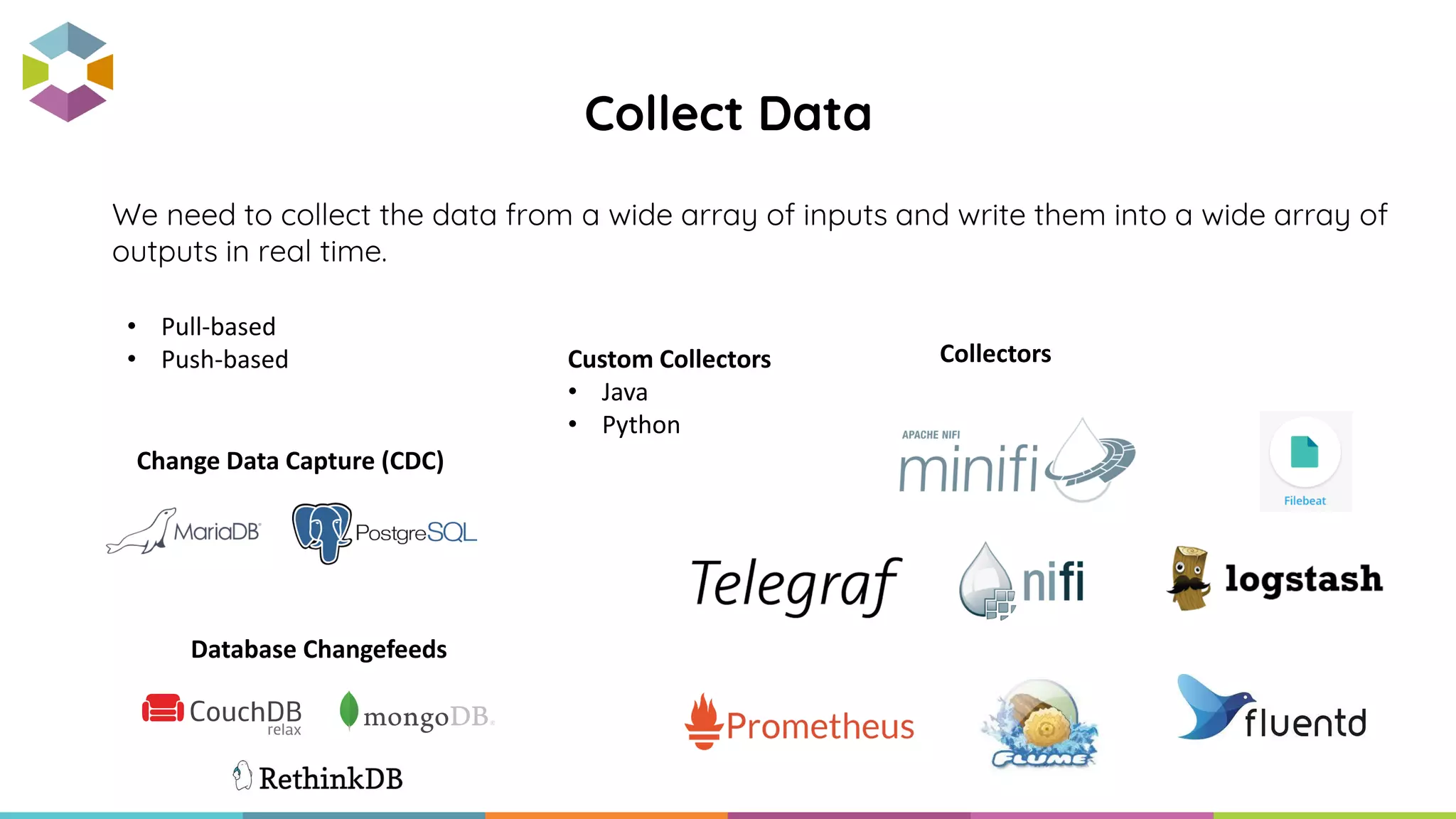

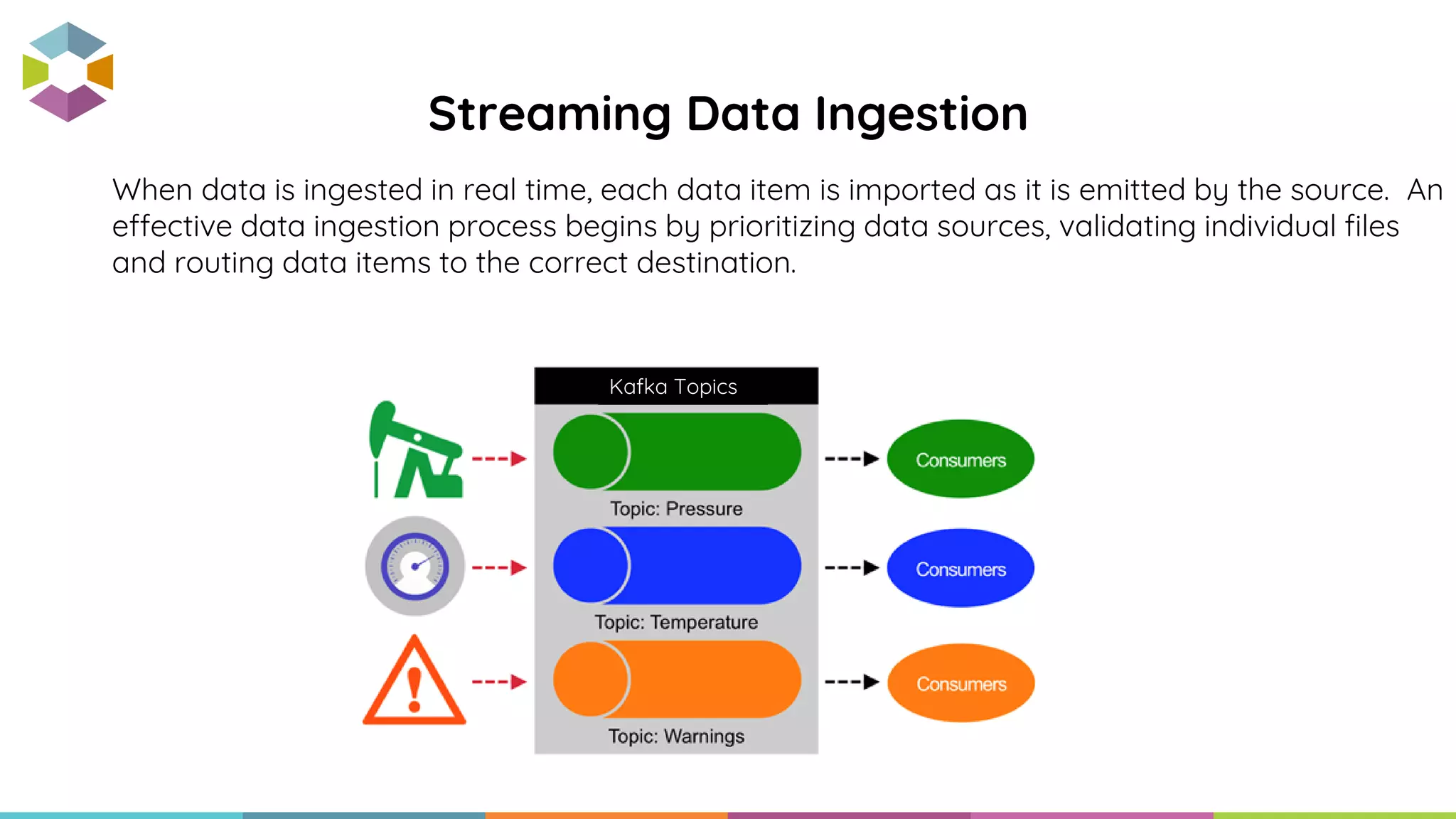

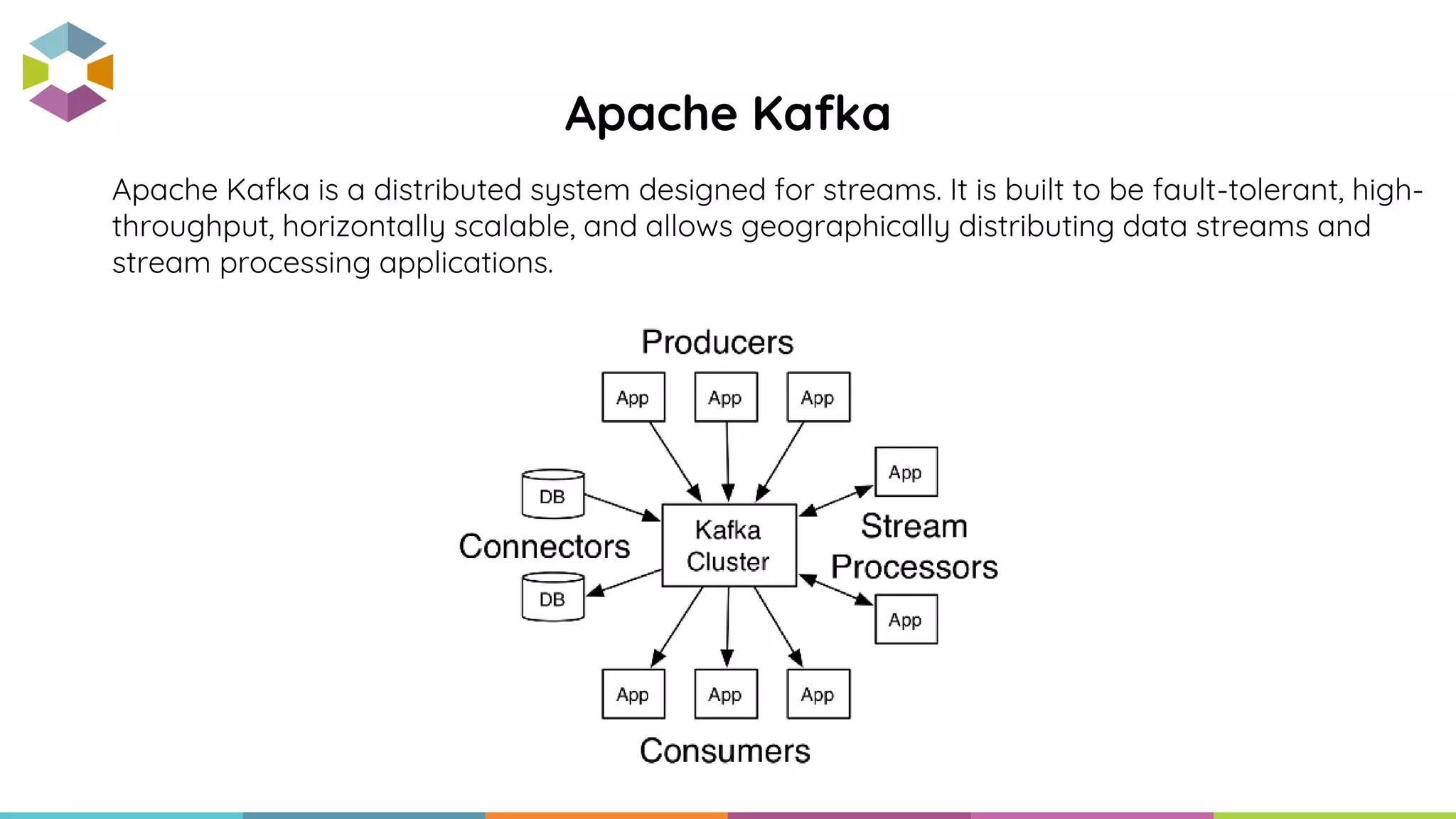

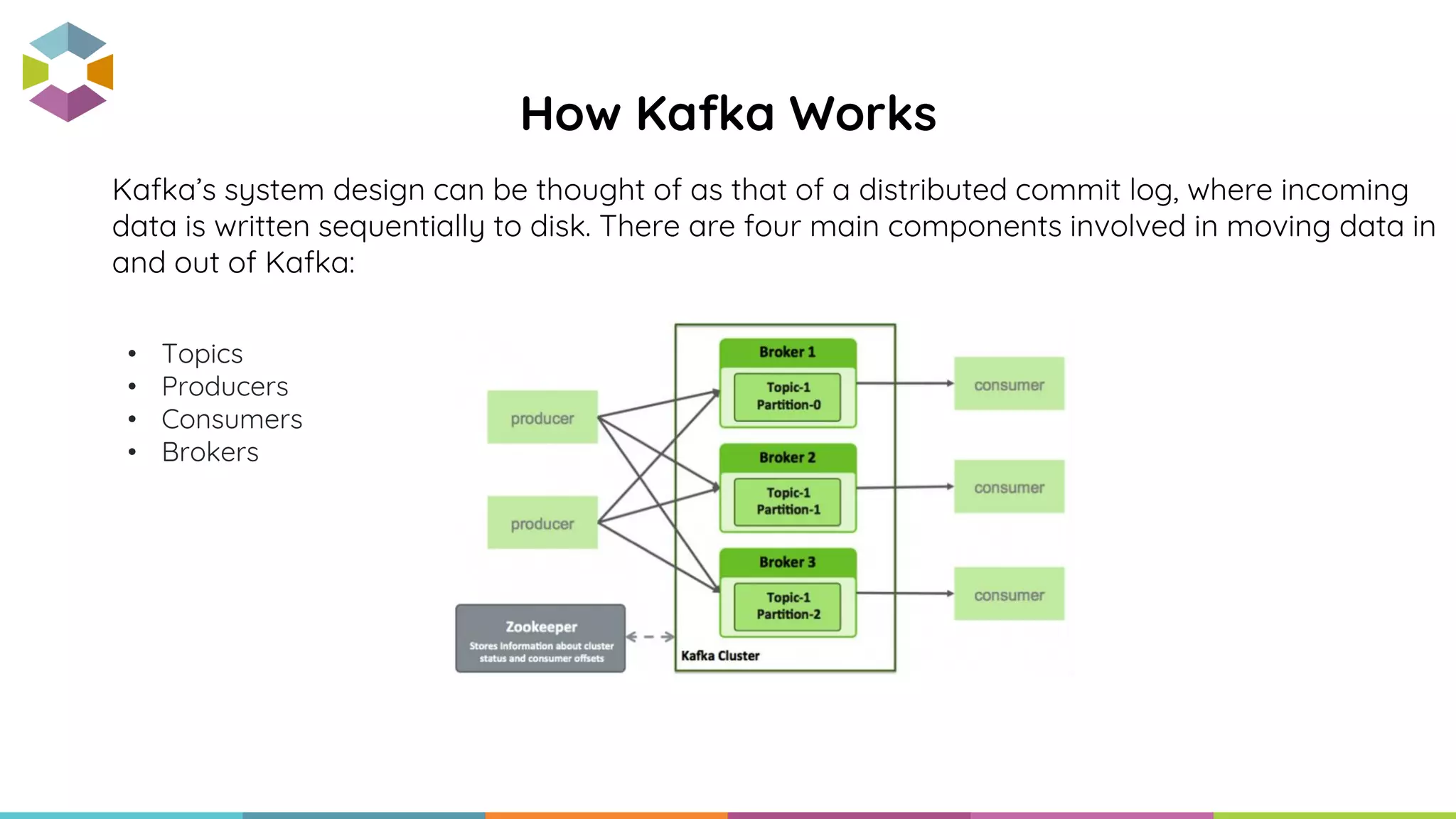

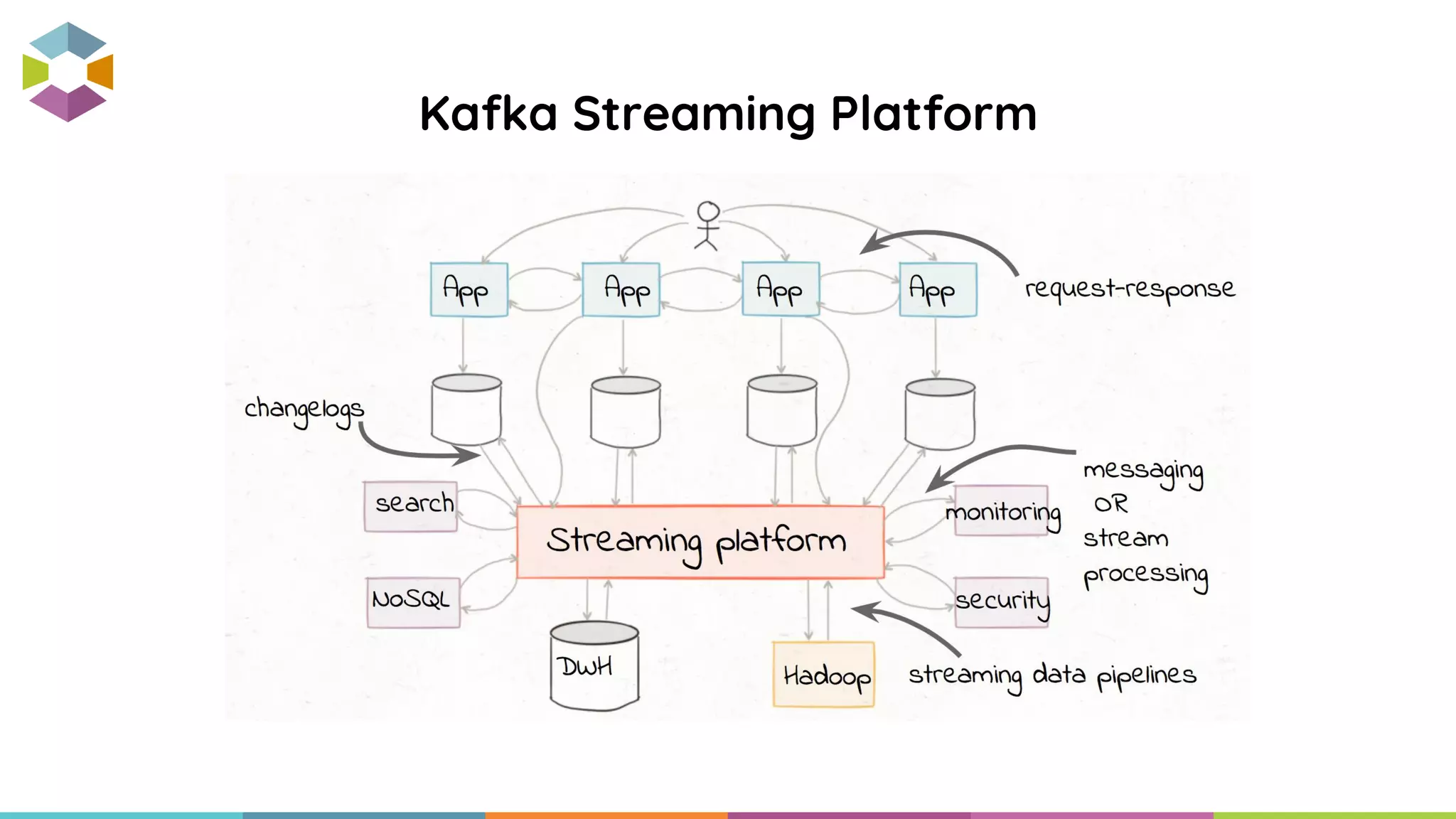

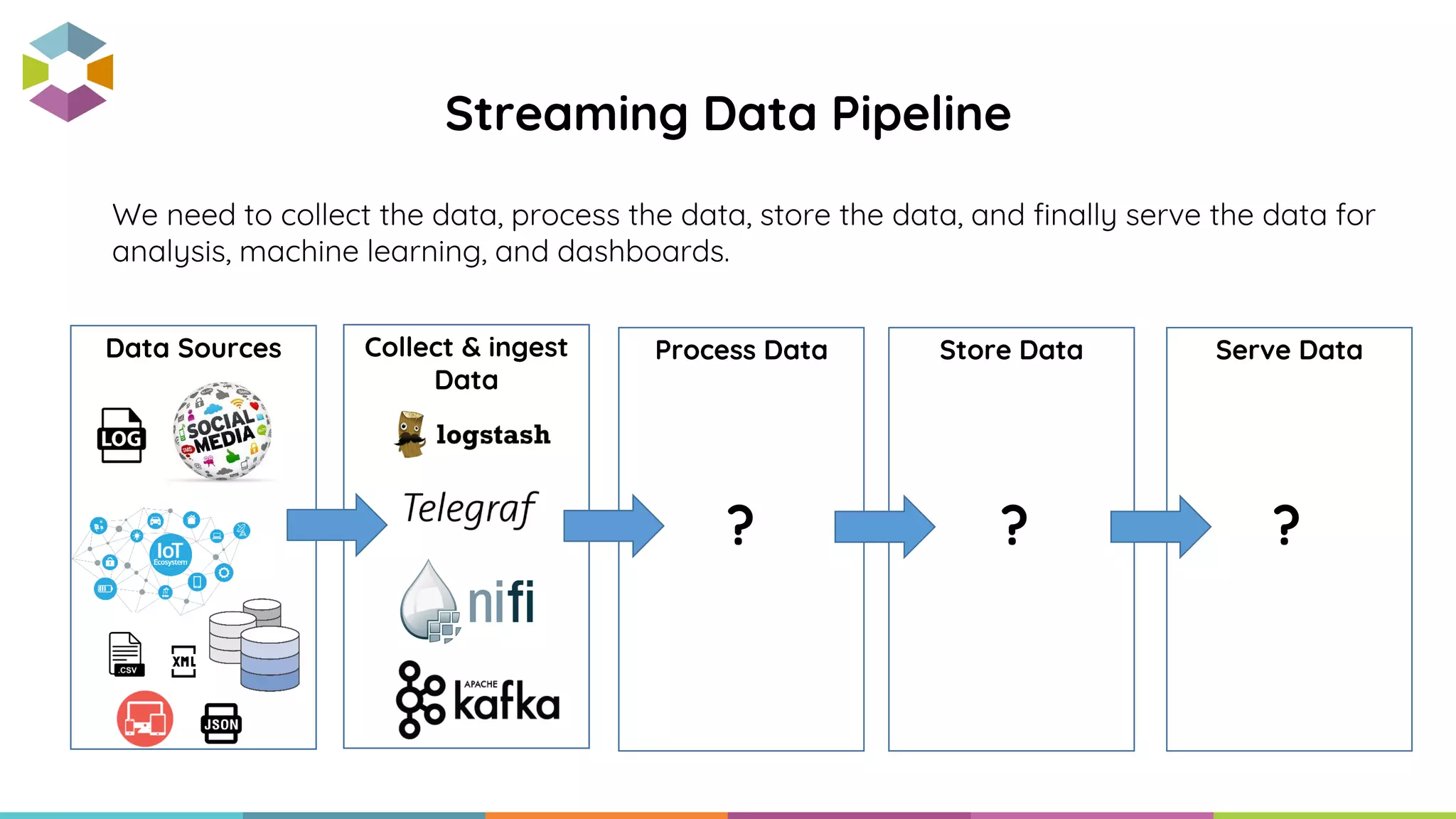

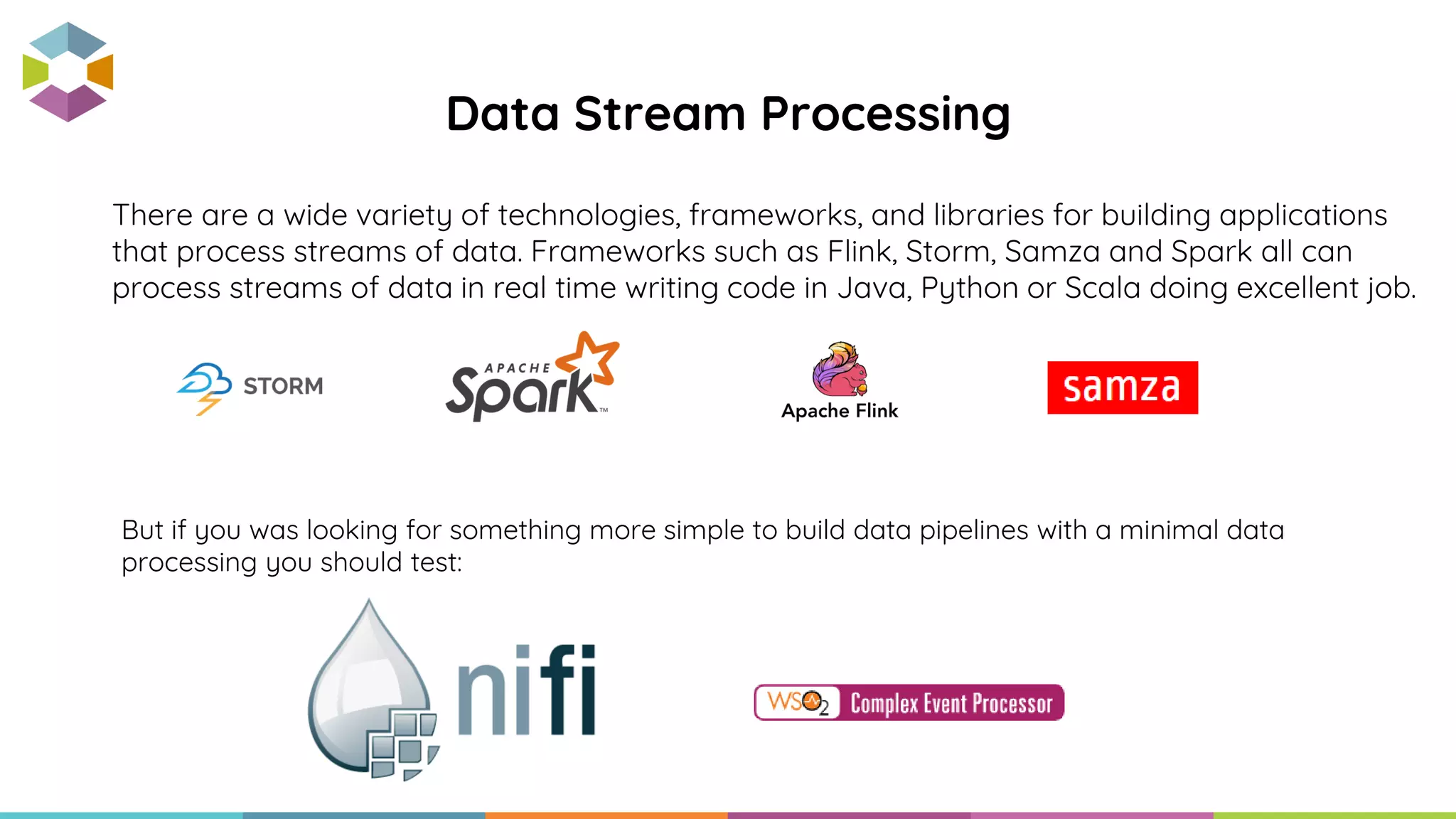

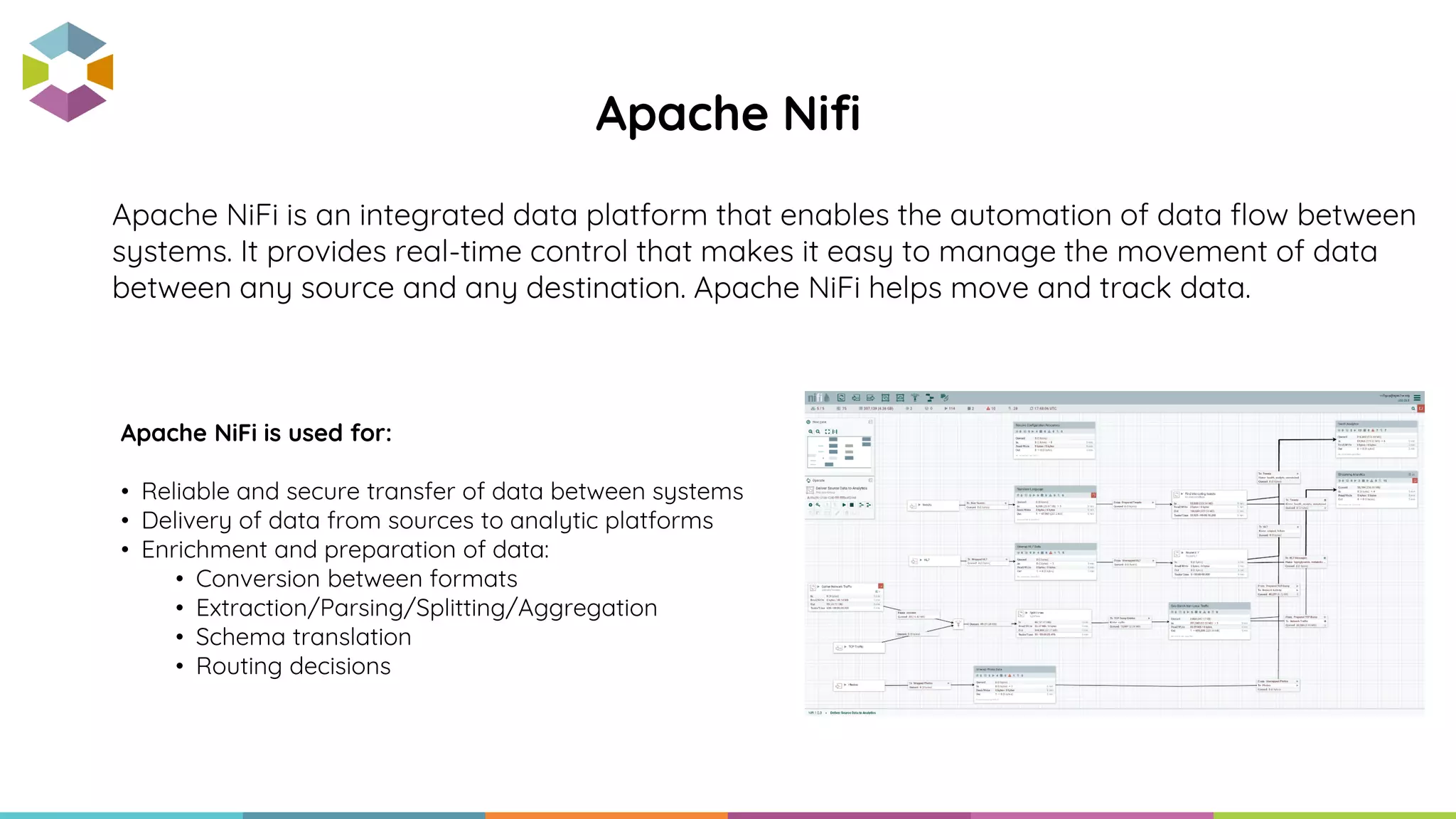

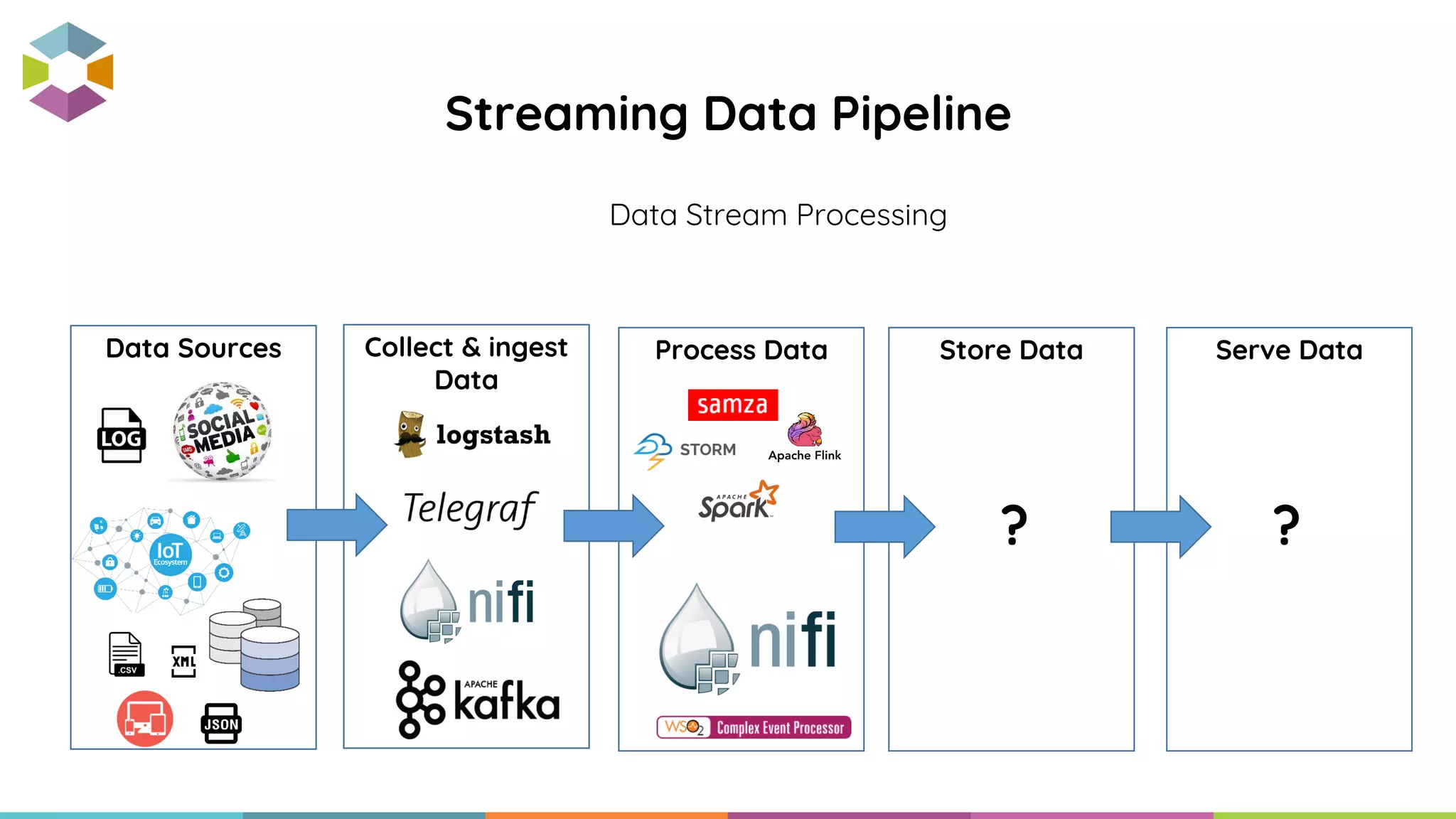

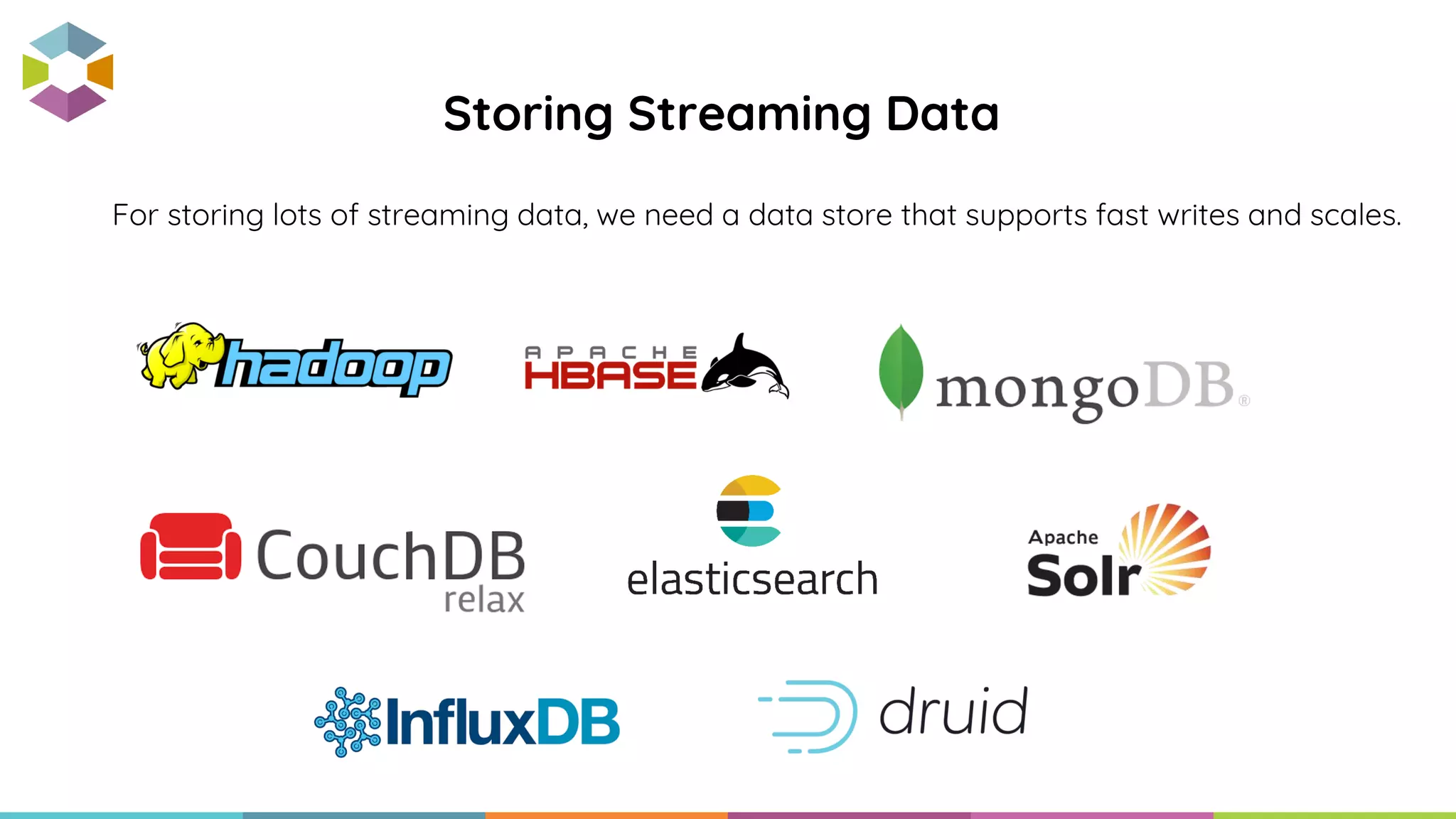

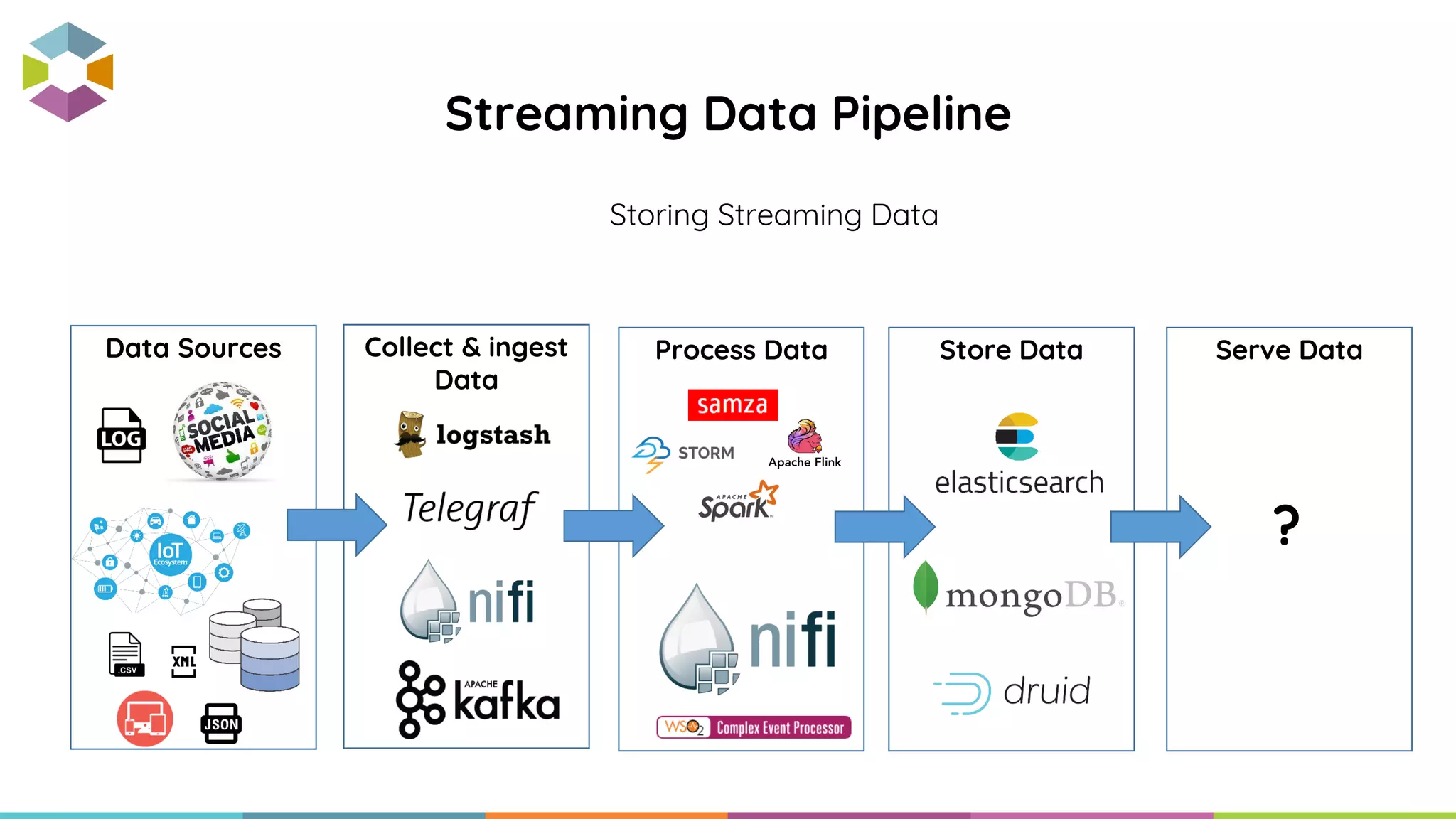

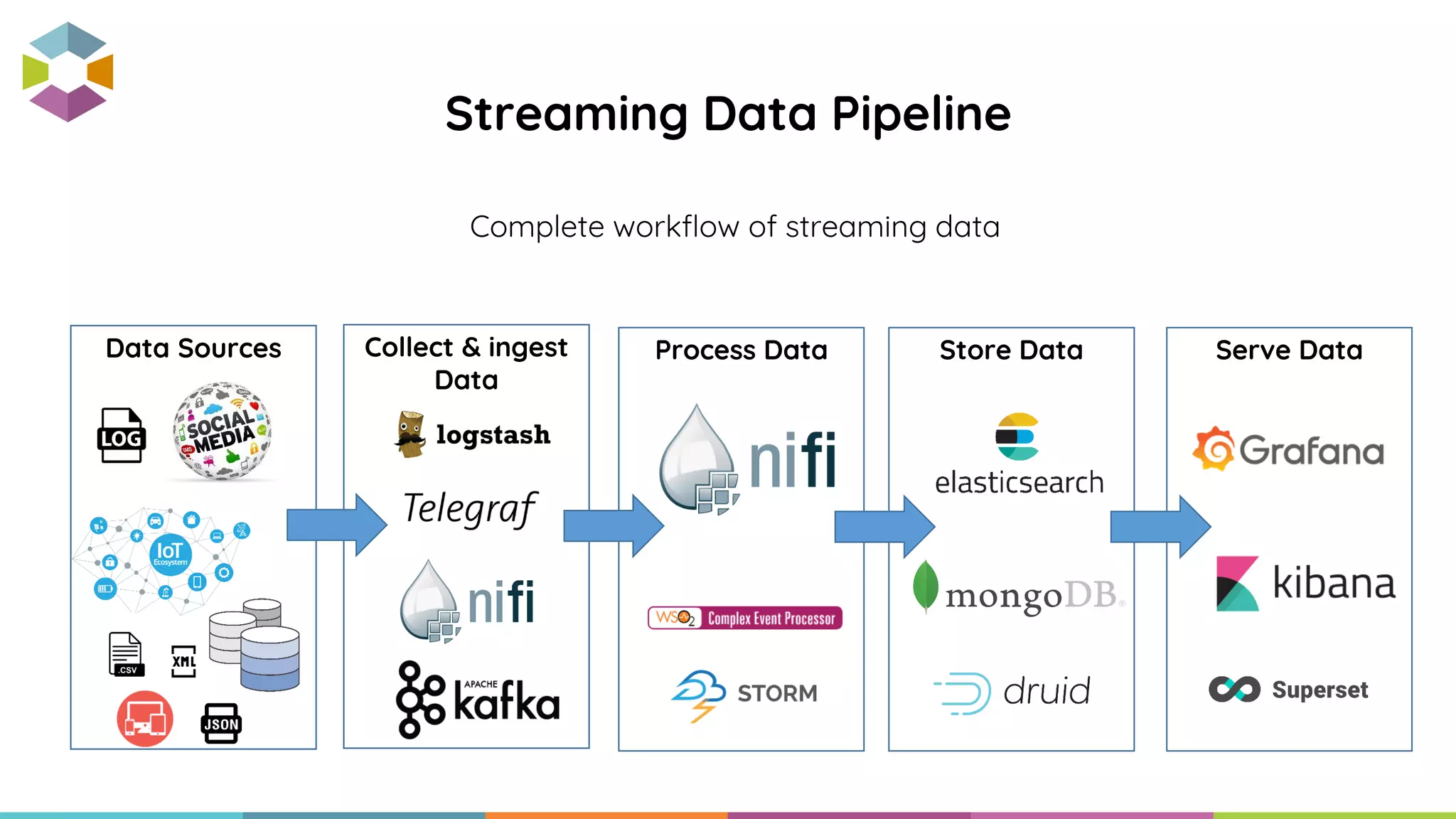

This document provides an overview of data stream processing. It explains what streaming data is, with examples such as IoT sensors, social media feeds, and website monitoring. It then outlines the typical components of a streaming data pipeline: collecting and ingesting data from various sources, processing the data in real time, storing the processed results, and serving them for analytics, search, and dashboards. Key streaming technologies covered include Apache Kafka, Apache NiFi, and several stream processing frameworks. Finally, it introduces Stishovite, a console for managing an entire streaming data platform built from open source components.
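The collect, ingest, process, store, and serve stages described above can be sketched in plain Python. This is a minimal in-memory illustration under stated assumptions. It does not show how Kafka, NiFi, or any stream processing framework works internally. The sensor readings, the 60-second tumbling window, and all function names (`collect`, `process`, `serve`, `run_pipeline`) are hypothetical. A `deque` stands in for the ingest buffer a broker such as Kafka would provide, and a dict stands in for the results store.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterator, Tuple

WINDOW = 60  # assumed tumbling window size, in seconds

Key = Tuple[str, int]          # (sensor_id, window_start)
Store = Dict[Key, Tuple[float, int]]  # running (sum, count) per window


@dataclass(frozen=True)
class Reading:
    sensor_id: str
    timestamp: int  # event time in seconds
    value: float


def collect() -> Iterator[Reading]:
    """Collect: a hypothetical stand-in for IoT sensors emitting readings."""
    samples = [("s1", 0, 20.0), ("s1", 30, 22.0), ("s2", 45, 18.0),
               ("s1", 65, 25.0), ("s2", 70, 19.0)]
    for sensor_id, ts, value in samples:
        yield Reading(sensor_id, ts, value)


def process(reading: Reading, store: Store) -> None:
    """Process and store: update the running sum and count of the reading's window."""
    window_start = reading.timestamp - reading.timestamp % WINDOW
    key = (reading.sensor_id, window_start)
    total, count = store.get(key, (0.0, 0))
    store[key] = (total + reading.value, count + 1)


def serve(store: Store, sensor_id: str) -> Dict[int, float]:
    """Serve: answer a dashboard-style query with the average value per window."""
    return {
        window_start: total / count
        for (sid, window_start), (total, count) in sorted(store.items())
        if sid == sensor_id
    }


def run_pipeline() -> Store:
    buffer: Deque[Reading] = deque()  # ingest buffer (stand-in for a broker)
    store: Store = {}                 # processed-results store
    for reading in collect():
        buffer.append(reading)        # ingest each event as it arrives
        while buffer:                 # the processor drains the buffer
            process(buffer.popleft(), store)
    return store
```

With the sample readings, `serve(run_pipeline(), "s1")` returns the average per window, `{0: 21.0, 60: 25.0}`. Keeping a running sum and count per window makes processing incremental: each event is handled once as it arrives instead of re-scanning stored data.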