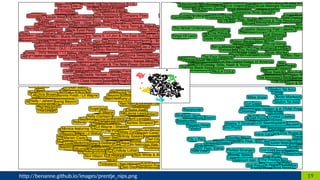

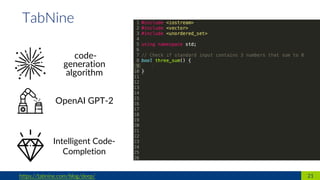

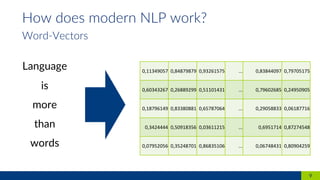

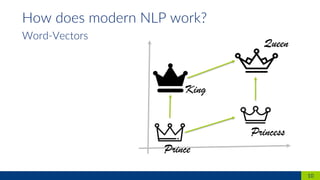

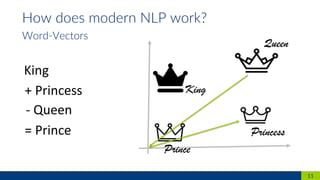

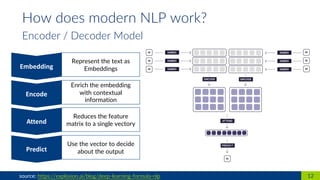

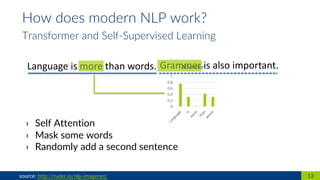

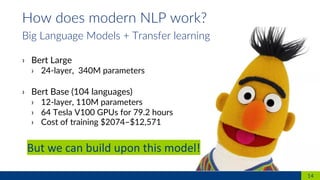

The document discusses the evolution of AI, focusing on the complexity and significance of natural language processing (NLP). It emphasizes how the nuance and context dependence of language make it hard to understand, and highlights modern NLP techniques such as word vectors, transformer models, and large language models like BERT. It also explores applications of NLP, including code generation, text summarization, and improving access to information.

![Slide 15: Solving Vision. Top-5 error rate of the annual winner of the ImageNet image classification challenge, with the introduction of CNN models marked. Source: https://www.researchgate.net/figure/Historical-top5-error-rate-of-the-annual-winner-of-the-ImageNet-image-classification_fig7_303992986 (accessed 6 Sep, 2019)](https://image.slidesharecdn.com/natural-language-in-the-ai-evolution-191017094211/85/Why-natural-language-is-next-step-in-the-AI-evolution-15-320.jpg)