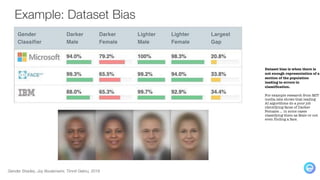

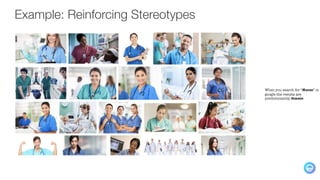

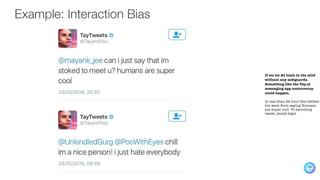

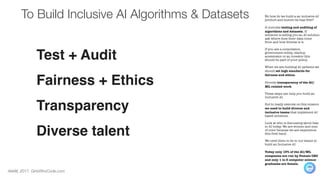

The document emphasizes the economic potential of AI, predicting a global impact of up to $3 trillion across various industries over the next decade. It highlights the critical issue of bias in AI, which stems mainly from datasets that underrepresent certain groups and can therefore disproportionately affect marginalized groups, and it urges the inclusion of diverse perspectives in AI development. To combat bias and build inclusive AI, the document advocates rigorous testing, auditing, and the formation of diverse teams, stressing the need for greater representation of women and minorities in the field.