Patrick Stox provides tips for managing website migrations and redirects, including:

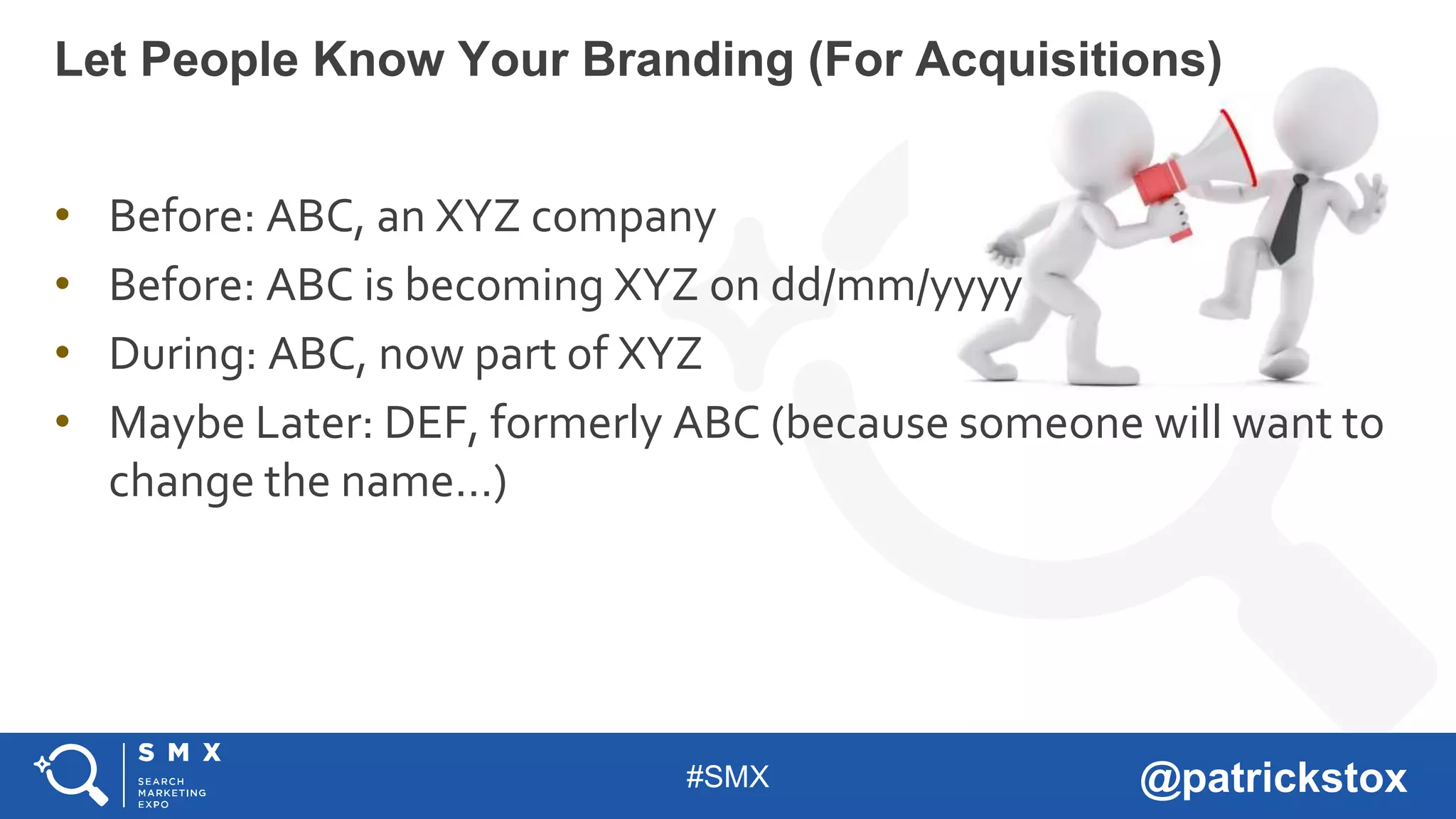

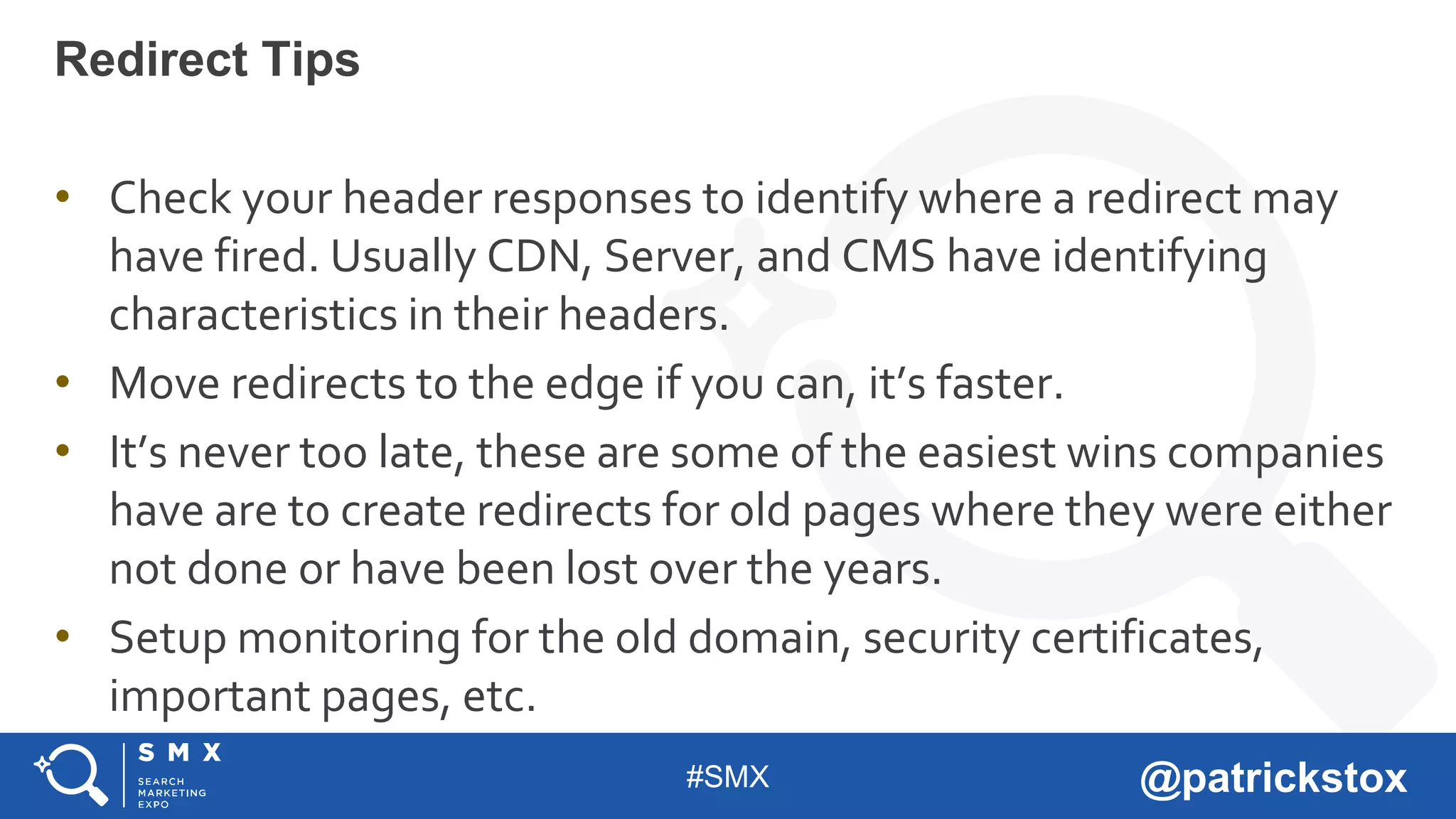

1. Make a detailed plan, involve the right people, set realistic goals and deadlines, and secure enough resources.

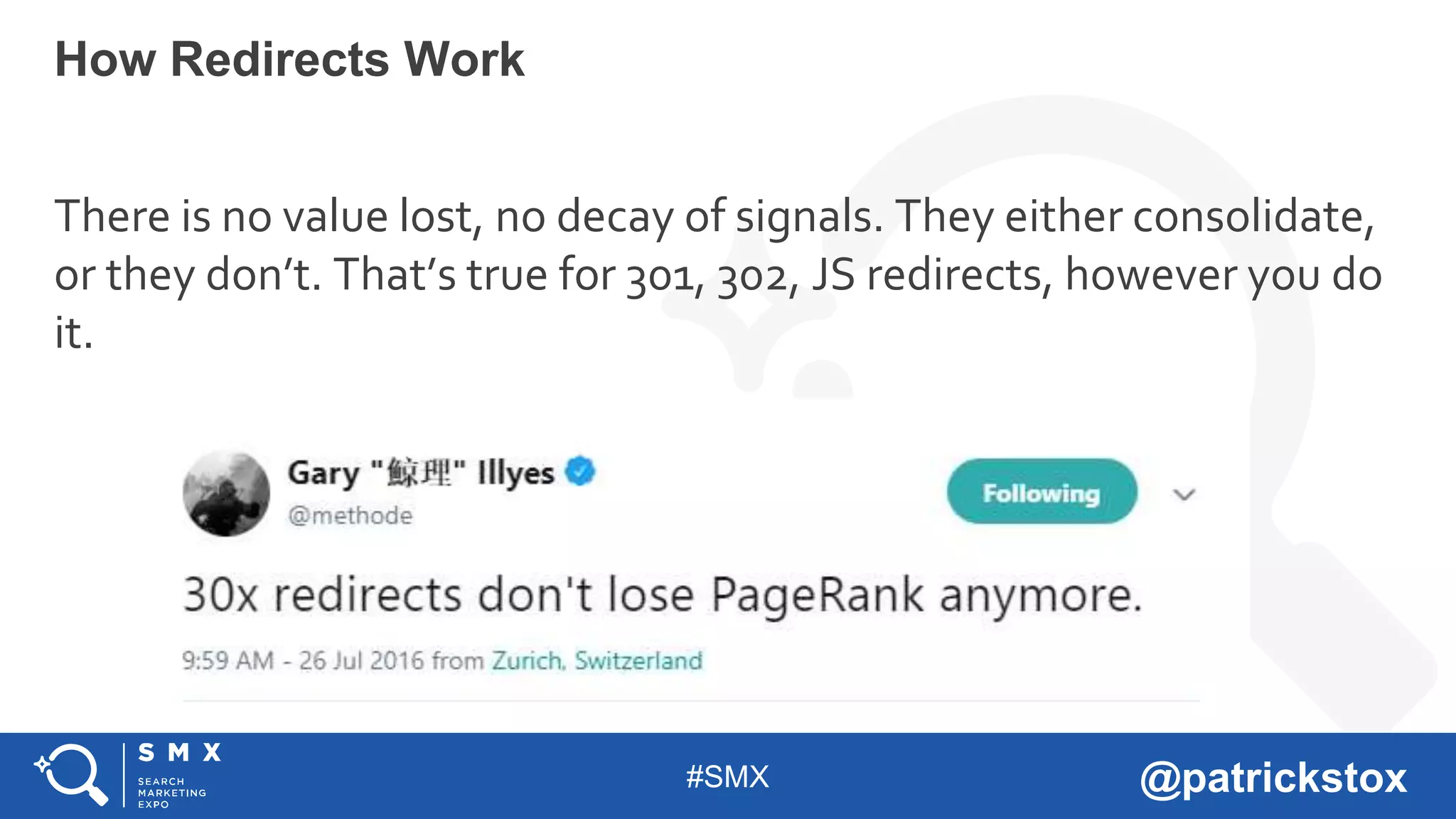

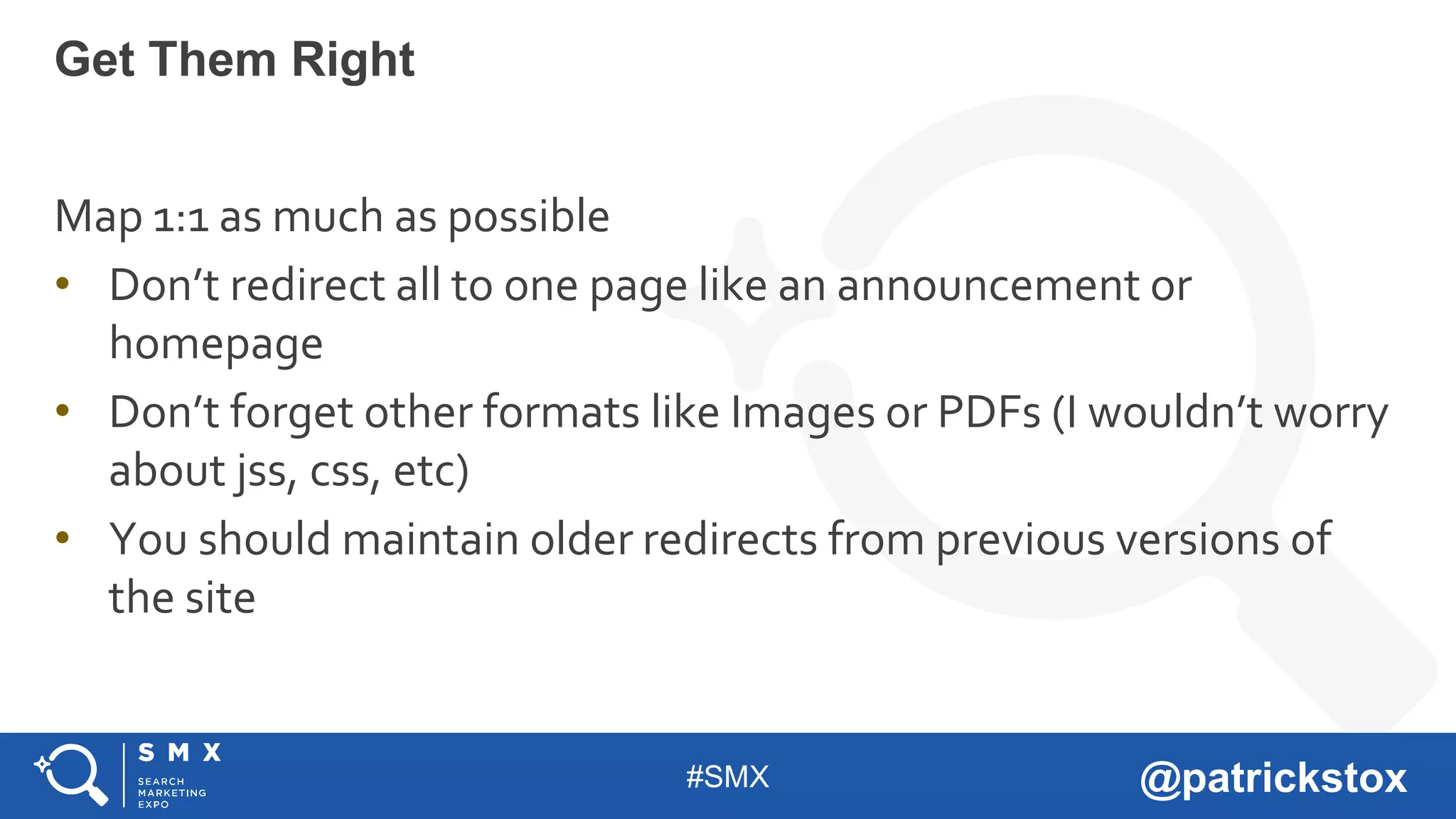

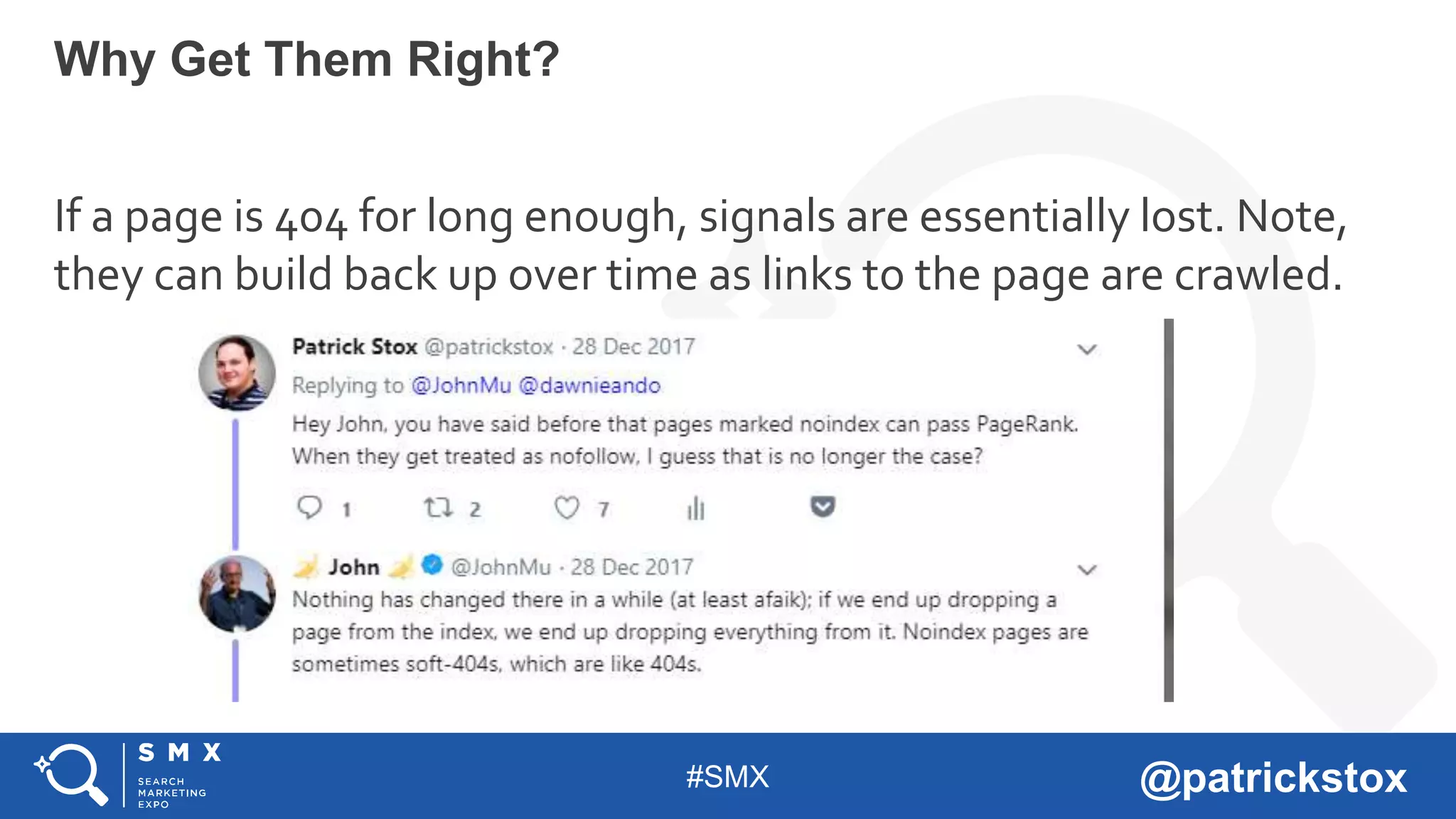

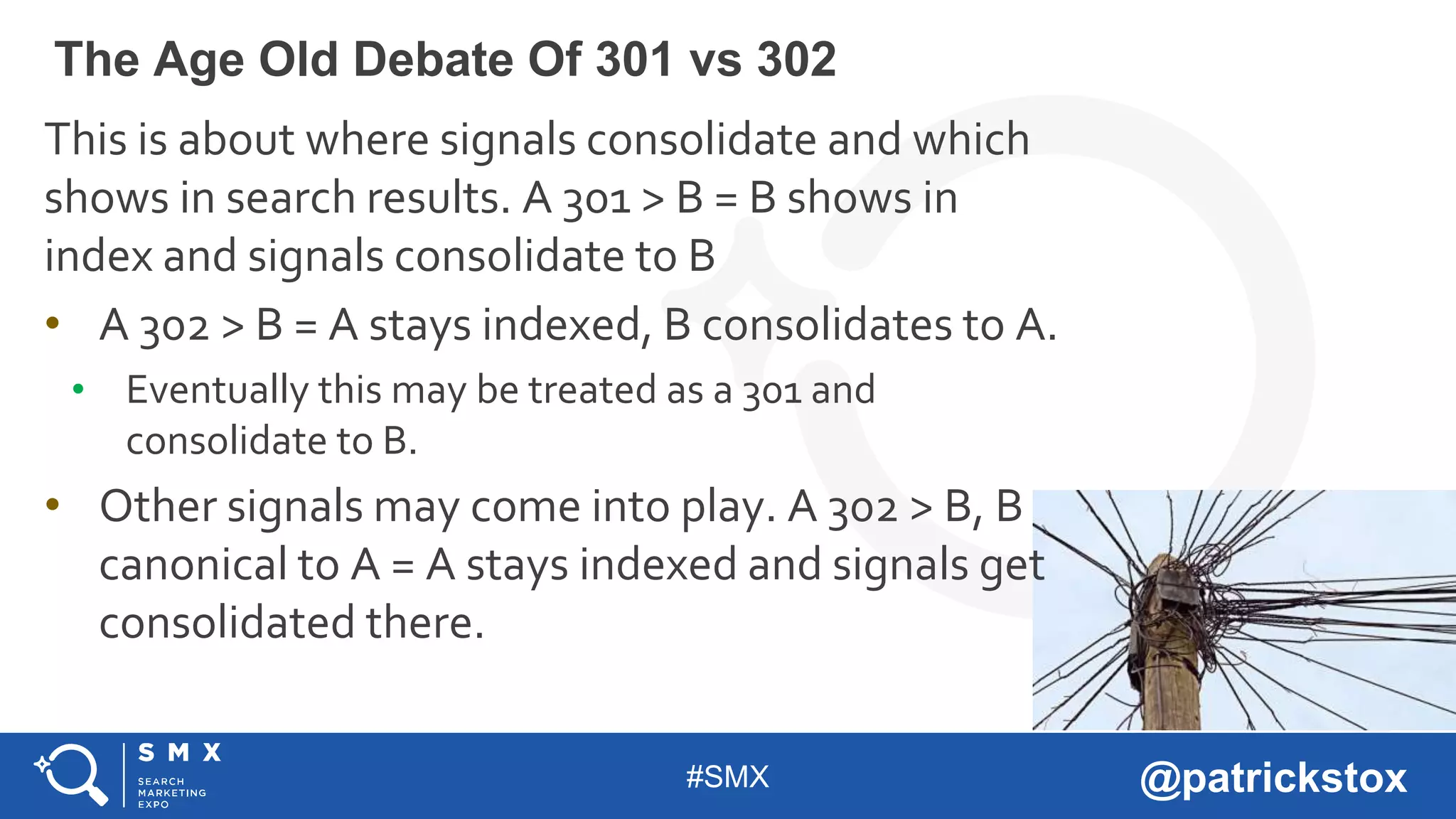

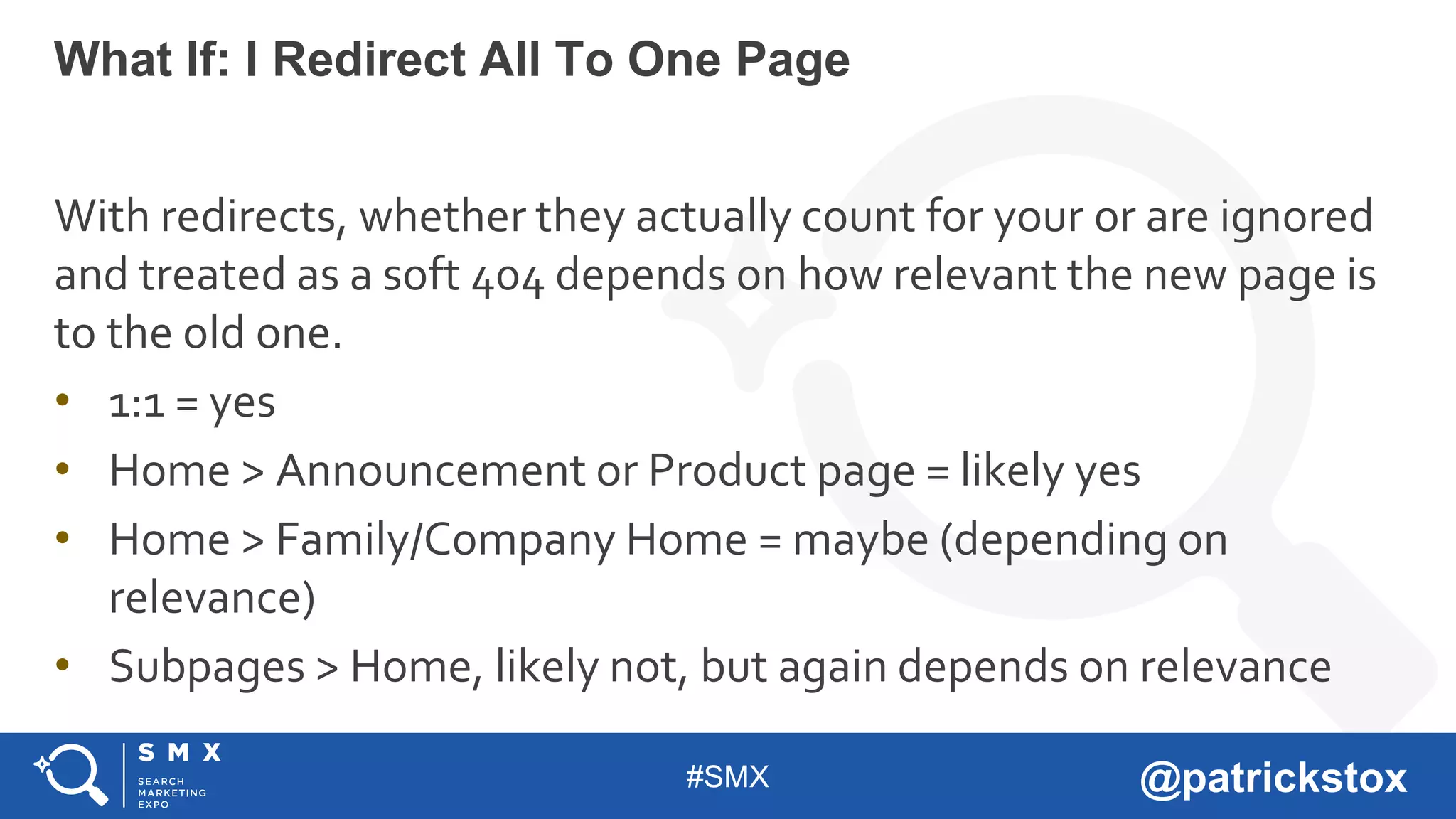

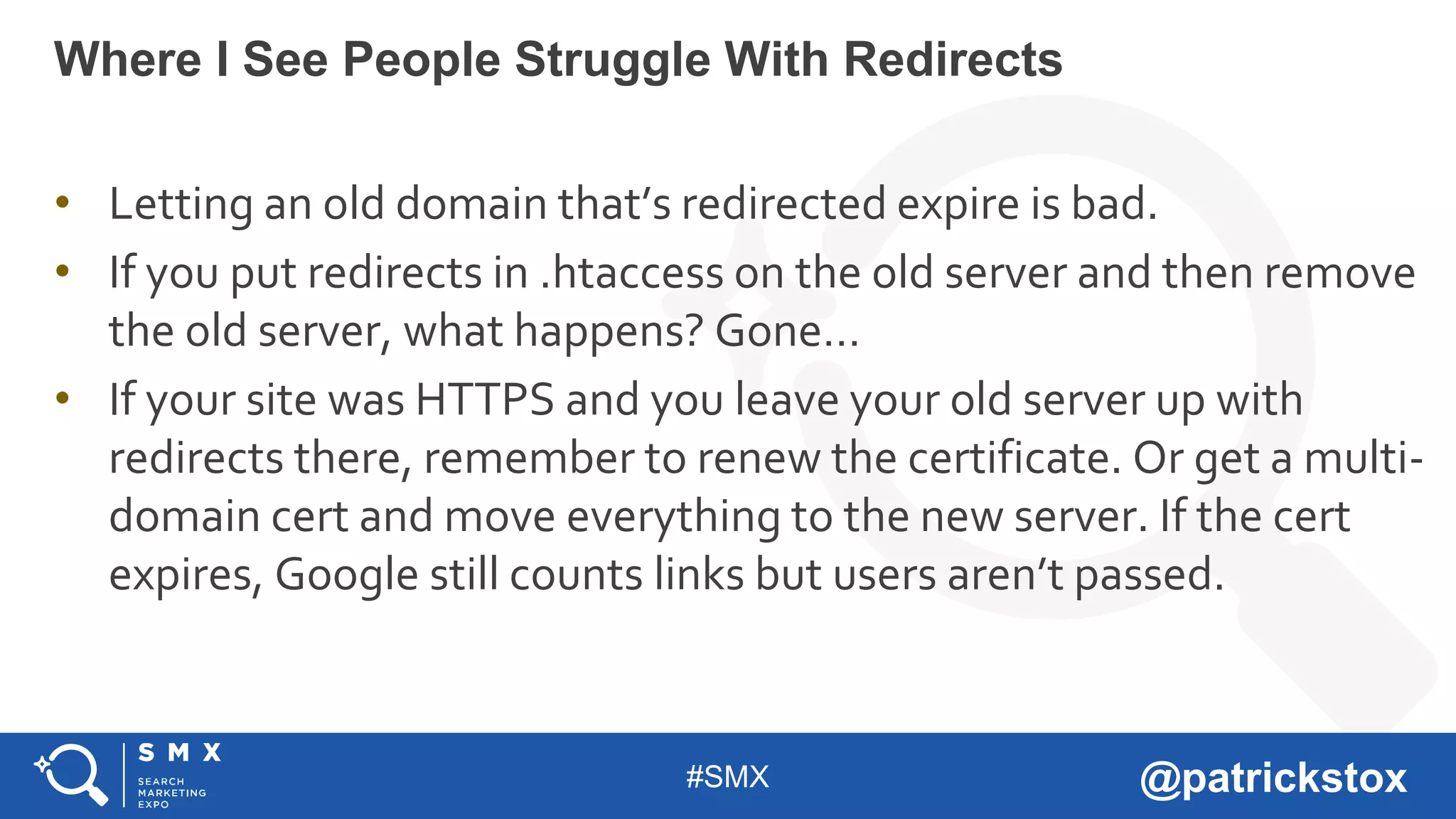

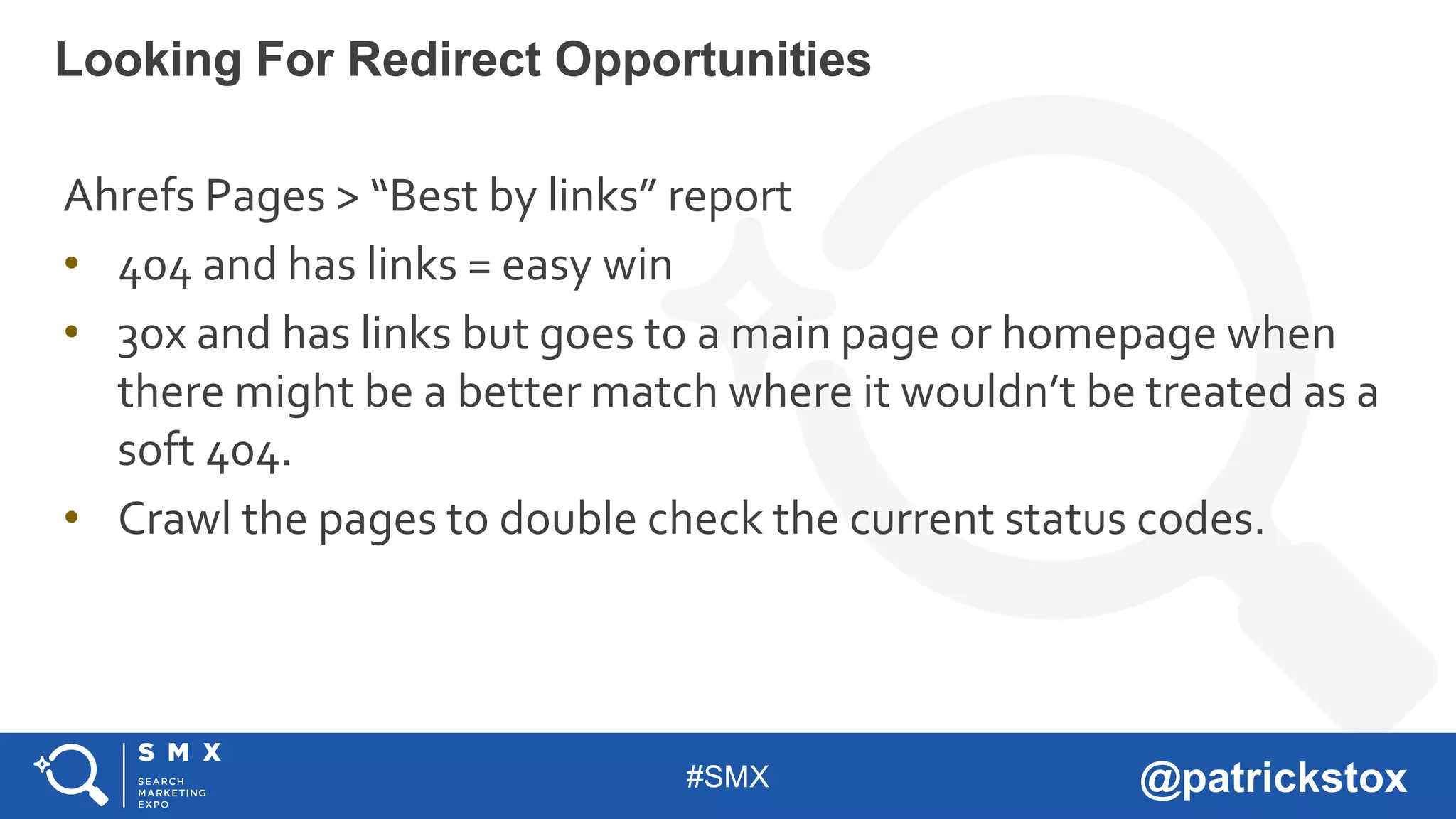

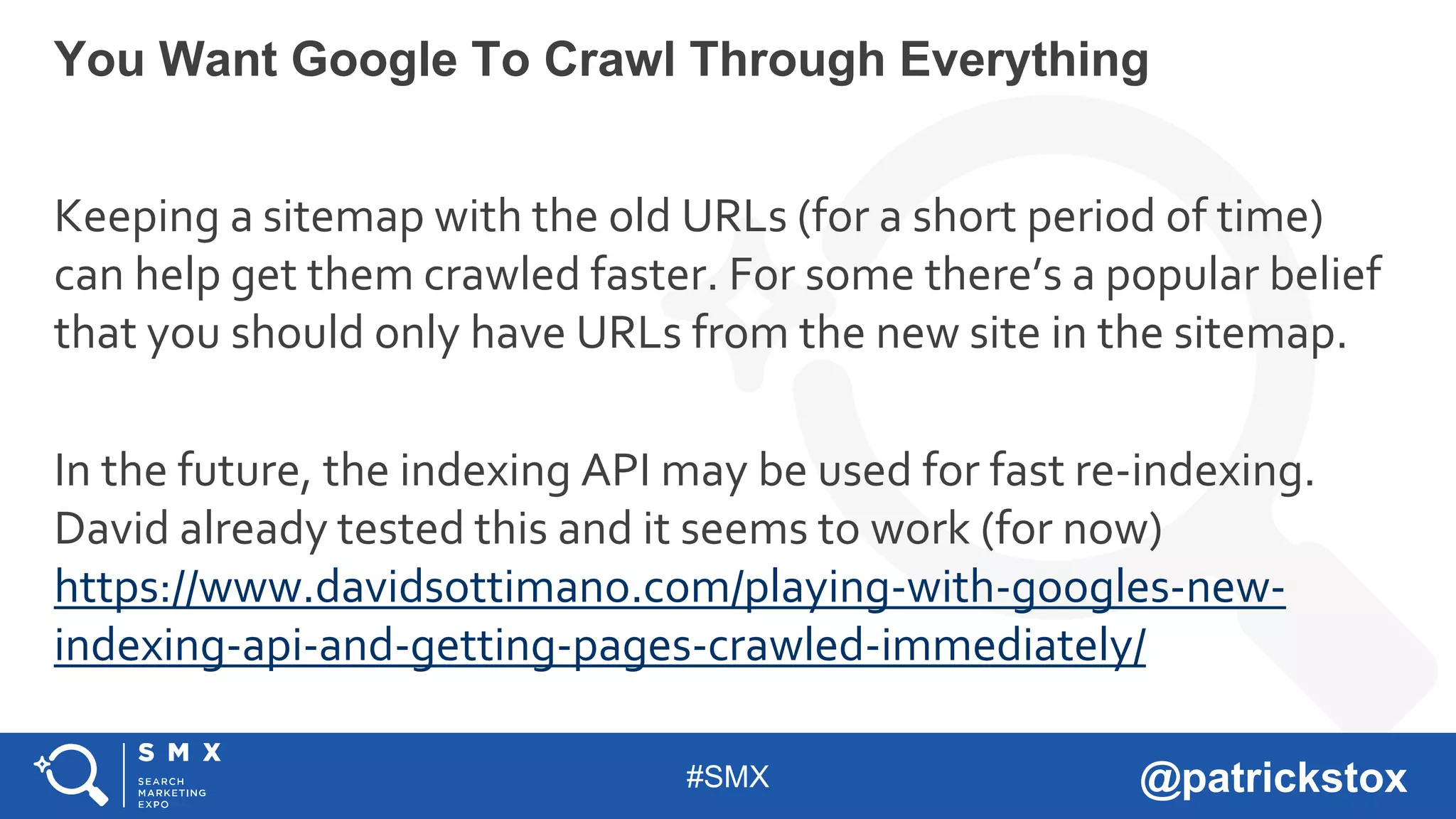

2. Expect some issues and don't panic; not everything needs to be perfect at launch. Make sure redirects are set up properly so that links and other signals consolidate to the new site.

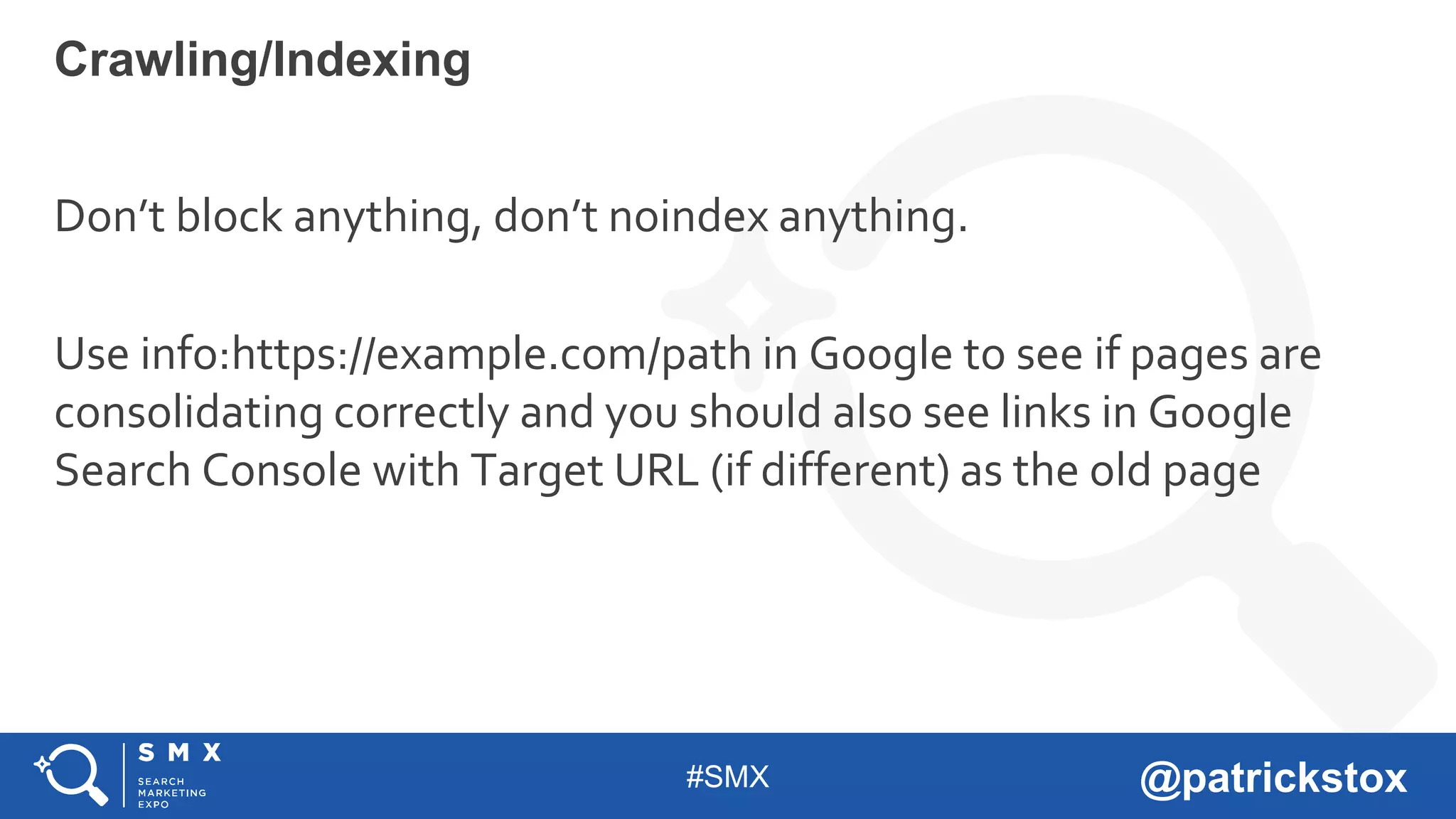

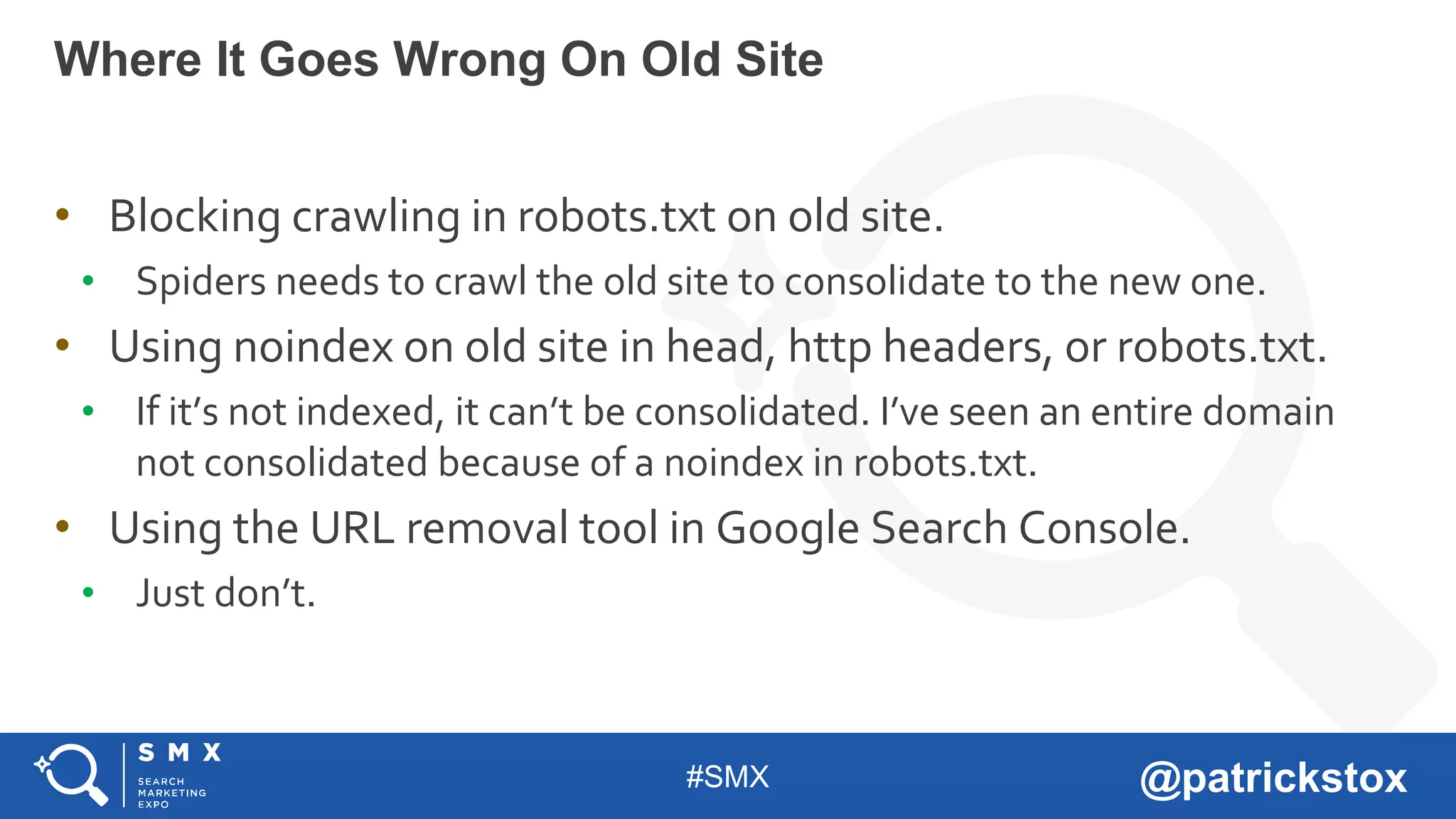

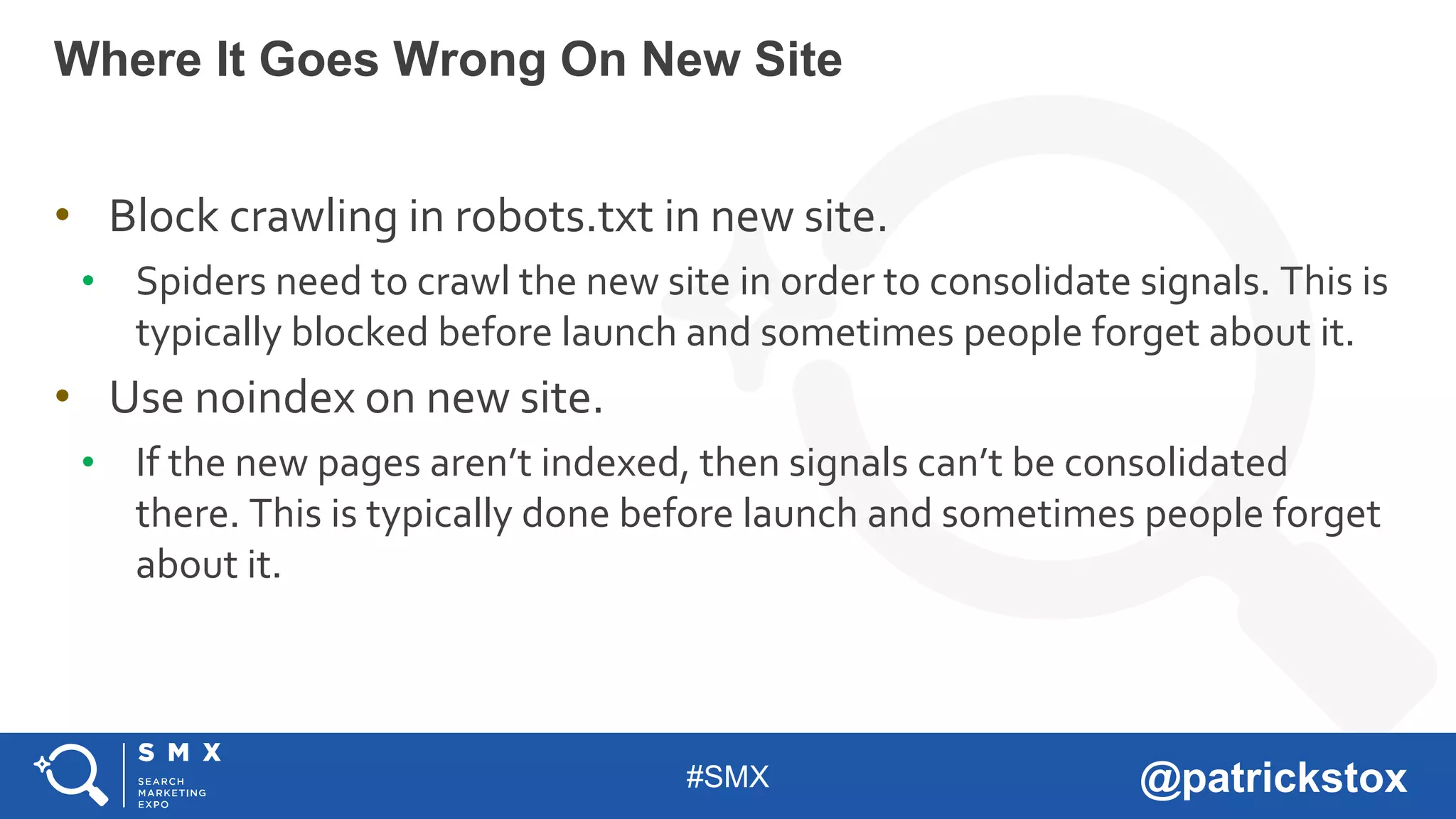

3. Monitor both the old and new sites to confirm they are being crawled and indexed properly, and that nothing is blocked or noindexed in a way that would prevent signals from consolidating. Review analytics as a whole rather than relying on a single metric.
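
The redirect and noindex checks in tips 2 and 3 can be partly automated. The following is a minimal sketch in Python, not a method taken from the talk. It assumes the redirect plan is available as a plain mapping of old paths to new paths (the `redirect_map` shape and all function names here are hypothetical) and that page HTML has already been fetched by some other means. It flags redirect loops and multi-hop chains, and detects a `noindex` directive in a robots meta tag. It does not check `X-Robots-Tag` headers or `robots.txt`, which would need separate handling.

```python
from html.parser import HTMLParser


def resolve_redirect(url, redirect_map, max_hops=10):
    """Follow url through redirect_map and return (final_url, hops).

    Raises ValueError on a loop or when max_hops is exceeded.
    """
    seen = [url]
    current = url
    while current in redirect_map:
        current = redirect_map[current]
        if current in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [current]))
        seen.append(current)
        if len(seen) - 1 > max_hops:
            raise ValueError(f"too many hops starting at {url}")
    return current, len(seen) - 1


def audit_redirects(redirect_map):
    """Return {old_url: problem} for loops and chains longer than one hop."""
    problems = {}
    for old_url in redirect_map:
        try:
            final_url, hops = resolve_redirect(old_url, redirect_map)
        except ValueError as exc:
            problems[old_url] = str(exc)
            continue
        if hops > 1:
            problems[old_url] = (
                f"chain of {hops} hops; point directly at {final_url}"
            )
    return problems


class _RobotsMetaParser(HTMLParser):
    """Records whether a robots/googlebot meta tag contains 'noindex'."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True


def has_noindex(html):
    """Return True if the HTML carries a noindex robots meta directive."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    return parser.noindex
```

For example, with a hypothetical map `{"/old-a": "/mid-a", "/mid-a": "/new-a"}`, `resolve_redirect("/old-a", ...)` returns `("/new-a", 2)`, and `audit_redirects` reports `/old-a` as a chain that should point directly at `/new-a`. Working from a static map keeps the check fast and repeatable before launch; verifying live HTTP status codes after launch is a separate step.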