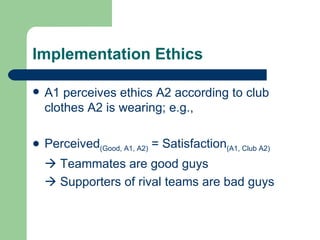

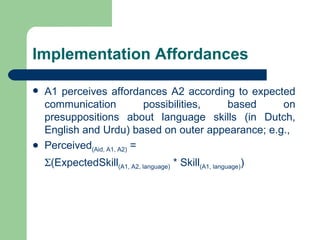

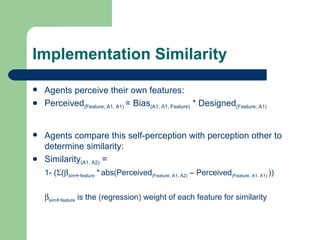

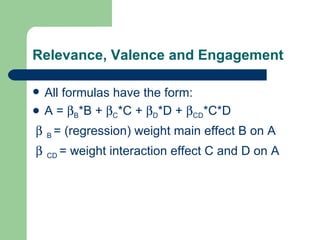

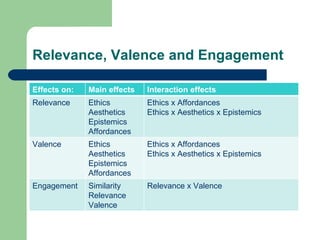

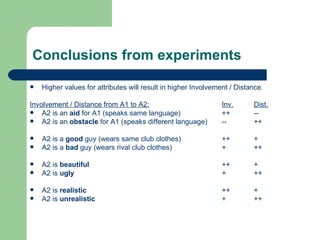

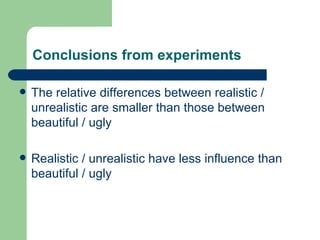

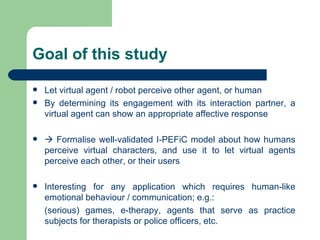

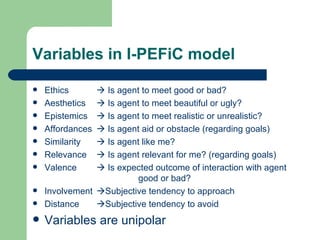

The document describes a study in which virtual soccer agents use the I-PEFiC model to perceive and evaluate one another based on their features. The I-PEFiC model considers the variables ethics, aesthetics, epistemics, affordances, and similarity. Each agent calculates perceived values for these variables and uses them to determine how relevant another agent is, what valence it has, and how engaged the agent is with it. In simulation experiments, agents felt more involvement with aids than with obstacles, and more involvement with agents of the same team or appearance. The model thus gives virtual agents a way to perceive each other that resembles how humans perceive other characters.

![Implementation: Agents perceive each other's features (good, bad, realistic, and unrealistic) using a designed value in [0, 1] (what the average agent would think) and a personal bias in [0, 2]; e.g., Perceived(Beautiful, A1, A2) = Bias(A1, A2, Beautiful) * Designed(Beautiful, A2)](https://image.slidesharecdn.com/waipresentatiemei2008-111208031552-phpapp02/85/A-Robot-s-Experience-of-Another-Robot-Simulation-7-320.jpg)
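
The perception rule on the implementation slide can be illustrated with a minimal Python sketch. Only the formula (perceived value = bias × designed value) and the two ranges come from the slide. The function name `perceived`, the dictionary layout, the range checks, and the neutral default bias of 1.0 are assumptions made for illustration, not the authors' implementation.

```python
# Sketch of: Perceived(F, A1, A2) = Bias(A1, A2, F) * Designed(F, A2)
# Data layout and the default bias are hypothetical, chosen for illustration.

NEUTRAL_BIAS = 1.0  # assumption: an observer without a stored bias is neutral


def perceived(feature, observer, target, designed, bias):
    """Return how `observer` perceives `feature` of `target`.

    designed: maps (feature, target) -> designed value in [0, 1]
    bias:     maps (observer, target, feature) -> personal bias in [0, 2]
    """
    d = designed[(feature, target)]
    b = bias.get((observer, target, feature), NEUTRAL_BIAS)
    if not 0.0 <= d <= 1.0:
        raise ValueError(f"designed value {d} is outside [0, 1]")
    if not 0.0 <= b <= 2.0:
        raise ValueError(f"bias {b} is outside [0, 2]")
    return b * d


# Example: A2 is designed as moderately beautiful, and A1 is biased in its favor.
designed = {("Beautiful", "A2"): 0.5}
bias = {("A1", "A2", "Beautiful"): 1.5}

value_a1 = perceived("Beautiful", "A1", "A2", designed, bias)  # 1.5 * 0.5
value_a3 = perceived("Beautiful", "A3", "A2", designed, bias)  # no bias stored
```

Because the bias ranges over [0, 2], a perceived value can exceed the designed value (bias above 1) or fall below it (bias below 1), and the product itself lies in [0, 2]. The slide does not say whether the result is clamped, so this sketch leaves it unclamped.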