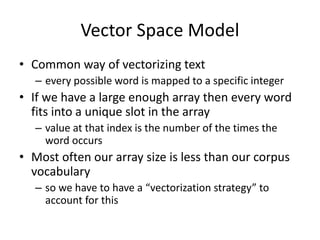

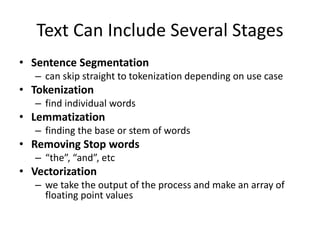

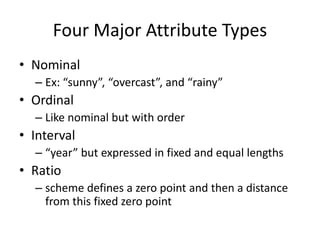

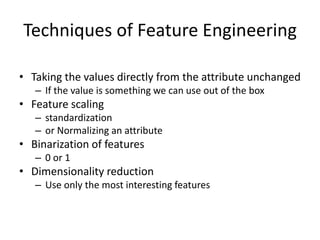

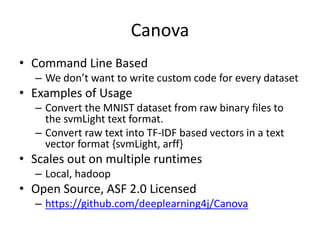

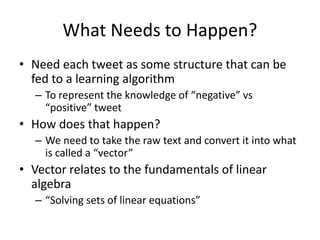

This document discusses vectorization: the process of converting raw data such as text into numerical feature vectors that machine learning algorithms can consume. It covers the vector space model for text, in which each unique word is mapped to an index in a vector and the value at that index is the word's count. It explains common text vectorization strategies: bag-of-words, TF-IDF, and kernel hashing (the hashing trick). It also surveys general vectorization techniques for nominal, ordinal, interval, and ratio attributes, along with feature engineering methods and the Canova tool.
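The paragraph above names three text strategies. The minimal Python sketch below makes each one concrete. It assumes lowercase whitespace tokenization, plain `log(N / df)` IDF weighting, and CRC-32 as the hash function. These choices and all function names are illustrative assumptions, not the deck's or Canova's API. Real libraries often use smoothed IDF and signed-hashing variants.

```python
import math
import zlib


def build_vocabulary(docs):
    """Assign each unique (lowercased, whitespace-split) word the next free index."""
    vocab = {}
    for doc in docs:
        for word in doc.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def bag_of_words(doc, vocab):
    """Vector space model: the value at a word's index is its count in the doc."""
    vec = [0.0] * len(vocab)
    for word in doc.lower().split():
        if word in vocab:  # words not seen when building the vocabulary are dropped
            vec[vocab[word]] += 1.0
    return vec


def tf_idf(docs, vocab):
    """Weight each raw count by log(N / df), one simple (unsmoothed) TF-IDF variant."""
    counts = [bag_of_words(d, vocab) for d in docs]
    n = len(docs)
    # df[i] = number of documents that contain the word at index i
    df = [sum(1 for c in counts if c[i] > 0) for i in range(len(vocab))]
    idf = [math.log(n / dfi) if dfi else 0.0 for dfi in df]
    return [[tf * w for tf, w in zip(c, idf)] for c in counts]


def hashed_vector(doc, dim=16):
    """Hashing trick: no vocabulary; index = hash(word) mod dim, so collisions can occur."""
    vec = [0.0] * dim
    for word in doc.lower().split():
        # zlib.crc32 is stable across runs, unlike Python's salted built-in hash() for str
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0
    return vec


docs = ["the cat sat", "the dog sat", "the cat ran"]
vocab = build_vocabulary(docs)
print(bag_of_words("the cat sat", vocab))  # [1.0, 1.0, 1.0, 0.0, 0.0]
print(tf_idf(docs, vocab)[0])  # "the" occurs in every doc, so its weight is 0.0
print(hashed_vector("the cat sat"))  # 16 slots; the counts sum to 3.0
```

The trade-off is visible in the signatures. Bag-of-words and TF-IDF need a vocabulary built in advance. Hashing needs none, but distinct words may collide in the same index.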

![Wait. What’s a Vector Again?](https://image.slidesharecdn.com/gatechvectorization20150323-150325193643-conversion-gate01/85/Vectorization-Georgia-Tech-CSE6242-March-2015-7-320.jpg)

**Wait. What’s a Vector Again?**

- An array of floating point numbers
- Represents data:
  - Text
  - Audio
  - Image
- Example: `[ 1.0, 0.0, 1.0, 0.5 ]`
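The slide's point is that text, audio, and images all end up as the same kind of object: a flat array of floats. The minimal Python sketch below illustrates this. It assumes 8-bit grayscale pixels, signed 16-bit PCM audio samples, and a small hand-picked vocabulary for text. These input formats and the function names are hypothetical choices for illustration, not taken from the slides.

```python
def image_to_vector(pixels):
    """Flatten a 2-D grid of 8-bit grayscale values (assumed 0-255) into floats in [0, 1]."""
    return [p / 255.0 for row in pixels for p in row]


def audio_to_vector(samples):
    """Scale signed 16-bit PCM samples (assumed -32768..32767) to floats in [-1, 1)."""
    return [s / 32768.0 for s in samples]


def text_to_vector(text, vocab):
    """Binary presence vector over a fixed, hypothetical vocabulary list."""
    words = set(text.lower().split())
    return [1.0 if w in words else 0.0 for w in vocab]


print(image_to_vector([[0, 255], [51, 102]]))  # [0.0, 1.0, 0.2, 0.4]
print(audio_to_vector([0, 16384, -32768]))  # [0.0, 0.5, -1.0]
print(text_to_vector("the cat sat", ["cat", "dog", "sat"]))  # [1.0, 0.0, 1.0]
```

Scaling pixels and samples into a small fixed range is a common choice, so that no single input type dominates the magnitudes a learning algorithm sees.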