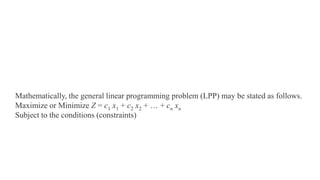

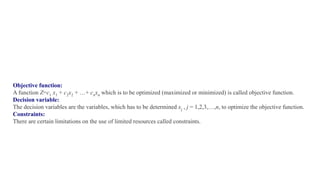

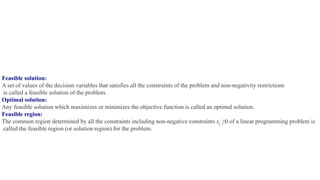

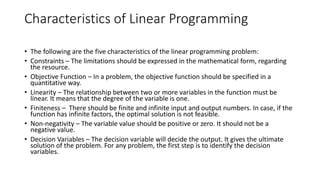

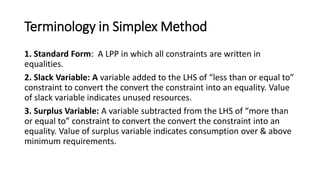

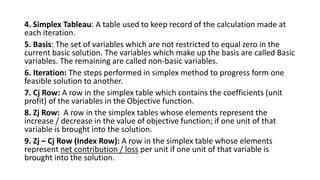

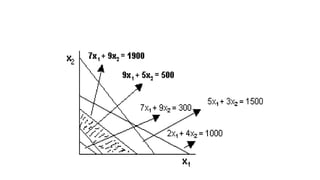

Linear programming is an optimization method for maximizing or minimizing a linear objective function subject to linear constraints, which may be equalities or inequalities. It is applied in areas such as engineering, manufacturing, and transportation optimization. Problems are commonly solved with the simplex method or, when there are only two decision variables, with the graphical method. Its main characteristics are linearity of the objective function and constraints, a finite number of decision variables, and non-negativity of those variables. Solving a problem means identifying the decision variables and finding an optimal solution within the feasible region.
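The idea behind the graphical method can be sketched in code. The sketch assumes two decision variables, constraints of the form a·x + b·y ≤ rhs, implicit non-negativity (x, y ≥ 0), and a nonempty, bounded feasible region. Under those assumptions, if an optimum exists it is attained at a vertex of the feasible region. The function intersects every pair of boundary lines, keeps the feasible intersection points (the vertices), and evaluates the objective at each one. This is vertex enumeration, not the simplex method, and the function name `solve_2d_lp` and the example data are hypothetical illustrations.

```python
from fractions import Fraction
from itertools import combinations


def solve_2d_lp(c, constraints):
    """Maximize c[0]*x + c[1]*y subject to a*x + b*y <= rhs for each (a, b, rhs).

    Hypothetical helper: x >= 0 and y >= 0 are added automatically, and the
    feasible region is assumed to be nonempty and bounded.
    Returns (z, x, y) for the best vertex, or None if no vertex is feasible.
    """
    # Exact rational arithmetic avoids floating-point feasibility errors.
    rows = [tuple(Fraction(v) for v in row) for row in constraints]
    # Non-negativity written as -x <= 0 and -y <= 0.
    rows += [(Fraction(-1), Fraction(0), Fraction(0)),
             (Fraction(0), Fraction(-1), Fraction(0))]

    def feasible(x, y):
        return all(a * x + b * y <= rhs for a, b, rhs in rows)

    best = None
    for (a1, b1, r1), (a2, b2, r2) in combinations(rows, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel boundary lines have no single intersection
        # Cramer's rule: intersection of a1*x + b1*y = r1 and a2*x + b2*y = r2.
        x = (r1 * b2 - r2 * b1) / det
        y = (a1 * r2 - a2 * r1) / det
        if feasible(x, y):  # only feasible intersections are vertices
            z = c[0] * x + c[1] * y
            if best is None or z > best[0]:
                best = (z, x, y)
    return best


# Example: maximize z = 3x + 5y subject to x <= 4, 2y <= 12, 3x + 2y <= 18.
z, x, y = solve_2d_lp((3, 5), [(1, 0, 4), (0, 2, 12), (3, 2, 18)])
print(z, x, y)  # 36 2 6
```

Checking every pair of boundary lines grows quadratically with the number of constraints, which is fine for small textbook problems. Larger problems are usually handed to a simplex or interior-point solver instead.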