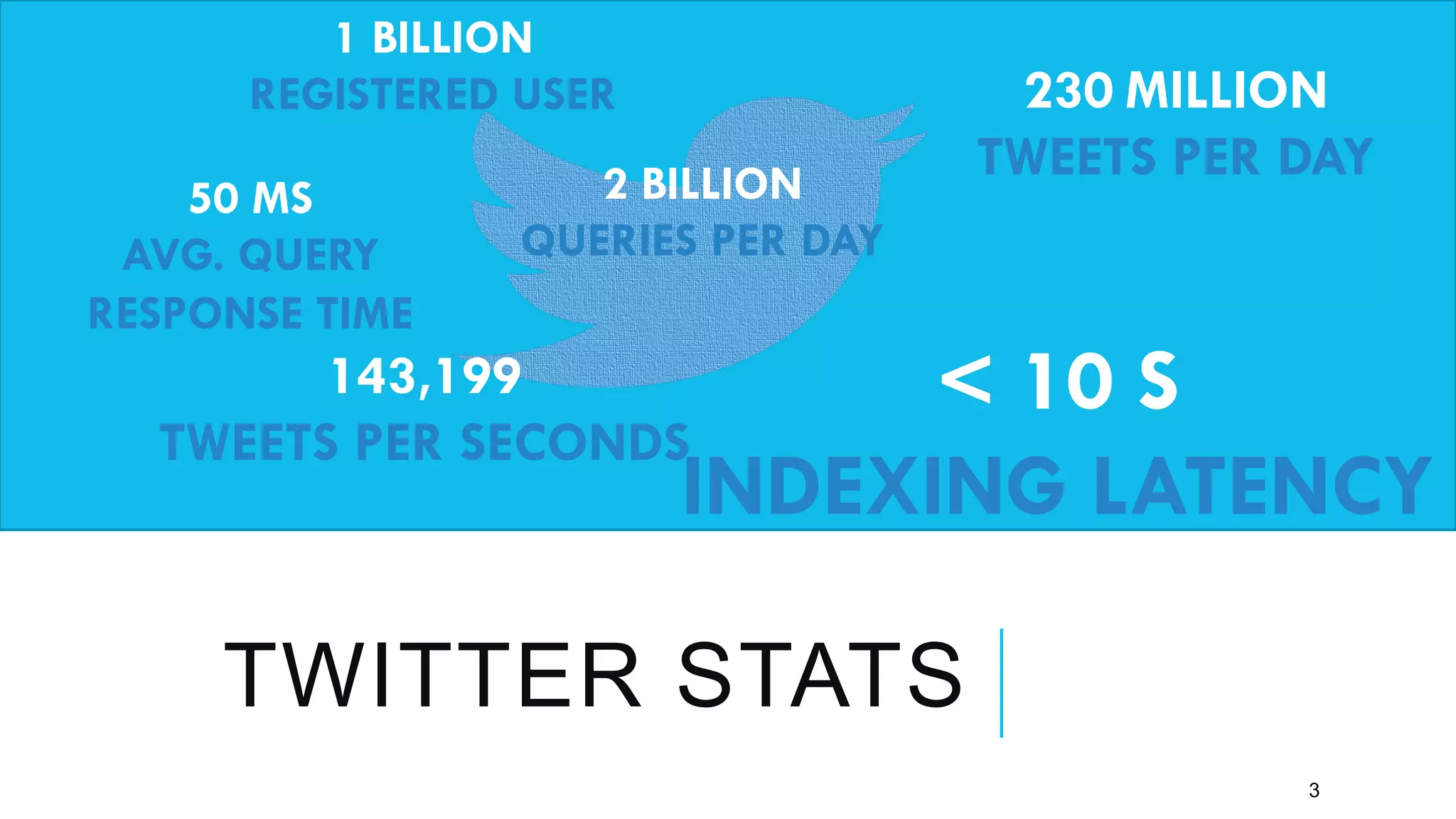

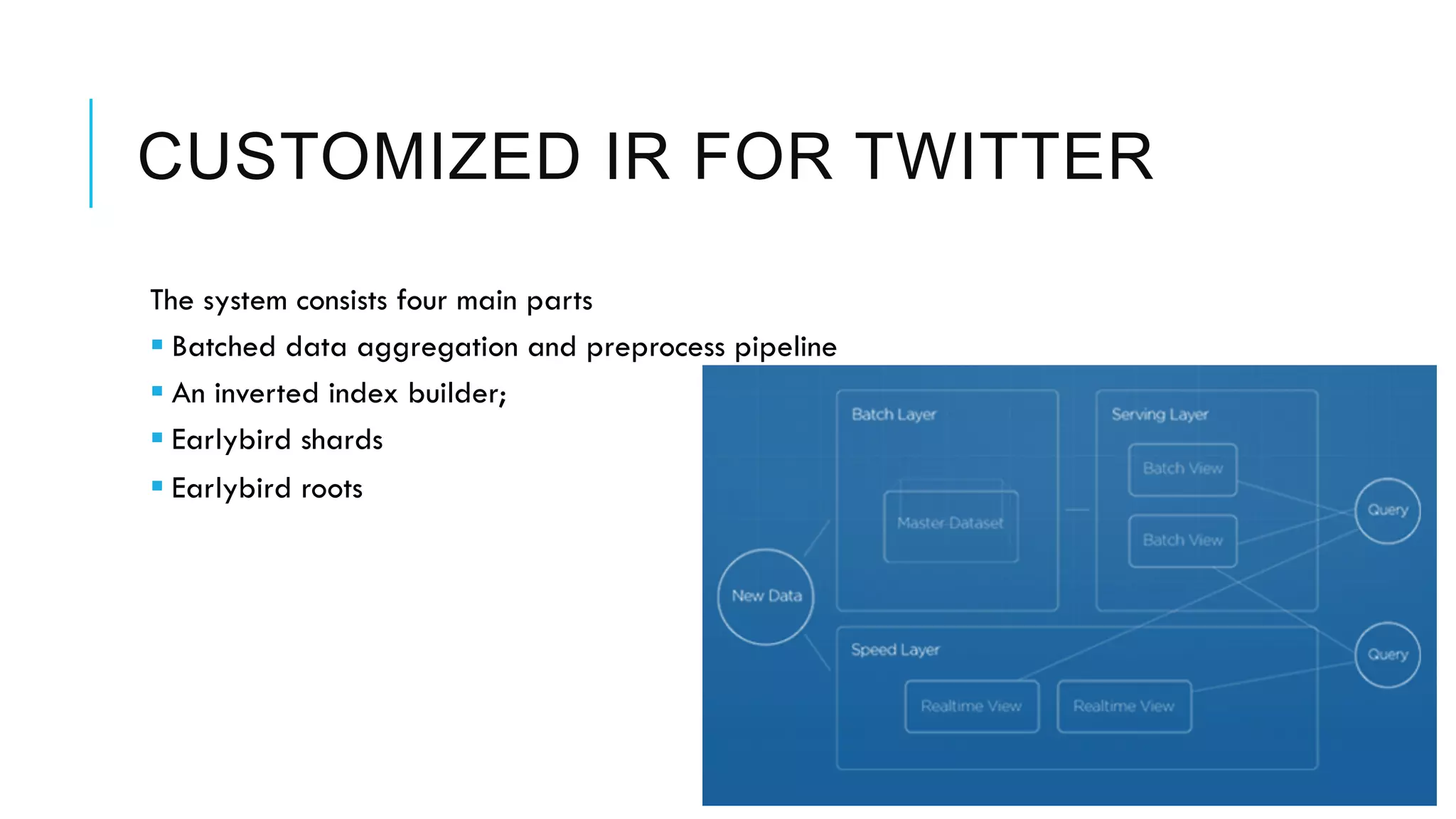

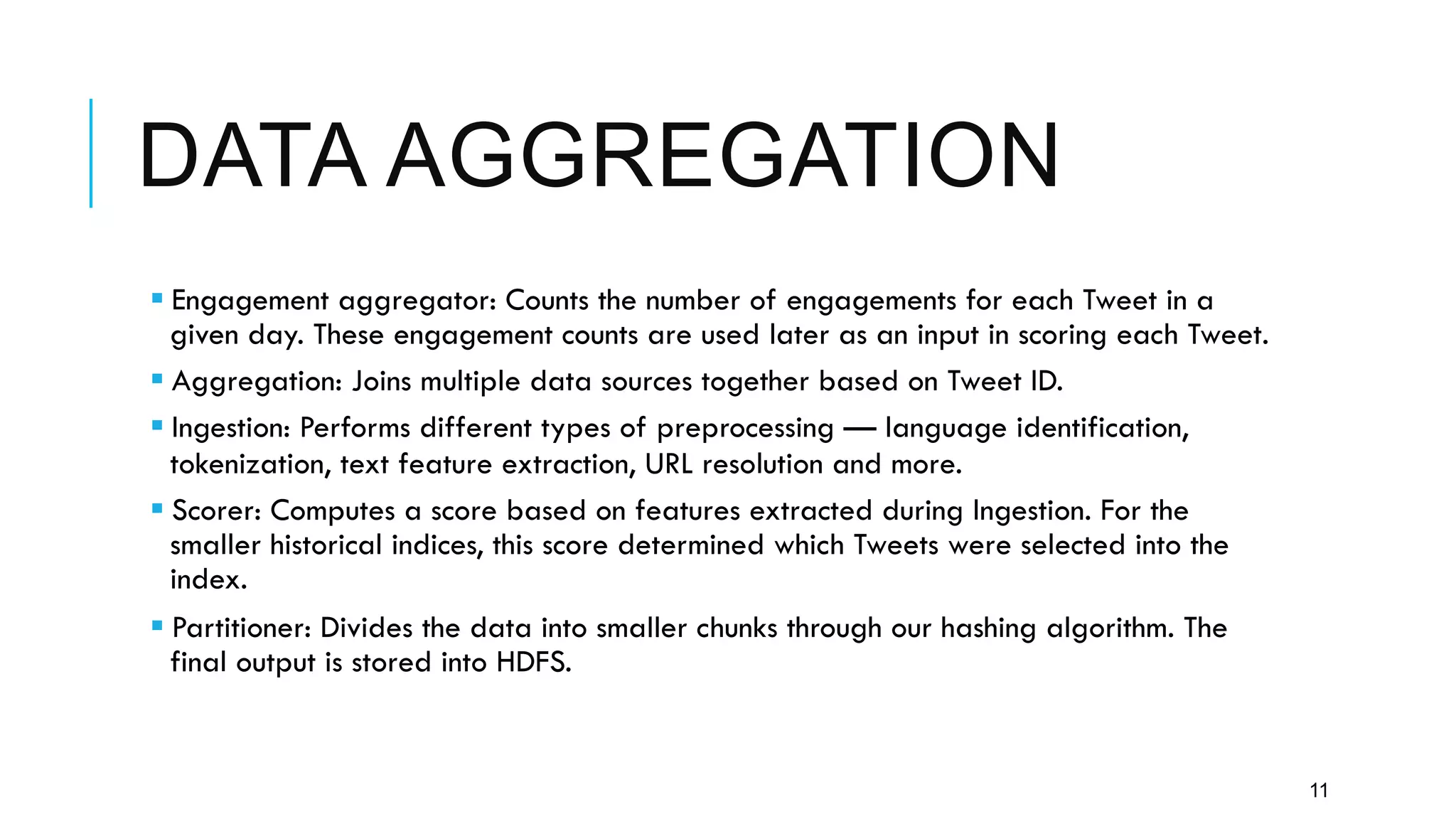

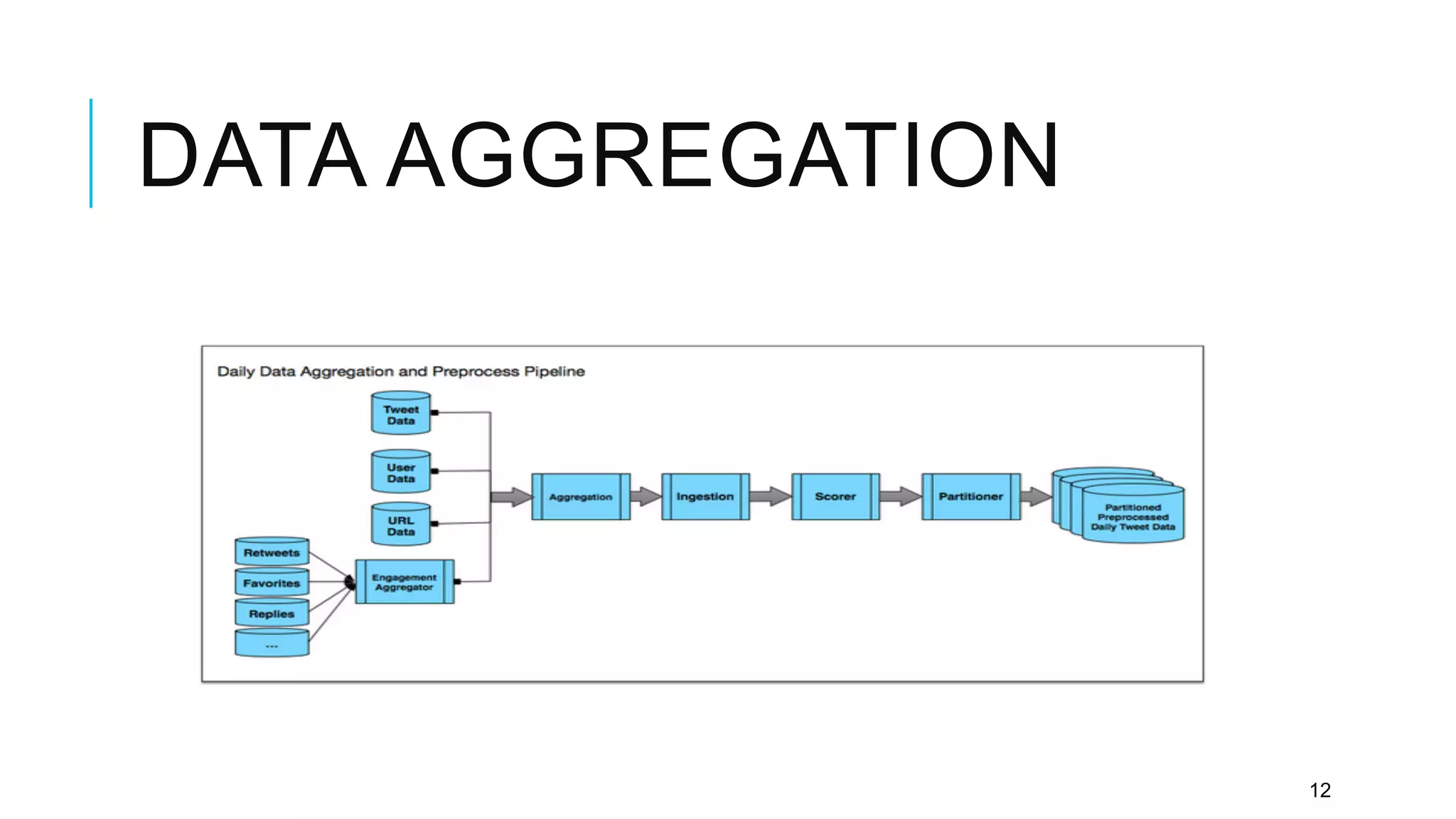

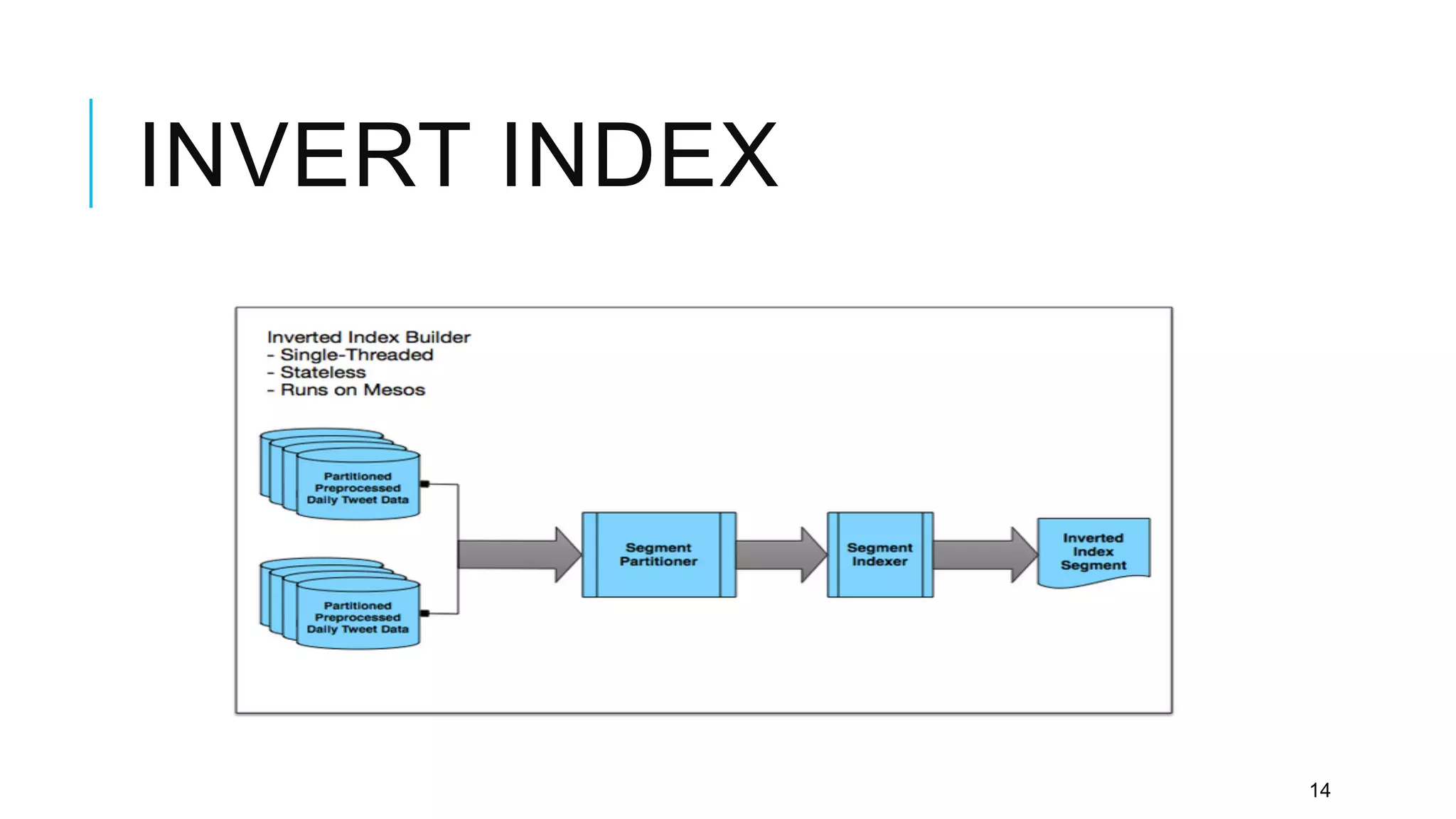

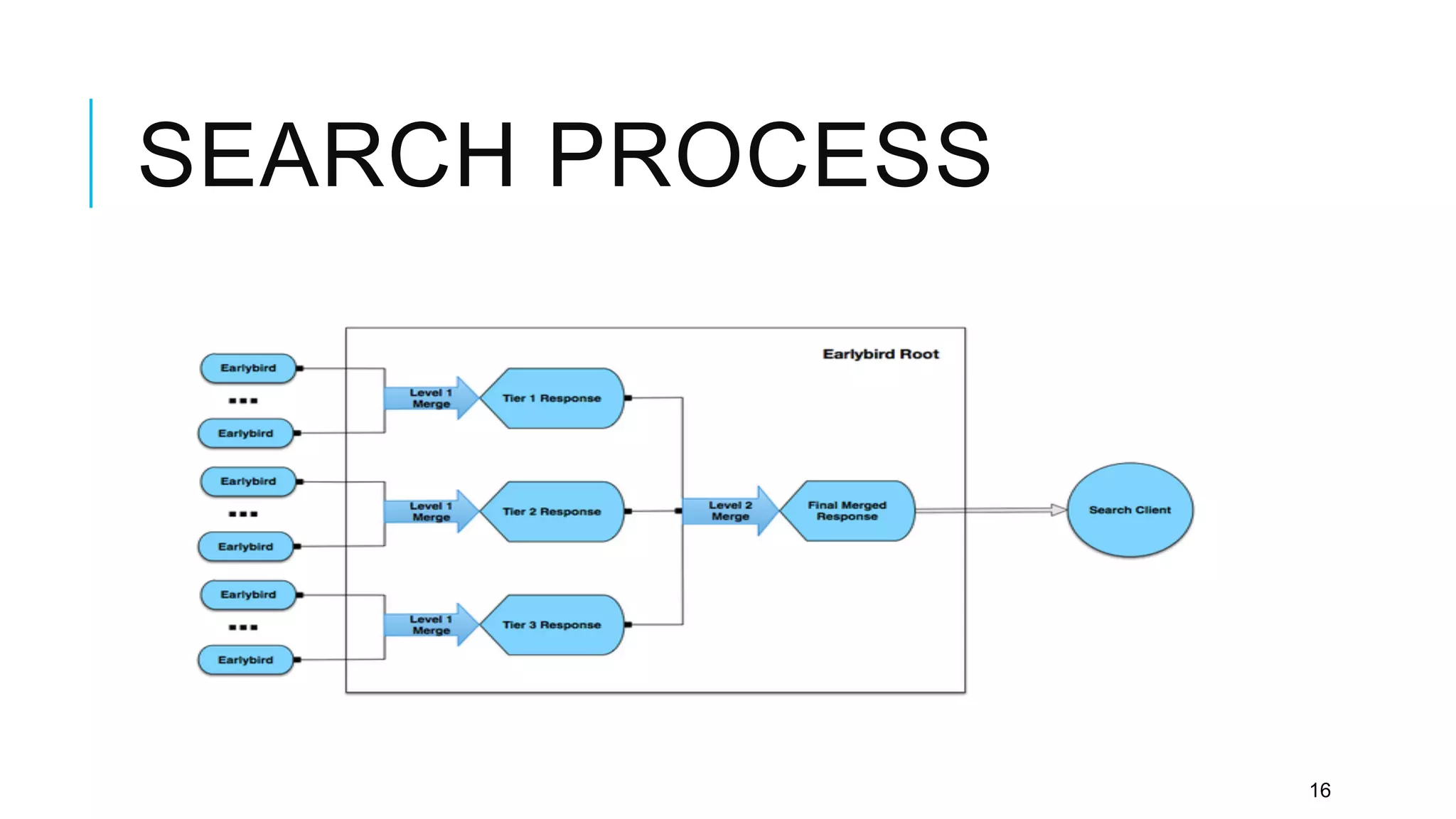

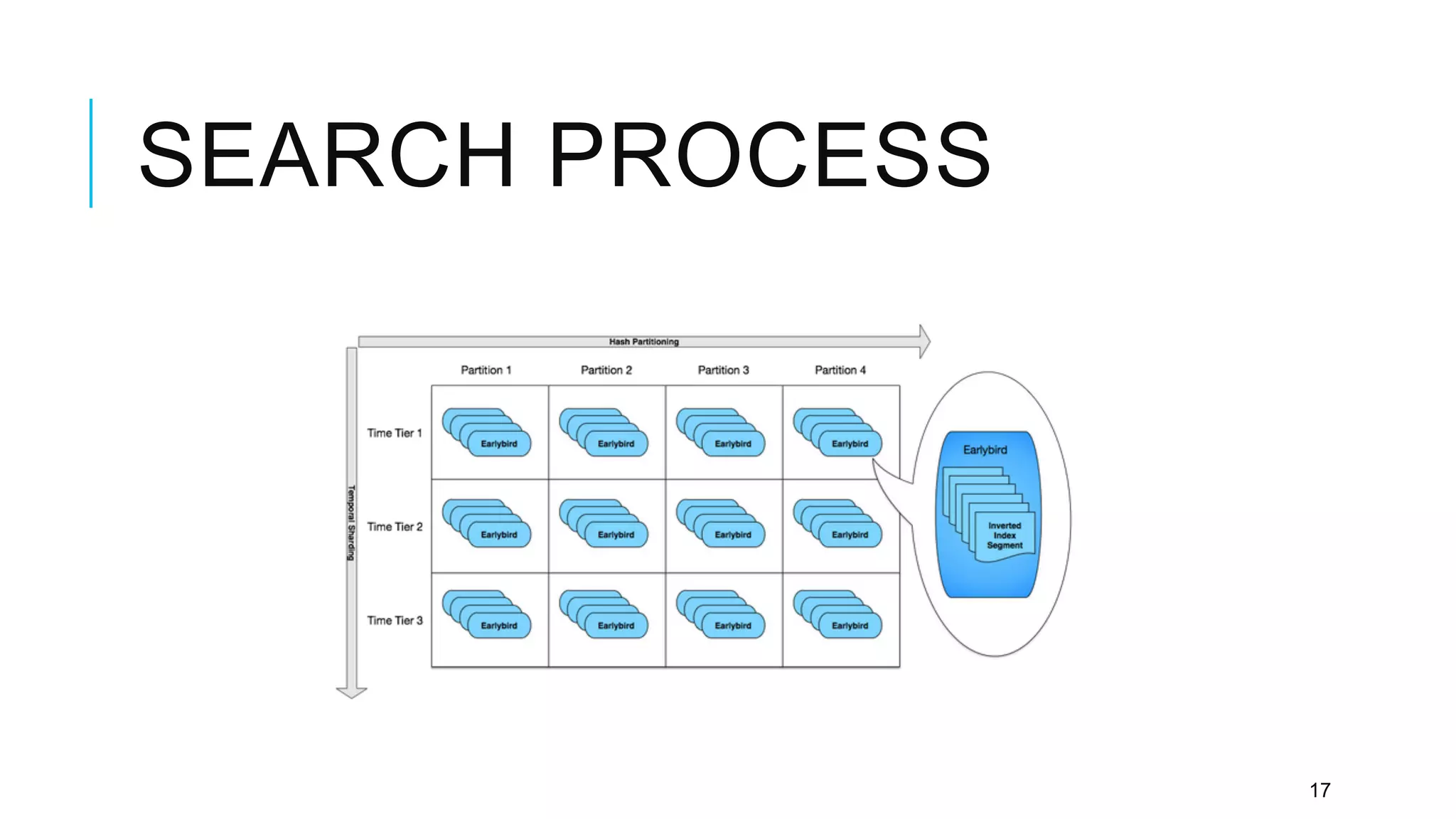

Twitter is a platform for user-generated content in the form of short messages called tweets. It handles a massive volume of data: over 230 million tweets and 2 billion search queries per day. To operate at this scale, Twitter developed a custom search and indexing system. The design is modular, which keeps it scalable and cost-effective and allows for incremental development. Its components crawl Twitter data, preprocess and aggregate tweets, build an inverted index, and distribute that index across server machines for low-latency search.
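To make the last two components more concrete, here is a minimal sketch of an inverted index partitioned across several shards, with queries fanned out to every shard and the results merged. It is an illustration only, not Twitter's actual implementation. The names `tokenize`, `IndexShard`, and `ShardedIndex`, the modulo-based partitioning, and the newest-first ordering (which assumes larger tweet IDs are more recent) are all assumptions made for this example.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase a tweet and split it into terms (hypothetical preprocessing step)."""
    return re.findall(r"[a-z0-9#@_]+", text.lower())


class IndexShard:
    """One partition of the index: maps each term to the set of tweet IDs containing it."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, tweet_id, text):
        for term in tokenize(text):
            self.postings[term].add(tweet_id)

    def search(self, query):
        """Return IDs of tweets in this shard that contain every query term."""
        terms = tokenize(query)
        if not terms:
            return set()
        result = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            result &= self.postings.get(term, set())
        return result


class ShardedIndex:
    """Distributes tweets over shards by tweet ID (assumed partitioning scheme)."""

    def __init__(self, num_shards):
        self.shards = [IndexShard() for _ in range(num_shards)]

    def add(self, tweet_id, text):
        self.shards[tweet_id % len(self.shards)].add(tweet_id, text)

    def search(self, query):
        # Fan the query out to every shard, then merge the partial results.
        hits = set()
        for shard in self.shards:
            hits |= shard.search(query)
        # Assumption: larger IDs are newer, so this orders results newest first.
        return sorted(hits, reverse=True)


index = ShardedIndex(num_shards=3)
index.add(1, "Search at scale")
index.add(2, "Inverted index search")
index.add(3, "scale the index")
print(index.search("search"))
print(index.search("index scale"))
```

Because each tweet lives in exactly one shard, a multi-term query can be evaluated independently on every shard and the partial results simply unioned. In a real deployment the shards would sit on separate machines and be queried in parallel, which is where the low latency would come from.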