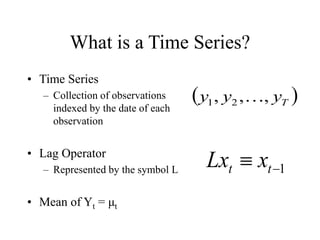

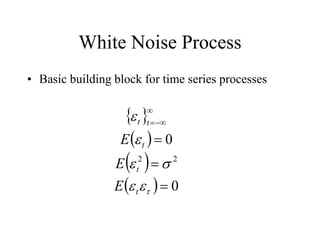

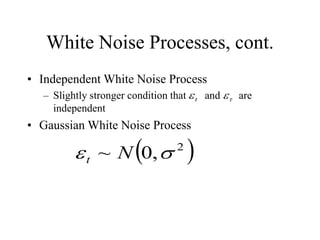

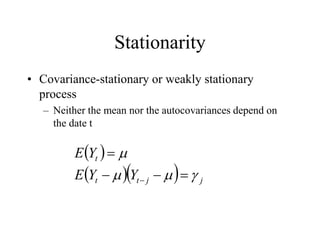

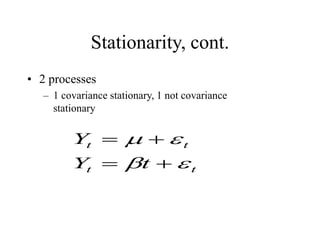

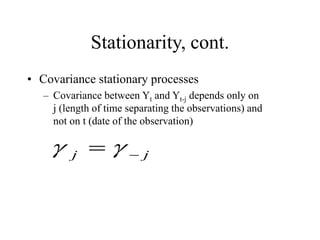

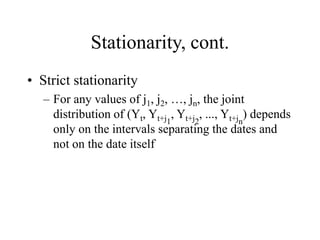

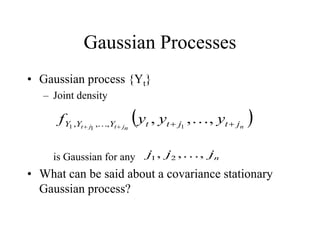

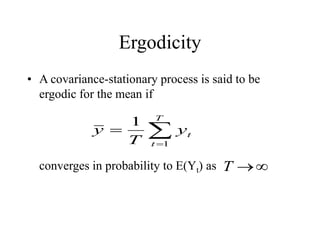

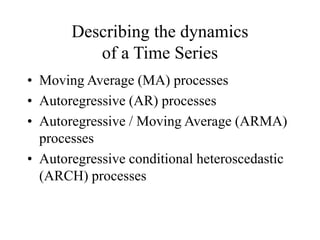

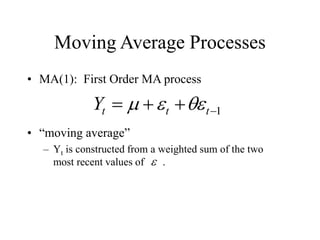

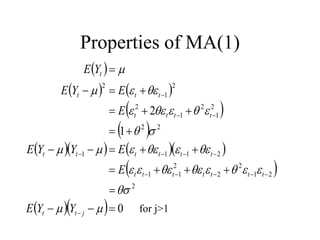

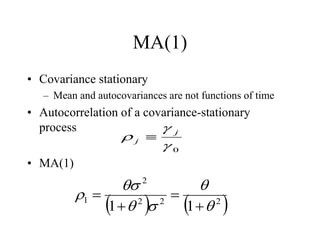

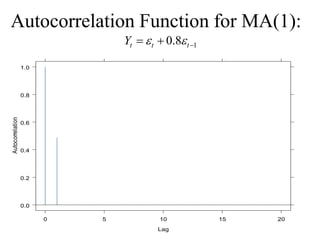

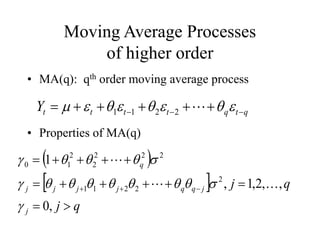

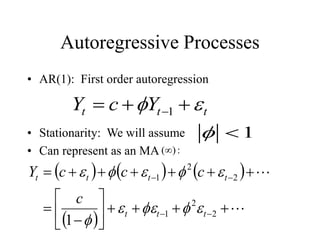

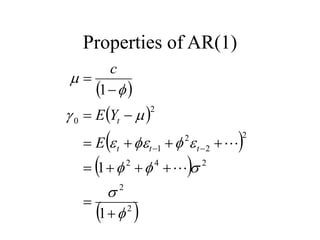

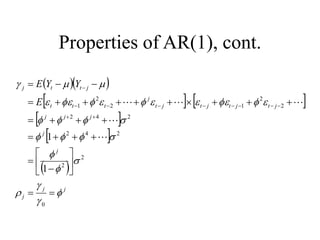

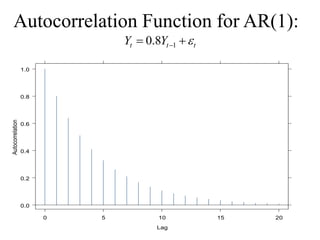

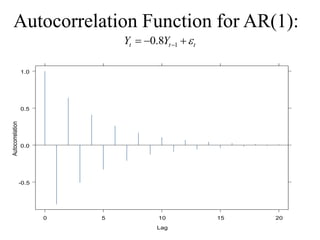

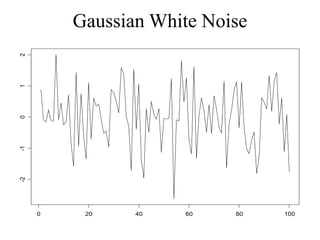

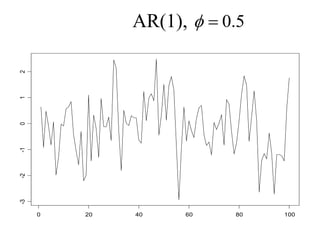

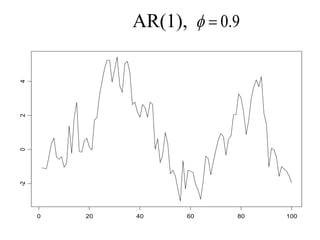

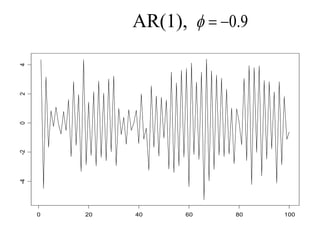

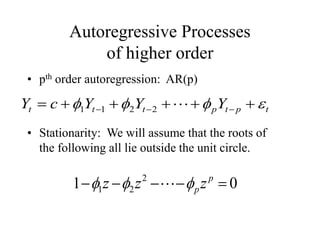

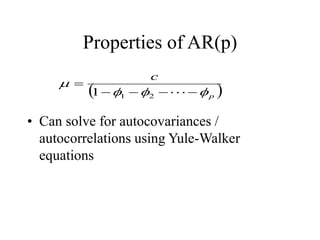

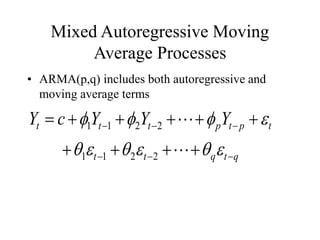

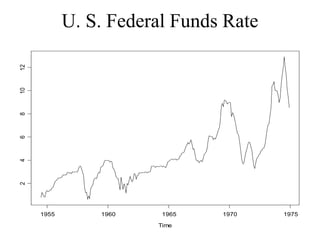

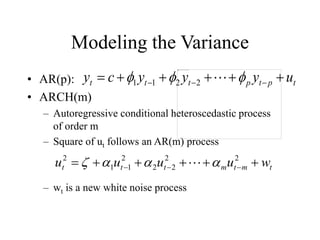

This document provides an introduction to time series analysis. It defines key concepts such as time series, lag operators, the mean, autocovariance, stationarity, white noise processes, moving average (MA) processes, autoregressive (AR) processes, and mixed autoregressive moving average (ARMA) processes. Examples illustrate properties of the different time series models, including their autocorrelation functions.
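The autocorrelation functions of moving average (MA), autoregressive (AR), and mixed ARMA models are easier to compare side by side. What follows is a minimal sketch, not the document's own code. Because the page images are not transcribed here, it assumes the common textbook parameterization: MA(1) is $Y_t = \mu + \varepsilon_t + \theta\varepsilon_{t-1}$, AR(1) is $Y_t = c + \phi Y_{t-1} + \varepsilon_t$ with $|\phi| < 1$, and ARMA(1,1) combines both terms, with $\varepsilon_t$ Gaussian white noise. The code computes each model's theoretical ACF and a sample ACF using the $1/T$ autocovariance normalization. All function names (`sample_acf`, `ma1_acf`, `ar1_acf`, `arma11_acf`, `simulate_arma11`) are hypothetical.

```python
import random


def sample_autocovariance(x, k):
    """Sample autocovariance at lag k with the 1/T normalization (an assumed convention):
    gamma_hat_k = (1/T) * sum_{t=k}^{T-1} (x[t] - xbar) * (x[t-k] - xbar)."""
    T = len(x)
    xbar = sum(x) / T
    return sum((x[t] - xbar) * (x[t - k] - xbar) for t in range(k, T)) / T


def sample_acf(x, max_lag):
    """Sample autocorrelations rho_hat_k = gamma_hat_k / gamma_hat_0 for k = 0..max_lag."""
    g0 = sample_autocovariance(x, 0)
    return [sample_autocovariance(x, k) / g0 for k in range(max_lag + 1)]


def ma1_acf(theta, max_lag):
    """Theoretical ACF of Y_t = mu + eps_t + theta*eps_{t-1}:
    rho_1 = theta / (1 + theta^2) and rho_j = 0 for j >= 2 (the ACF cuts off)."""
    return [1.0] + [theta / (1 + theta ** 2) if j == 1 else 0.0
                    for j in range(1, max_lag + 1)]


def ar1_acf(phi, max_lag):
    """Theoretical ACF of the stationary AR(1) Y_t = c + phi*Y_{t-1} + eps_t:
    rho_j = phi^j (geometric decay)."""
    if abs(phi) >= 1:
        raise ValueError("AR(1) is covariance-stationary only for |phi| < 1")
    return [phi ** j for j in range(max_lag + 1)]


def arma11_acf(phi, theta, max_lag):
    """Theoretical ACF of Y_t = c + phi*Y_{t-1} + eps_t + theta*eps_{t-1}, |phi| < 1:
    rho_1 = (1 + phi*theta)(phi + theta) / (1 + 2*phi*theta + theta^2),
    rho_j = phi * rho_{j-1} for j >= 2."""
    if abs(phi) >= 1:
        raise ValueError("ARMA(1,1) is covariance-stationary only for |phi| < 1")
    rho = [1.0]
    if max_lag >= 1:
        rho.append((1 + phi * theta) * (phi + theta)
                   / (1 + 2 * phi * theta + theta ** 2))
    for _ in range(2, max_lag + 1):
        rho.append(phi * rho[-1])
    return rho


def simulate_arma11(phi, theta, T, sigma=1.0, seed=0, burn_in=500):
    """Simulate Y_t = phi*Y_{t-1} + eps_t + theta*eps_{t-1} with c = 0 and
    eps_t ~ N(0, sigma^2) white noise. phi = 0 gives MA(1), theta = 0 gives AR(1),
    and phi = theta = 0 gives white noise. The first burn_in values are dropped so
    the arbitrary starting point Y = 0 has negligible influence."""
    rng = random.Random(seed)
    y_prev, eps_prev = 0.0, 0.0
    out = []
    for t in range(T + burn_in):
        eps = rng.gauss(0.0, sigma)
        y = phi * y_prev + eps + theta * eps_prev
        if t >= burn_in:
            out.append(y)
        y_prev, eps_prev = y, eps
    return out


if __name__ == "__main__":
    # Compare sample and theoretical ACFs for an AR(1) with phi = 0.8.
    y = simulate_arma11(phi=0.8, theta=0.0, T=20000)
    print("sample AR(1) ACF:", [round(r, 3) for r in sample_acf(y, 3)])
    print("theory AR(1) ACF:", [round(r, 3) for r in ar1_acf(0.8, 3)])
```

The contrast the sketch is meant to surface: the MA(1) ACF is zero beyond lag 1, the AR(1) ACF decays geometrically, and the ARMA(1,1) ACF has a free first lag followed by the same geometric decay at rate $\phi$. Sample ACFs from a finite simulated series only approximate these values.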