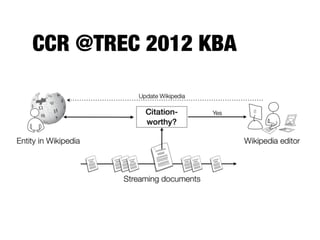

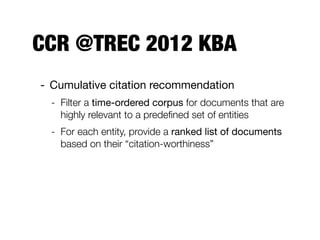

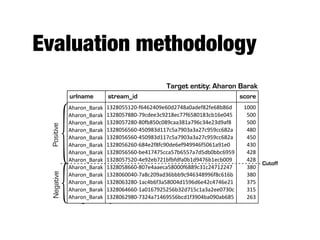

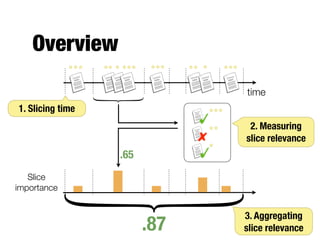

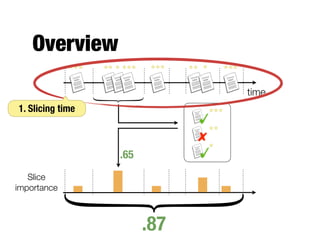

This document proposes a framework for temporally evaluating cumulative citation recommendation (CCR) systems on streaming data. It involves:

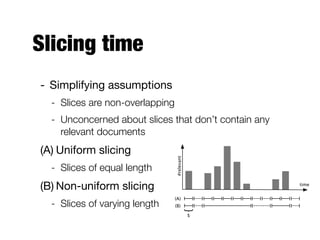

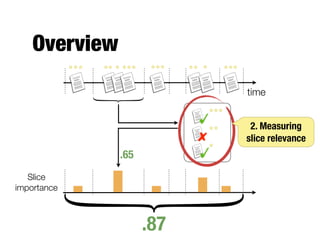

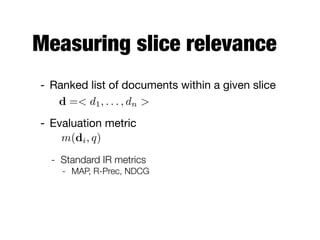

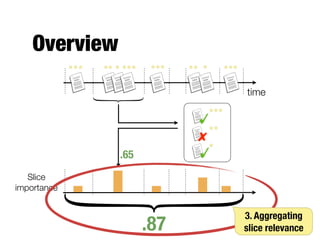

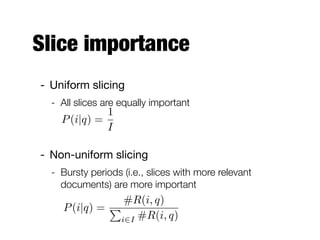

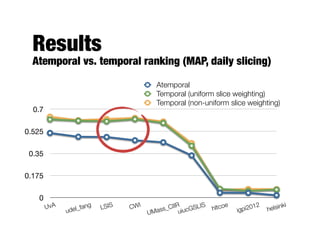

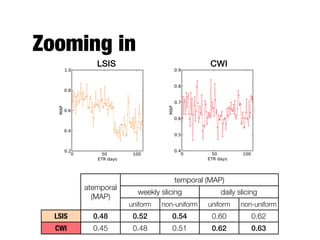

1) Slicing time into uniform or non-uniform intervals and measuring relevance within each slice.

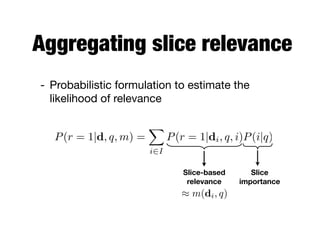

2) Aggregating the per-slice relevance scores into a single score, weighting each slice by an importance weight derived from the burstiness of relevant documents in that slice.

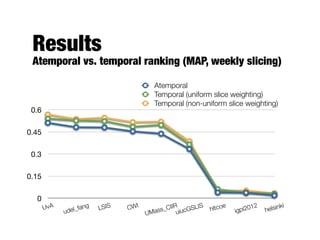

3) Applying the framework to CCR systems from TREC 2012 shows that accounting for temporal effects yields insights beyond atemporal evaluation, and that temporal evaluation favors some systems over others. The framework could also be applied to other time-aware retrieval tasks.
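The slicing and aggregation steps above can be illustrated with a minimal Python sketch. It is not the framework's actual definition. The `Judged` record, the choice of F1 as the per-slice relevance measure, and the burstiness proxy (each slice's share of all relevant documents) are all hypothetical choices made for illustration. Both uniform slicing (fixed width) and non-uniform slicing (explicit cut points) are shown.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Judged:
    """Hypothetical record: one streamed document judged for one target entity."""
    timestamp: float  # arrival time of the document in the stream
    relevant: bool    # gold (assessor) label
    retrieved: bool   # whether the CCR system recommended it


def uniform_slices(docs: List[Judged], width: float) -> List[List[Judged]]:
    """Step 1 (uniform): group documents into consecutive fixed-width time slices."""
    if not docs:
        return []
    start = min(d.timestamp for d in docs)
    buckets: Dict[int, List[Judged]] = {}
    for d in docs:
        buckets.setdefault(int((d.timestamp - start) // width), []).append(d)
    return [buckets[i] for i in sorted(buckets)]  # empty slices are omitted


def boundary_slices(docs: List[Judged], cuts: List[float]) -> List[List[Judged]]:
    """Step 1 (non-uniform): slice i covers [cuts[i-1], cuts[i]); cuts must be sorted."""
    buckets: List[List[Judged]] = [[] for _ in range(len(cuts) + 1)]
    for d in docs:
        buckets[bisect_right(cuts, d.timestamp)].append(d)
    return [b for b in buckets if b]  # empty slices are omitted


def f1(docs: List[Judged]) -> float:
    """Relevance measure within a slice; F1 is an assumed choice of metric."""
    tp = sum(d.relevant and d.retrieved for d in docs)
    if tp == 0:
        return 0.0
    precision = tp / sum(d.retrieved for d in docs)
    recall = tp / sum(d.relevant for d in docs)
    return 2 * precision * recall / (precision + recall)


def burstiness_weights(slices: List[List[Judged]]) -> List[float]:
    """Step 2 weights. Assumed proxy for burstiness: each slice's share of all
    relevant documents, so slices with bursts of relevant items count more."""
    counts = [sum(d.relevant for d in s) for s in slices]
    total = sum(counts)
    if total == 0:
        return [1.0 / len(slices)] * len(slices)
    return [c / total for c in counts]


def temporal_score(slices: List[List[Judged]]) -> float:
    """Step 2: weighted average of the per-slice relevance scores."""
    if not slices:
        return 0.0
    weights = burstiness_weights(slices)
    return sum(w * f1(s) for w, s in zip(weights, slices))


# Toy stream: the system finds the early relevant documents but misses a later one.
docs = [
    Judged(0, True, True), Judged(1, True, True), Judged(2, False, True),
    Judged(15, True, False), Judged(16, False, False),
]
print("atemporal F1:", f1(docs))
print("temporal (uniform, width 10):", temporal_score(uniform_slices(docs, 10)))
print("temporal (cut at t=10):", temporal_score(boundary_slices(docs, [10])))
```

On this toy stream the atemporal F1 is 2/3, while the burstiness-weighted score is 8/15 (about 0.53). The two views diverge because the second slice, which holds a relevant document the system missed, scores zero yet carries a third of the weight. Swapping in a different per-slice metric or burstiness measure only requires replacing `f1` or `burstiness_weights`.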