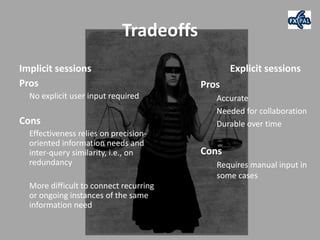

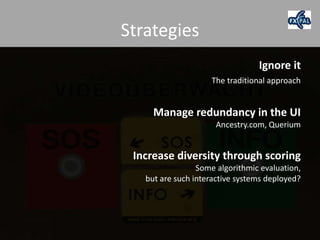

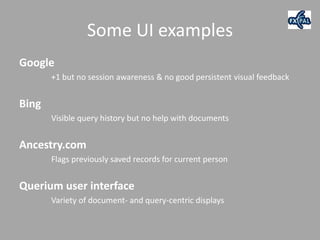

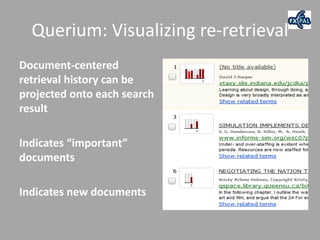

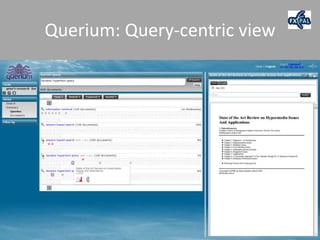

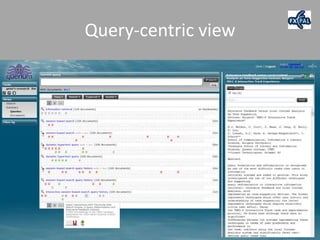

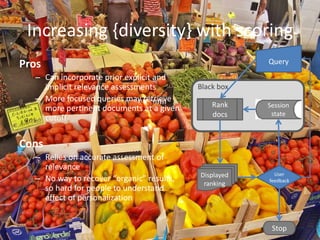

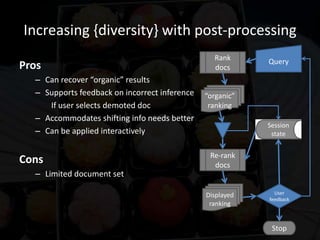

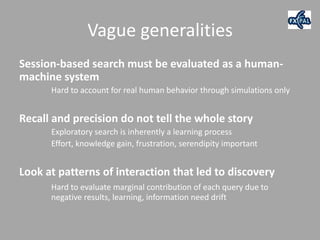

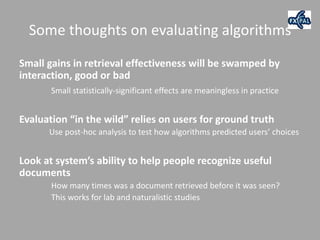

This talk discusses exploratory search and the difficulty of detecting sessions in information retrieval systems, contrasting explicit session detection with implicit session detection. It stresses the importance of managing redundancy in search results and of improving how users interact with those results, illustrating several strategies with UI examples. The talk closes with reflections on how search algorithms are evaluated and on how information needs evolve.