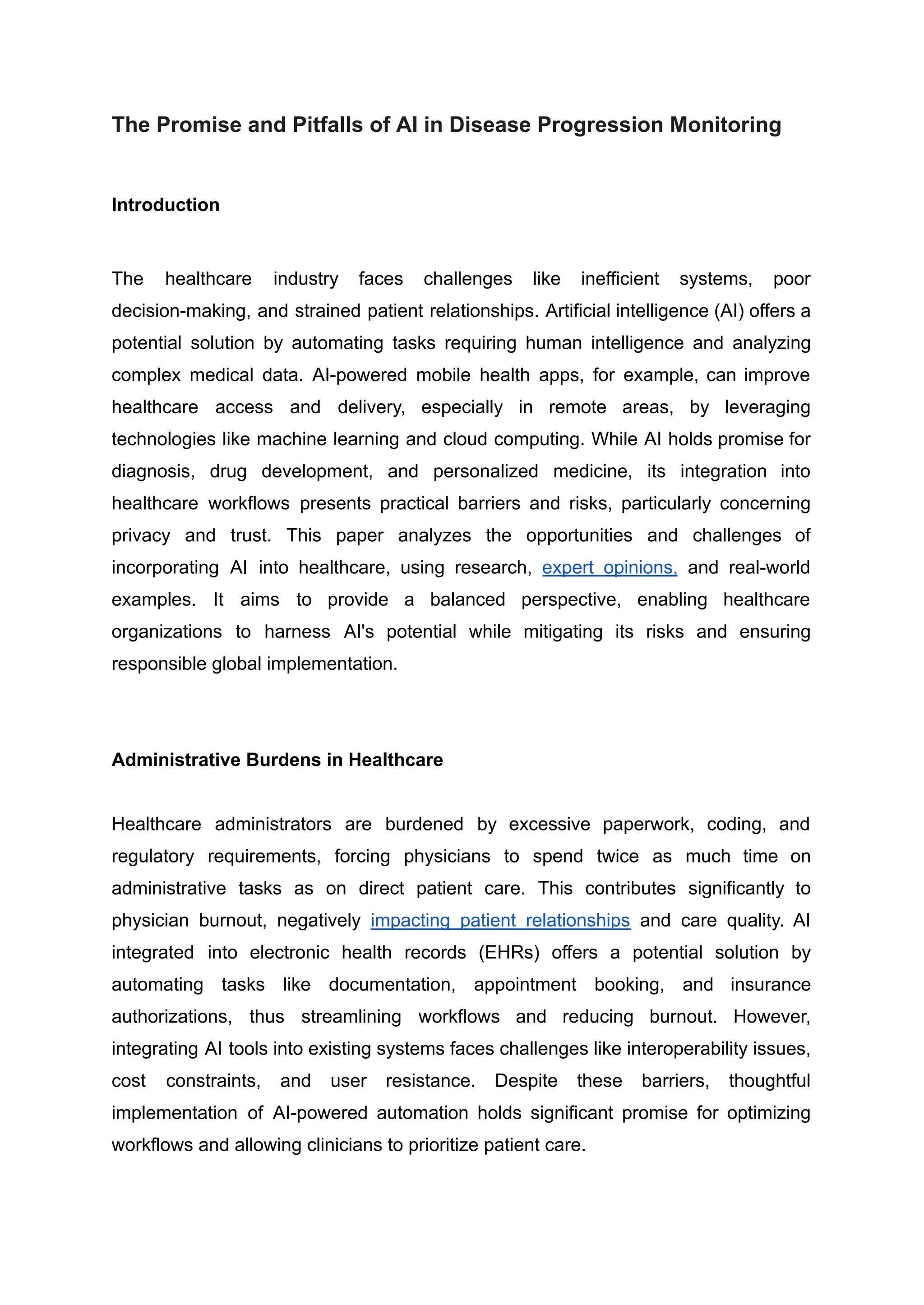

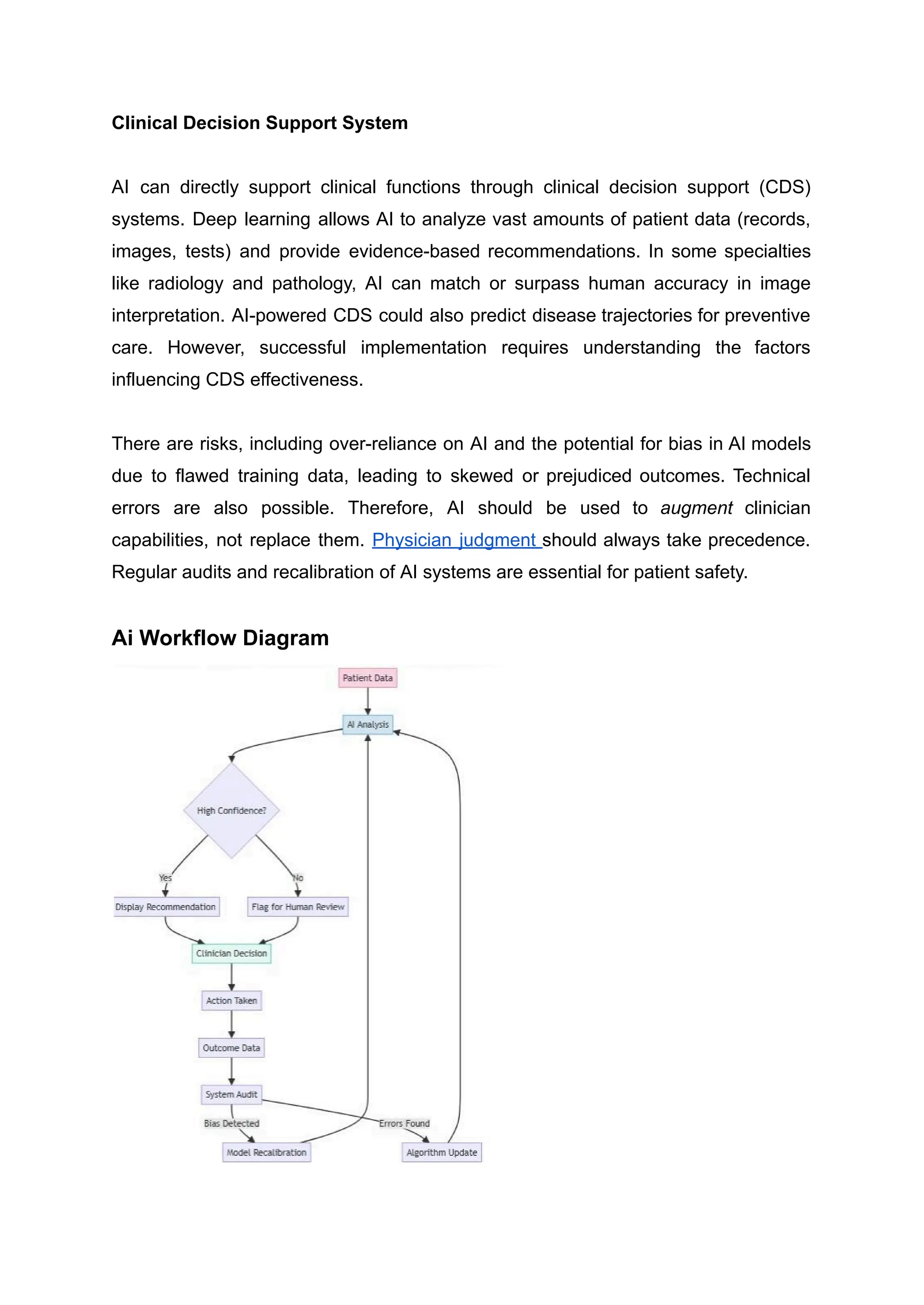

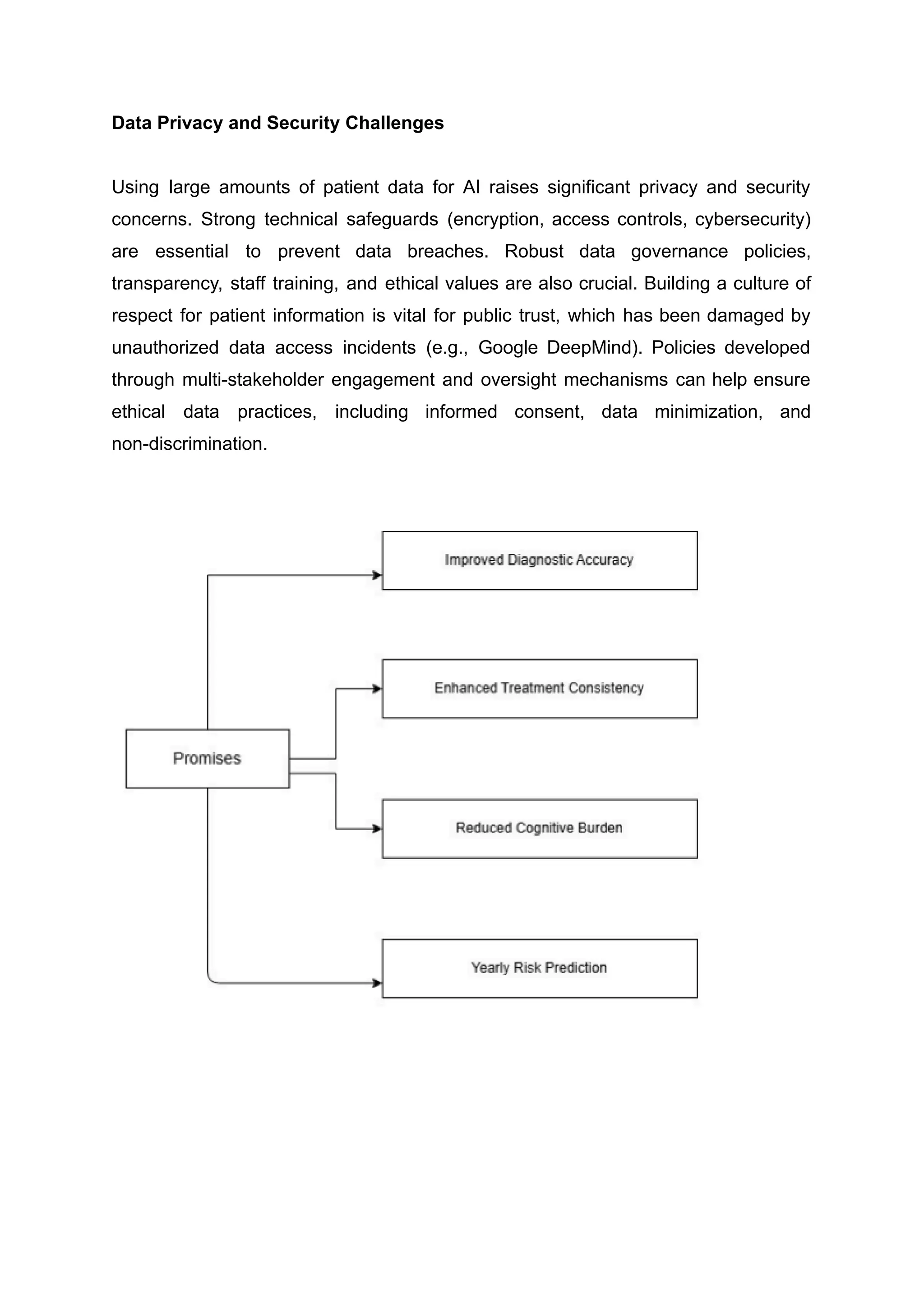

The healthcare industry faces challenges such as inefficient systems, poor decision-making, and strained patient relationships. Artificial intelligence (AI) offers a potential solution by automating tasks that typically require human intelligence and by analyzing complex medical data. AI-powered mobile health apps, for example, can use technologies such as machine learning and cloud computing to improve access to and delivery of healthcare, especially in remote areas. Although AI holds promise for diagnosis, drug development, and personalized medicine, integrating it into healthcare workflows poses practical barriers and risks, particularly concerning privacy and trust.