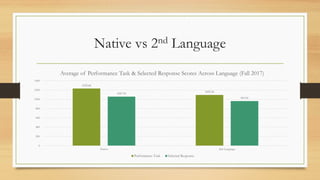

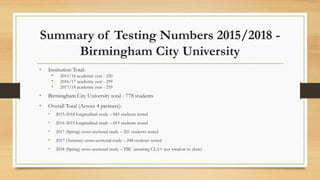

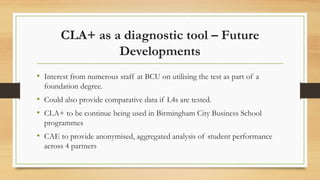

The document summarizes Birmingham City University's use of the Collegiate Learning Assessment (CLA+) to measure students' critical thinking, problem-solving, and writing skills. It discusses administering the CLA+ both as an optional and as a compulsory assessment, and notes issues with optional testing. When the test was made compulsory in the Professional Development module, 201 first-year students were tested and the results were analyzed by demographics. The university has now tested over 700 students in total from 2015 to 2018, using the CLA+ as a diagnostic tool to measure learning gain.