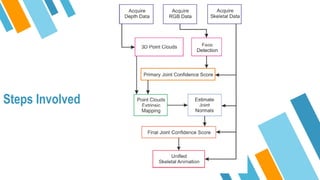

The document describes a method for full 360-degree human motion capture from multi-view RGB-D video recorded with Kinect sensors. The method involves:

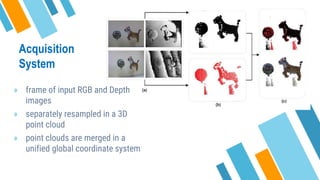

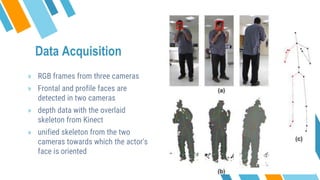

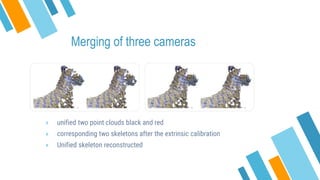

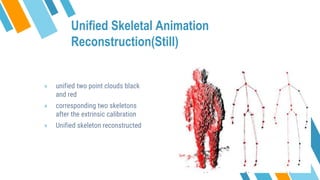

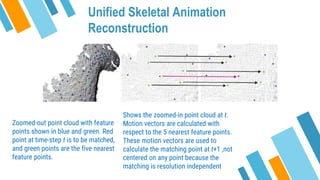

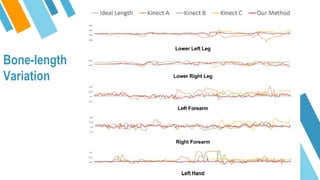

1) Acquiring RGB and depth frames from three Kinect cameras and merging the point clouds and skeleton data into a unified coordinate system.

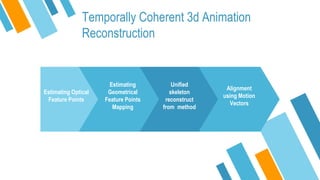

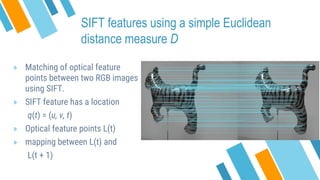

2) Tracking SIFT features between frames to establish correspondences and estimate motion vectors for temporally coherent 3D animation reconstruction.

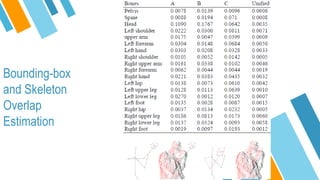

3) Evaluating the method by comparing it to direct RGB-D SLAM and feature-based RGB-D SLAM, and analyzing residual configuration, computation time, and failure modes.
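Step 1) merges the three Kinect views into one coordinate system. The following is a minimal sketch of that merge. It assumes that each camera's extrinsic calibration is already known as a rotation `R` and translation `t` mapping camera coordinates to the shared world frame (p_w = R p_c + t). The source does not say how the calibration is obtained, and the function names `to_world` and `merge_clouds` are hypothetical. Skeleton joints can be passed through the same transform because they are also 3D points in each camera's frame.

```python
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def to_world(points: Sequence[Vec3], R: Mat3, t: Vec3) -> List[Vec3]:
    """Apply the rigid transform p_w = R @ p_c + t to every camera-space point."""
    out: List[Vec3] = []
    for x, y, z in points:
        out.append(tuple(R[i][0] * x + R[i][1] * y + R[i][2] * z + t[i] for i in range(3)))
    return out


def merge_clouds(views: Iterable[Tuple[Sequence[Vec3], Mat3, Vec3]]) -> List[Vec3]:
    """Hypothetical helper: views holds (points, R, t) per Kinect; returns one world-space cloud."""
    merged: List[Vec3] = []
    for points, R, t in views:
        merged.extend(to_world(points, R, t))
    return merged


# Example: camera A defines the world frame; camera B faces it from 4 m away
# (rotated 180 degrees about the y axis).
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
rot_y_180 = [[-1, 0, 0], [0, 1, 0], [0, 0, -1]]
cloud = merge_clouds([
    ([(1.0, 0.0, 2.0)], identity, (0.0, 0.0, 0.0)),
    ([(1.0, 0.0, 2.0)], rot_y_180, (0.0, 0.0, 4.0)),
])
print(cloud)  # [(1.0, 0.0, 2.0), (-1.0, 0.0, 2.0)]
```

A real pipeline would likely use a numerical library and refine the extrinsics (for example with ICP). The plain-Python loop only serves to make the transform explicit.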
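Step 2) turns SIFT correspondences into motion vectors. One plausible way to do this with RGB-D data is to back-project each matched pixel pair into 3D using its depth value and take the displacement between frames. The sketch below assumes that SIFT detection and matching have already produced pixel pairs `((u0, v0), (u1, v1))`, that the cameras are static, and that pinhole intrinsics `(fx, fy, cx, cy)` are known. It also treats a depth of 0 as missing, since Kinect depth maps typically use 0 for invalid pixels. The names `backproject` and `motion_vectors` are hypothetical, and the source's exact motion estimation may differ.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy), assumed known


def backproject(u: float, v: float, z: float, K: Intrinsics) -> Vec3:
    """Pinhole back-projection of pixel (u, v) at depth z into camera space."""
    fx, fy, cx, cy = K
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def motion_vectors(
    matches: Sequence[Tuple[Pixel, Pixel]],
    depth0: Sequence[Sequence[float]],  # depth map of frame k, indexed [row][col]
    depth1: Sequence[Sequence[float]],  # depth map of frame k+1
    K: Intrinsics,
) -> List[Vec3]:
    """3D displacement of each matched feature; pairs with missing depth are skipped."""
    vectors: List[Vec3] = []
    for (u0, v0), (u1, v1) in matches:
        z0 = depth0[int(round(v0))][int(round(u0))]
        z1 = depth1[int(round(v1))][int(round(u1))]
        if z0 <= 0 or z1 <= 0:  # treat 0 as "no depth measurement"
            continue
        p0 = backproject(u0, v0, z0, K)
        p1 = backproject(u1, v1, z1, K)
        vectors.append(tuple(b - a for a, b in zip(p0, p1)))
    return vectors


K = (1.0, 1.0, 0.0, 0.0)
d0 = [[1.0, 1.0], [1.0, 1.0]]
d1 = [[2.0, 2.0], [2.0, 0.0]]
print(motion_vectors([((0, 0), (1, 0)), ((0, 0), (1, 1))], d0, d1, K))  # [(2.0, 0.0, 1.0)]
```

With multiple Kinects, the back-projected points would normally be moved into the shared world frame from step 1) before differencing, so that vectors from different views are directly comparable.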