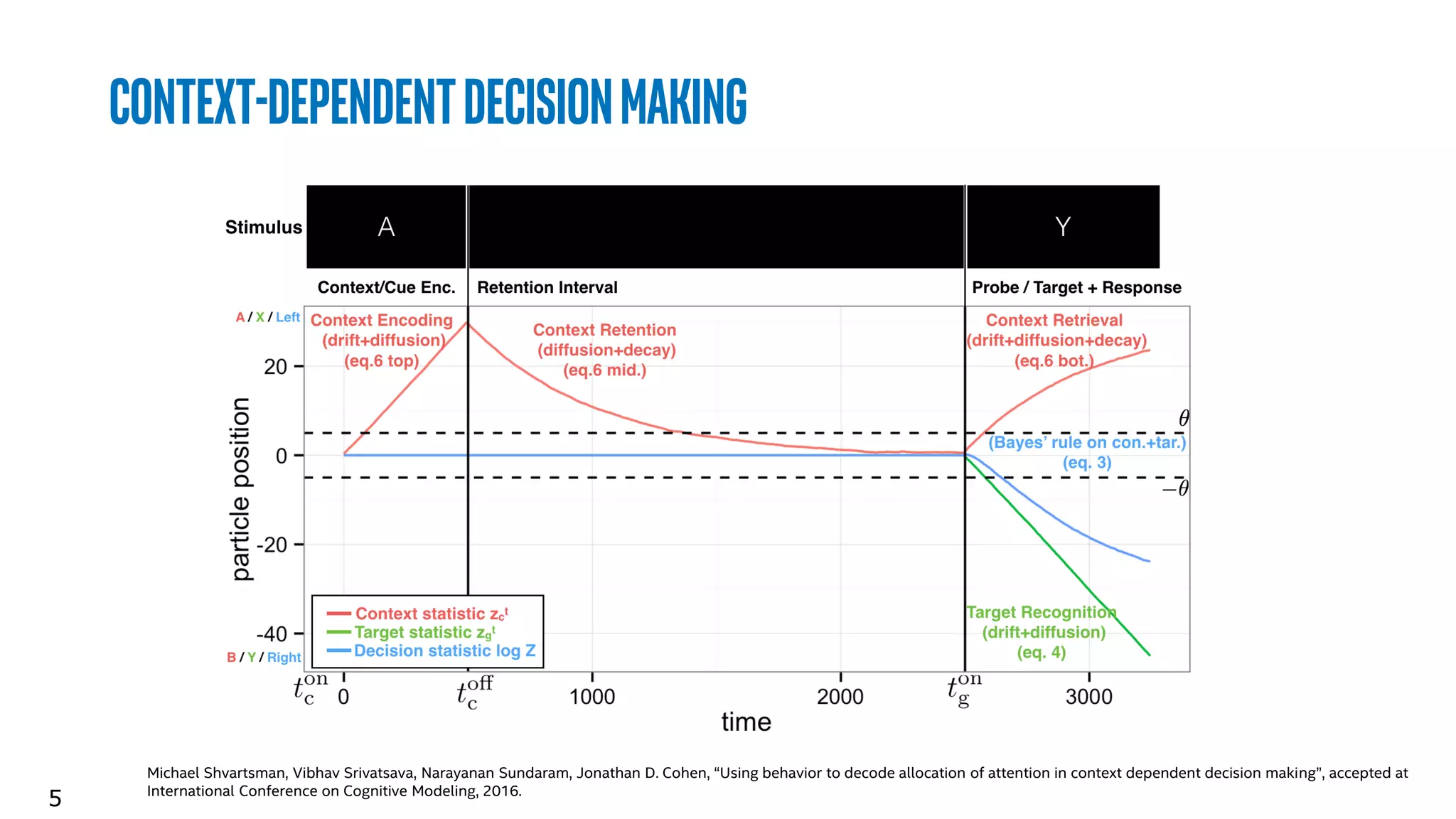

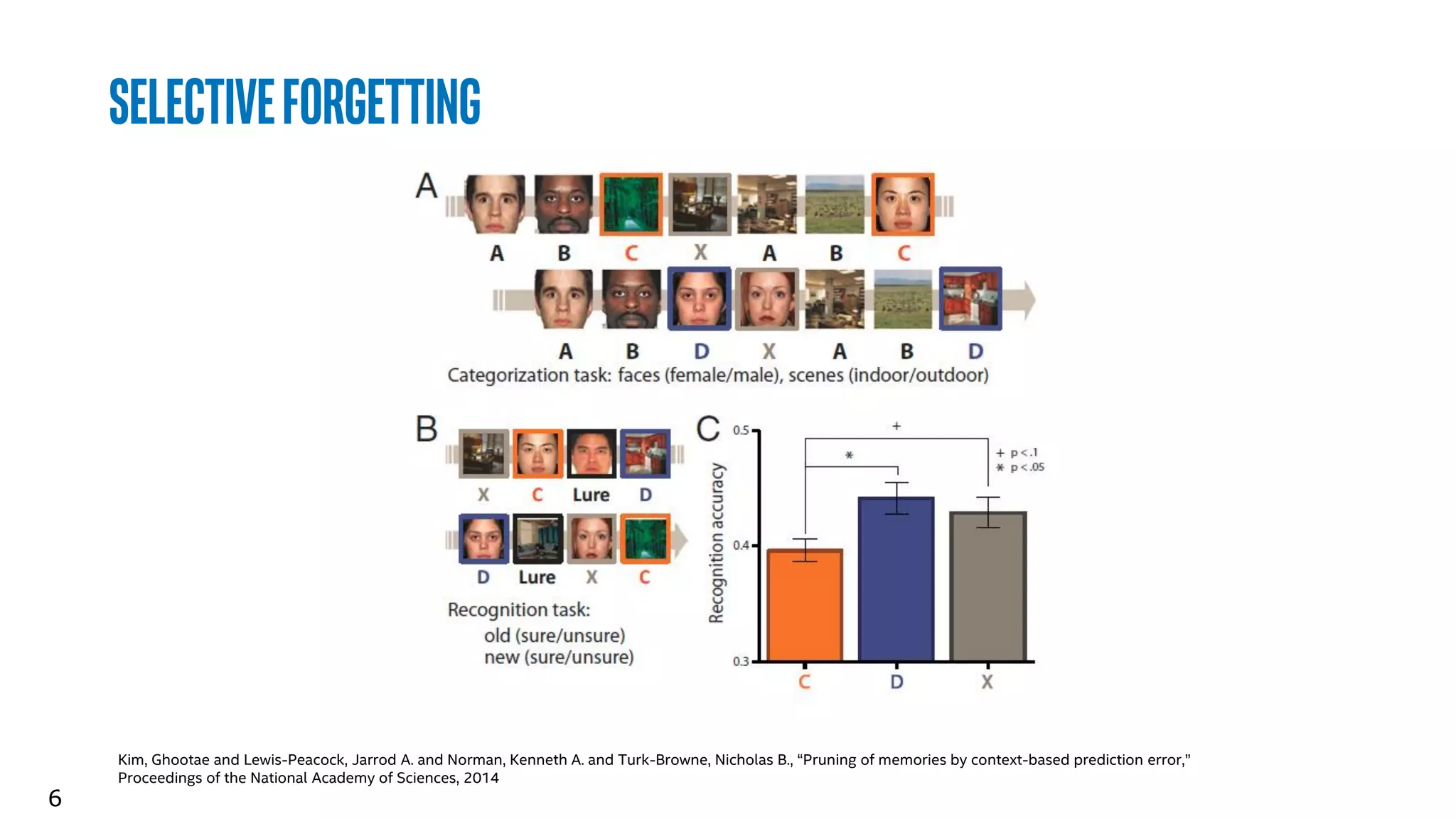

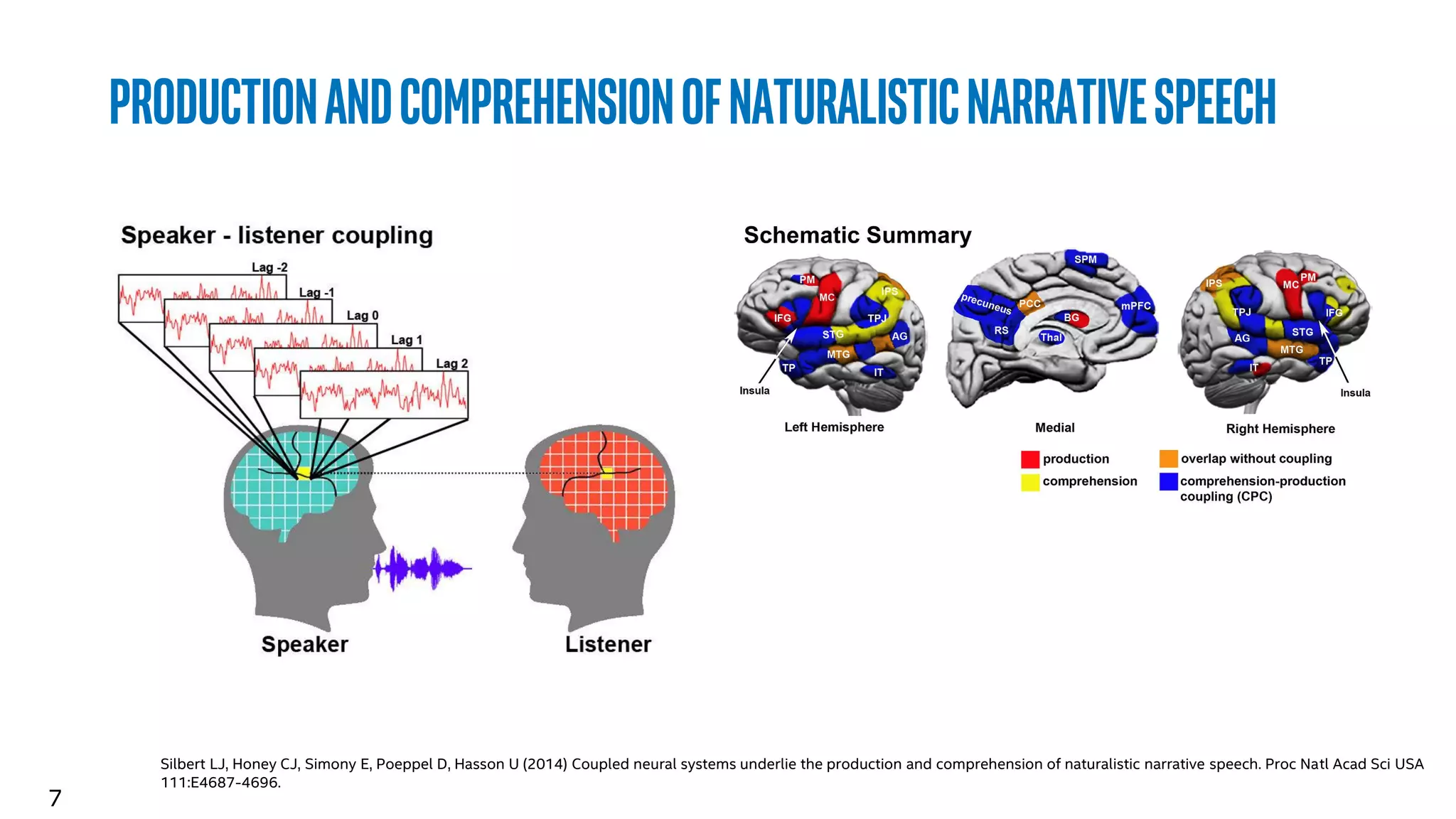

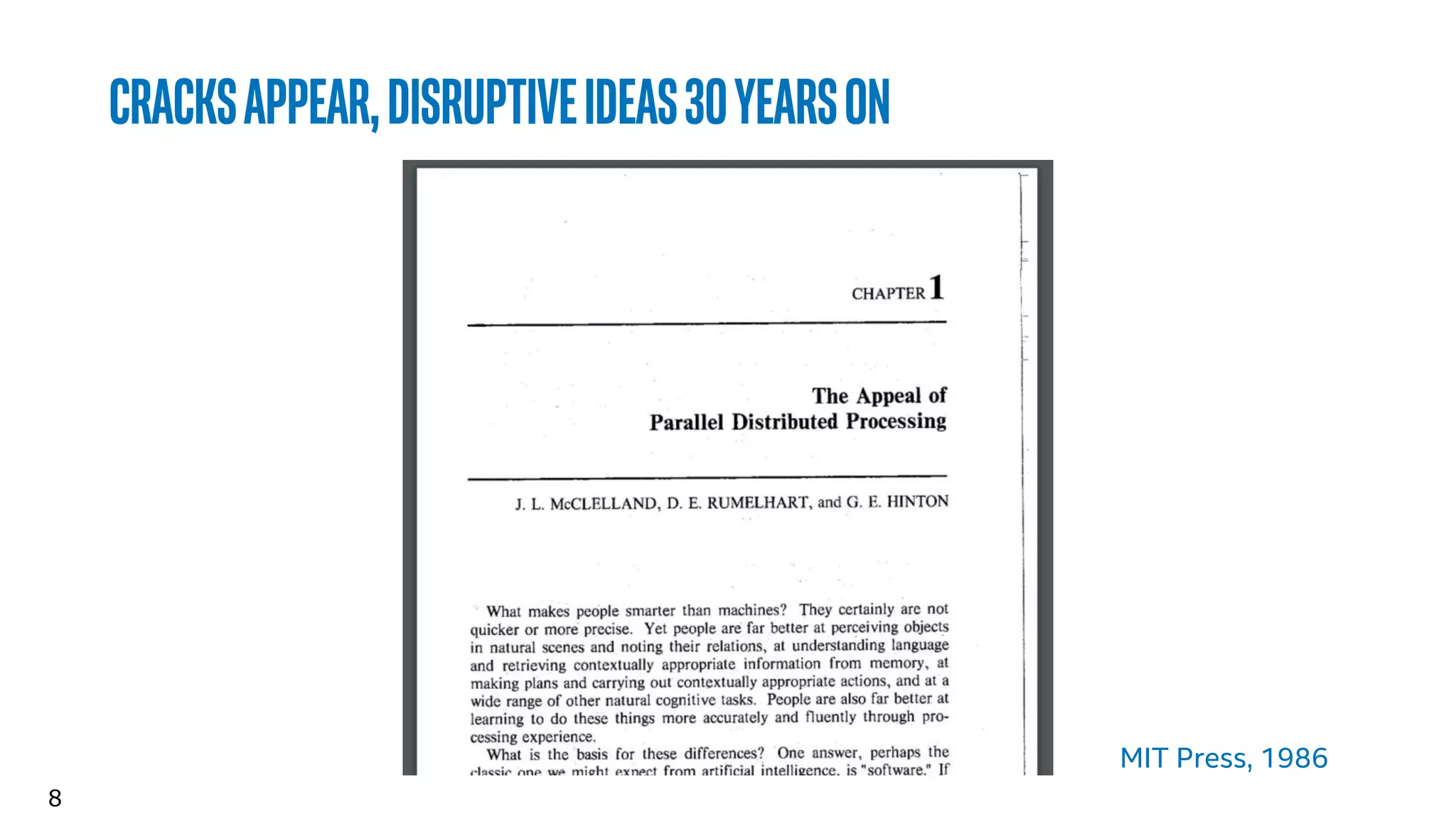

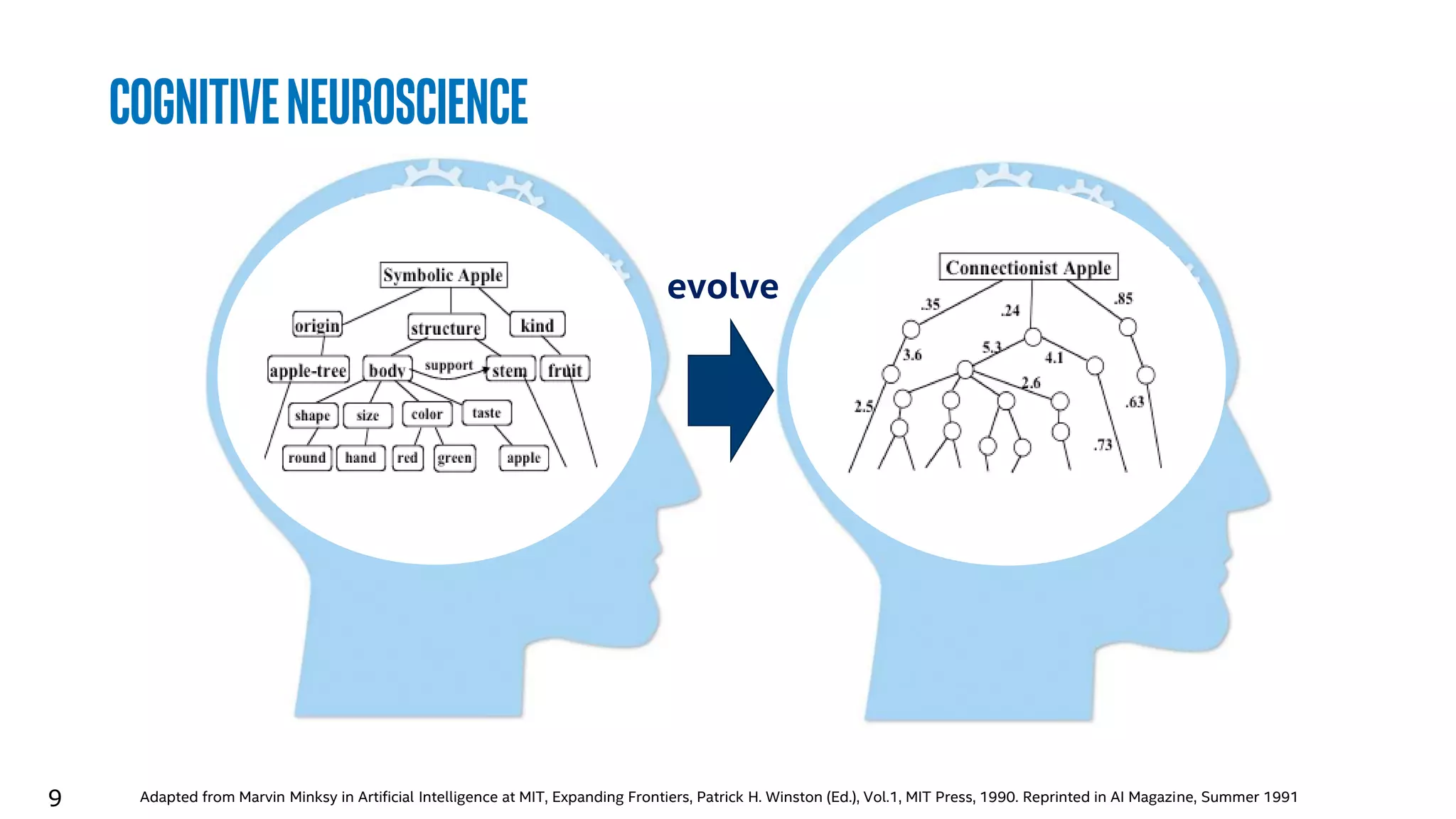

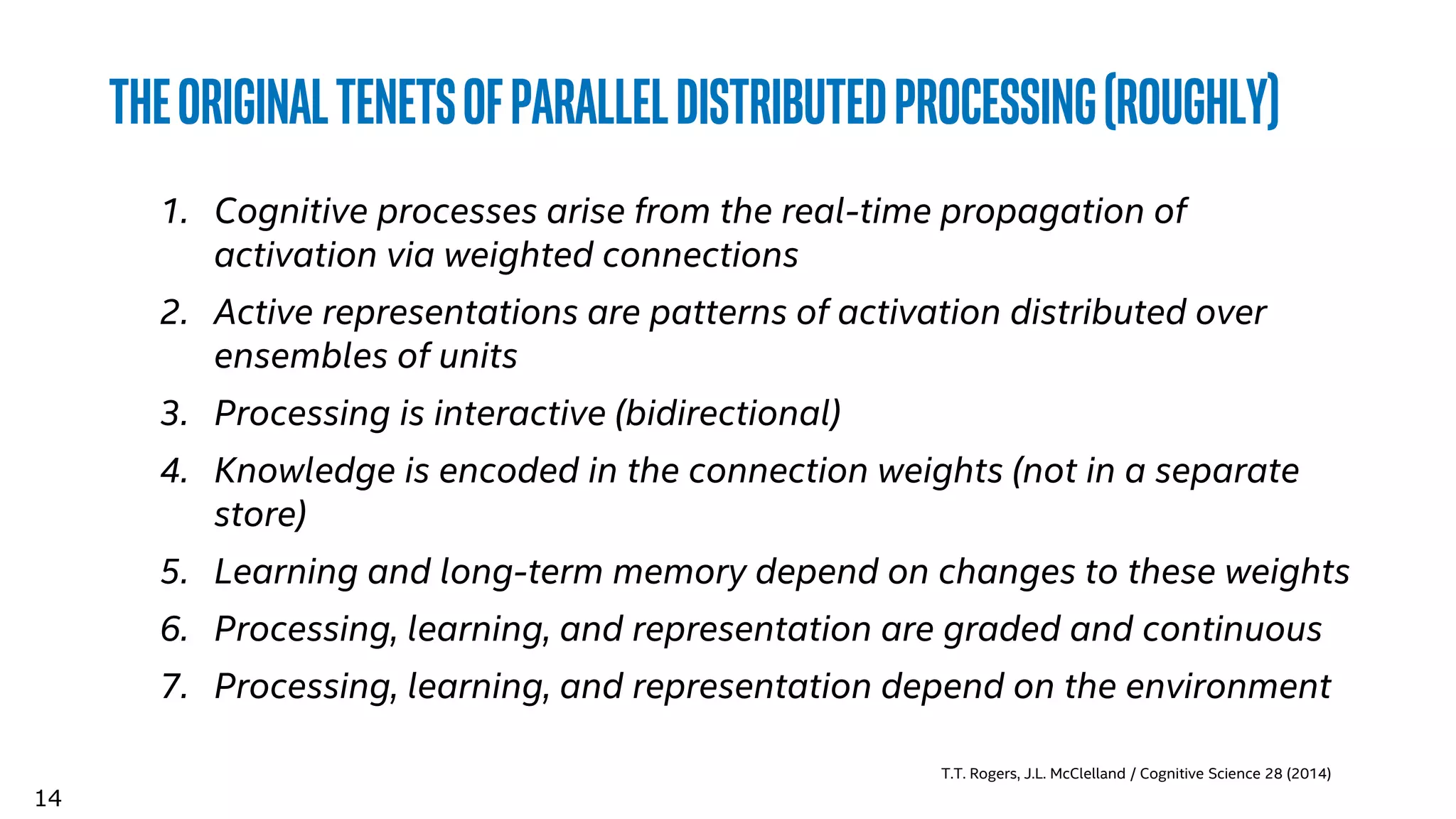

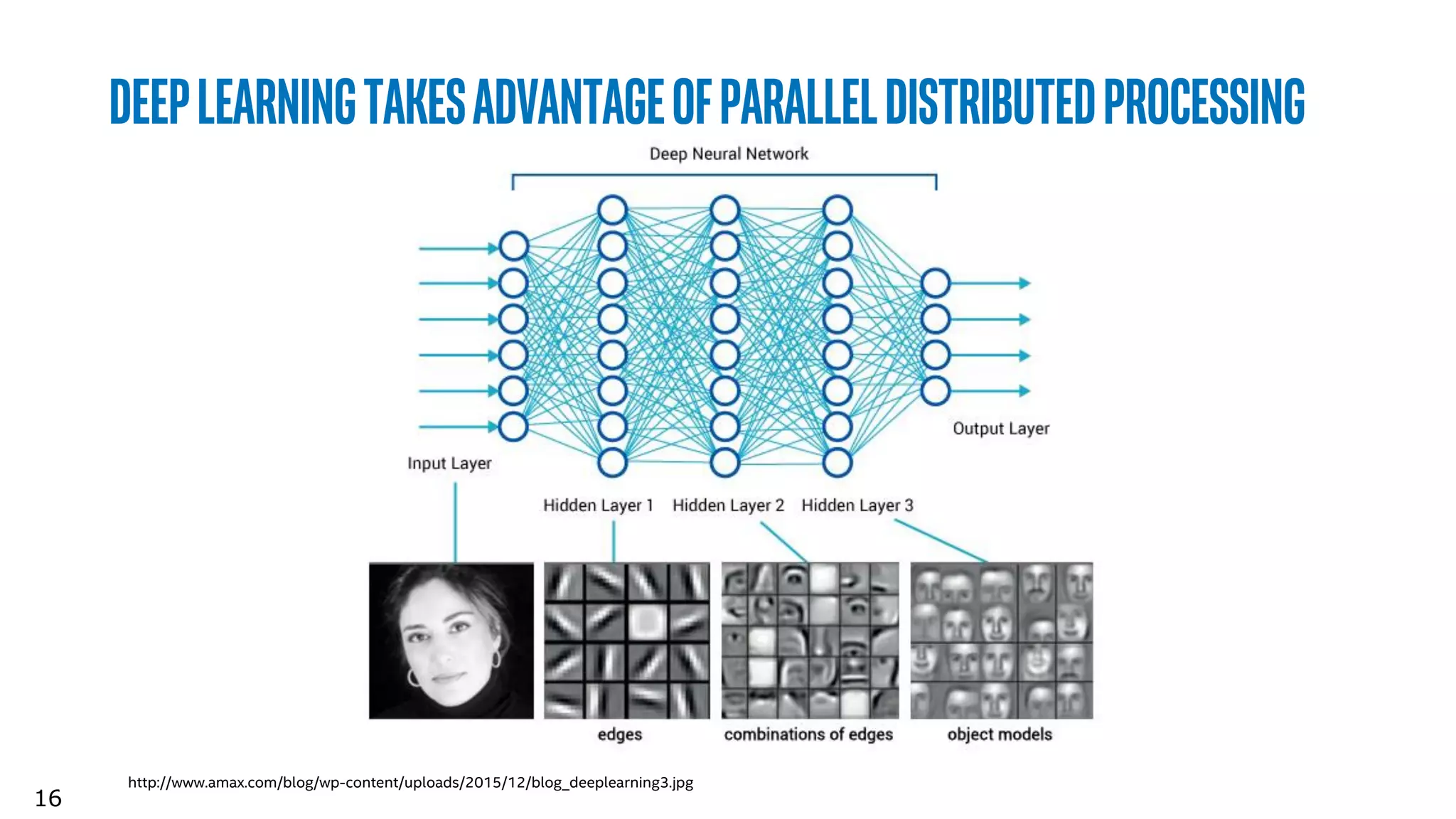

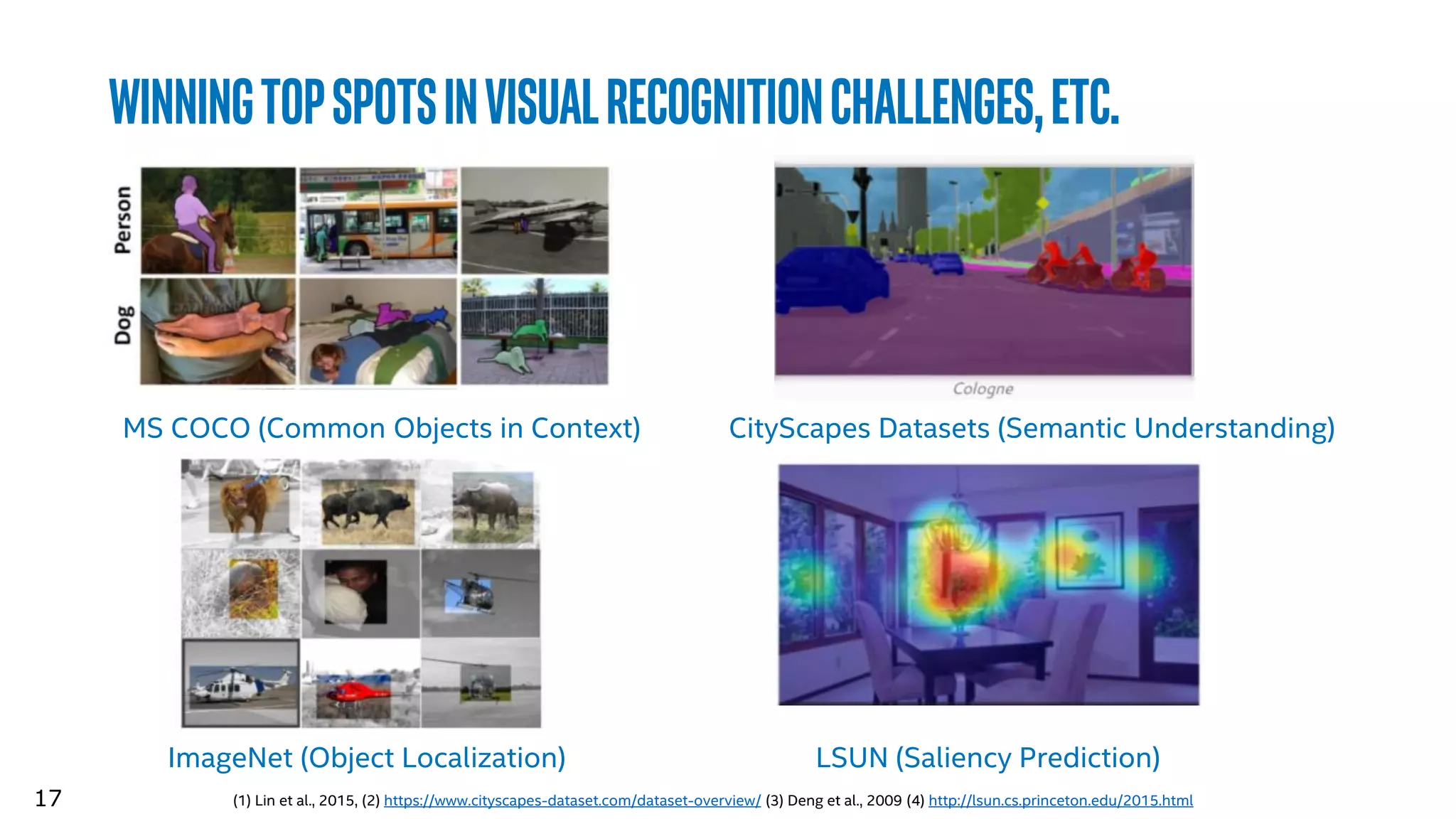

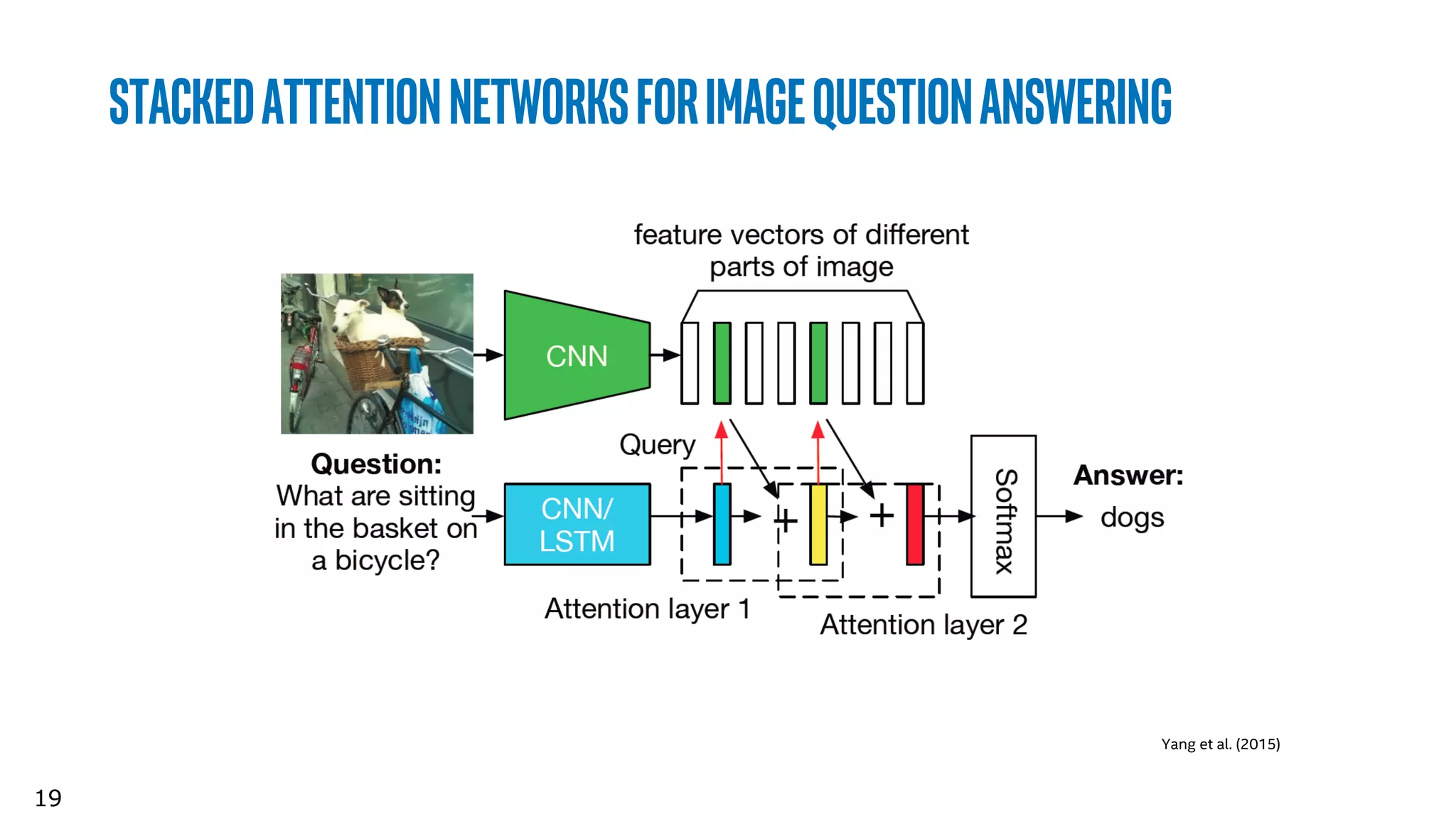

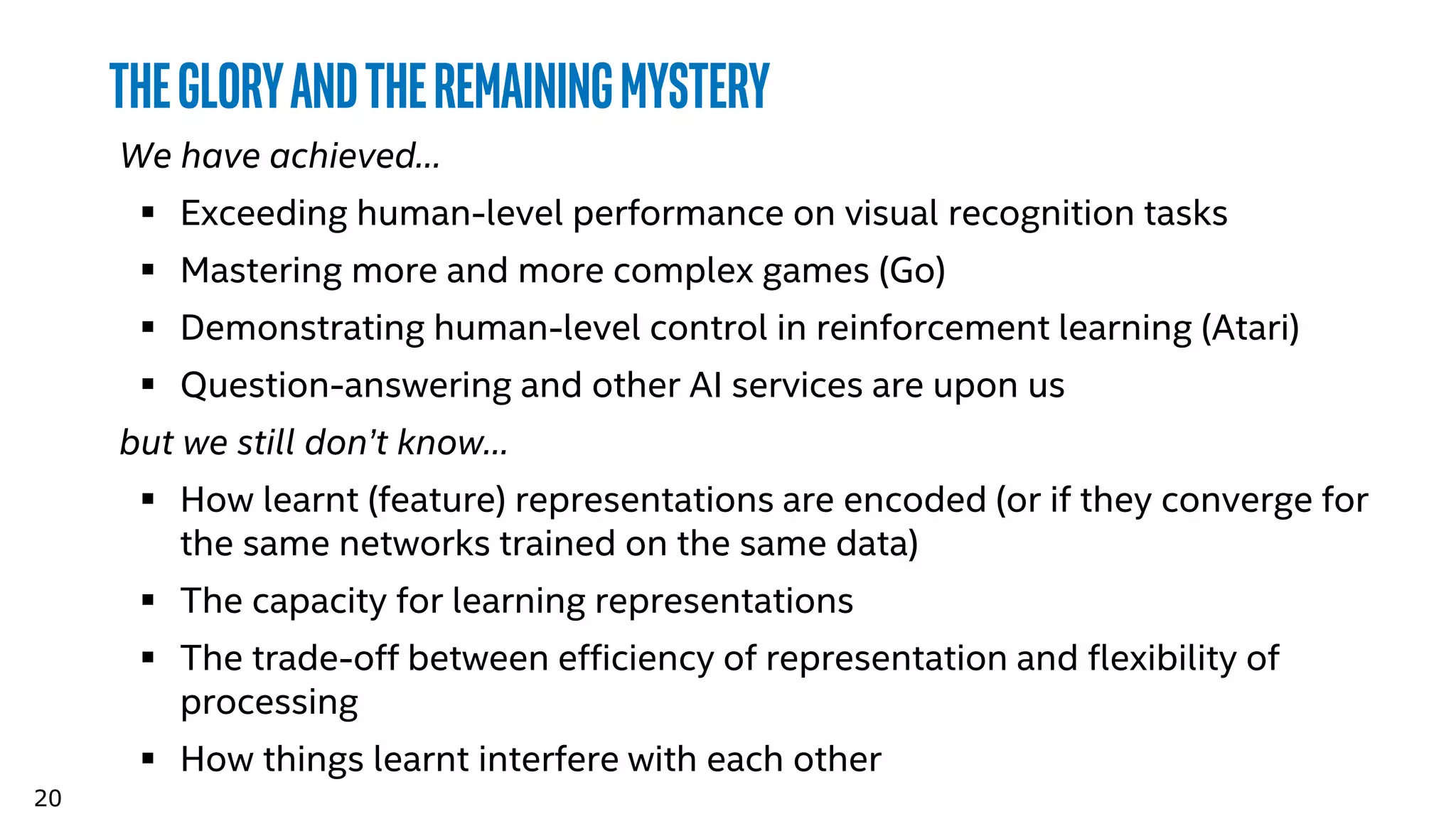

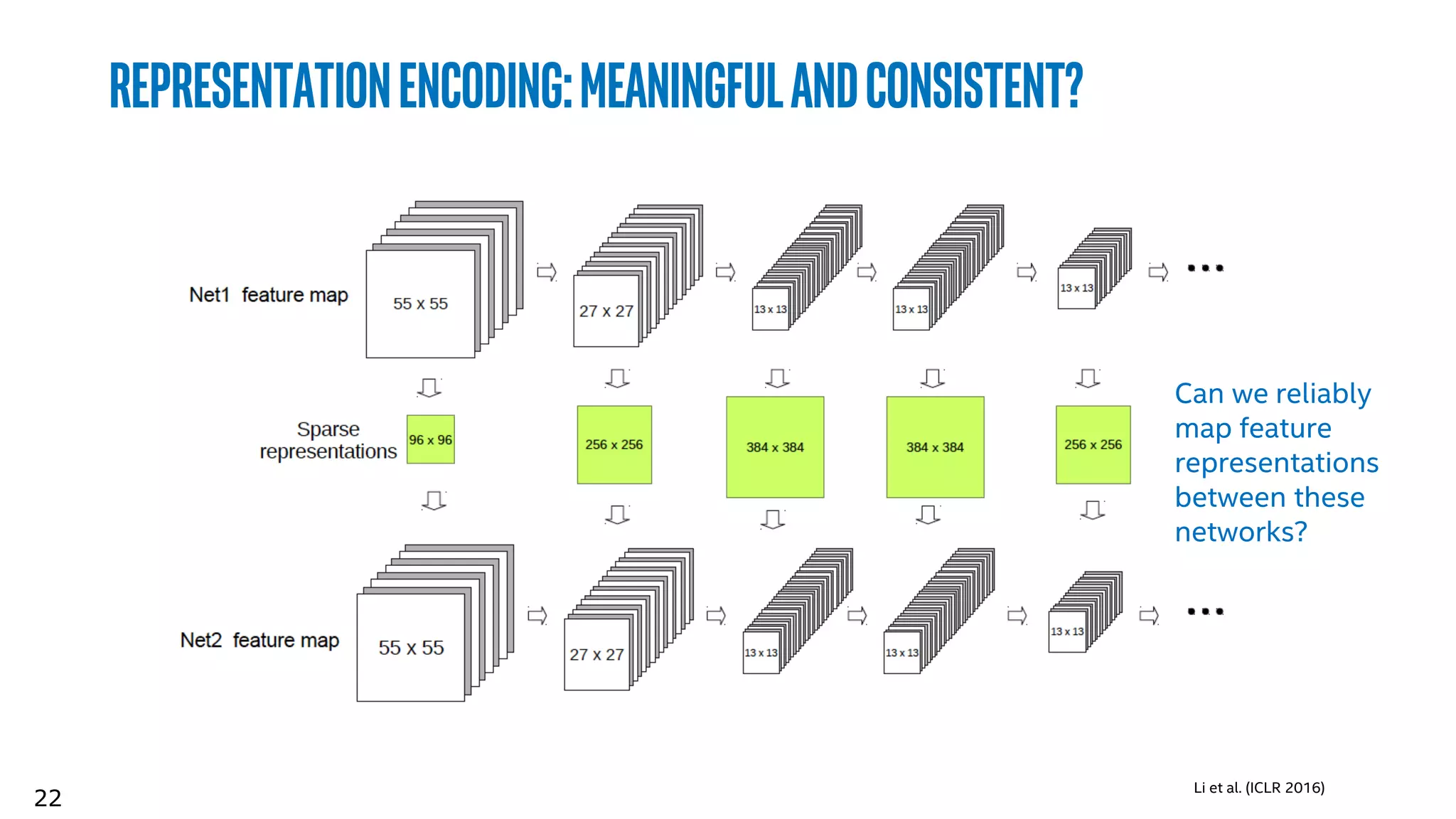

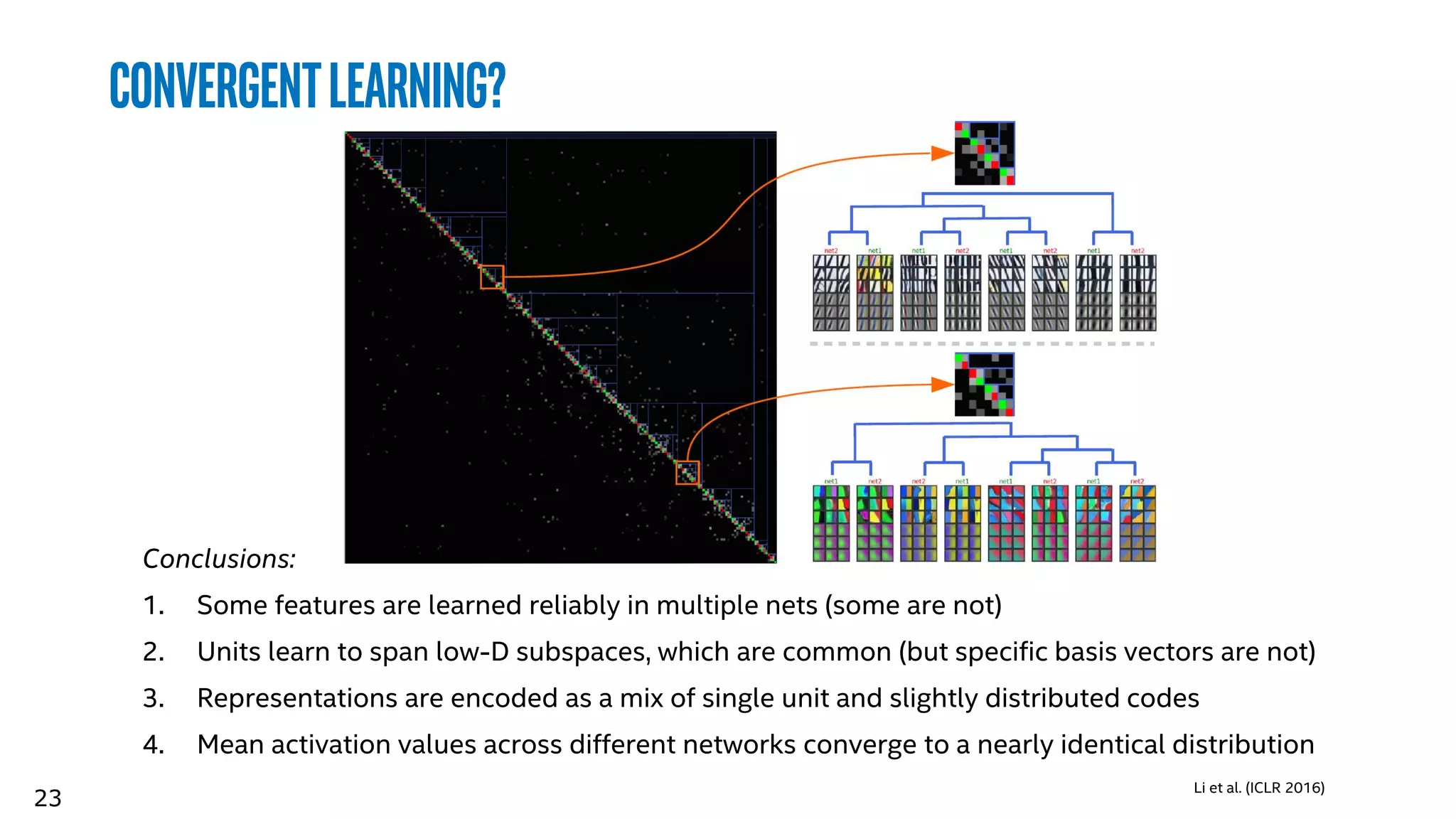

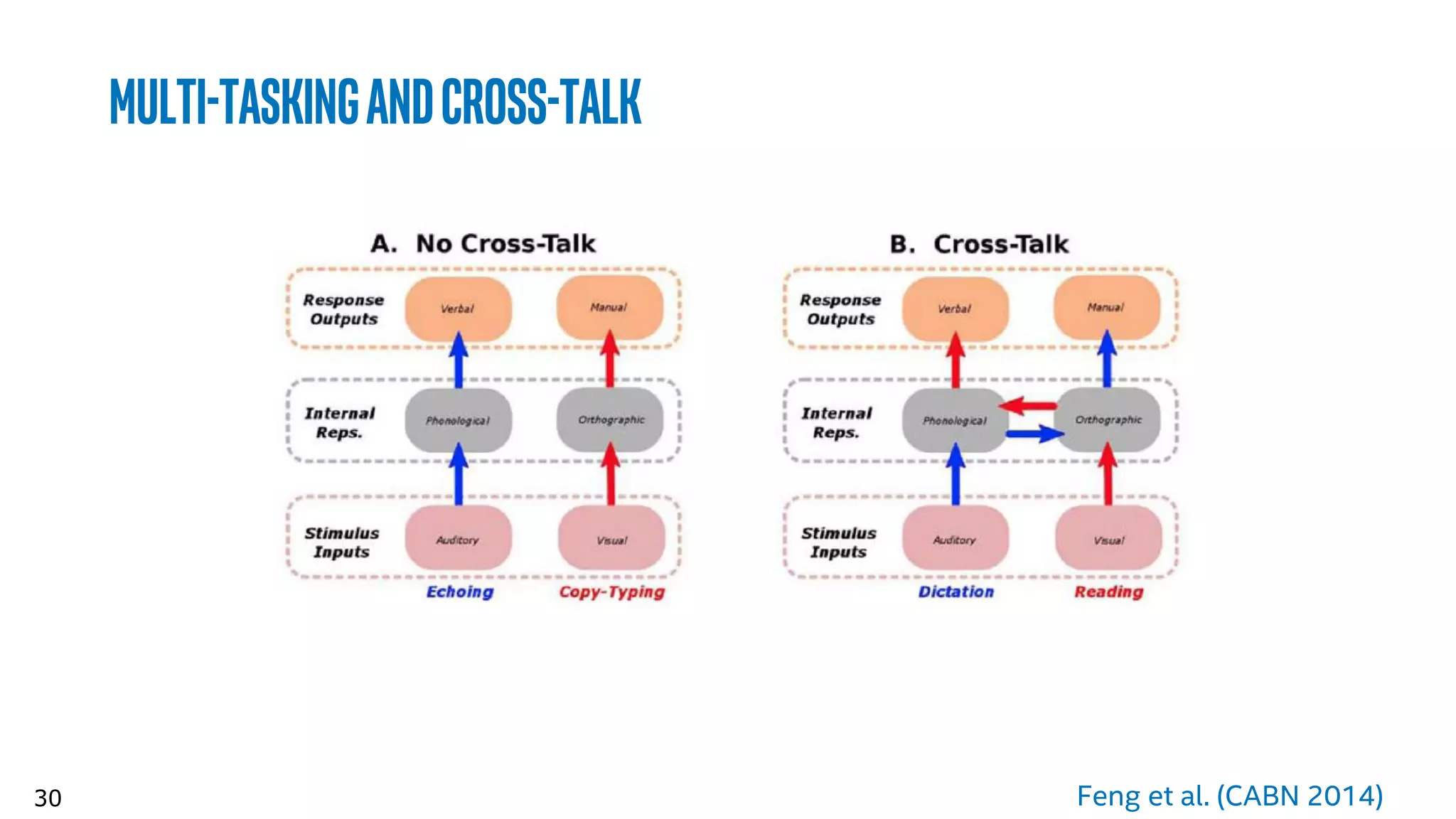

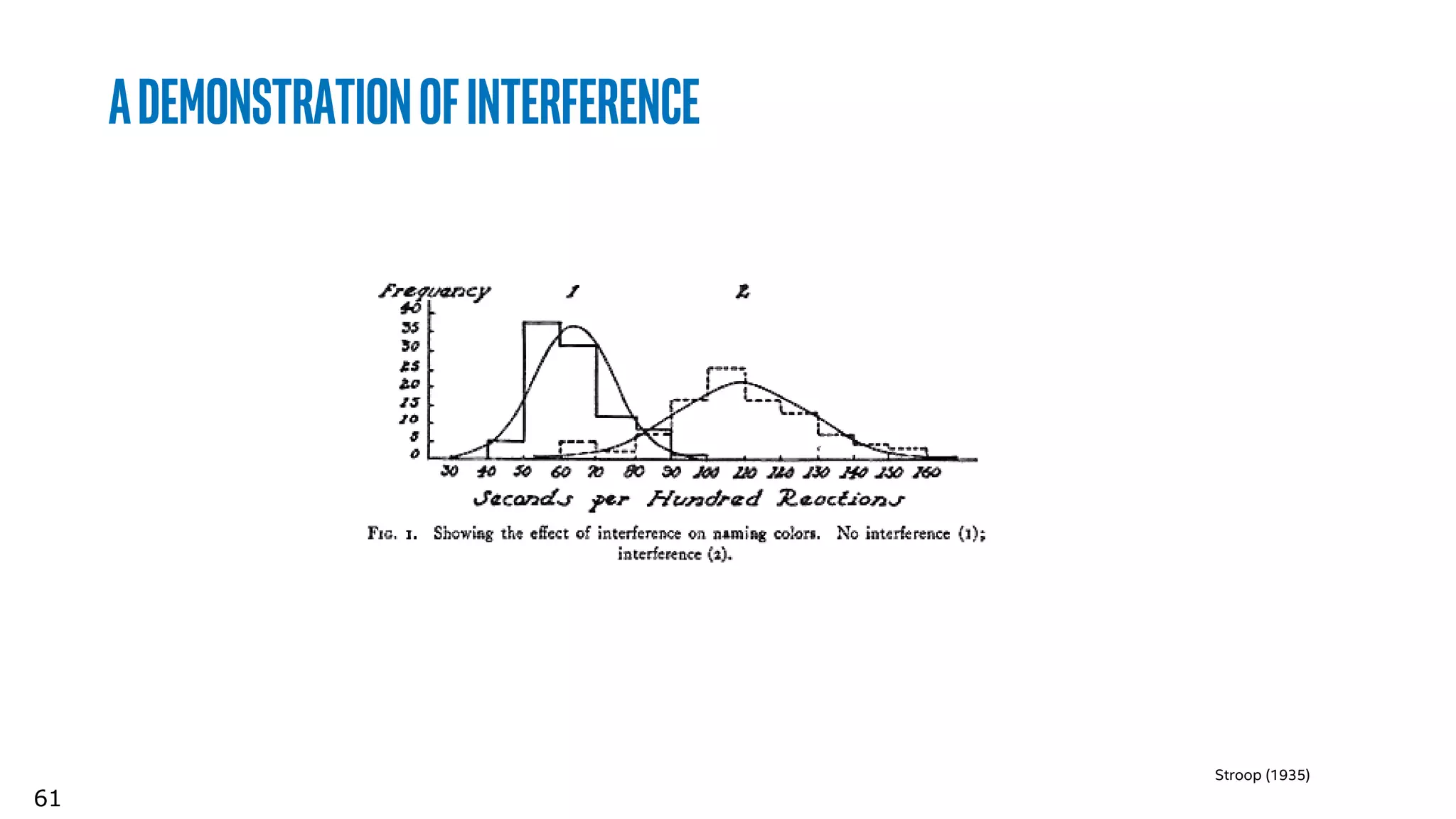

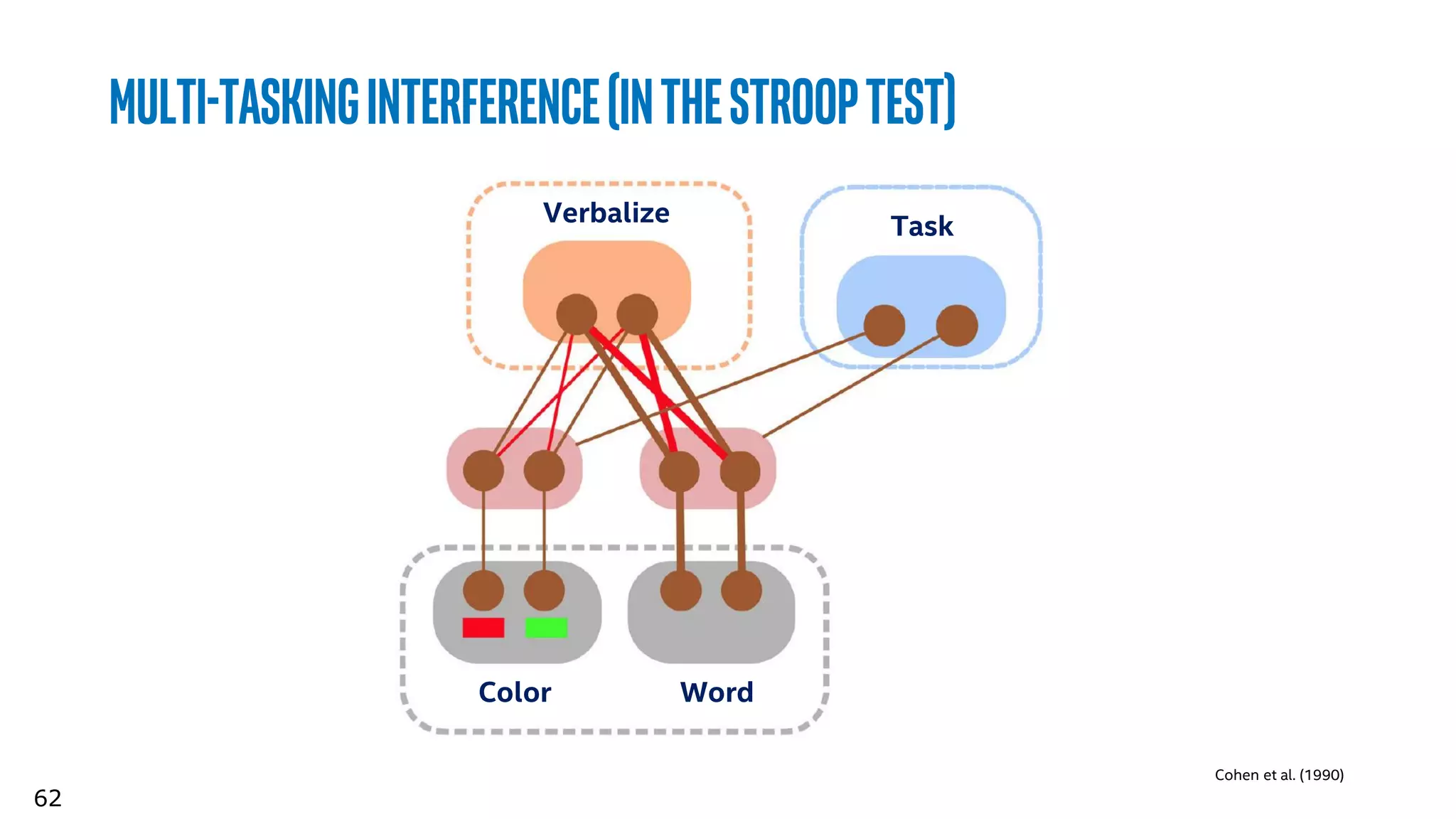

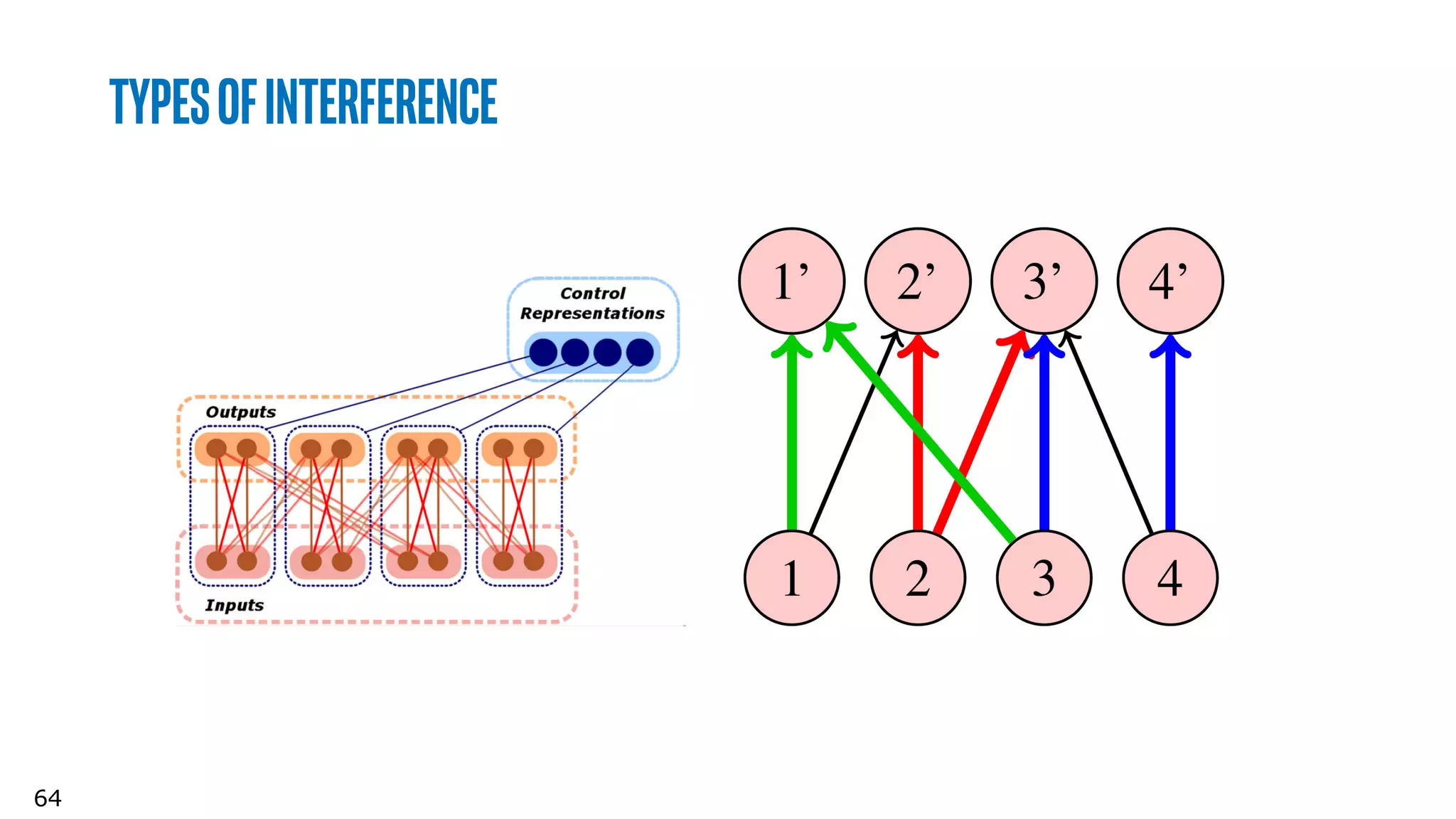

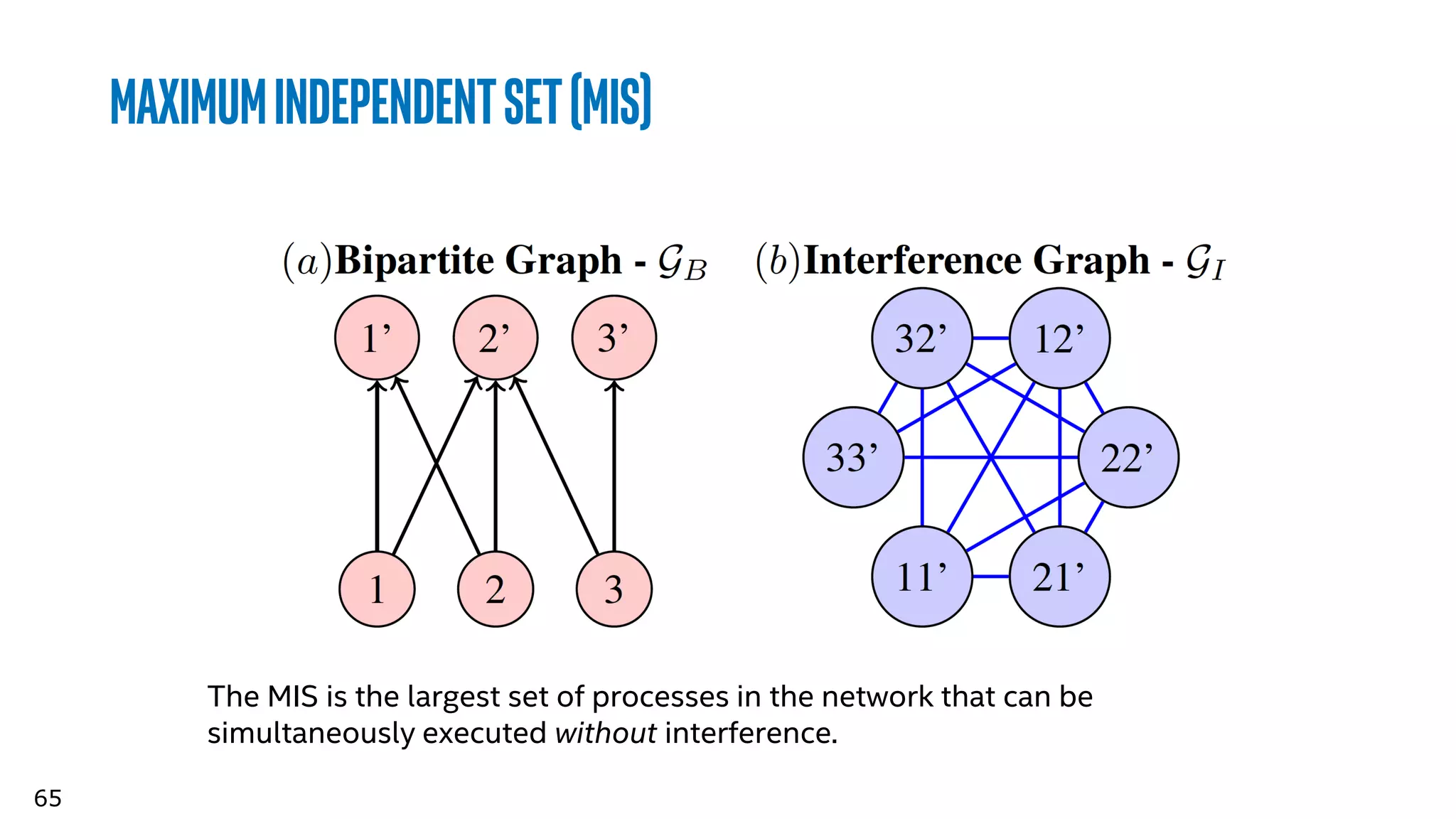

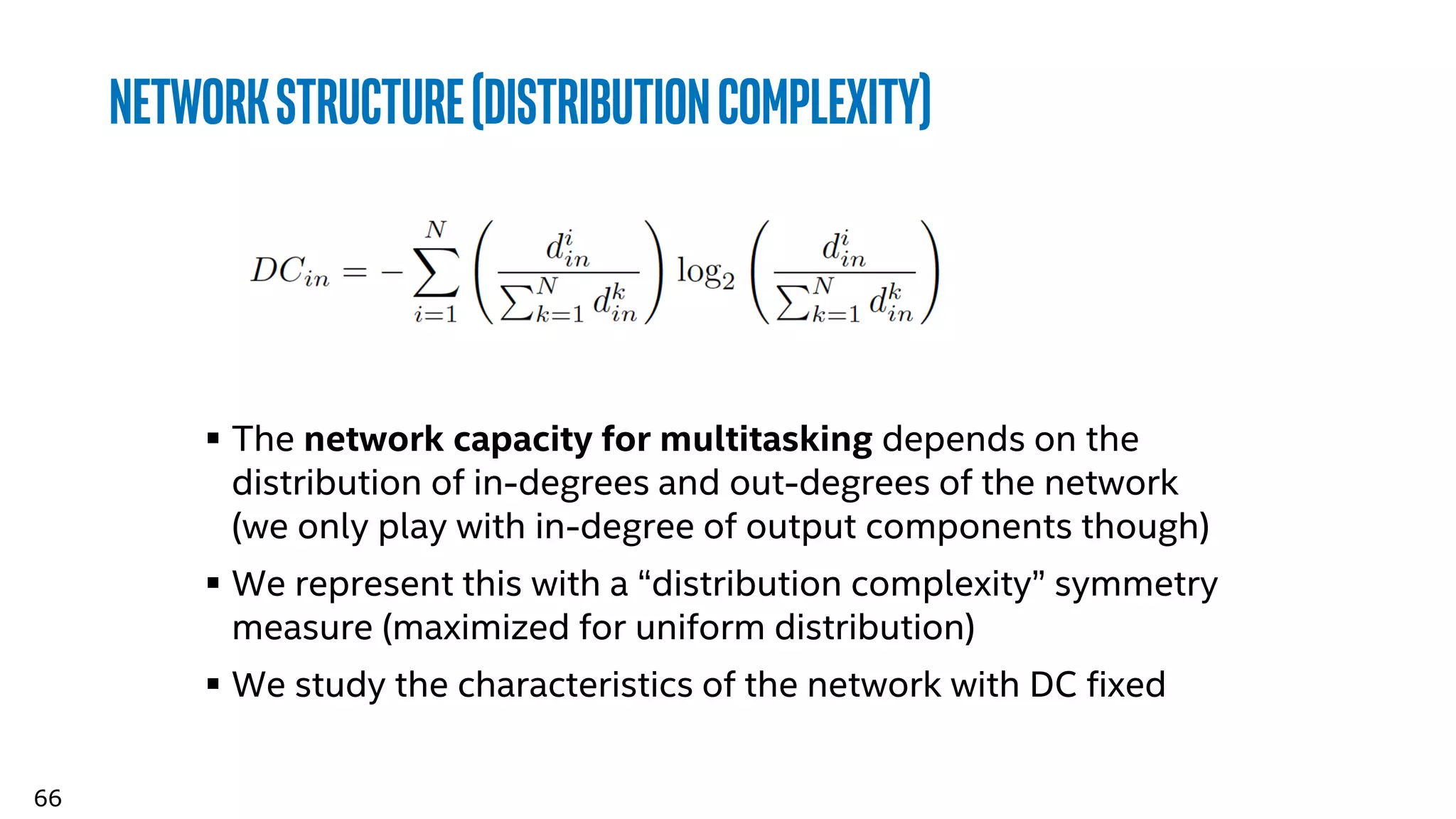

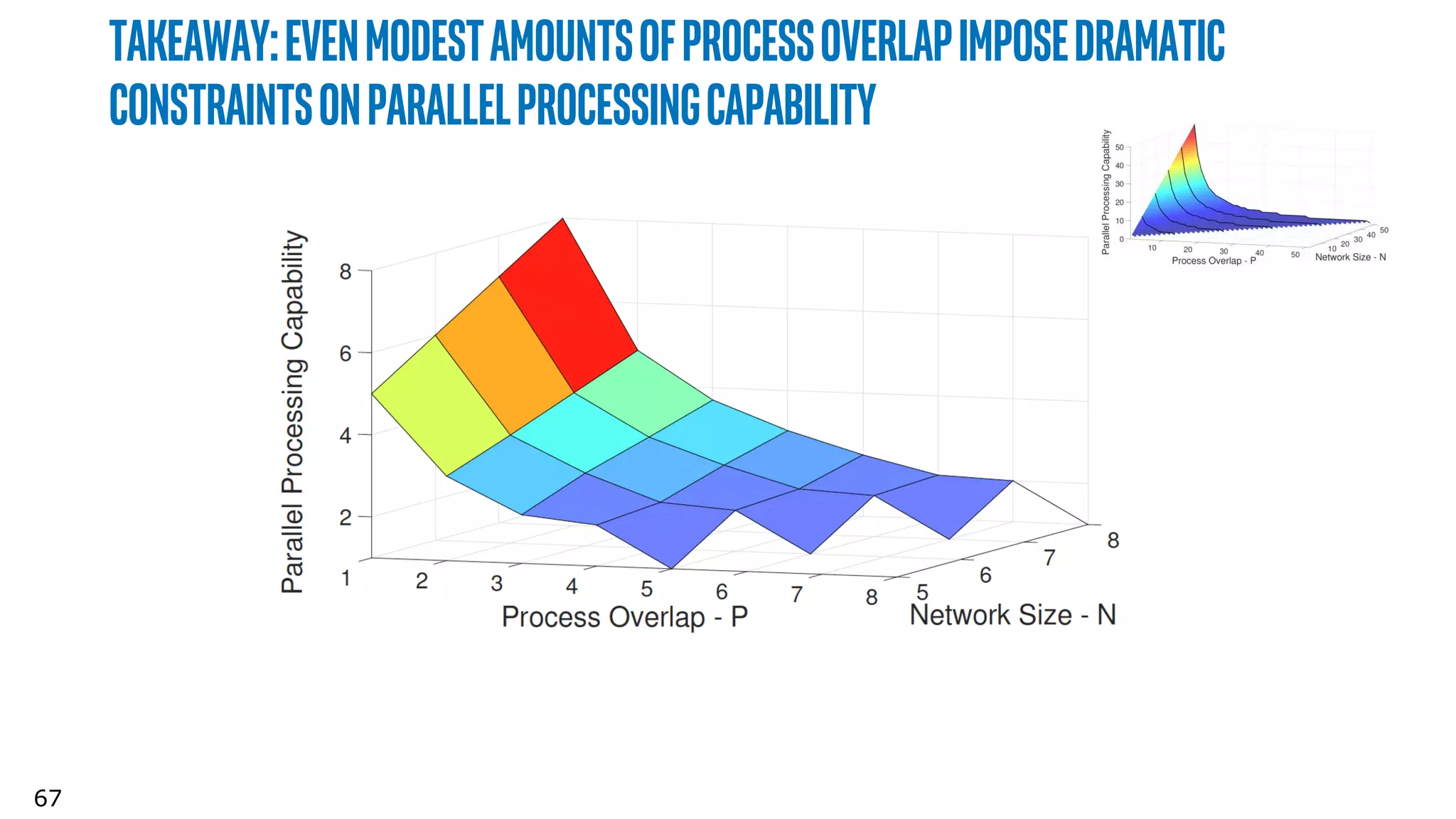

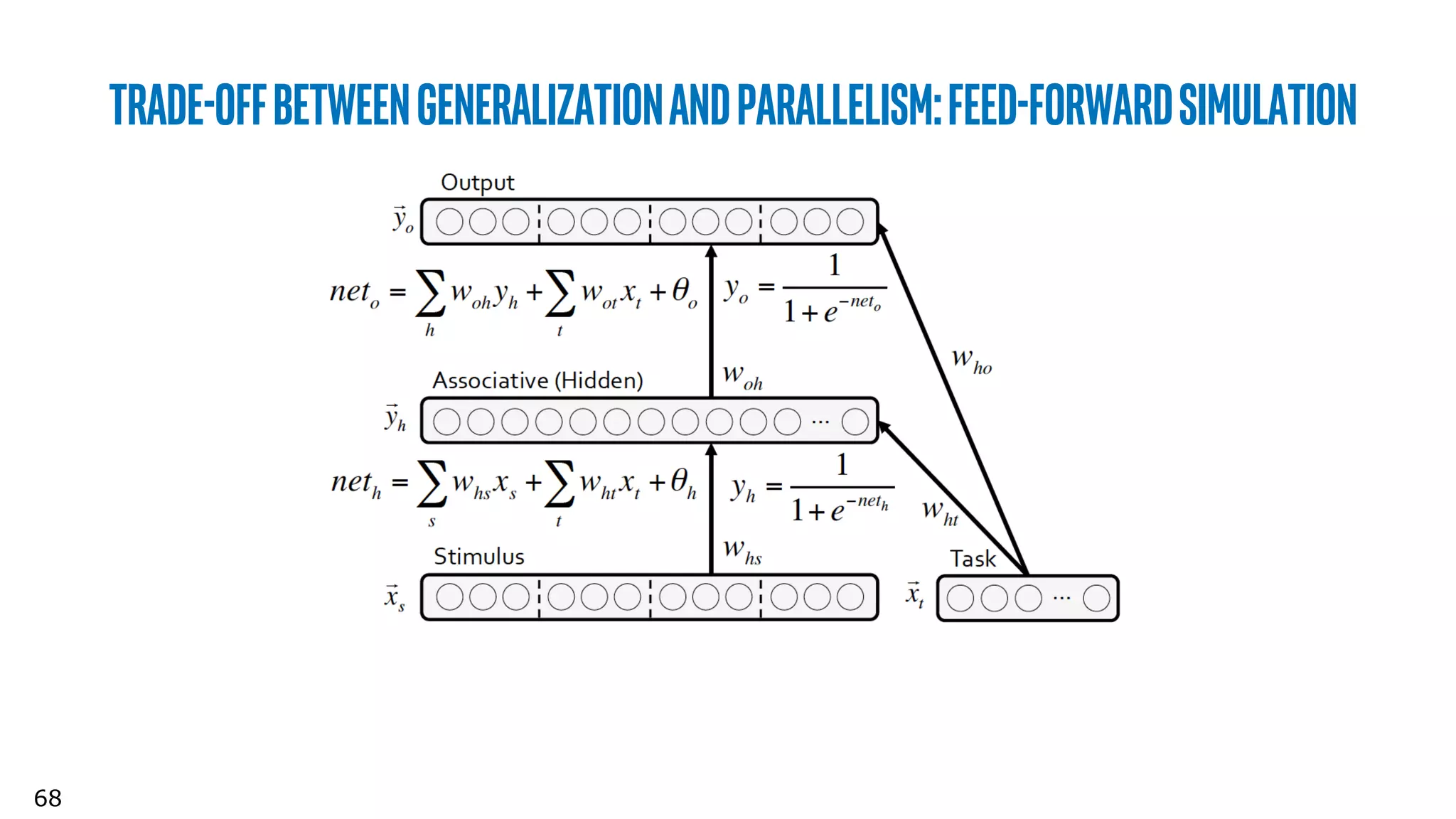

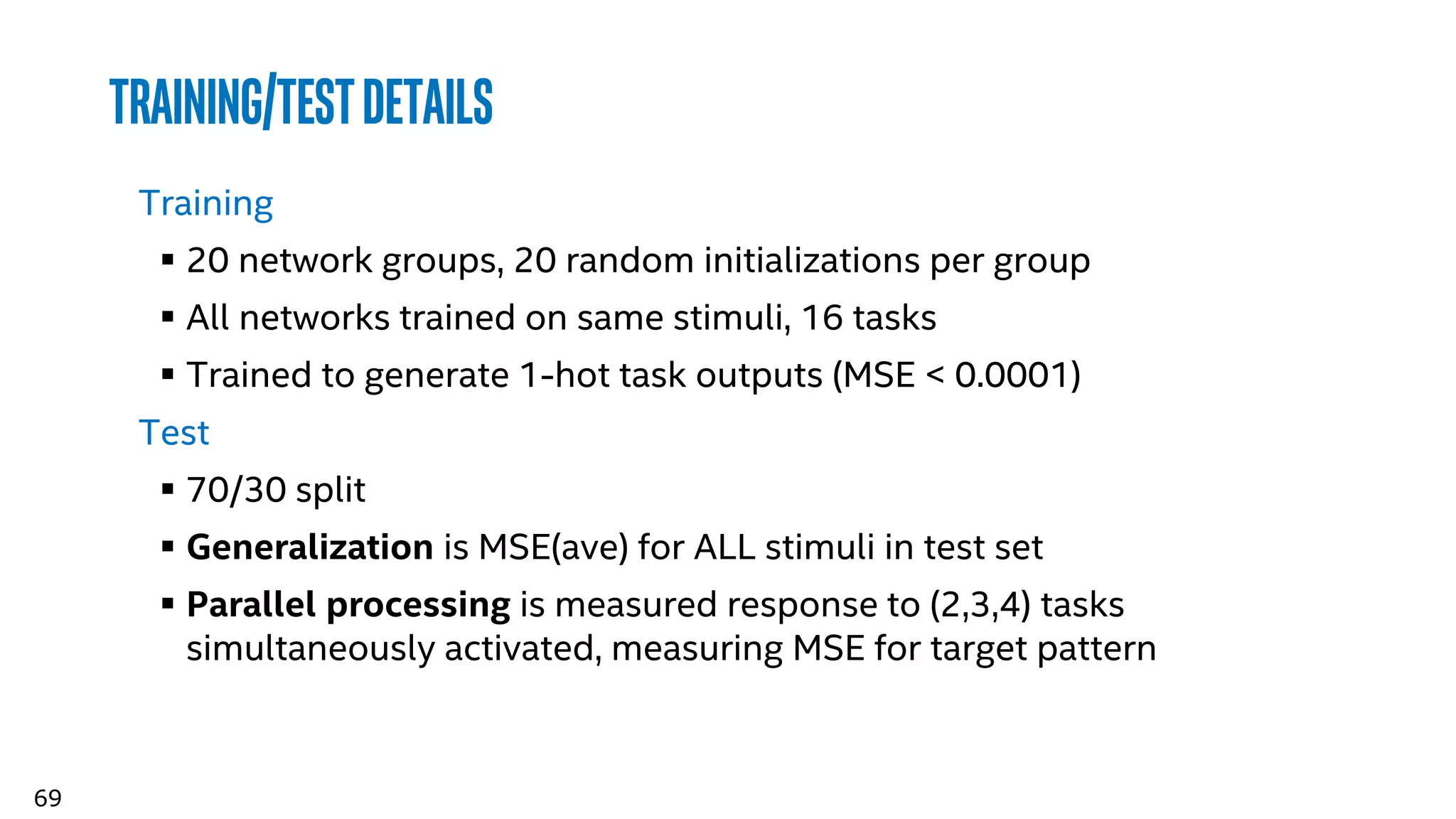

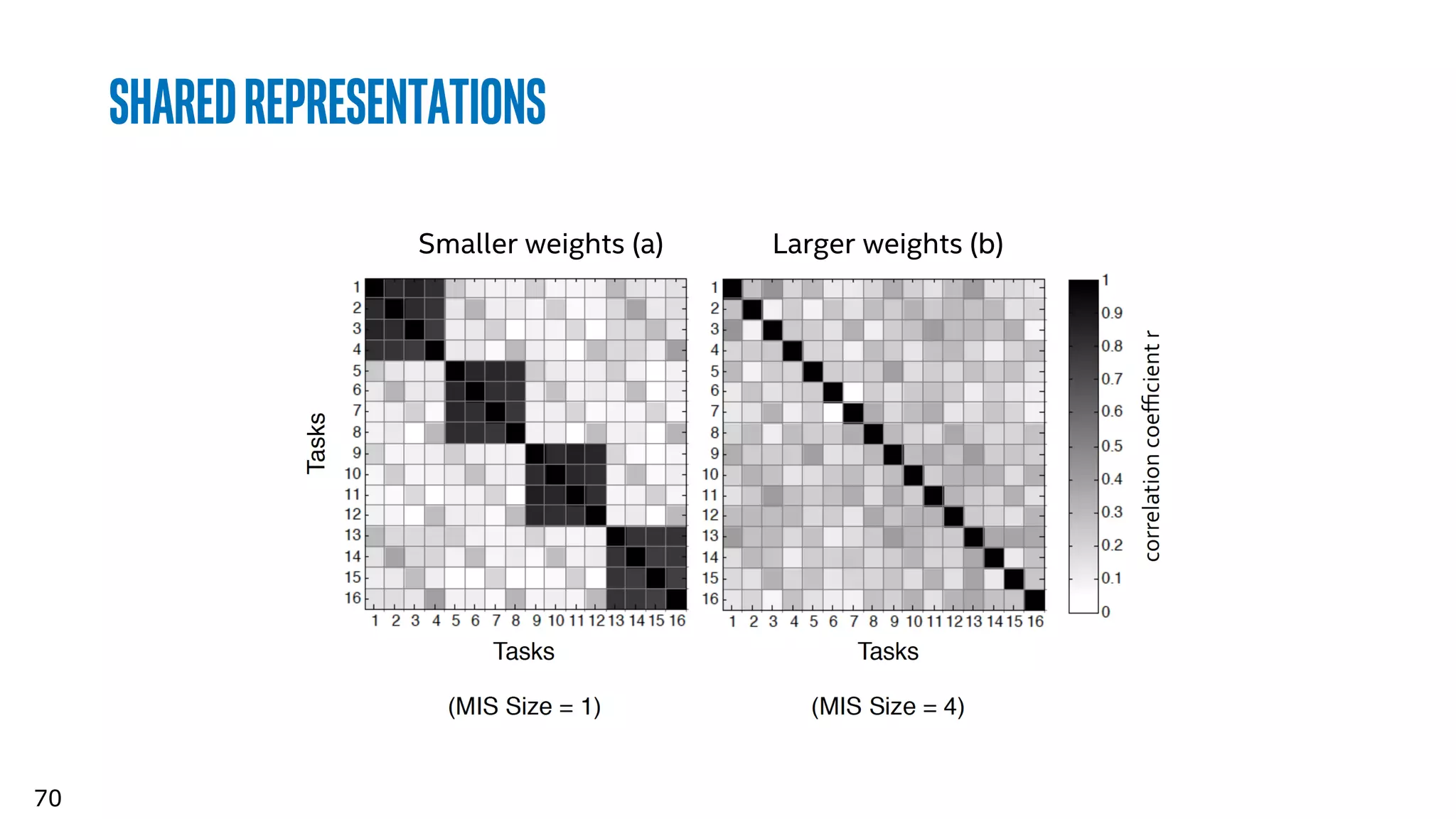

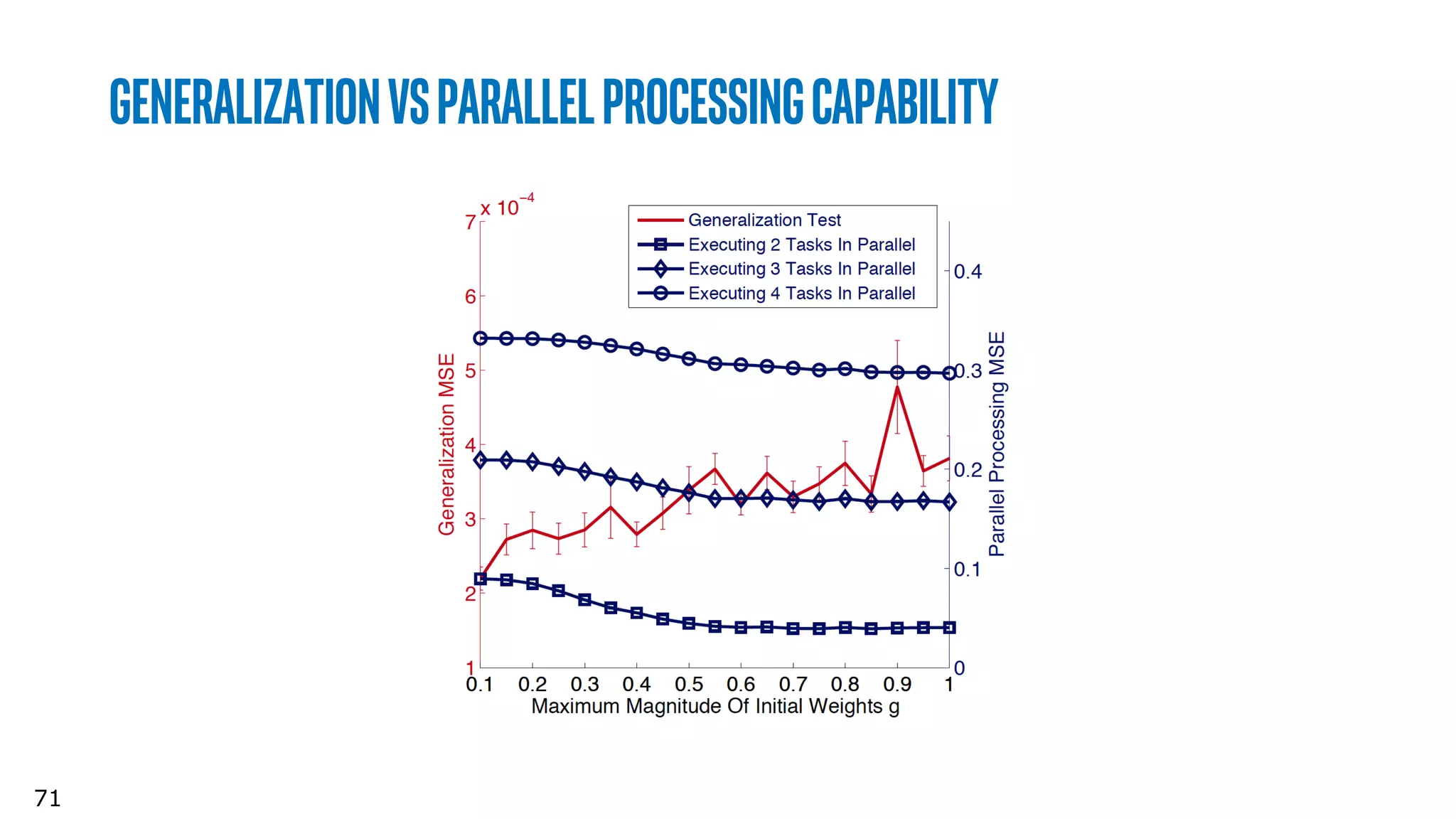

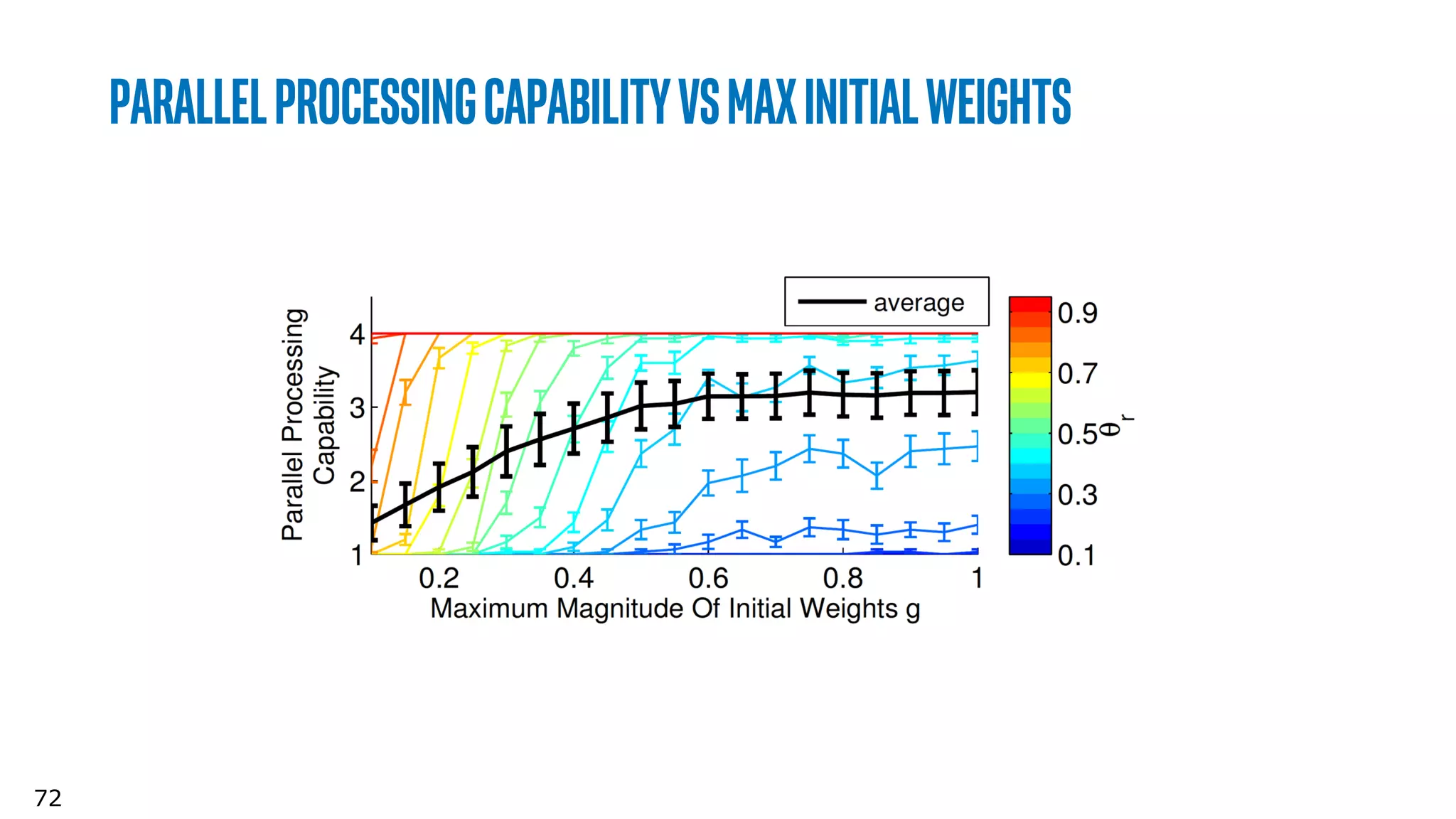

The document discusses the intersection of cognitive neuroscience and deep learning, exploring how insights from neural mechanisms can inform machine learning techniques. It highlights challenges in multitasking and representation learning, along with the trade-offs between generalization and parallel processing in both human cognition and artificial neural networks. The conclusions emphasize the need for further research into the capabilities and limitations of neural networks when performing multiple tasks simultaneously.