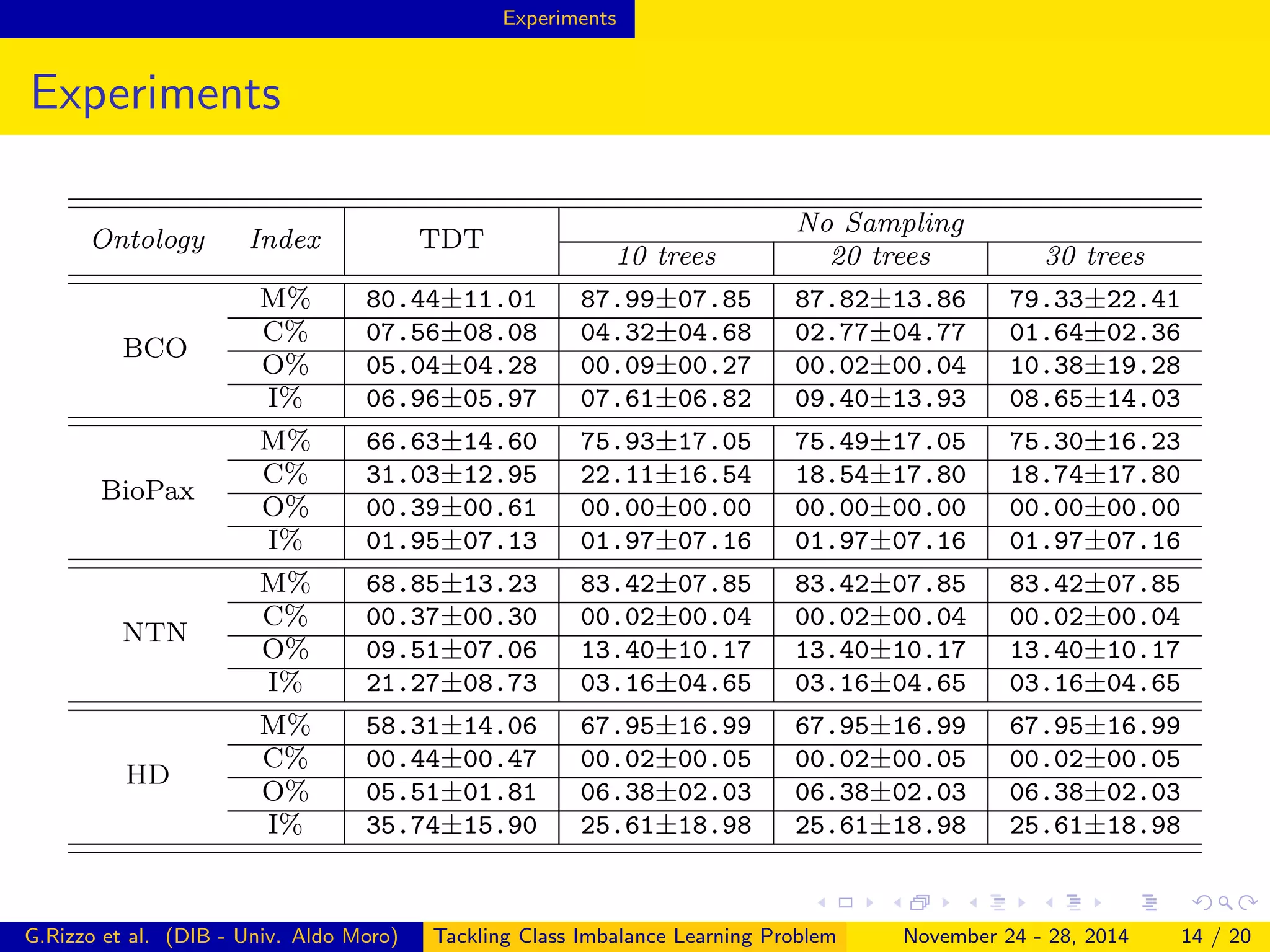

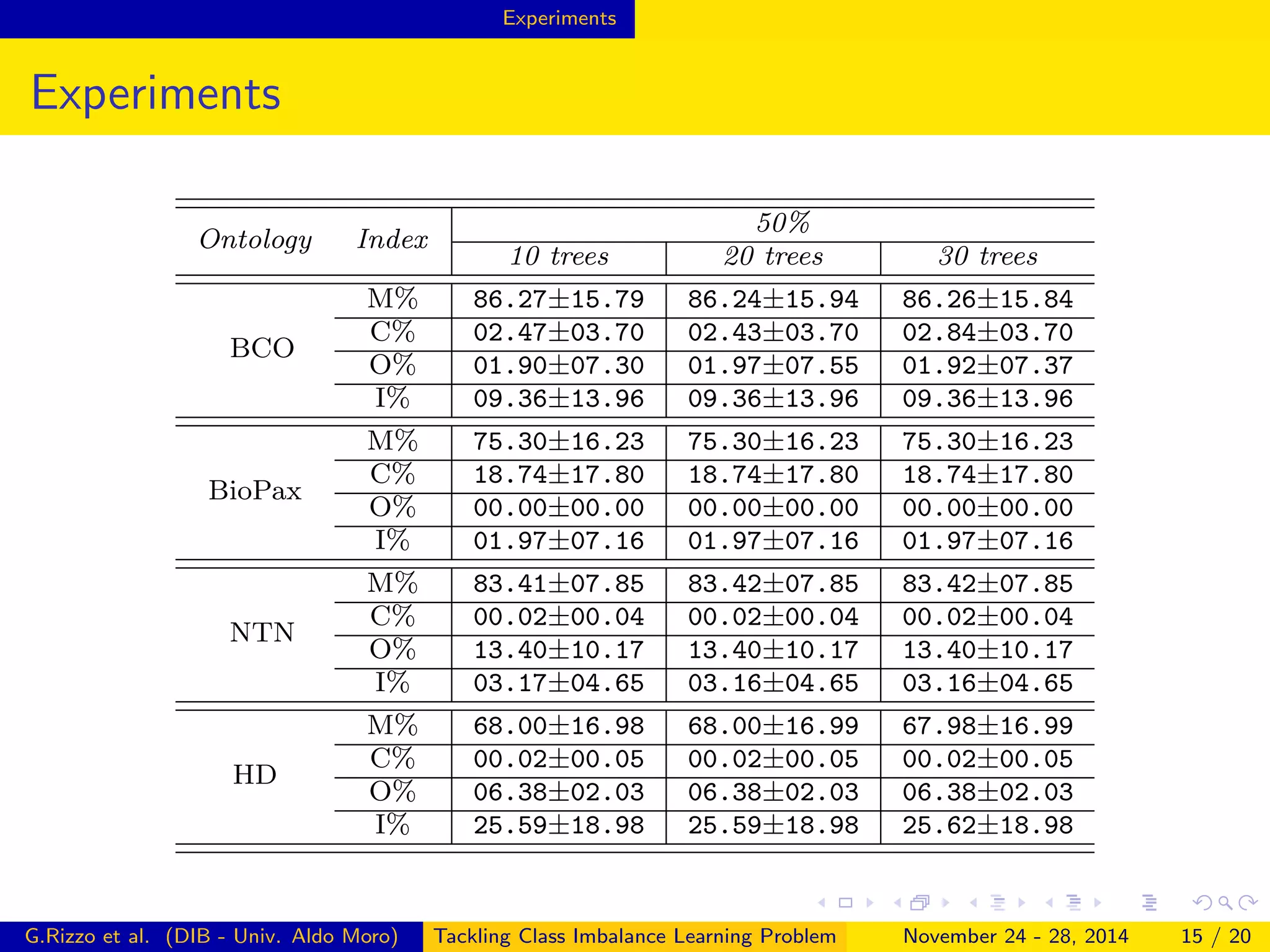

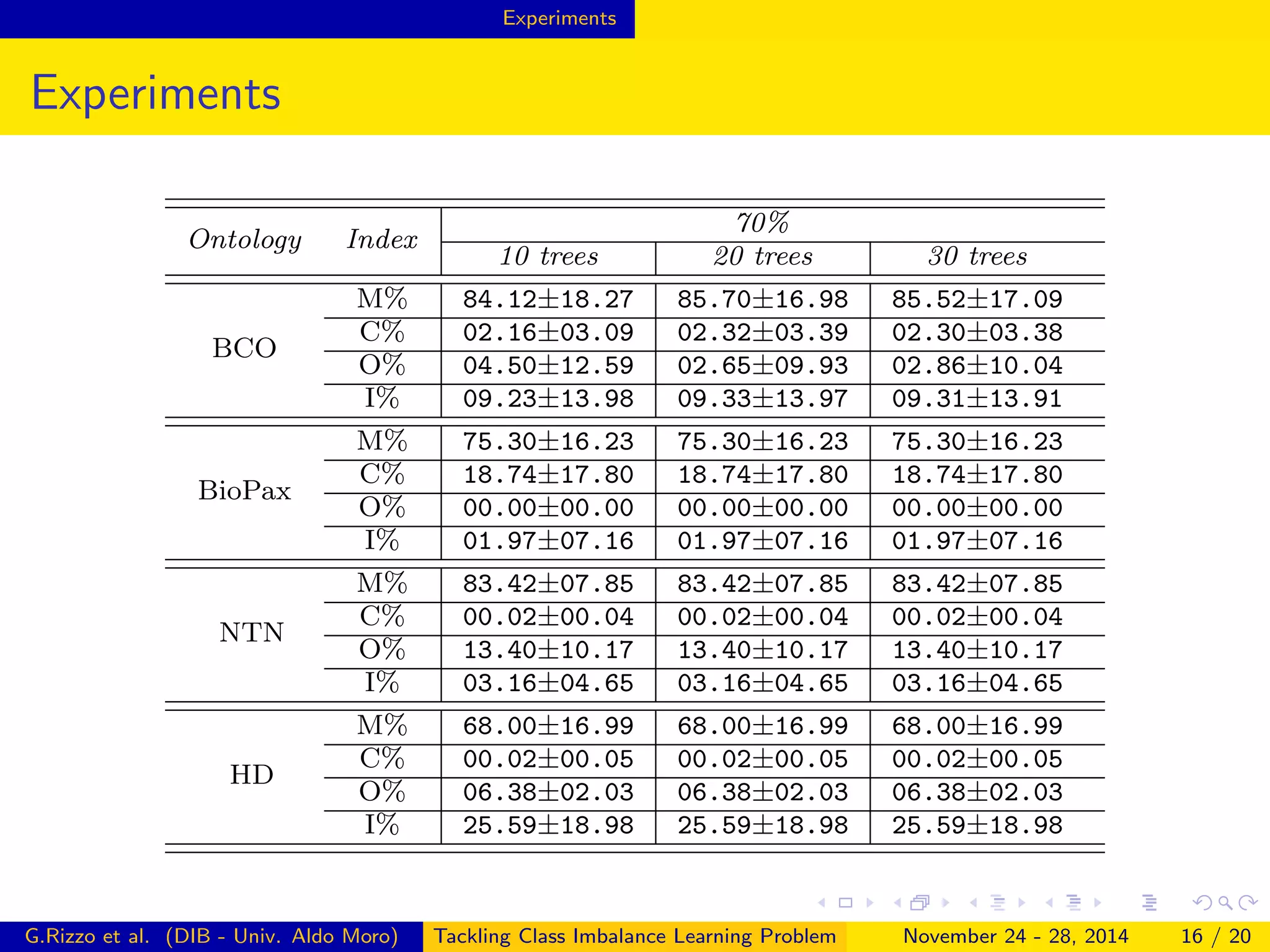

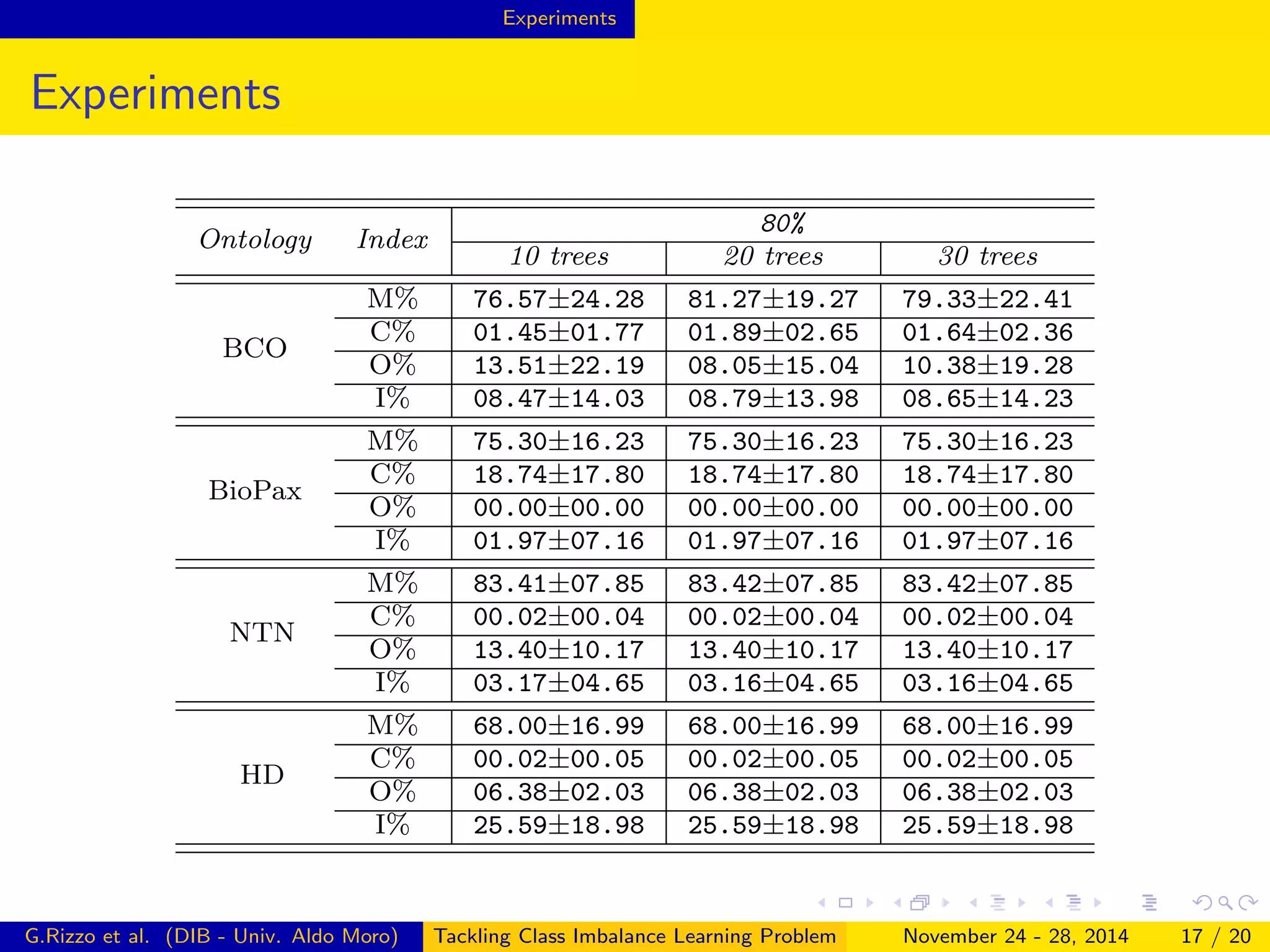

The document describes a framework for handling class imbalance when learning from Semantic Web knowledge bases. The framework combines sampling strategies with ensemble methods such as bagging: sampling produces multiple balanced training subsets, and a weak learner is trained on each one. One instance of the framework, the Terminological Random Forest, uses terminological decision trees as its weak learners. Experiments on several ontologies show that the framework outperforms single classifiers, agreeing with a reasoner's answers in up to 87% of cases while keeping commission rates low.
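The idea of pairing sampling with bagging can be illustrated with a minimal Python sketch. It is not the document's implementation. Several choices here are assumptions made for illustration: undersampling every class to the size of the rarest one, drawing with replacement, and combining predictions by simple majority vote. The weak learner `fit_nearest_mean` is a hypothetical stand-in on numeric toy data; a real Terminological Random Forest would train terminological decision trees over individuals in a knowledge base instead.

```python
import random
from collections import Counter


def balanced_subsets(examples, labels, n_subsets, seed=0):
    """Return n_subsets lists of (example, label) pairs with equal class counts."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(examples, labels):
        by_class.setdefault(y, []).append(x)
    # Assumption: every class is resampled down to the size of the rarest class.
    size = min(len(xs) for xs in by_class.values())
    subsets = []
    for _ in range(n_subsets):
        subset = []
        for y, xs in by_class.items():
            # Bootstrap step: draw with replacement, as in bagging.
            subset.extend((x, y) for x in rng.choices(xs, k=size))
        subsets.append(subset)
    return subsets


def fit_nearest_mean(subset):
    """Hypothetical stand-in weak learner (NOT a terminological decision tree)."""
    sums, counts = Counter(), Counter()
    for x, y in subset:
        sums[y] += x
        counts[y] += 1
    means = {y: sums[y] / counts[y] for y in counts}
    # Predict the class whose mean is closest to the query value.
    return lambda x: min(means, key=lambda y: abs(x - means[y]))


def train_ensemble(examples, labels, n_learners=5, fit=fit_nearest_mean):
    """Train one weak learner per balanced subset."""
    return [fit(s) for s in balanced_subsets(examples, labels, n_learners)]


def predict(ensemble, x):
    """Combine the weak learners by simple majority vote (an assumption)."""
    votes = Counter(model(x) for model in ensemble)
    return votes.most_common(1)[0][0]


# Toy imbalanced data: eight negatives, two positives.
examples = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 20.0, 21.0]
labels = [-1] * 8 + [+1] * 2
ensemble = train_ensemble(examples, labels)
```

Calling `predict(ensemble, 25.0)` asks each of the five learners for a label and returns the most common answer. Because each learner sees a balanced subset, the majority class cannot dominate training simply by being more frequent.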