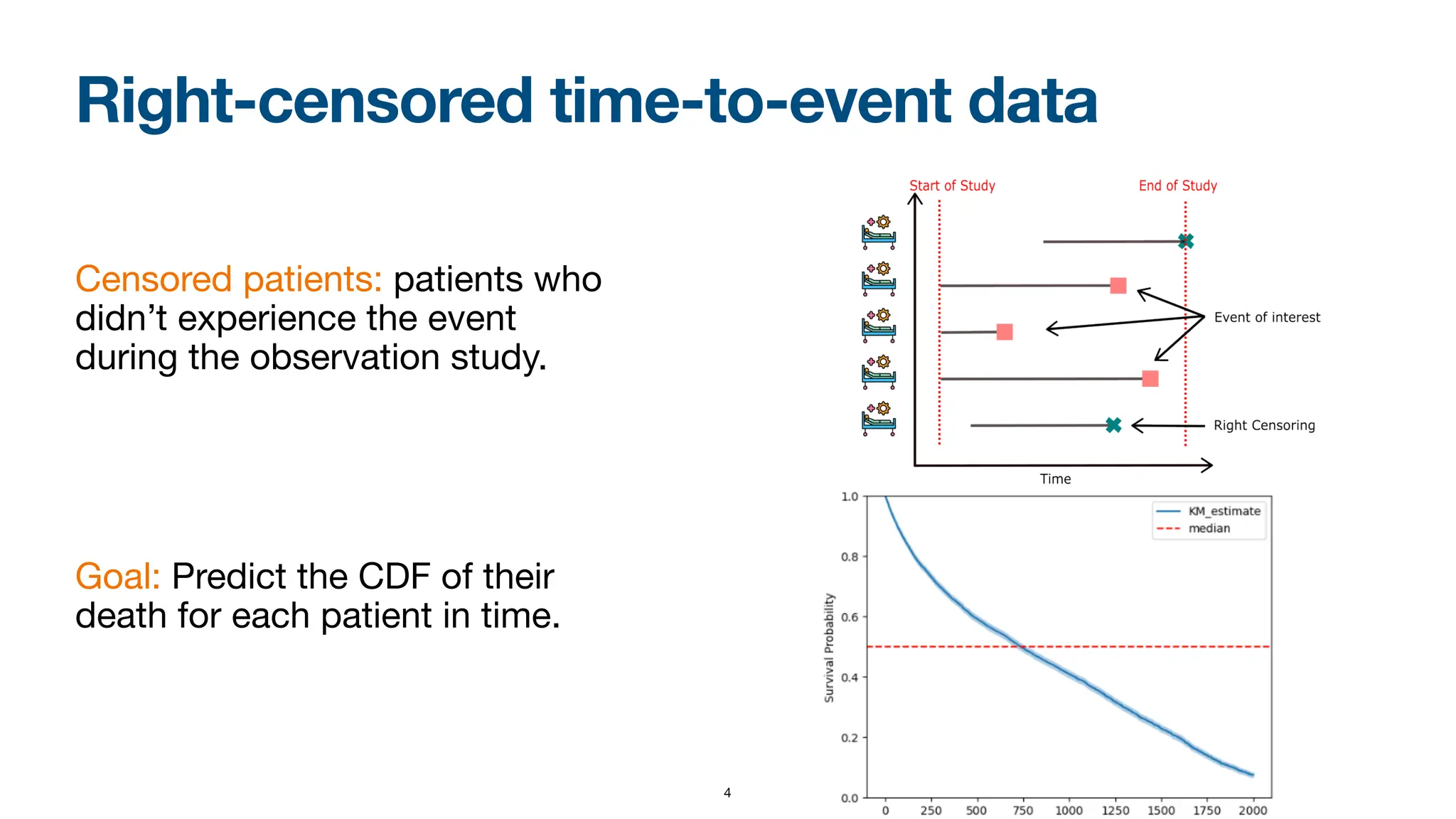

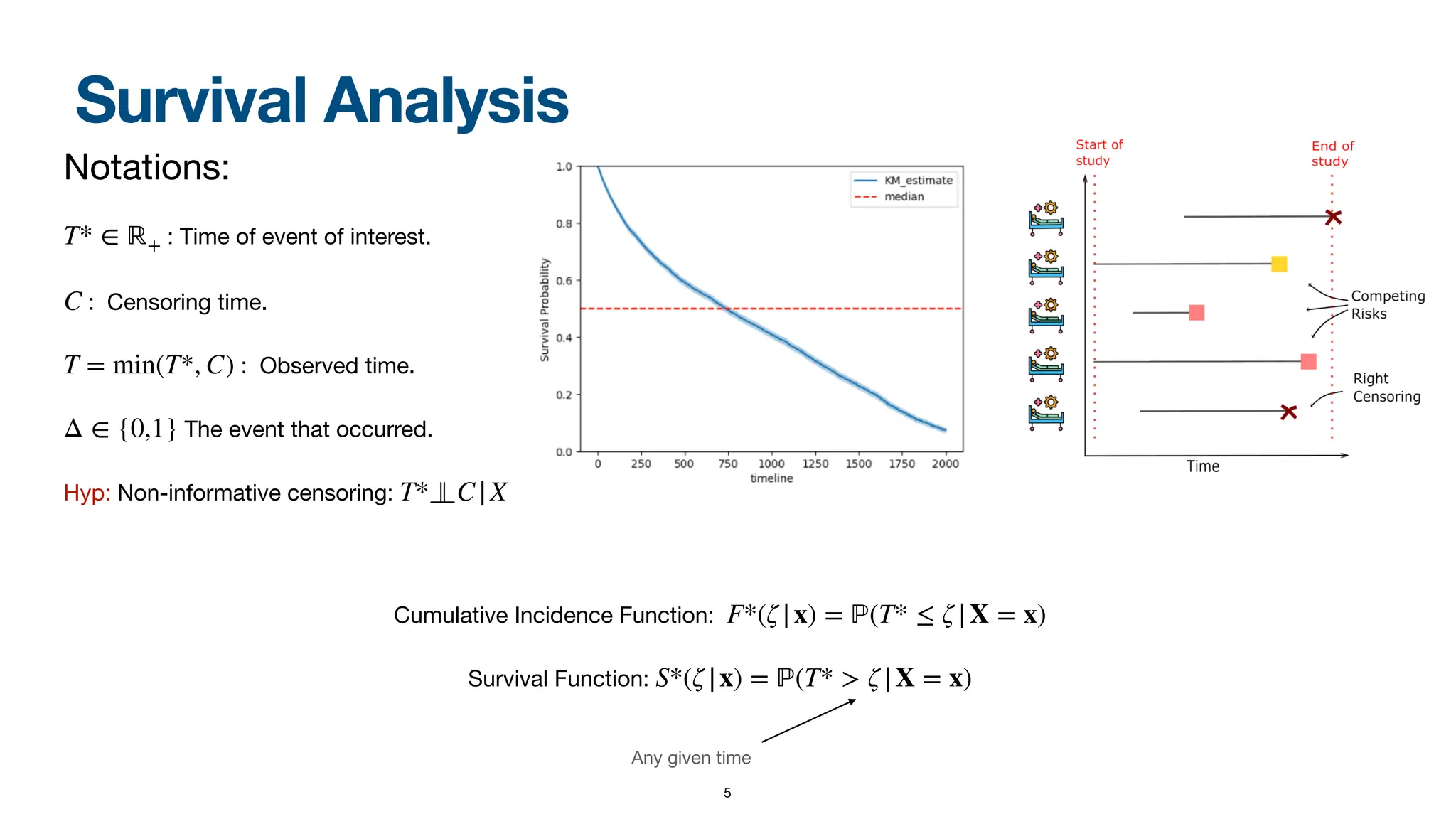

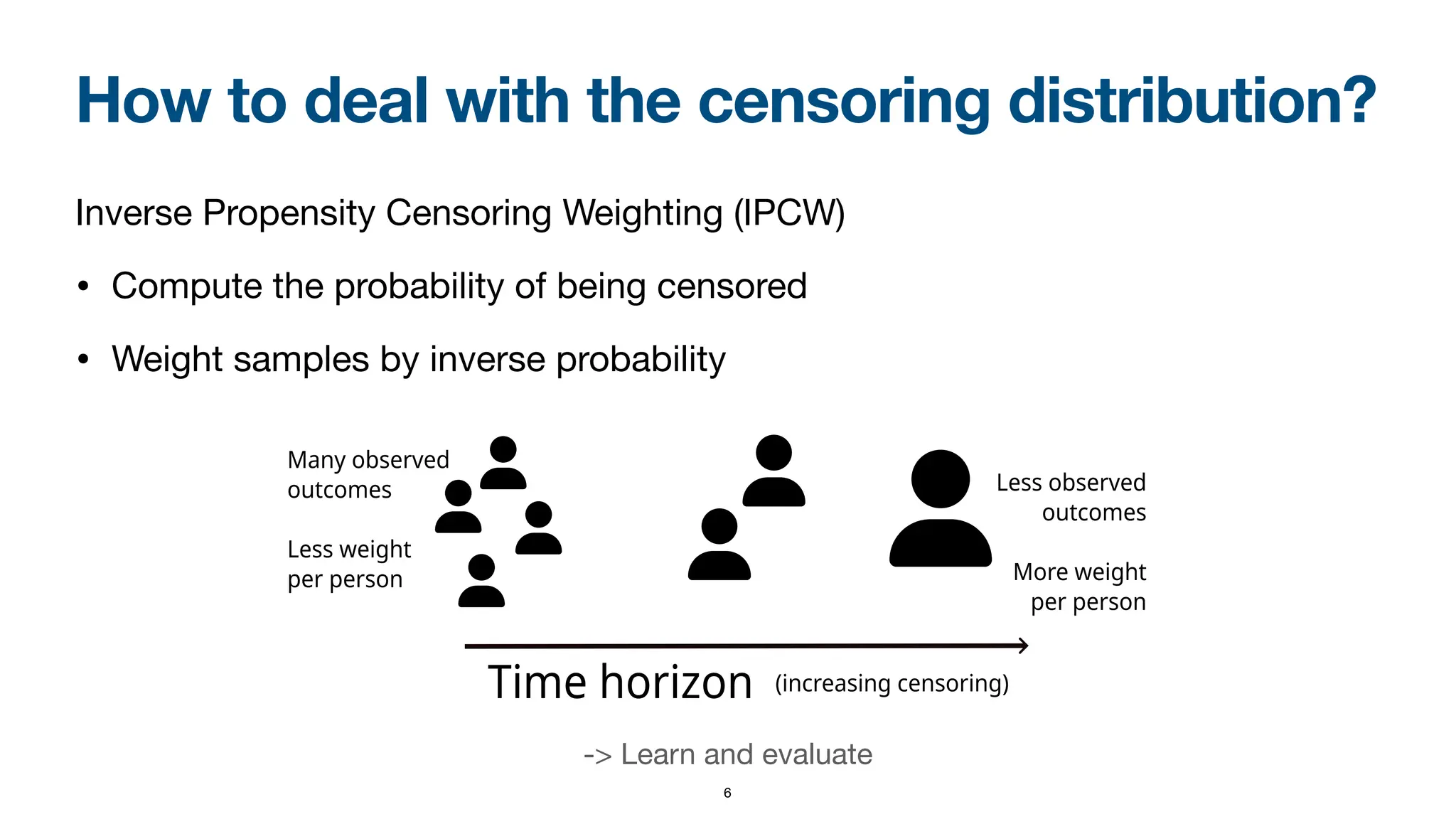

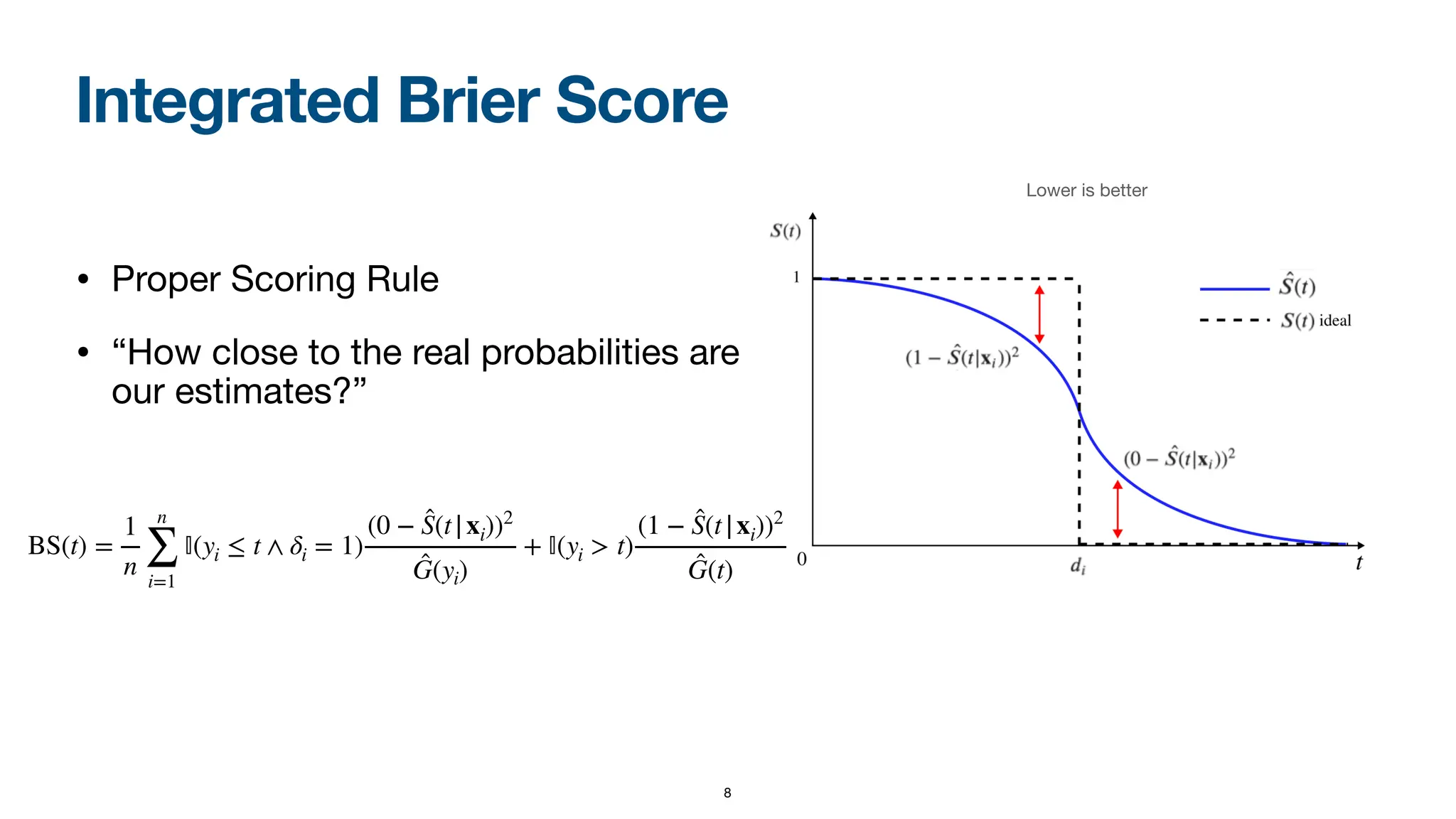

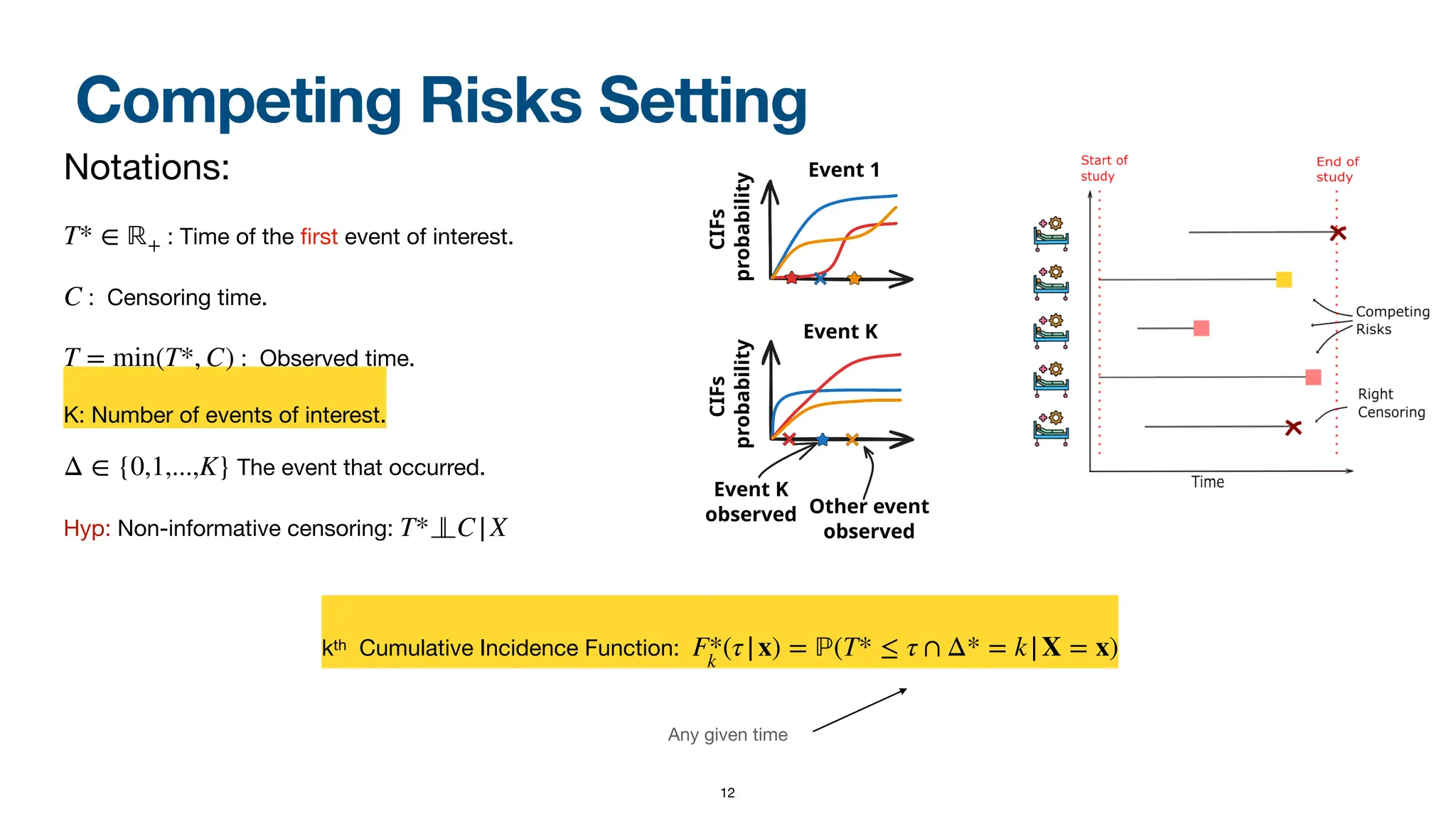

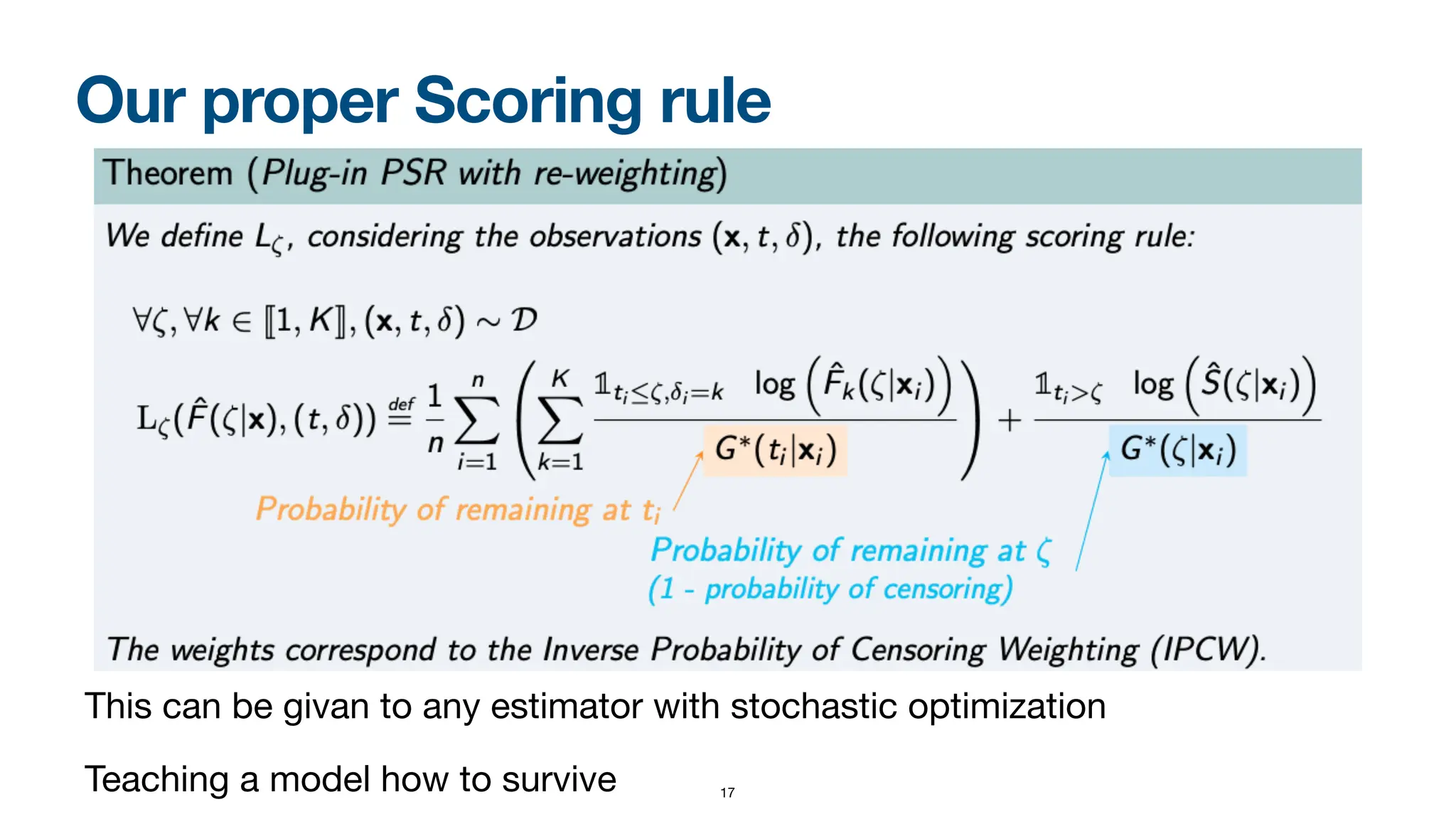

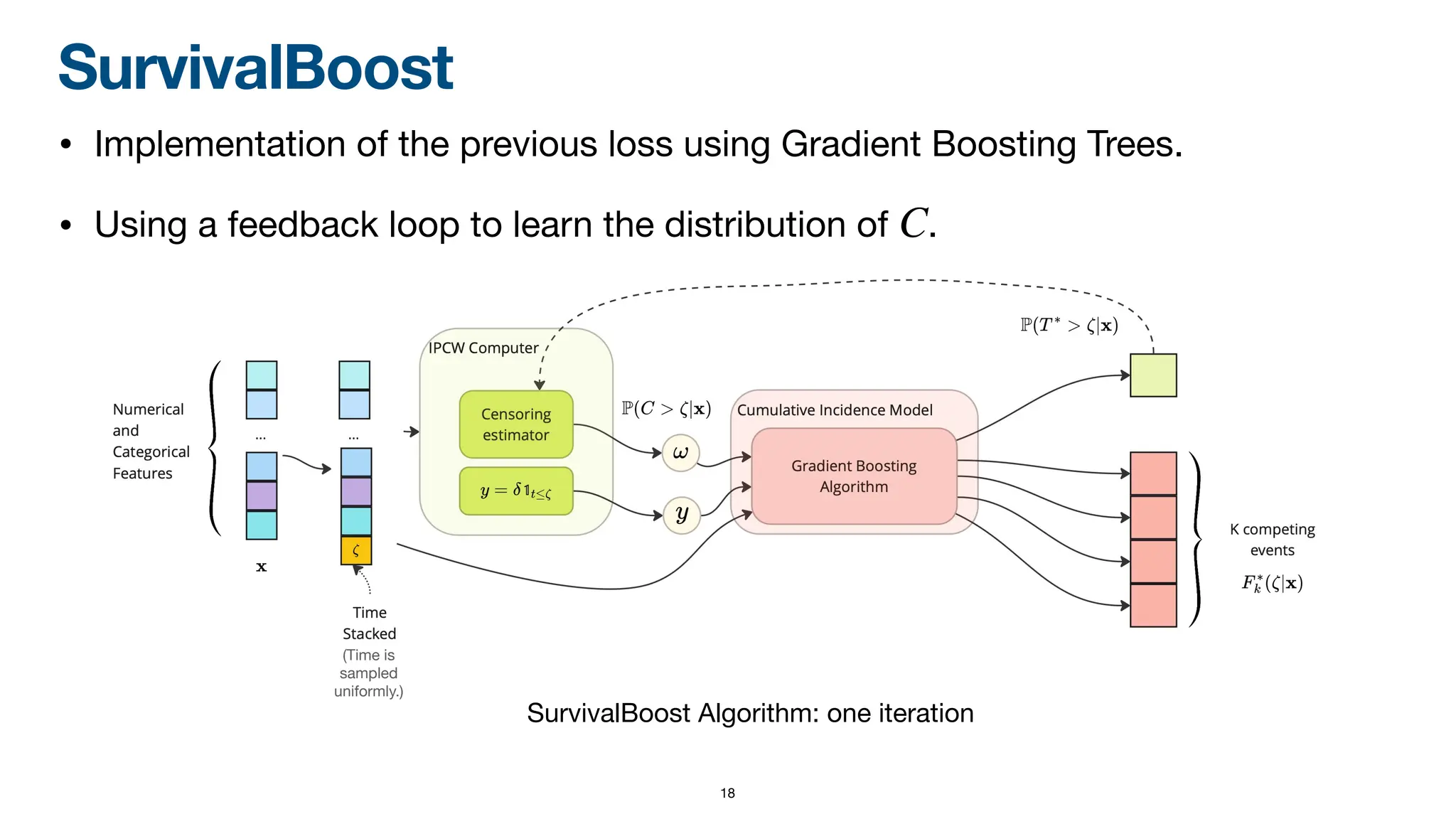

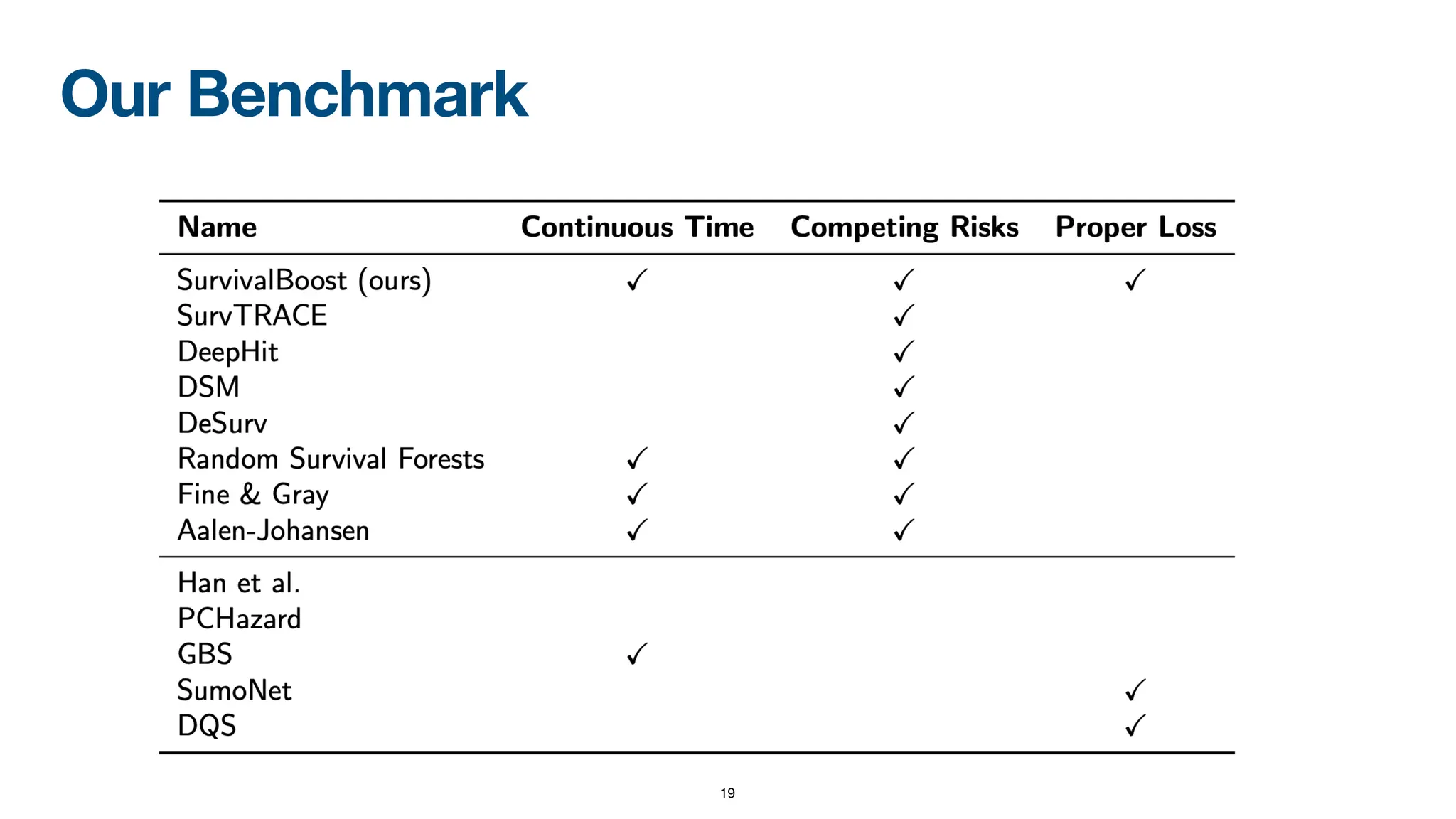

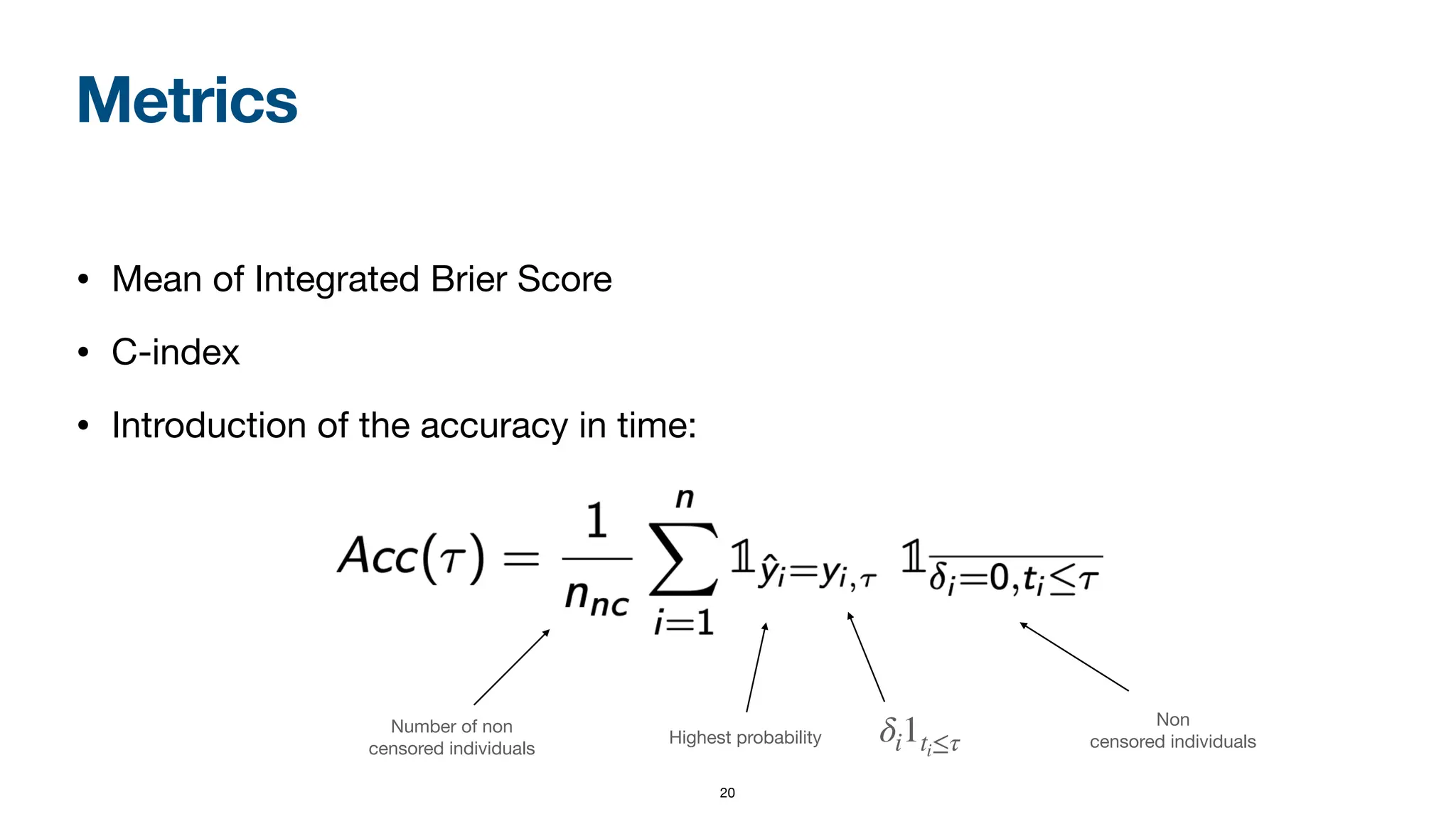

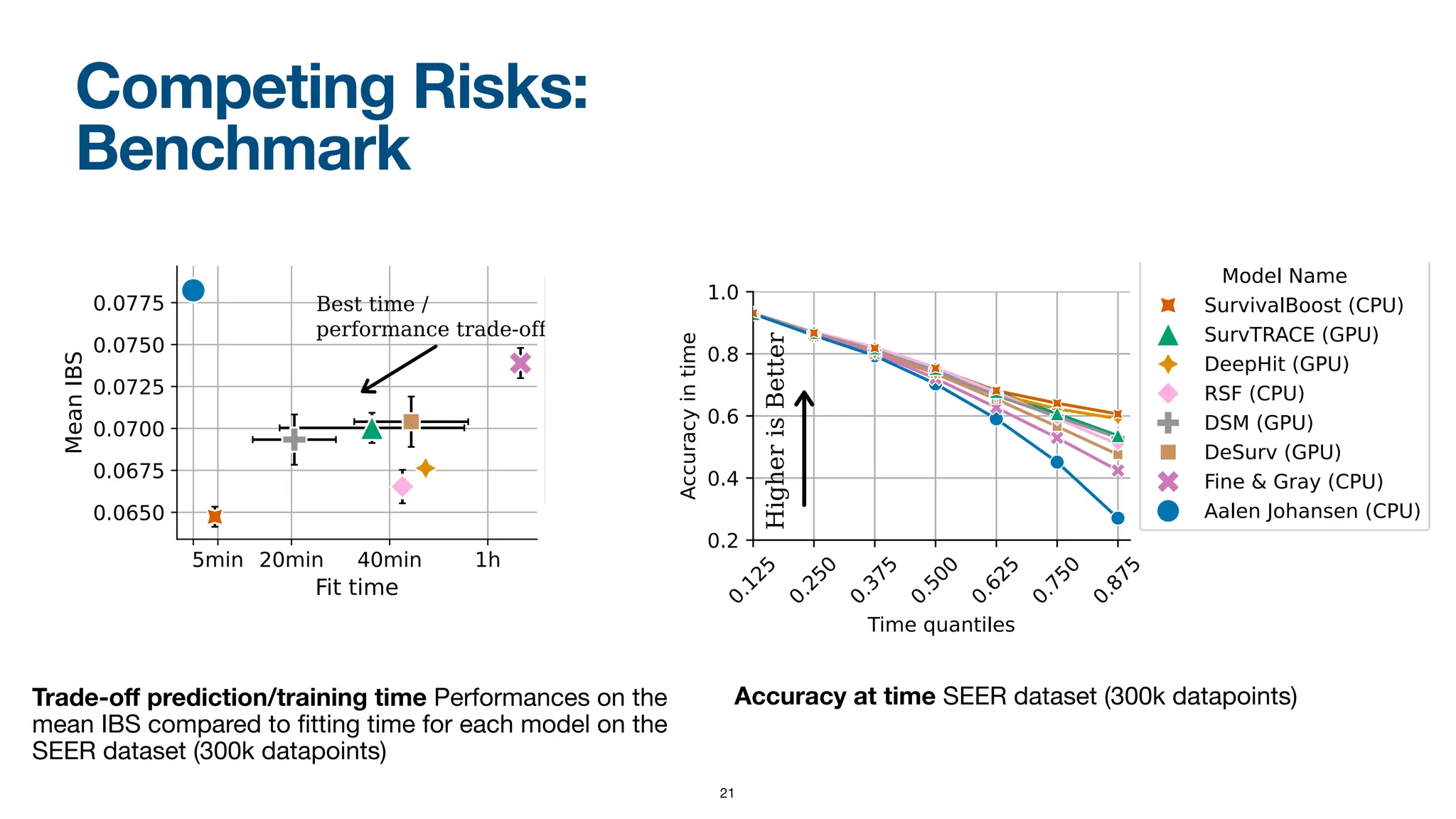

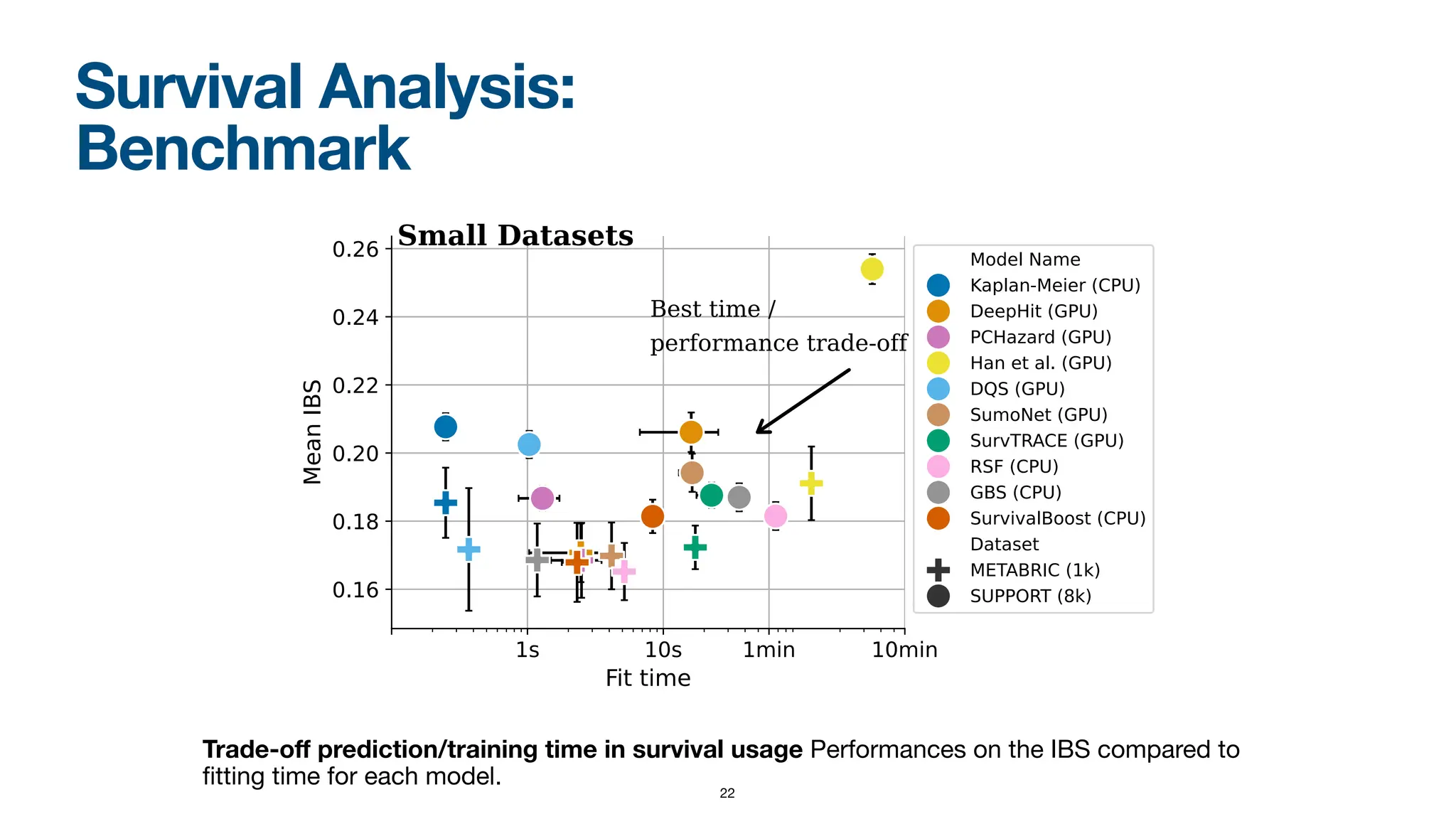

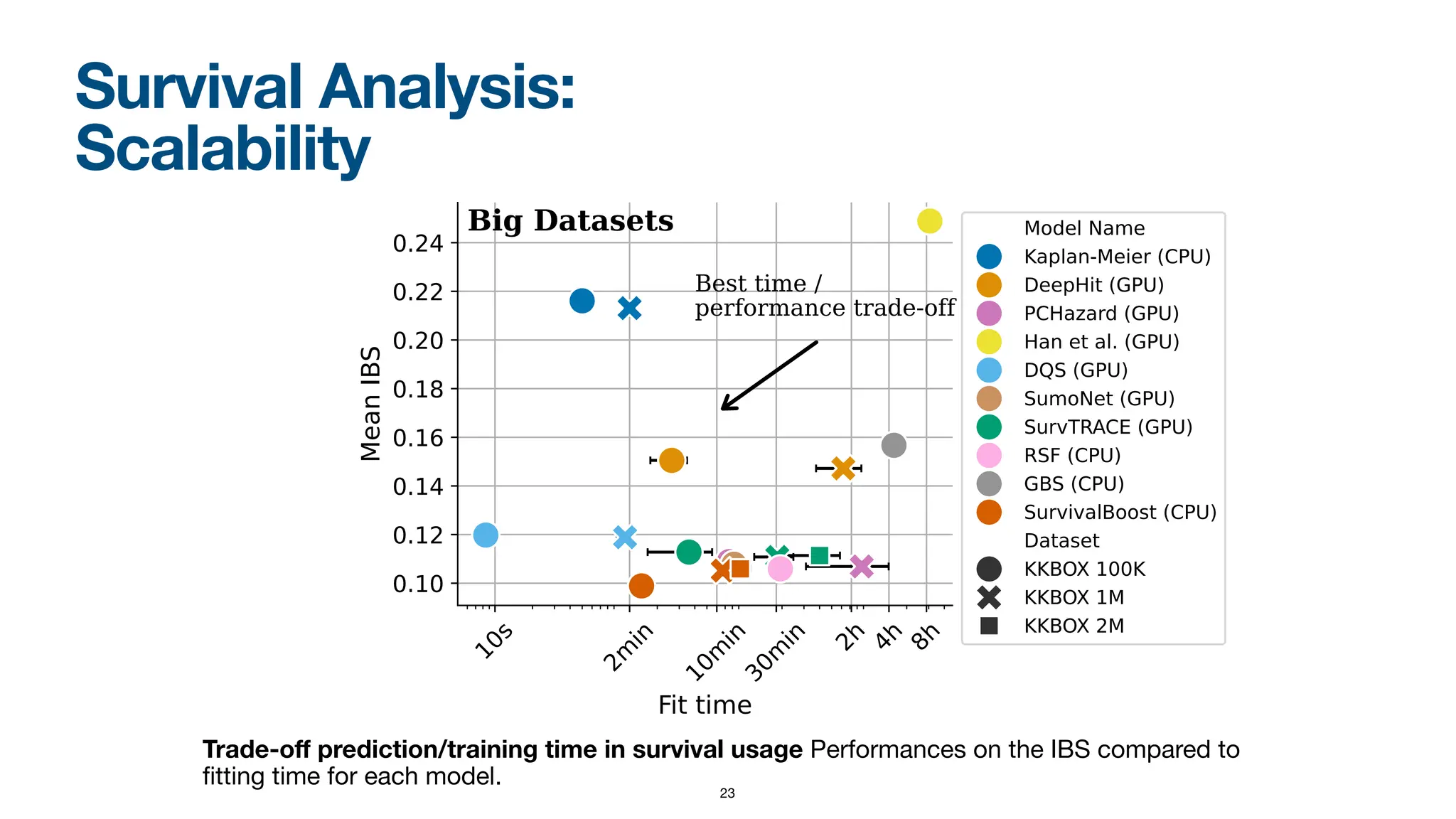

Survival analysis predicts the time until an event of interest occurs, typically from right-censored data, where some outcomes are unobserved because the observation period is limited. When several types of event are possible, the problem gains a classification component: predicting which event is most likely to occur. This setting is known as competing risks.
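To make the setup concrete, here is a minimal sketch of how right-censored competing-risks data is commonly encoded: each individual has an observed duration and an event code, with 0 meaning censored and 1..K naming the event type that occurred first. The function names `censor_competing` and `cumulative_incidence`, the fixed observation horizon, and the toy numbers are illustrative assumptions, not taken from the source. The second function is a plain nonparametric Aalen-Johansen-style estimate of the cumulative incidence of one event type, shown only as a standard baseline and not as the method discussed here.

```python
import numpy as np


def censor_competing(latent_times, horizon):
    """Turn latent per-cause event times into right-censored observations.

    latent_times: array of shape (n, K), hypothetical time of each event type.
    horizon: end of the observation period (assumed identical for everyone).
    Returns (durations, events), where events is 0 for censored rows and
    k in 1..K for the event type that occurred first.
    """
    latent_times = np.asarray(latent_times, dtype=float)
    first_time = latent_times.min(axis=1)
    first_cause = latent_times.argmin(axis=1) + 1
    observed = first_time <= horizon
    durations = np.where(observed, first_time, horizon)
    events = np.where(observed, first_cause, 0)
    return durations, events


def cumulative_incidence(durations, events, cause):
    """Nonparametric cumulative incidence of one cause under competing risks."""
    durations = np.asarray(durations, dtype=float)
    events = np.asarray(events)
    times = np.unique(durations[events != 0])  # distinct times with any event
    surv = 1.0  # probability of being event-free just before the current time
    cif = 0.0
    curve = []
    for t in times:
        at_risk = np.sum(durations >= t)
        d_cause = np.sum((durations == t) & (events == cause))
        d_any = np.sum((durations == t) & (events != 0))
        cif += surv * d_cause / at_risk
        surv *= 1.0 - d_any / at_risk
        curve.append(cif)
    return times, np.array(curve)


# Toy data: 4 individuals, 2 competing event types, observation ends at t = 8.
latent = [[2, 5], [4, 3], [10, 12], [6, 1]]
durations, events = censor_competing(latent, horizon=8)
times, cif_2 = cumulative_incidence(durations, events, cause=2)
```

In this toy example the third individual experiences no event before the horizon, so that row is censored at time 8 with event code 0. The other three rows record both when the first event happened and which type it was, which is the information a competing-risks model has to predict.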