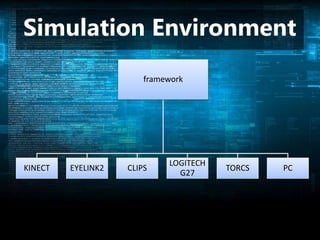

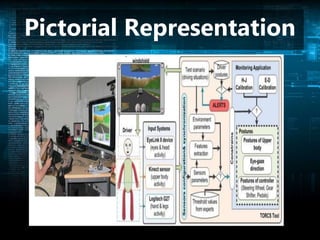

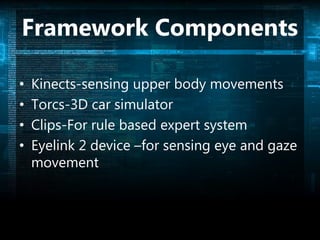

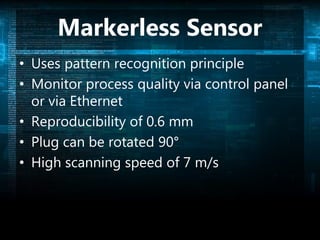

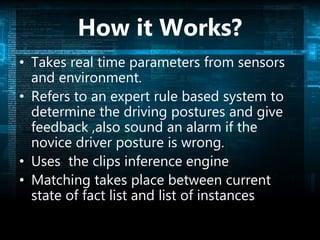

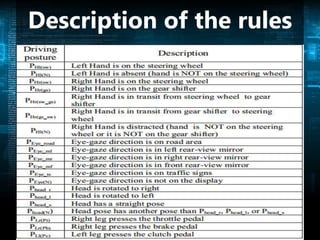

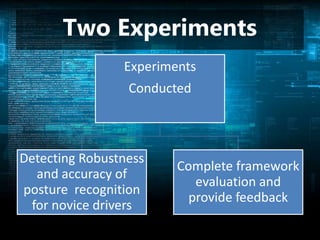

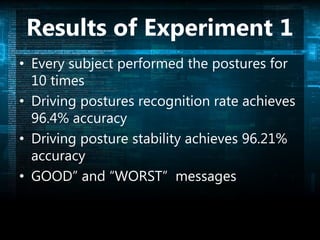

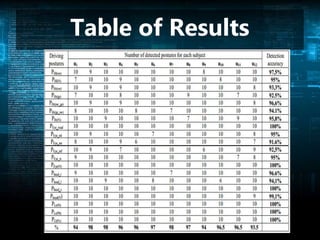

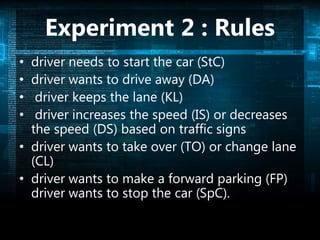

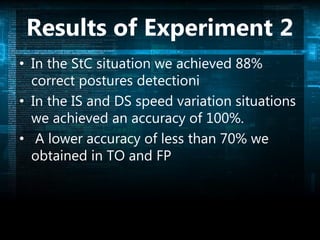

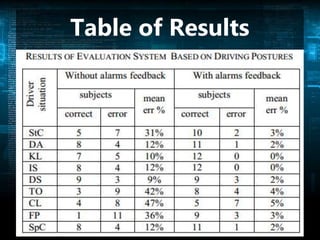

The document presents a car driver skills assessment system that uses posture recognition to help novice drivers, giving them real-time feedback through an alarm system. Markerless sensors measure the driver's posture without anything being attached to the body, so the learner's driving can be monitored for safety during practice. Experiments showed high accuracy in recognizing driving postures, which suggests the system is effective in indoor training environments.
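The loop described above (sense posture, recognize it, raise an alarm) can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual method. The markerless sensor is assumed to deliver 3D joint coordinates. The posture features are assumed to be two elbow angles and a torso-lean angle. Every name here is hypothetical: `TEMPLATES`, `UNSAFE`, `joint_angle`, `classify`, `alarm_frames`, and the posture labels. The template values and the `min_consecutive` debounce threshold are also invented. A simple nearest-centroid classifier stands in for whatever recognizer the original work uses.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]

# Hypothetical posture templates: mean feature vectors per posture label.
# Assumed features: (left elbow angle, right elbow angle, torso lean), degrees.
TEMPLATES: Dict[str, Vector] = {
    "both_hands_on_wheel": (110.0, 110.0, 5.0),
    "one_hand_off_wheel": (110.0, 170.0, 5.0),
    "leaning_forward": (90.0, 90.0, 30.0),
}

# Hypothetical set of postures that should trigger the alarm.
UNSAFE = {"one_hand_off_wheel", "leaning_forward"}


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at joint b formed by 3D points a-b-c (e.g. shoulder-elbow-wrist)."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.sqrt(sum(x * x for x in v1)) * math.sqrt(sum(y * y for y in v2))
    # Clamp to [-1, 1] to guard acos against floating-point overshoot.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def classify(features: Vector) -> str:
    """Nearest-centroid stand-in classifier: return the closest template label."""
    return min(TEMPLATES, key=lambda label: math.dist(features, TEMPLATES[label]))


def check_frame(features: Vector) -> Tuple[str, bool]:
    """Classify one frame and report whether its posture is considered unsafe."""
    label = classify(features)
    return label, label in UNSAFE


def alarm_frames(stream: List[Vector], min_consecutive: int = 3) -> List[int]:
    """Return frame indices where the alarm fires.

    The alarm fires once per run of unsafe frames, when the run reaches
    min_consecutive frames. This debounces single-frame misclassifications.
    """
    fired: List[int] = []
    run = 0
    for i, features in enumerate(stream):
        _, unsafe = check_frame(features)
        run = run + 1 if unsafe else 0
        if run == min_consecutive:
            fired.append(i)
    return fired


def accuracy(predicted: List[str], actual: List[str]) -> float:
    """Fraction of frames whose predicted posture label matches the ground truth."""
    correct = sum(p == t for p, t in zip(predicted, actual))
    return correct / len(actual)


if __name__ == "__main__":
    safe, unsafe = (110.0, 110.0, 5.0), (110.0, 170.0, 5.0)
    print(alarm_frames([safe, unsafe, unsafe, unsafe, unsafe, safe, unsafe]))
```

The debounce is a design choice. A single noisy sensor frame does not sound the alarm, at the cost of a short delay before a sustained unsafe posture is flagged. The `accuracy` helper shows one common way a posture-recognition accuracy rate can be computed: correct frames divided by total frames.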