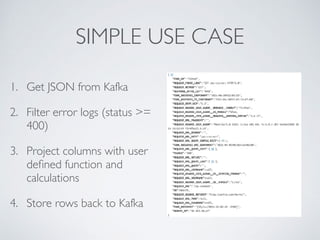

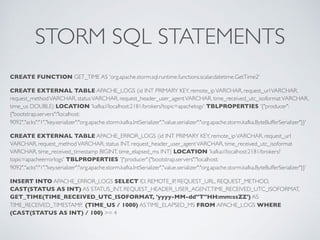

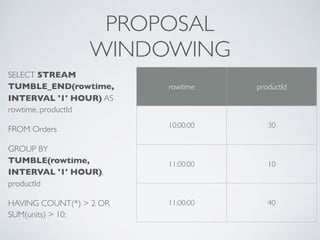

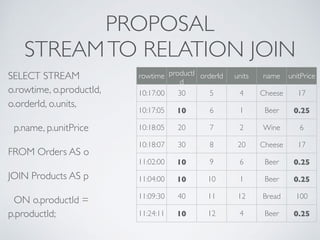

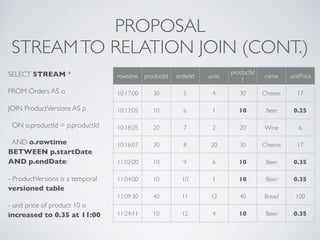

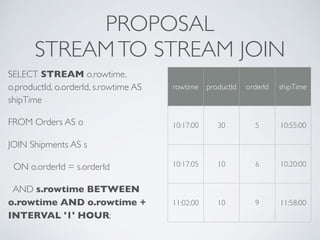

This document discusses streaming SQL and compares several streaming SQL implementations. It gives an overview of Apache Calcite's proposal for streaming SQL, which covers windowing, stream-to-relation joins, and stream-to-stream joins. It also shows example streaming SQL statements, both in Storm SQL and using the concepts proposed by Apache Calcite.
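To make windowing concrete, here is a minimal Python sketch of what a tumbling-window aggregation computes, roughly in the spirit of Calcite's `GROUP BY TUMBLE(rowtime, INTERVAL '1' HOUR), productId`. It is not Calcite's or Storm SQL's implementation. The `Order` record, its fields, and the `tumble_start` and `tumbling_count` helpers are hypothetical. The sketch also assumes that rows arrive in ascending `rowtime` order.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    # Hypothetical schema: event time in seconds and a product id.
    rowtime: int
    product_id: int


def tumble_start(rowtime: int, size: int) -> int:
    """Start of the fixed-size, non-overlapping window that contains rowtime."""
    return rowtime - rowtime % size


def tumbling_count(orders, size):
    """Yield (window_end, product_id, count) for each tumbling window.

    Assumes orders arrive sorted by rowtime. A window's rows are emitted
    only once a row from a later window shows that the window has closed.
    """
    current_window = None
    counts = defaultdict(int)
    for order in orders:
        window = tumble_start(order.rowtime, size)
        if current_window is not None and window != current_window:
            # The previous window is complete: emit its aggregates.
            for product_id, c in sorted(counts.items()):
                yield (current_window + size, product_id, c)
            counts.clear()
        current_window = window
        counts[order.product_id] += 1
    # The input is finite in this sketch, so flush the last open window.
    if current_window is not None:
        for product_id, c in sorted(counts.items()):
            yield (current_window + size, product_id, c)


orders = [Order(0, 10), Order(1200, 10), Order(3000, 20),
          Order(3700, 10), Order(7300, 20)]
for row in tumbling_count(orders, 3600):
    print(row)
```

With one-hour windows, the first three orders fall into the window [0, 3600). Their counts are emitted only when the order at 3700 arrives. This is why the sketch depends on an increasing time column: without that ordering guarantee, a streaming aggregate cannot tell when a window is finished.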