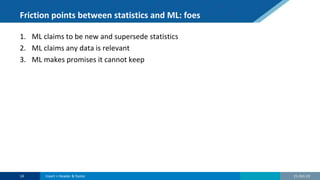

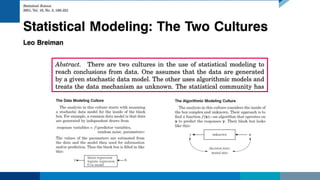

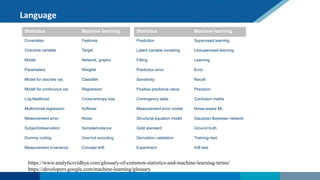

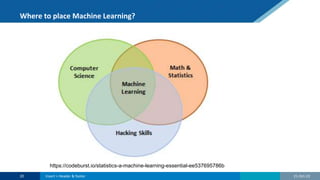

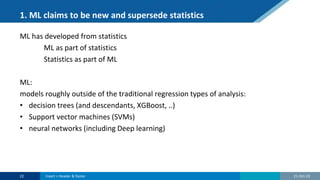

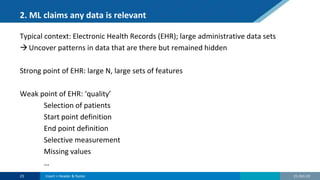

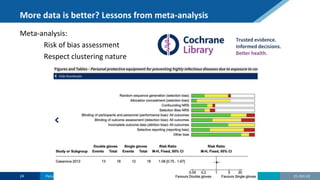

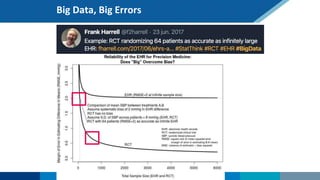

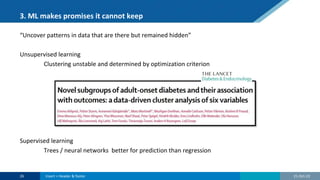

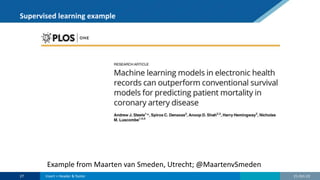

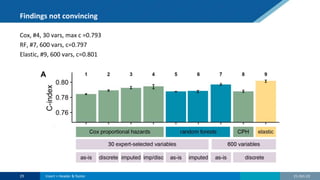

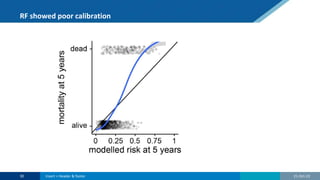

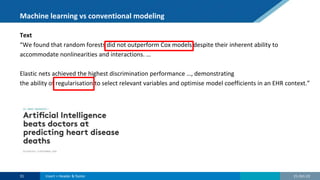

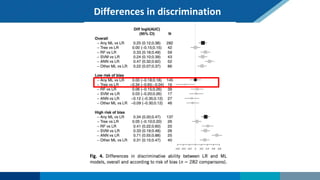

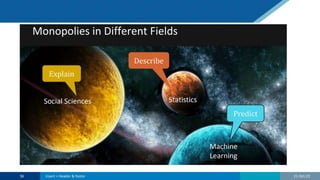

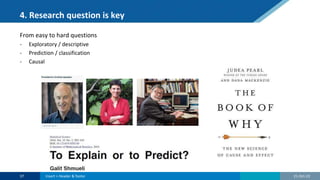

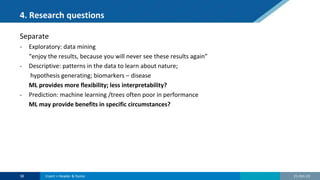

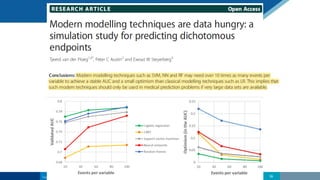

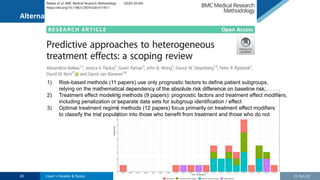

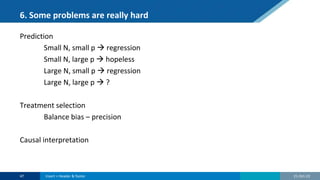

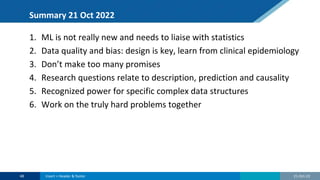

The document discusses the relationship between statistics and machine learning (ML) in medical research, highlighting both friction points and commonalities. It points out that although ML is often perceived as a new advancement, it derives fundamentally from statistical methods, and the document emphasizes the importance of data quality and research questions. It concludes by recommending collaboration between statistics and ML to tackle complex problems effectively.