This document contains slides from an introductory presentation on natural language processing (NLP). It covers several key topics in the history and foundations of NLP, including Markov's statistical analysis of literary text, finite state machines, formal languages and grammars, probabilistic NLP, and recent advances in neural network approaches. The slides also give an overview of the empirical and rationalist traditions in NLP and outline some of the main problems addressed in the field.

![2019/2/17 intro-to-nlp slides

http://127.0.0.1:8000/intro-to-nlp.slides.html?print-pdf#/ 5/89

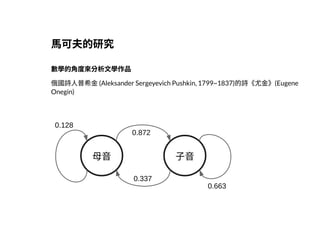

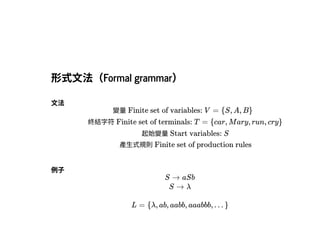

⾺可夫的研究⾺可夫的研究

P (⺟⾳ → ⺟⾳), P (⺟⾳ → ⼦⾳), P (⼦⾳ → ⺟⾳), P (⼦⾳ → ⼦⾳)

⺟⾳ ⼦⾳

M = [ ]

0.128

0.872

0.337

0.663

Ref.

,

⾺可夫⽣平簡介(1)(A Brief Introduction of Markov’s Life:

Part 1)(http://highscope.ch.ntu.edu.tw/wordpress/?p=51032)

⾺可夫⽣平簡介(2)(A Brief Introduction of Markov’s Life: Part

2)(http://highscope.ch.ntu.edu.tw/wordpress/?p=51034)](https://image.slidesharecdn.com/intro-to-nlpslides-190217061730/85/slide-5-320.jpg)
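A table like M can be estimated by counting consecutive vowel/consonant pairs and normalizing each row. The sketch below assumes the English vowels a, e, i, o, u (Markov's own counts were made on Russian text) and drops every non-letter before counting. The second function returns the long-run vowel share implied by a two-state chain, obtained by solving $\pi_V = \pi_V \, P(V \to V) + (1 - \pi_V) \, P(C \to V)$. The function names are illustrative only.

```python
from collections import Counter

# Assumption: English vowels; Markov's own counts were made on Russian text.
VOWELS = set("aeiou")


def letter_class(ch: str) -> str:
    """Map a letter to 'V' (vowel) or 'C' (consonant)."""
    return "V" if ch in VOWELS else "C"


def transition_matrix(text: str) -> dict[tuple[str, str], float]:
    """Estimate P(next class | current class) from consecutive letters.

    Spaces, digits and punctuation are dropped first, so transitions are
    counted on the bare letter sequence, across word boundaries.
    """
    classes = [letter_class(c) for c in text.lower() if c.isalpha()]
    pair_counts = Counter(zip(classes, classes[1:]))
    from_counts = Counter(classes[:-1])  # how often each class has a successor
    return {
        (a, b): pair_counts[(a, b)] / from_counts[a]
        for a in "VC"
        for b in "VC"
        if from_counts[a] > 0
    }


def stationary_vowel_share(p_vv: float, p_cv: float) -> float:
    """Long-run fraction of vowels for a two-state vowel/consonant chain.

    Solves pi_V = pi_V * p_vv + (1 - pi_V) * p_cv for pi_V.
    """
    return p_cv / (1.0 - p_vv + p_cv)


if __name__ == "__main__":
    print(transition_matrix("Markov counted vowels and consonants"))
    # With the slide's values P(V->V) = 0.128 and P(C->V) = 0.663:
    print(round(stationary_vowel_share(0.128, 0.663), 3))  # -> 0.432
```

Under this assumption, accented letters such as "é" are classed as consonants; a real analysis would fix the alphabet for the language being studied.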

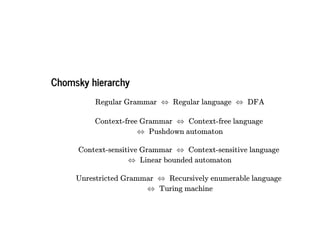

![2019/2/17 intro-to-nlp slides

http://127.0.0.1:8000/intro-to-nlp.slides.html?print-pdf#/ 17/89

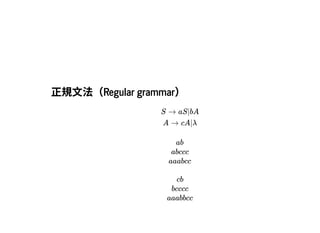

正規⽂法(Regular grammar)產⽣正規語⾔(Regular正規⽂法(Regular grammar)產⽣正規語⾔(Regular

language)language)

S → a|aB|λ

↓

L

正規表⽰法(Regular expression)正規表⽰法(Regular expression)

[A-Z]d{9}

"A123456789"

09d{8}

"0912345678"

d{4}-d{2}-d{2}

"1996-08-06"

.*@gmail.com

"test@gmail.com"](https://image.slidesharecdn.com/intro-to-nlpslides-190217061730/85/slide-17-320.jpg)

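Regular grammars and regular expressions describe the same class of languages, so the patterns above can be checked directly with Python's `re` module. In this minimal sketch, `re.fullmatch` is used so that the whole string, not just a prefix, must match, and the patterns are raw strings so that `\d` reaches the regex engine intact. The helper name `matches` is illustrative.

```python
import re


def matches(pattern: str, text: str) -> bool:
    """True if the whole of `text` matches `pattern` (not just a prefix)."""
    return re.fullmatch(pattern, text) is not None


# The table's patterns paired with their example strings.
EXAMPLES = [
    (r"[A-Z]\d{9}", "A123456789"),         # one capital letter, then 9 digits
    (r"09\d{8}", "0912345678"),            # "09", then 8 digits
    (r"\d{4}-\d{2}-\d{2}", "1996-08-06"),  # YYYY-MM-DD shape only
    (r".*@gmail\.com", "test@gmail.com"),  # escaped dot matches a literal "."
]

if __name__ == "__main__":
    for pattern, example in EXAMPLES:
        print(f"{pattern:<20} {example:<16} {matches(pattern, example)}")
    # An unescaped "." matches any character, so this looser pattern also
    # accepts a string that is not a Gmail address.
    print(matches(r".*@gmail.com", "test@gmailxcom"))   # True
    print(matches(r".*@gmail\.com", "test@gmailxcom"))  # False
```

The date pattern checks only the shape of the string: it also accepts "9999-99-99", so validating real dates needs a parser rather than a regular expression alone.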
![Slide 60: character embeddings](https://image.slidesharecdn.com/intro-to-nlpslides-190217061730/85/slide-60-320.jpg)

Character Embeddings

Character embeddings capture intra-word morphological and shape information, which can be useful for:

- part-of-speech (POS) tagging
- named-entity recognition (NER)

Santos and Guimaraes [31] applied character-level representations, together with word embeddings, to NER, achieving state-of-the-art results on Portuguese and Spanish corpora.

Advantage: they can represent out-of-vocabulary (OOV) words.
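To make the OOV advantage concrete, here is a toy character-level word encoder using one common design: each character gets a vector, a convolution filter slides over windows of characters, and max-pooling over positions yields a fixed-size word vector, which is then concatenated with a word-level vector. This is a sketch under stated assumptions, not the architecture or code of [31]: the dimensions, the random untrained weights, the string-seeded per-character vectors, and the names `CharCNNEncoder` and `represent` are all hypothetical.

```python
import random


class CharCNNEncoder:
    """Toy character-level word encoder: embed characters, convolve, max-pool.

    All weights are random and untrained; this only illustrates the data flow.
    """

    def __init__(self, char_dim: int = 4, window: int = 3, out_dim: int = 5, seed: int = 0):
        if window % 2 == 0:
            raise ValueError("window must be odd so padding is symmetric")
        self.char_dim, self.window, self.seed = char_dim, window, seed
        rng = random.Random(seed)
        # One convolution filter per output dimension, spanning `window` characters.
        self.filters = [
            [rng.uniform(-1, 1) for _ in range(window * char_dim)] for _ in range(out_dim)
        ]
        self.pad = [0.0] * char_dim

    def embed_char(self, ch: str) -> list[float]:
        # Deterministic vector per character (hypothetical stand-in for a learned
        # table). 'A' and 'a' differ, so capitalization (word shape) is visible.
        rng = random.Random(f"{self.seed}:{ch}")
        return [rng.uniform(-1, 1) for _ in range(self.char_dim)]

    def encode(self, word: str) -> list[float]:
        if not word:
            raise ValueError("cannot encode an empty word")
        chars = [self.embed_char(c) for c in word]
        half = self.window // 2
        padded = [self.pad] * half + chars + [self.pad] * half
        pooled = [float("-inf")] * len(self.filters)
        for start in range(len(chars)):
            # Flatten the window of character vectors into one input vector.
            window_vec = [x for vec in padded[start:start + self.window] for x in vec]
            for j, filt in enumerate(self.filters):
                score = sum(w * x for w, x in zip(filt, window_vec))
                pooled[j] = max(pooled[j], score)  # max-pool over positions
        return pooled


def represent(word: str, word_vectors: dict, encoder: CharCNNEncoder, word_dim: int = 3) -> list[float]:
    """Concatenate a word-level vector (zeros if OOV) with the character-level one."""
    word_vec = word_vectors.get(word.lower(), [0.0] * word_dim)
    return word_vec + encoder.encode(word)


if __name__ == "__main__":
    enc = CharCNNEncoder()
    vocab = {"lisboa": [0.1, 0.2, 0.3]}  # hypothetical word-embedding table
    print(represent("Lisboa", vocab, enc))     # known word: both parts informative
    print(represent("Guimaraes", vocab, enc))  # OOV: word part is zeros, char part is not
```

Because any word can be spelled out in characters, `represent` still returns a non-trivial vector for a word missing from the word-embedding table; only its word-level part falls back to zeros. In a trained model both the character vectors and the filters would be learned jointly with the tagger.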

![Slide 74: RNNs for word-level and sentence-level classification](https://image.slidesharecdn.com/intro-to-nlpslides-190217061730/85/slide-74-320.jpg)

RNN for word-level classification

Bidirectional LSTM for NER: for the input "This is a book", each token $t$ is represented by $[h^b_t; h^f_t]$, the concatenation of its backward and forward hidden states, for $t = 1, \dots, 4$.

RNN for sentence-level classification

LSTM for sentiment classification
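Both uses of recurrent networks on this slide can be sketched in plain Python: a bidirectional LSTM produces one $[h^b_t; h^f_t]$ vector per token for word-level tagging, while a single LSTM's last hidden state feeds a sentence-level score. This is a sketch under loud assumptions: the weights are random and untrained, biases start at zero, the tag set and word embeddings are hypothetical, and the names `LSTMCell`, `bilstm`, `tag_tokens` and `sentence_score` are illustrative rather than any library's API. A real system would use a deep-learning framework and train these weights.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal LSTM cell with random, untrained weights (illustration only)."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: random.Random):
        self.hidden_dim = hidden_dim
        cols = input_dim + hidden_dim
        # One weight matrix and bias vector per gate:
        # i = input, f = forget, o = output, g = candidate cell value.
        self.W = {
            name: [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(hidden_dim)]
            for name in "ifog"
        }
        self.b = {name: [0.0] * hidden_dim for name in "ifog"}

    def _gate(self, name, z, act):
        return [act(sum(w * v for w, v in zip(row, z)) + b)
                for row, b in zip(self.W[name], self.b[name])]

    def step(self, x, h, c):
        z = x + h  # list concatenation: [x_t; h_{t-1}]
        i = self._gate("i", z, sigmoid)
        f = self._gate("f", z, sigmoid)
        o = self._gate("o", z, sigmoid)
        g = self._gate("g", z, math.tanh)
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs):
        """Run over a sequence from a zero state; return every hidden state."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        states = []
        for x in xs:
            h, c = self.step(x, h, c)
            states.append(h)
        return states


def bilstm(xs, fwd: LSTMCell, bwd: LSTMCell):
    """Return [h_b_t; h_f_t] for every position t, as in the slide's notation."""
    hf = fwd.run(xs)
    # Run the backward cell on the reversed sequence, then re-align to positions.
    hb = list(reversed(bwd.run(list(reversed(xs)))))
    return [b + f for b, f in zip(hb, hf)]


def tag_tokens(states, w_tag, tags):
    """Word-level: pick the highest-scoring tag for each [h_b; h_f] state."""
    out = []
    for s in states:
        scores = [sum(w * v for w, v in zip(row, s)) for row in w_tag]
        out.append(tags[scores.index(max(scores))])
    return out


def sentence_score(xs, cell: LSTMCell, w_out):
    """Sentence-level: squash the last hidden state into a positive-class score."""
    h_last = cell.run(xs)[-1]
    return sigmoid(sum(w * h for w, h in zip(w_out, h_last)))


if __name__ == "__main__":
    rng = random.Random(0)
    emb_dim, hid = 4, 3
    sentence = ["This", "is", "a", "book"]
    # Hypothetical random word embeddings standing in for trained ones.
    emb = {w: [rng.uniform(-1, 1) for _ in range(emb_dim)] for w in sentence}
    xs = [emb[w] for w in sentence]
    states = bilstm(xs, LSTMCell(emb_dim, hid, rng), LSTMCell(emb_dim, hid, rng))
    tags = ["O", "B-PER", "B-LOC"]  # hypothetical NER tag set
    w_tag = [[rng.uniform(-1, 1) for _ in range(2 * hid)] for _ in tags]
    print(list(zip(sentence, tag_tokens(states, w_tag, tags))))
    w_out = [rng.uniform(-1, 1) for _ in range(hid)]
    print(sentence_score(xs, LSTMCell(emb_dim, hid, rng), w_out))
```

Using the last hidden state is only one way to summarize a sentence; mean- or max-pooling over all hidden states is a common alternative. With untrained weights the printed tags and score carry no meaning; they only show the shapes flowing through each model.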