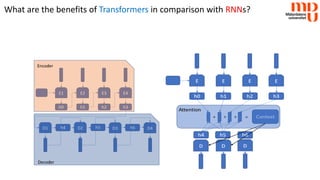

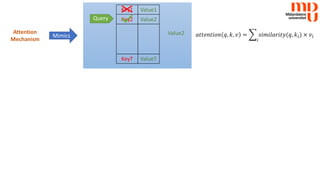

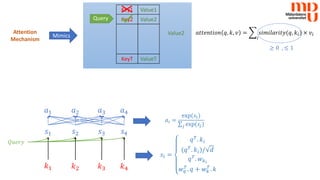

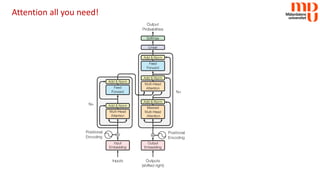

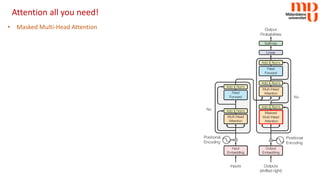

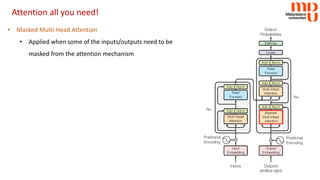

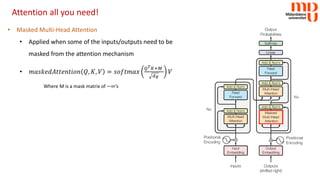

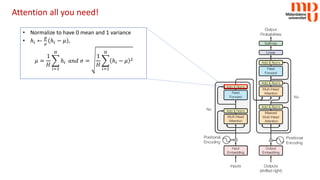

The document explains the transformer, a transduction model that is built on attention mechanisms and was originally designed for natural language processing tasks. It highlights the advantages of transformers over RNNs, including better handling of long-range dependencies and fewer training steps, and notes their applications in computer vision. It also discusses key components such as the attention mechanism, multi-head attention, and normalization techniques.
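
As a rough illustration of the attention mechanism mentioned above, the following is a minimal sketch of scaled dot-product attention in plain Python. It is not taken from the slides: the function names `softmax` and `scaled_dot_product_attention` are hypothetical, the inputs are assumed to be small lists of equal-length vectors, and masking, batching, and learned projections are left out.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors.

    queries: list of vectors of length d_k
    keys:    list of vectors of length d_k (one per value)
    values:  list of vectors of length d_v
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors using the attention weights.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)]
        )
    return outputs


if __name__ == "__main__":
    queries = [[1.0, 0.0]]
    keys = [[1.0, 0.0], [0.0, 1.0]]
    values = [[1.0, 2.0], [3.0, 4.0]]
    print(scaled_dot_product_attention(queries, keys, values))
```

Because every query can attend to every key directly, the distance between two positions in the sequence does not affect how easily they interact, which is one way to read the long-range dependency advantage over RNNs described above. Multi-head attention would, under the same assumptions, run several such computations on differently projected inputs and concatenate the results.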