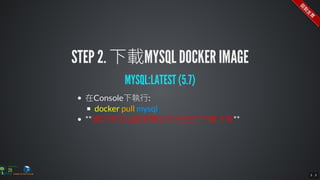

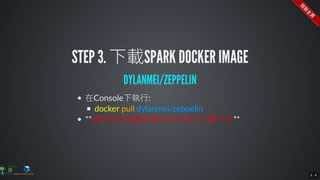

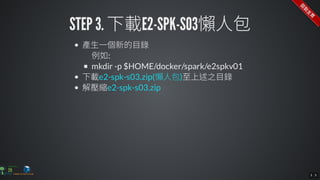

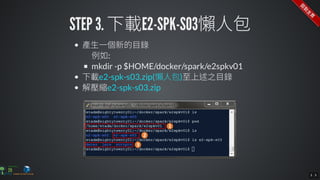

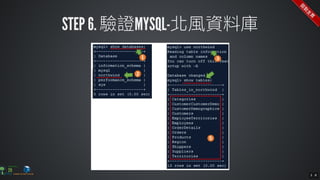

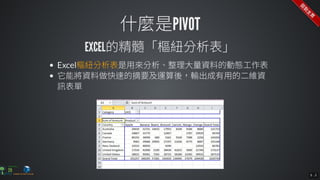

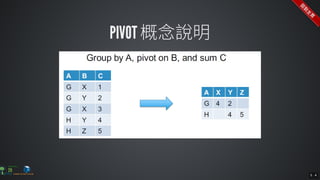

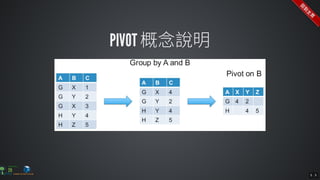

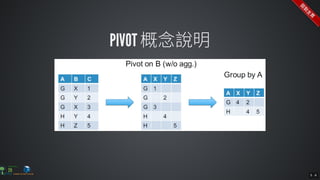

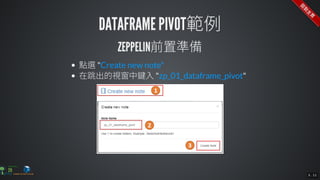

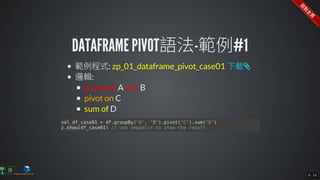

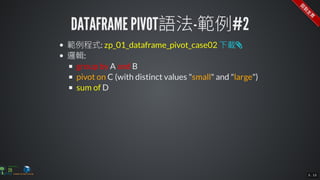

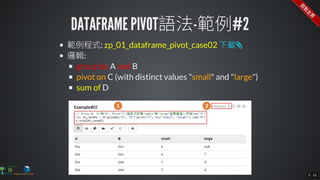

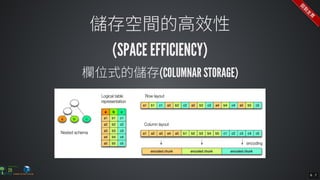

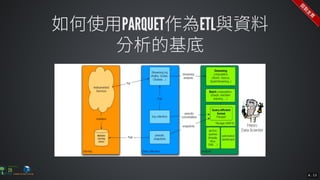

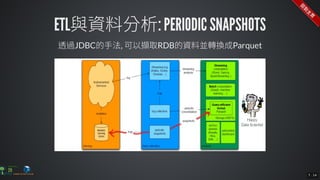

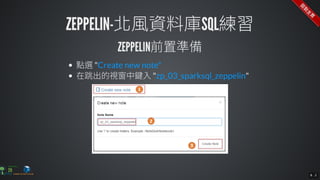

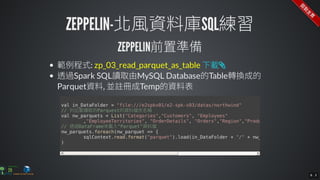

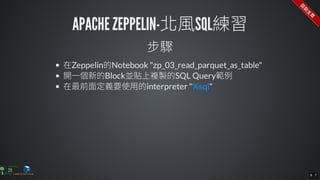

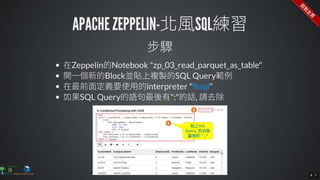

The document contains commands for setting up a Docker environment with MySQL and Zeppelin services. These include starting the MySQL container with the required environment variables and executing SQL scripts against it. It also shows how to reshape Spark DataFrames with `.groupBy()` and `.pivot()`, how to read data over JDBC and from Parquet files, and how to inspect the results with `.printSchema()` and `.show()`.
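The `.groupBy()` / `.pivot()` step is the least obvious of these operations. The sketch below models, in plain Python and without Spark, what a call such as `df.groupBy("region").pivot("quarter").sum("amount")` produces. Each distinct group key becomes one row, and each distinct pivot value becomes one column. The helper name `group_by_pivot_sum` and the `sales` data are hypothetical and chosen only for illustration. This is a model of the semantics, not Spark's implementation. Output rows are sorted here only to make the sketch deterministic, because Spark does not guarantee row order.

```python
from collections import defaultdict
from typing import Any, Dict, Hashable, Iterable, List, Optional, Sequence

Row = Dict[str, Any]


def group_by_pivot_sum(
    rows: Iterable[Row],
    group_col: str,
    pivot_col: str,
    value_col: str,
    pivot_values: Optional[Sequence[Hashable]] = None,
) -> List[Row]:
    """Hypothetical model of df.groupBy(group_col).pivot(pivot_col, pivot_values).sum(value_col)."""
    # group key -> {pivot key -> running sum}
    cells: Dict[Hashable, Dict[Hashable, Any]] = defaultdict(dict)
    seen_pivots = set()
    allowed = set(pivot_values) if pivot_values is not None else None

    for row in rows:
        group_cells = cells[row[group_col]]  # every group gets an output row
        p = row[pivot_col]
        if allowed is not None and p not in allowed:
            continue  # explicit pivot values restrict which columns are produced
        seen_pivots.add(p)
        group_cells[p] = group_cells.get(p, 0) + row[value_col]

    # Without explicit values, the sorted distinct pivot values become the columns.
    columns = list(pivot_values) if pivot_values is not None else sorted(seen_pivots)

    result: List[Row] = []
    for g in sorted(cells):  # sorted only so this sketch is deterministic
        out: Row = {group_col: g}
        for p in columns:
            out[p] = cells[g].get(p)  # missing combination -> None (null in Spark)
        result.append(out)
    return result


# Hypothetical sample data
sales = [
    {"region": "east", "quarter": "Q1", "amount": 100},
    {"region": "east", "quarter": "Q2", "amount": 150},
    {"region": "west", "quarter": "Q1", "amount": 80},
    {"region": "east", "quarter": "Q1", "amount": 20},
]

for r in group_by_pivot_sum(sales, "region", "quarter", "amount"):
    print(r)
# {'region': 'east', 'Q1': 120, 'Q2': 150}
# {'region': 'west', 'Q1': 80, 'Q2': None}
```

In PySpark, the pivot column's values can also be passed explicitly, as in `.pivot("quarter", ["Q1", "Q2"])`. The Spark documentation notes that this is more efficient because Spark otherwise has to compute the distinct values first. The optional `pivot_values` argument above models that variant.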