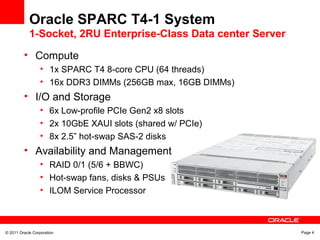

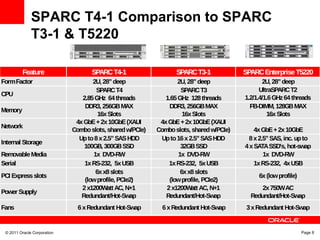

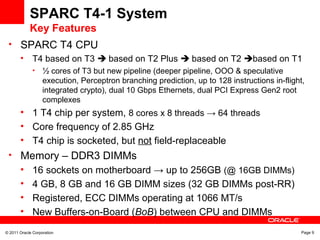

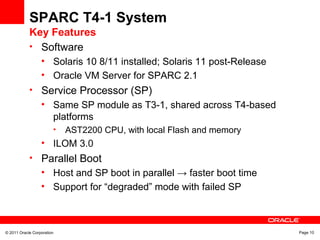

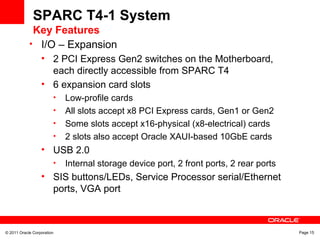

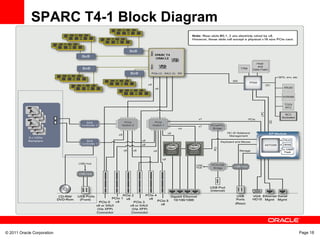

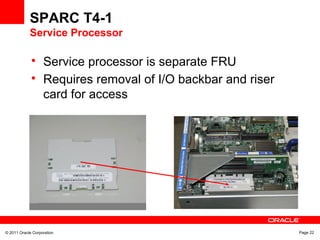

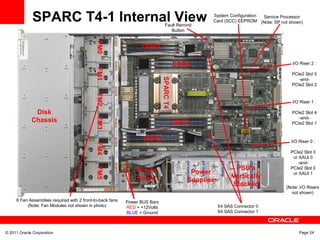

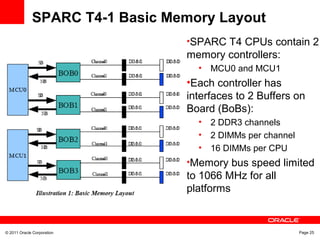

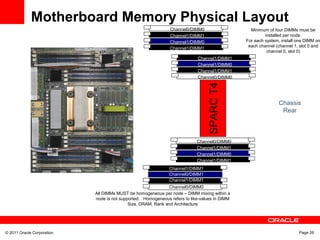

The Oracle SPARC T4-1 system is a 1-socket, 2RU enterprise server featuring Oracle's SPARC T4 processor with 8 cores and 64 threads. It has up to 256GB of DDR3 RAM, 6 PCIe slots, SAS storage, and dual 10GbE ports. The T4-1 is the successor to the SPARC T3-1 and uses the same chassis and service processor, targeting database, middleware, virtualization, and security workloads.

![© 2011 Oracle Corporation Page 40: Perform Live Domain Migration](https://image.slidesharecdn.com/sparct4-1systemtechnicaloverview-151113095147-lva1-app6891/85/Sparc-t4-1-system-technical-overview-40-320.jpg)

Perform Live Domain Migration

- Uses the same CLI and XML interfaces as in prior releases.
- Also available from Oracle Enterprise Manager Ops Center.
- CLI example:
  - `ldm migrate [-f] [-n] [-p <password_file>] <source-ldom> [<user>@]<target-host>[:<target-ldom>]`
  - `-n`: dry-run option
  - `-f`: force
  - `-p`: specify a password file for non-interactive migration
- Cancel an ongoing migration:
  - `ldm cancel-operation migration <ldom>`
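The slide lists the `ldm migrate` flags but does not show how they combine in practice. Below is a minimal Python sketch, not part of Oracle's tooling. It assembles the argument vector from the syntax above and runs a dry run (`-n`) before the real migration. It assumes `ldm` is on the control domain's `PATH` and that a password file already exists. The function names and the injectable `runner` parameter are hypothetical and are introduced only for illustration.

```python
import subprocess
from typing import Callable, List, Optional


def build_migrate_cmd(
    source_ldom: str,
    target_host: str,
    user: Optional[str] = None,
    target_ldom: Optional[str] = None,
    dry_run: bool = False,
    force: bool = False,
    password_file: Optional[str] = None,
) -> List[str]:
    """Hypothetical helper: assemble an argv following the slide's syntax
    ldm migrate [-f] [-n] [-p <password_file>] <source-ldom> [<user>@]<target-host>[:<target-ldom>]
    """
    cmd = ["ldm", "migrate"]
    if force:
        cmd.append("-f")  # -f: force the migration
    if dry_run:
        cmd.append("-n")  # -n: dry run, checks the migration without performing it
    if password_file:
        cmd += ["-p", password_file]  # -p: read the password from a file (non-interactive)
    target = target_host
    if user:
        target = f"{user}@{target}"
    if target_ldom:
        target = f"{target}:{target_ldom}"
    cmd += [source_ldom, target]
    return cmd


def migrate_after_dry_run(
    source_ldom: str,
    target_host: str,
    password_file: str,
    user: Optional[str] = None,
    target_ldom: Optional[str] = None,
    runner: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> None:
    """Hypothetical helper: run the dry run first and migrate only if it succeeds.
    `runner` is injectable so the sequence can be exercised without ldm installed."""
    common = dict(user=user, target_ldom=target_ldom, password_file=password_file)
    # check=True makes subprocess.run raise CalledProcessError on a non-zero exit,
    # so a failed dry run stops here and the real migration is never attempted.
    runner(build_migrate_cmd(source_ldom, target_host, dry_run=True, **common), check=True)
    runner(build_migrate_cmd(source_ldom, target_host, **common), check=True)


def cancel_migration_cmd(ldom: str) -> List[str]:
    """Hypothetical helper: argv for cancelling an ongoing migration."""
    return ["ldm", "cancel-operation", "migration", ldom]
```

Here is an example call, using made-up domain, host, and file names: `build_migrate_cmd("ldg1", "host2", user="root", password_file="/var/tmp/pw")` yields `["ldm", "migrate", "-p", "/var/tmp/pw", "ldg1", "root@host2"]`. Passing a list to `subprocess.run` instead of a shell string avoids quoting problems with the `user@host:ldom` target.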

![Inter-vnet LDC Channels Explained](https://image.slidesharecdn.com/sparct4-1systemtechnicaloverview-151113095147-lva1-app6891/85/Sparc-t4-1-system-technical-overview-44-320.jpg)

Inter-vnet LDC Channels Explained

- LDoms CLI modification:
  - `ldm add-vsw [default-vlan-id=<vid>] [pvid=<pvid>] [vid=<vid1,vid2,...>] [mac-addr=<num>] [net-dev=<device>] [linkprop=phys-state] [mode=<mode>] [mtu=<mtu>] [id=<switchid>] [inter-vnet-link=<on|off>] <vswitch_name> <ldom>`
- The default setting is on.
- This option is a virtual-switch-wide setting: enabling or disabling it affects all vnets on the given virtual switch.
- It can be enabled or disabled dynamically without stopping the guest domains.
  - The guest domains handle this change dynamically.
  - `ldm set-vsw [pvid=[<pvid>]] [vid=[<vid1,vid2,...>]] [mac-addr=<num>] [net-dev=[<device>]] [mode=[<mode>]] [mtu=[<mtu>]] [linkprop=[phys-state]] [inter-vnet-link=<on|off>] <vswitch_name>`
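The slide also gives the `ldm add-vsw` and `ldm set-vsw` syntax. Below is a comparable hypothetical Python sketch that builds those argument vectors. For brevity it covers only the `net-dev` and `inter-vnet-link` options. The function names and the device name in the example are assumptions. The commands are only assembled, not executed; in practice they would be run on the control domain, for example with `subprocess.run(cmd, check=True)`.

```python
from typing import List, Optional


def _on_off(flag: bool) -> str:
    """Map a boolean to the on|off value the ldm option expects."""
    return "on" if flag else "off"


def add_vsw_cmd(
    vswitch_name: str,
    ldom: str,
    net_dev: Optional[str] = None,
    inter_vnet_link: Optional[bool] = None,
) -> List[str]:
    """Hypothetical helper for a subset of:
    ldm add-vsw ... [net-dev=<device>] ... [inter-vnet-link=<on|off>] <vswitch_name> <ldom>
    Leaving inter_vnet_link as None omits the option, so the default (on) applies."""
    cmd = ["ldm", "add-vsw"]
    if net_dev is not None:
        cmd.append(f"net-dev={net_dev}")
    if inter_vnet_link is not None:
        cmd.append(f"inter-vnet-link={_on_off(inter_vnet_link)}")
    cmd += [vswitch_name, ldom]
    return cmd


def set_inter_vnet_link_cmd(vswitch_name: str, enabled: bool) -> List[str]:
    """Hypothetical helper for: ldm set-vsw inter-vnet-link=<on|off> <vswitch_name>
    The setting is switch-wide: it affects every vnet on this virtual switch."""
    return ["ldm", "set-vsw", f"inter-vnet-link={_on_off(enabled)}", vswitch_name]
```

For instance, `set_inter_vnet_link_cmd("primary-vsw0", False)` produces `["ldm", "set-vsw", "inter-vnet-link=off", "primary-vsw0"]`. According to the slide, this change takes effect for every vnet on that switch without stopping the guest domains.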