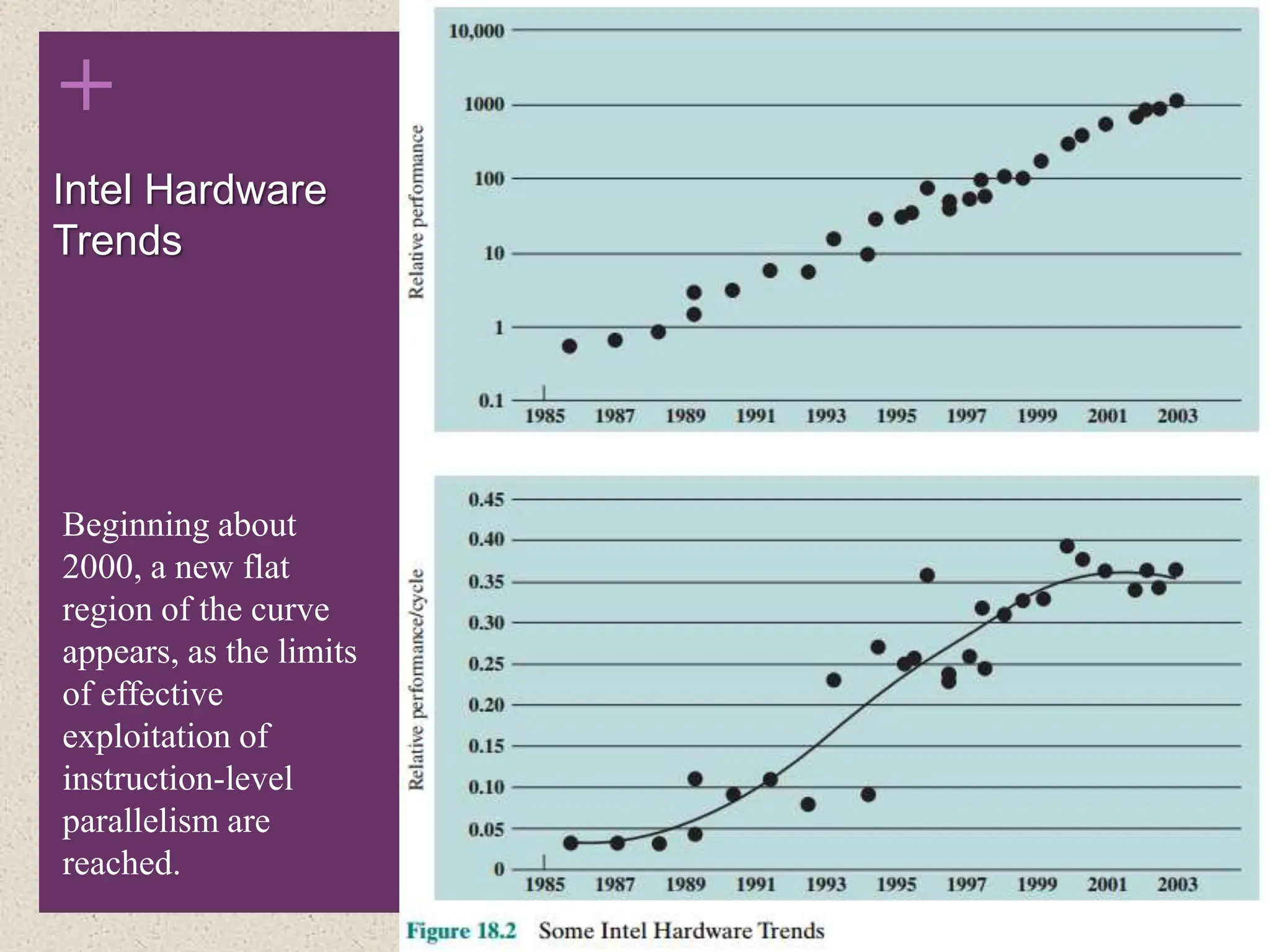

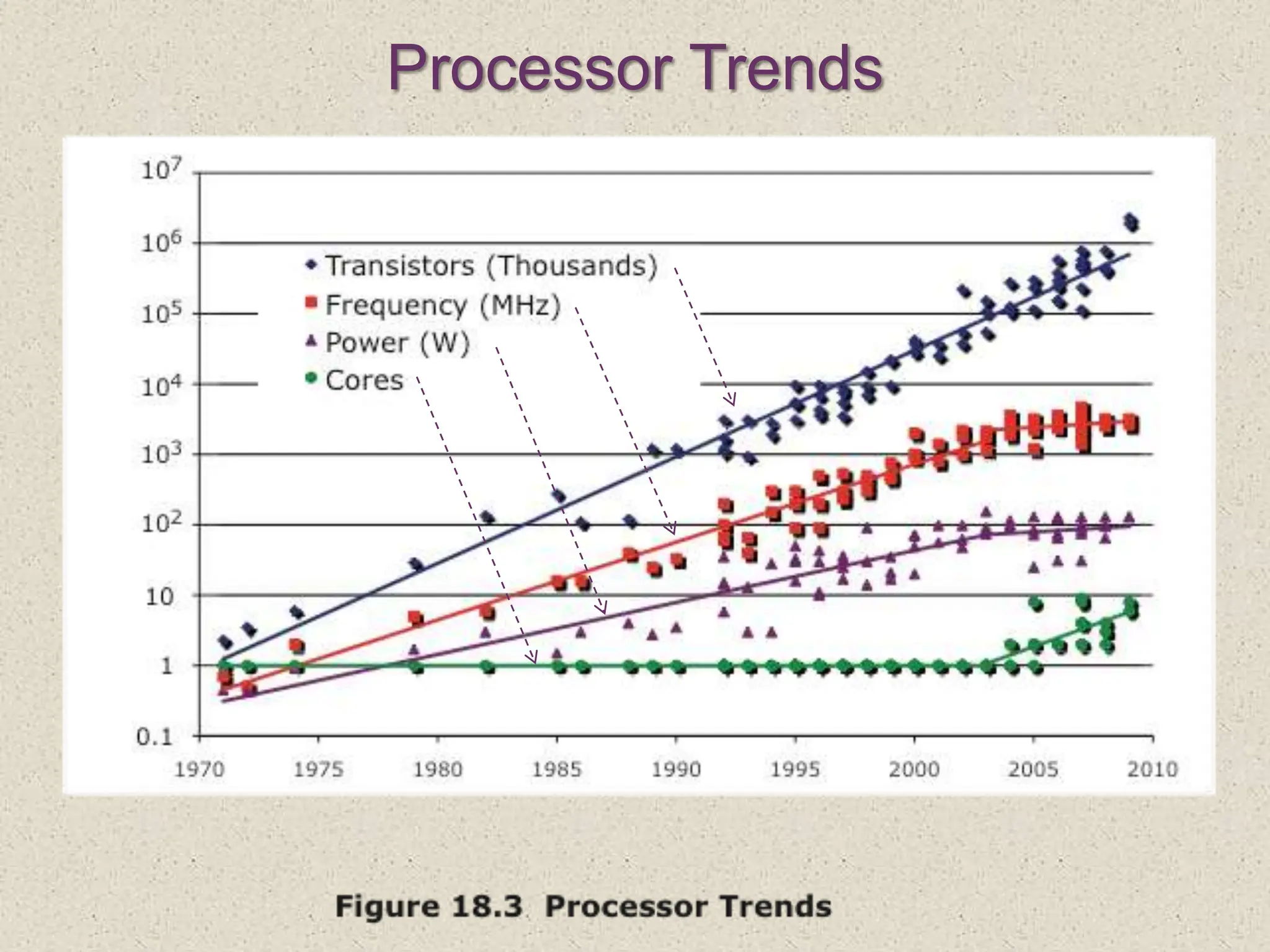

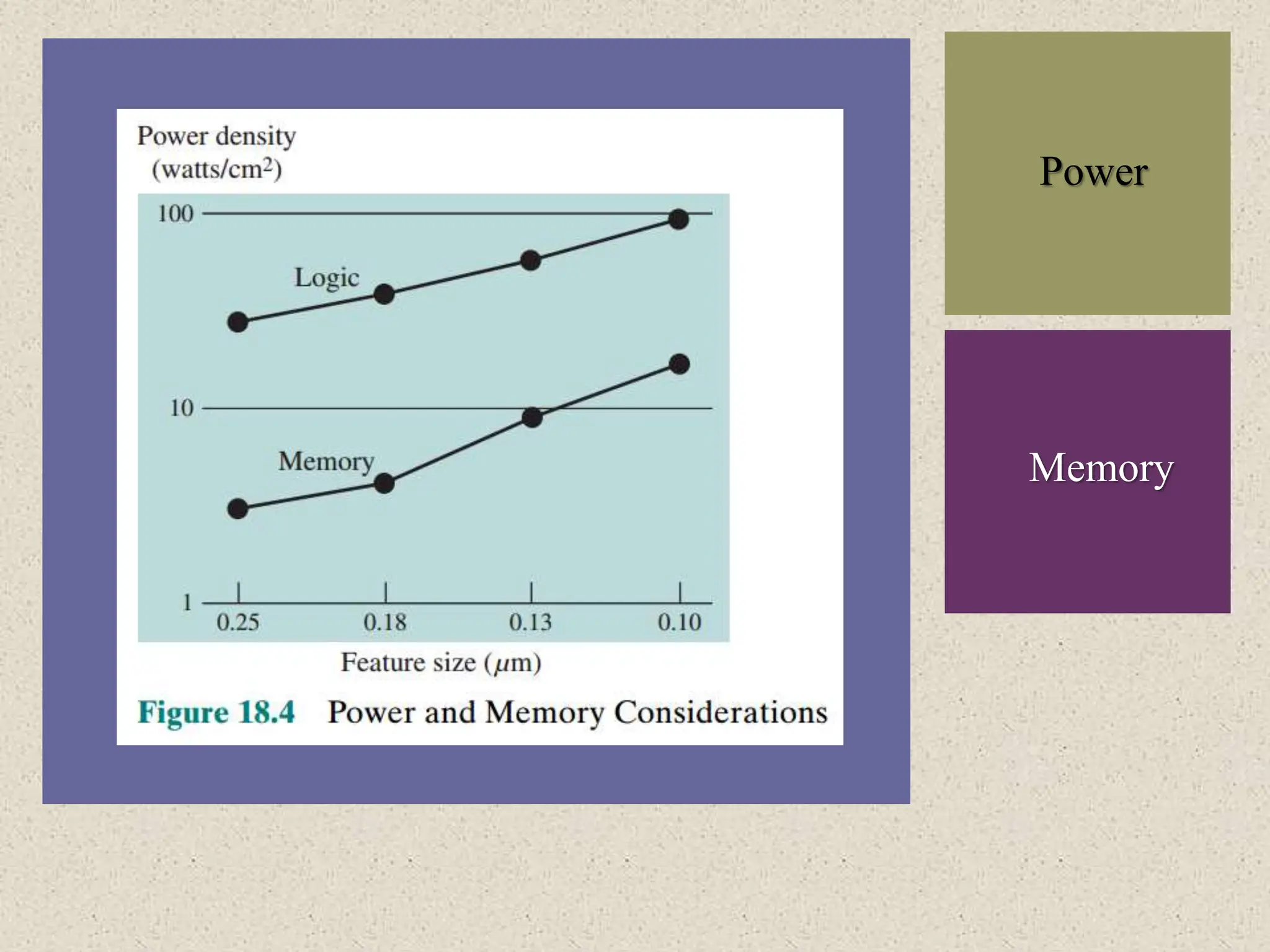

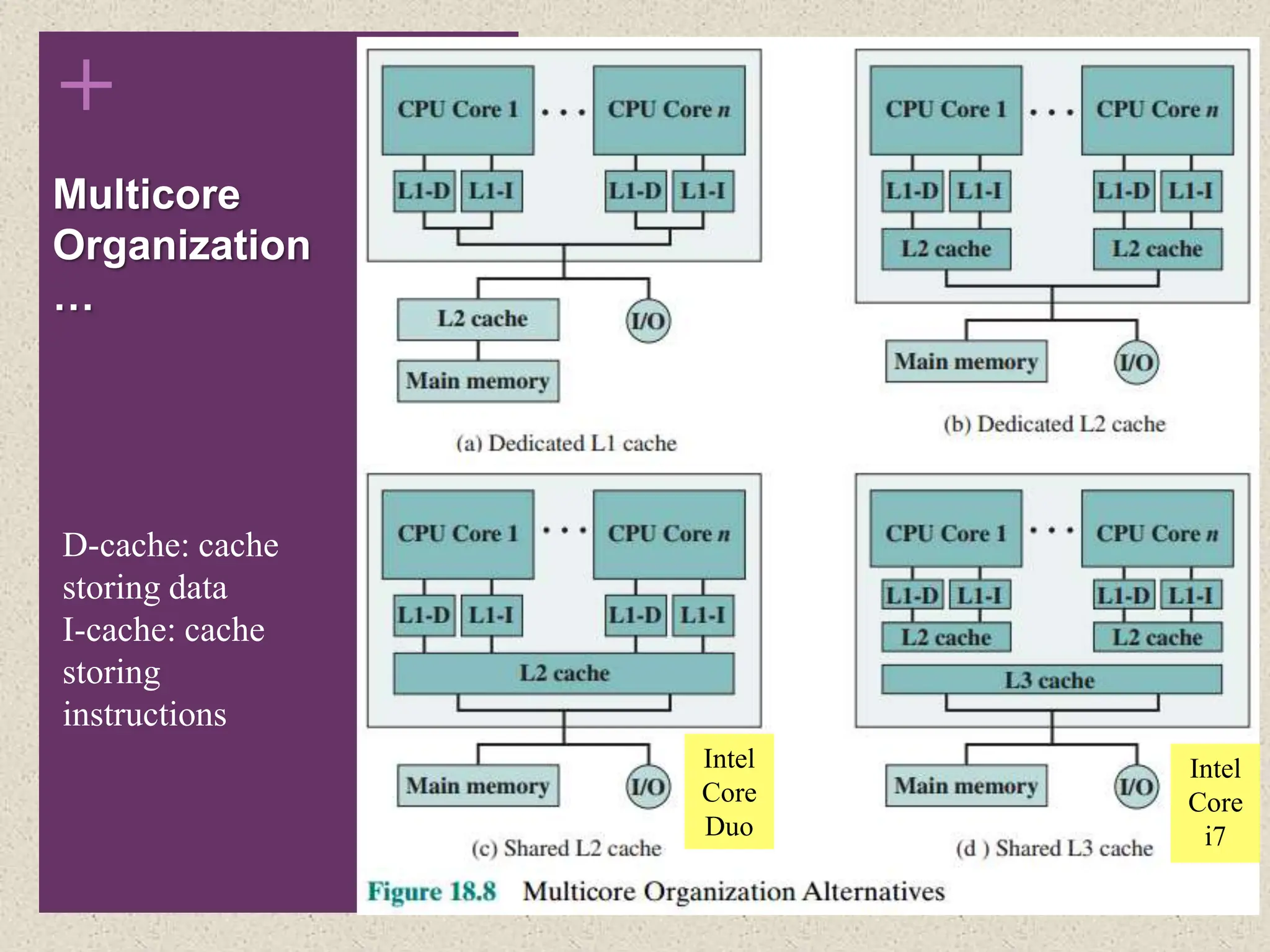

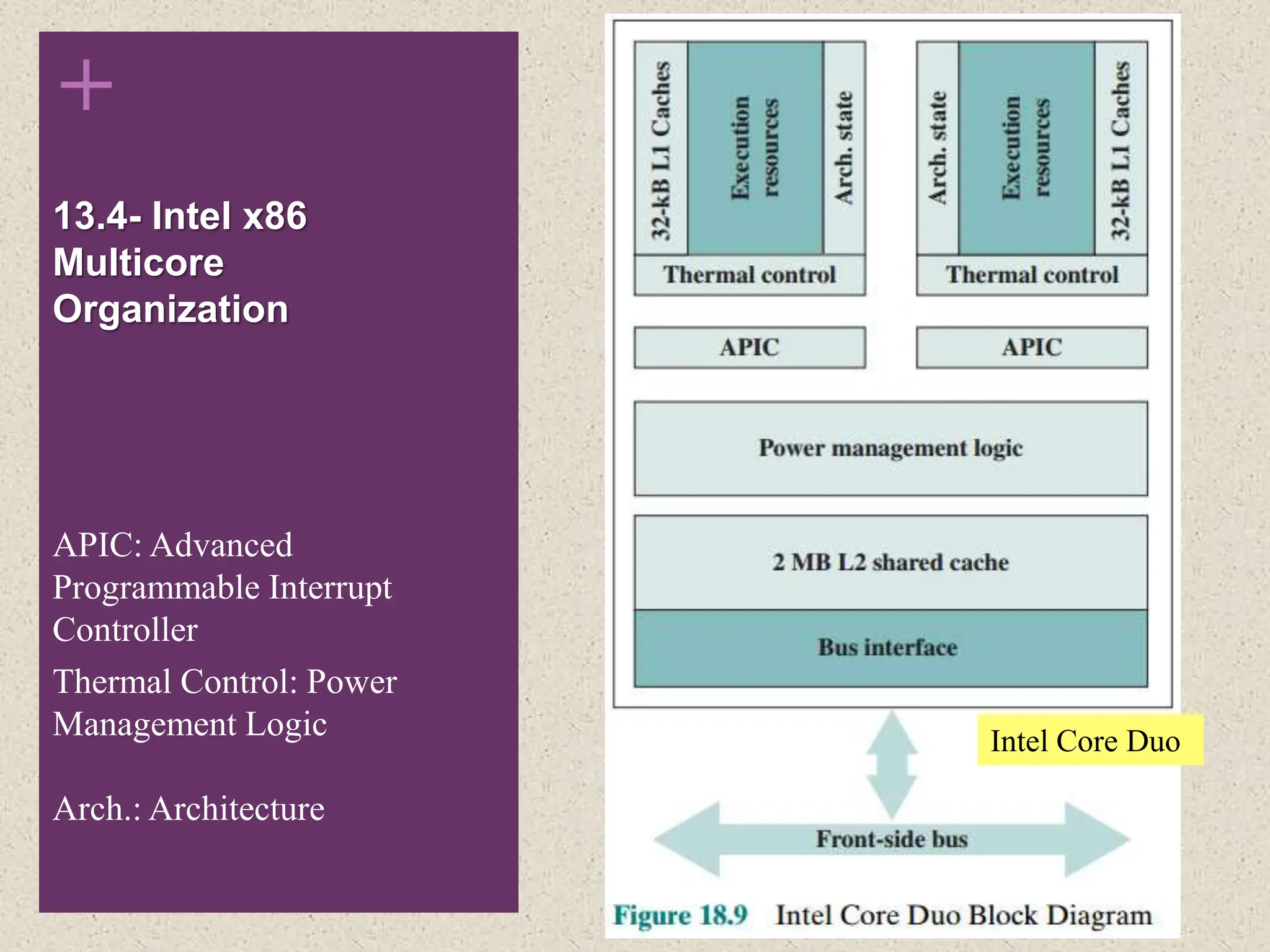

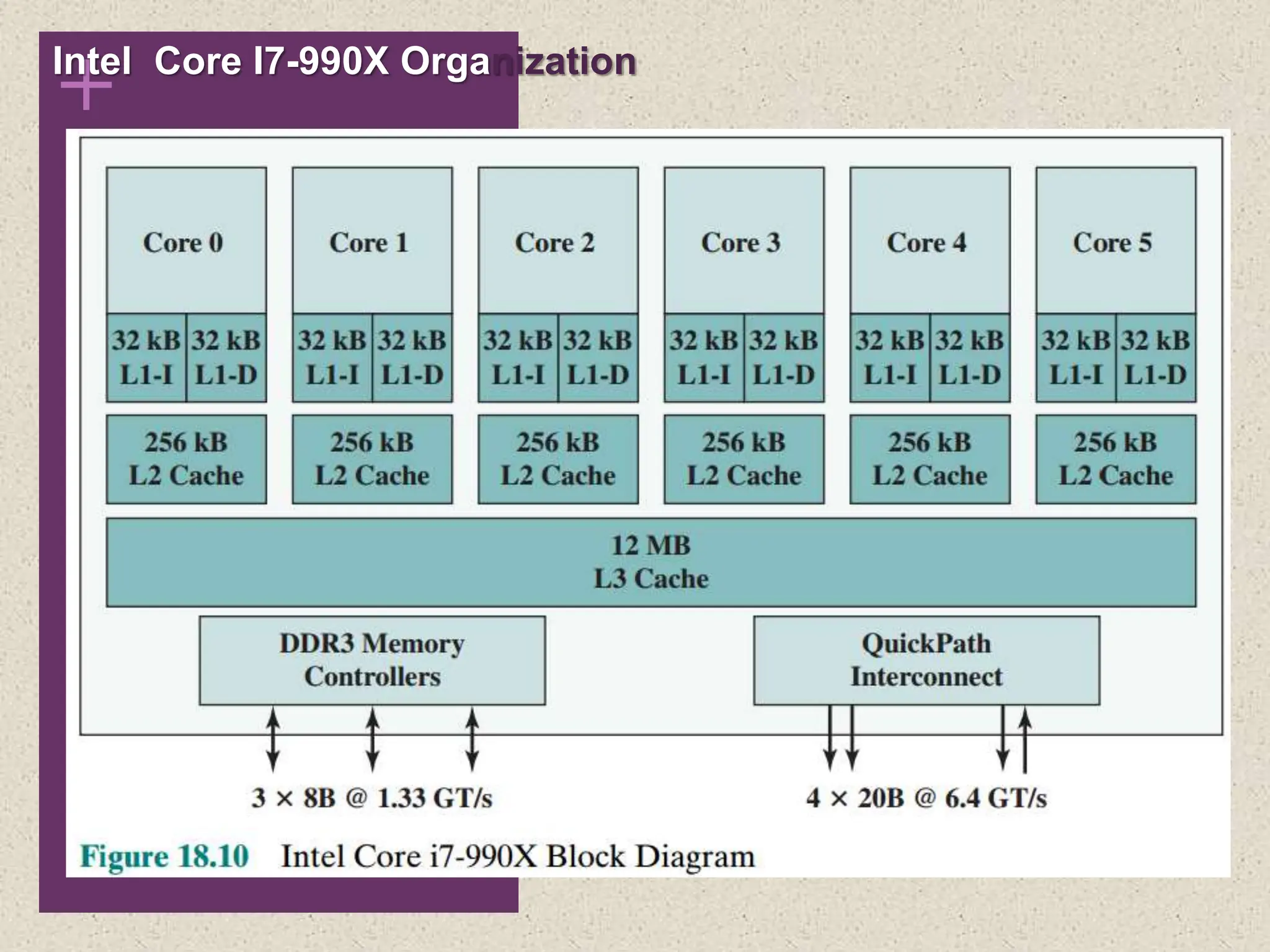

This chapter discusses multicore computers. It covers the hardware performance issues that drove the shift to multicore designs, including the limits on extracting further parallelism from a single processor. It also covers software performance issues on multicore systems, including Amdahl's Law. The chapter describes the main variables in multicore organization, such as the number of cores, the number of cache levels, and how those caches are shared, and it presents Intel's Core Duo and Core i7-990X as example designs.
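
As a rough illustration of the Amdahl's Law point, the sketch below computes the ideal speedup for a program in which a fraction `parallel_fraction` of the work can be spread evenly across `cores` cores. It uses the standard form of the law, speedup = 1 / ((1 - f) + f / N). The function name and parameters are hypothetical, chosen for this example, and the model assumes no scheduling, communication, or cache-coherence overhead.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's Law.

    parallel_fraction: share of execution time that can run in parallel (0..1).
    cores: number of cores the parallel part is divided across (>= 1).
    Assumes zero overhead for scheduling, communication, and coherence.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part is unchanged; the parallel part shrinks by a factor of `cores`.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Show how speedup flattens out as cores are added to 90%-parallel code.
    for n in (1, 2, 4, 8, 16, 64):
        print(f"{n:>3} cores: speedup {amdahl_speedup(0.9, n):.2f}")
```

Even with 90% of the work parallelizable, the speedup in this model can never exceed 10, because the remaining 10% of serial work sets a floor on run time regardless of core count.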