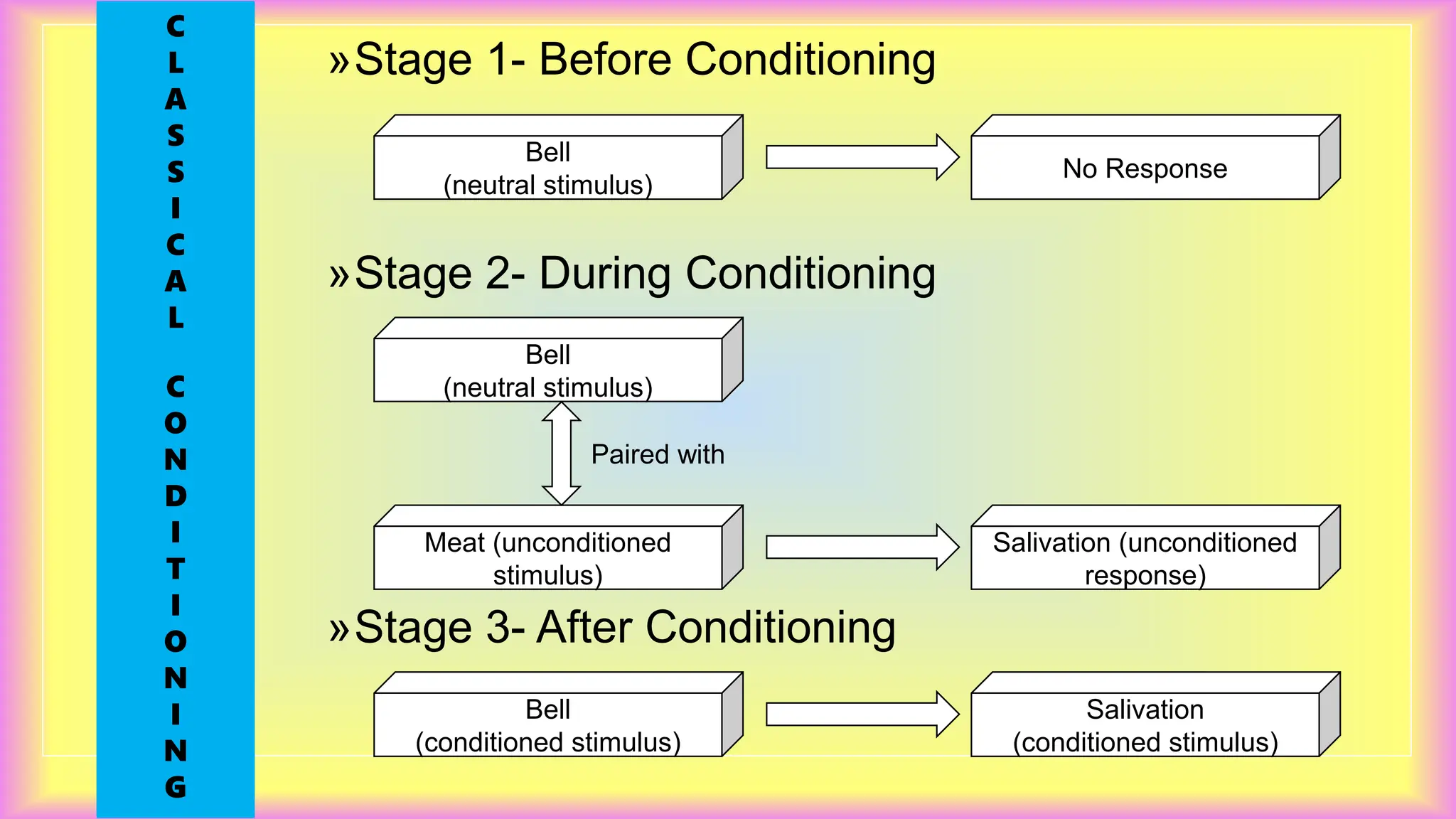

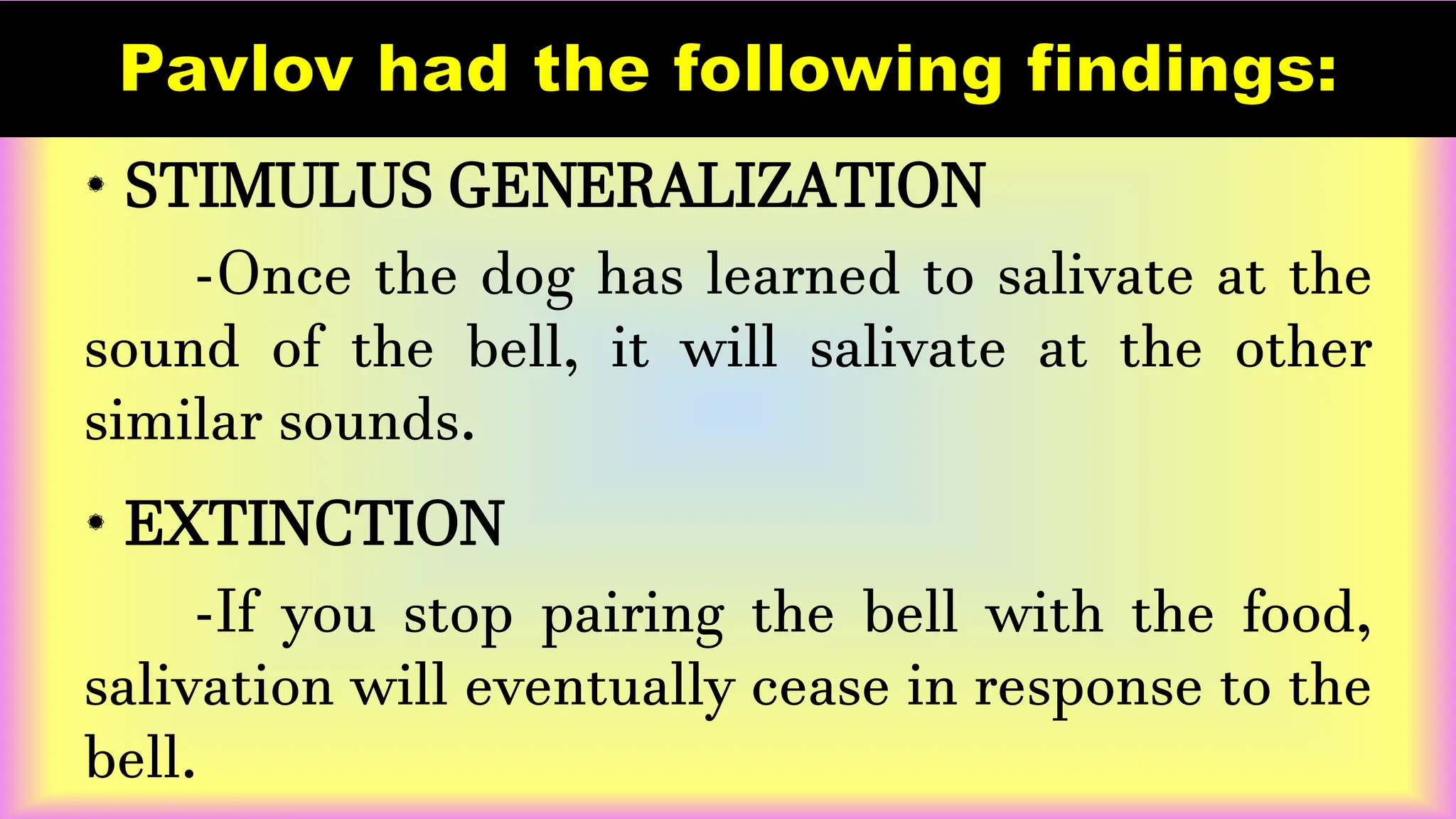

The document outlines the principles of behaviorism, focusing on the theories of Pavlov, Watson, Thorndike, and Skinner, who emphasized observable behavior and learning through conditioning. It covers classical and operant conditioning, reinforcement strategies, and laws of learning, including the law of effect and the law of exercise. It also discusses how these theories apply in educational settings, stressing the role of rewards and feedback in the learning process.