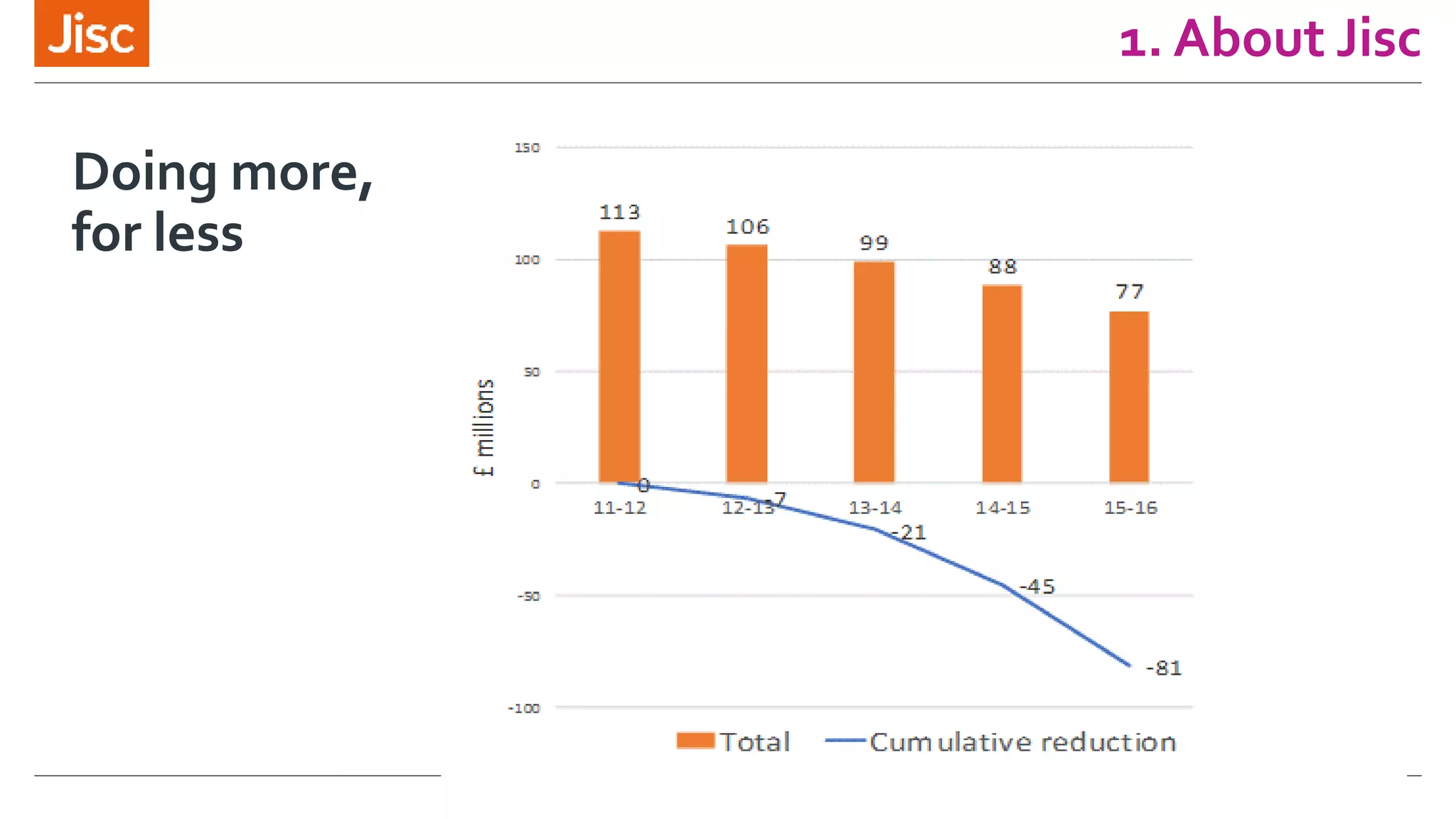

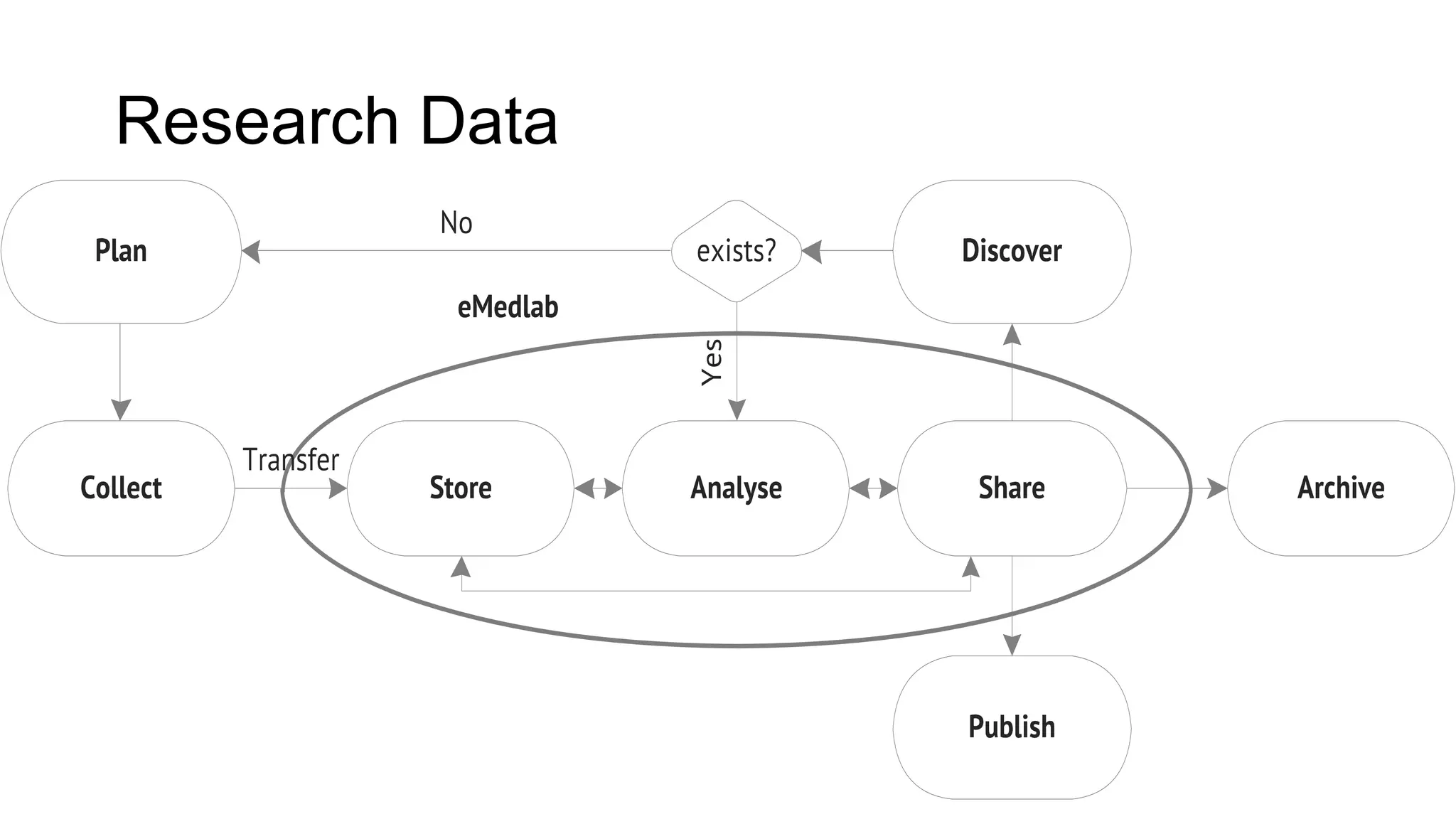

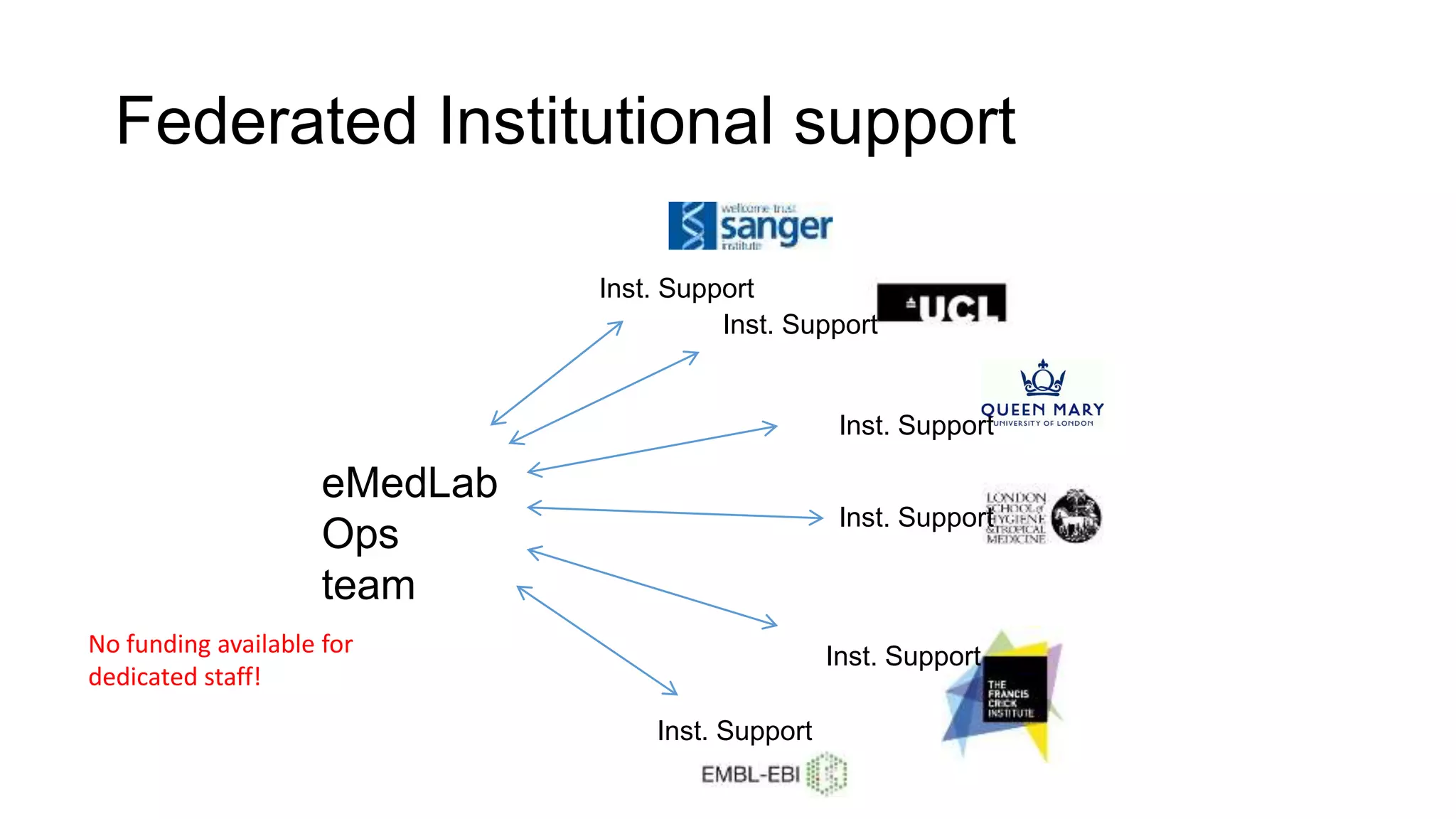

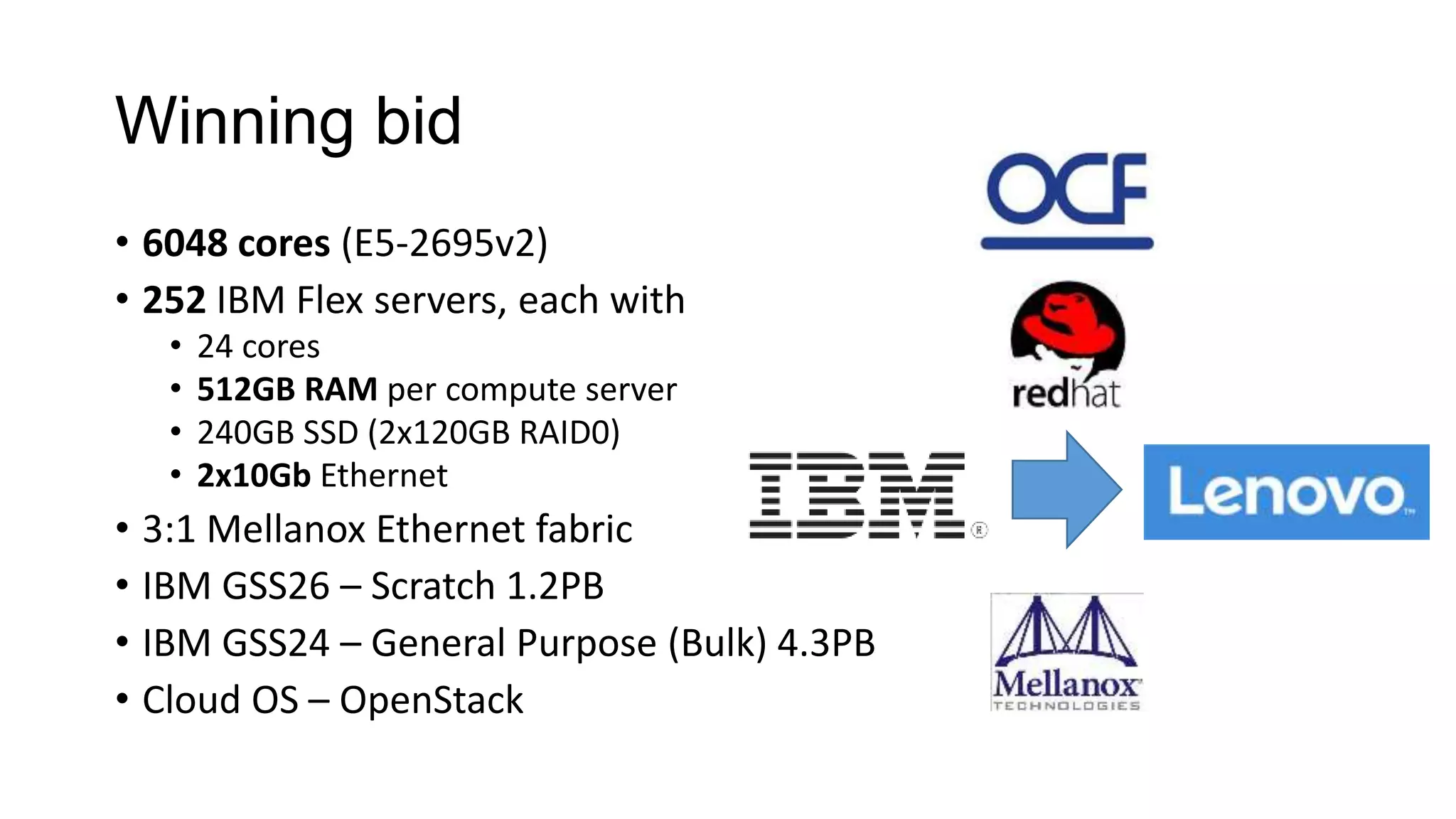

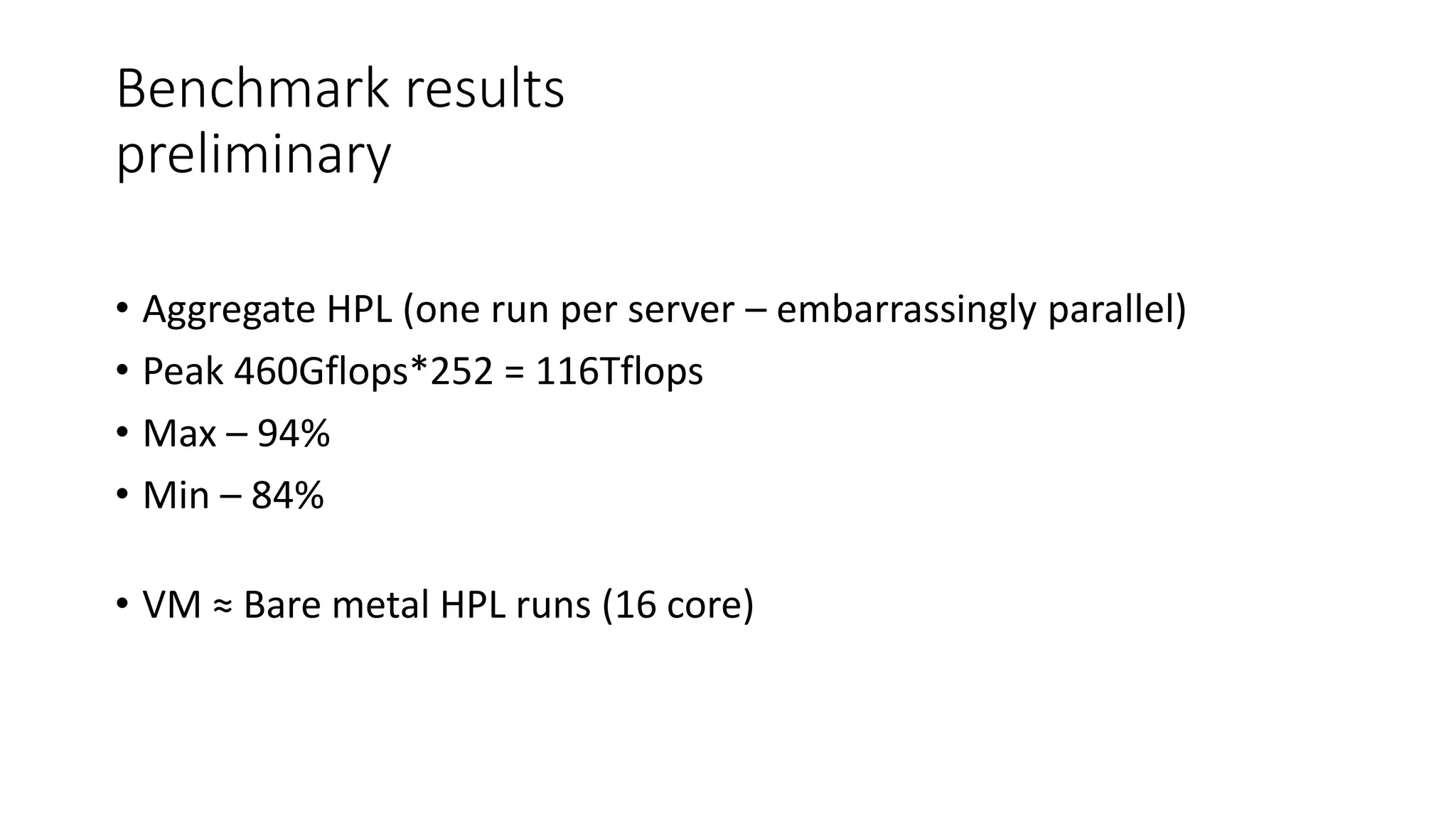

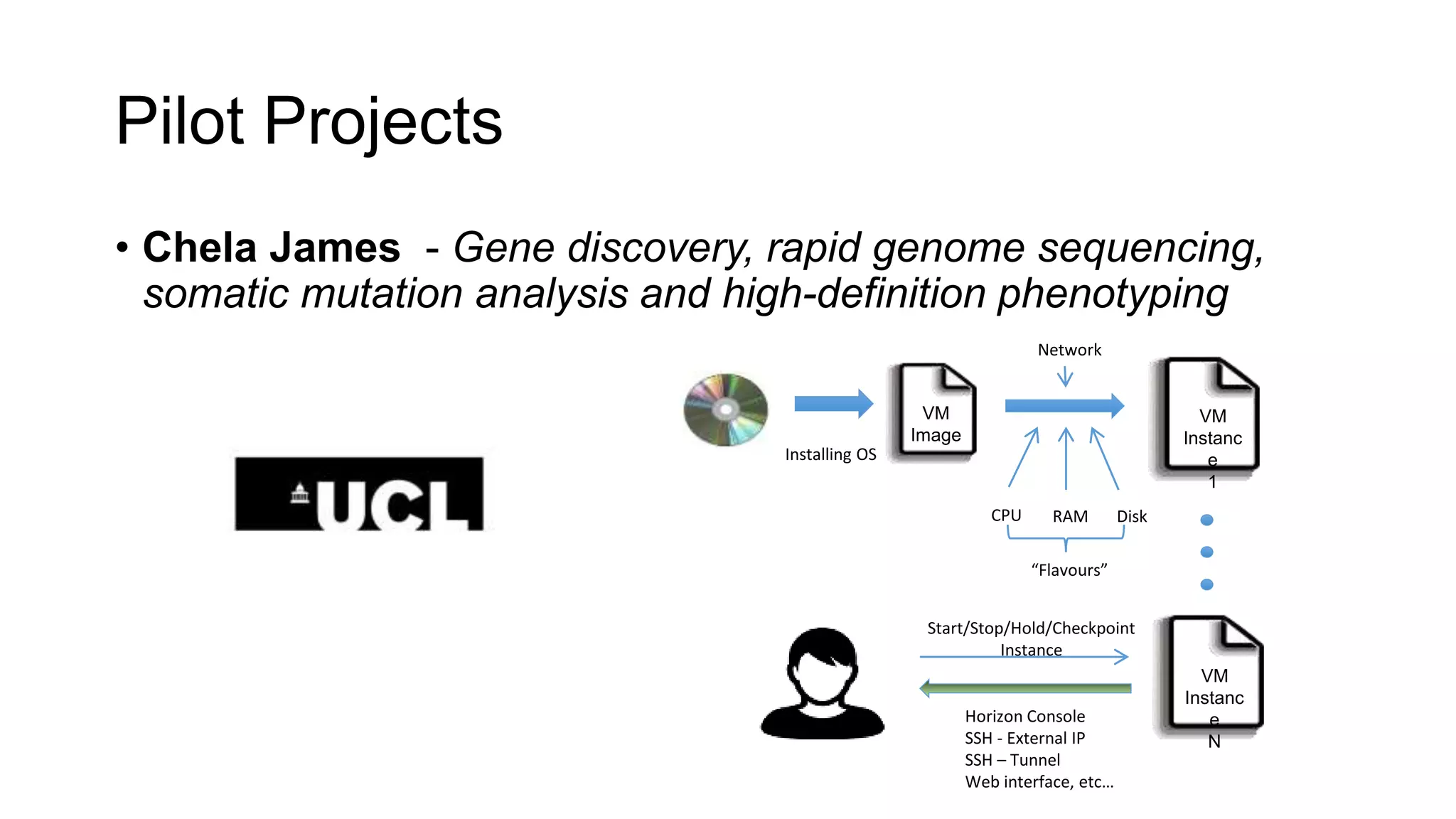

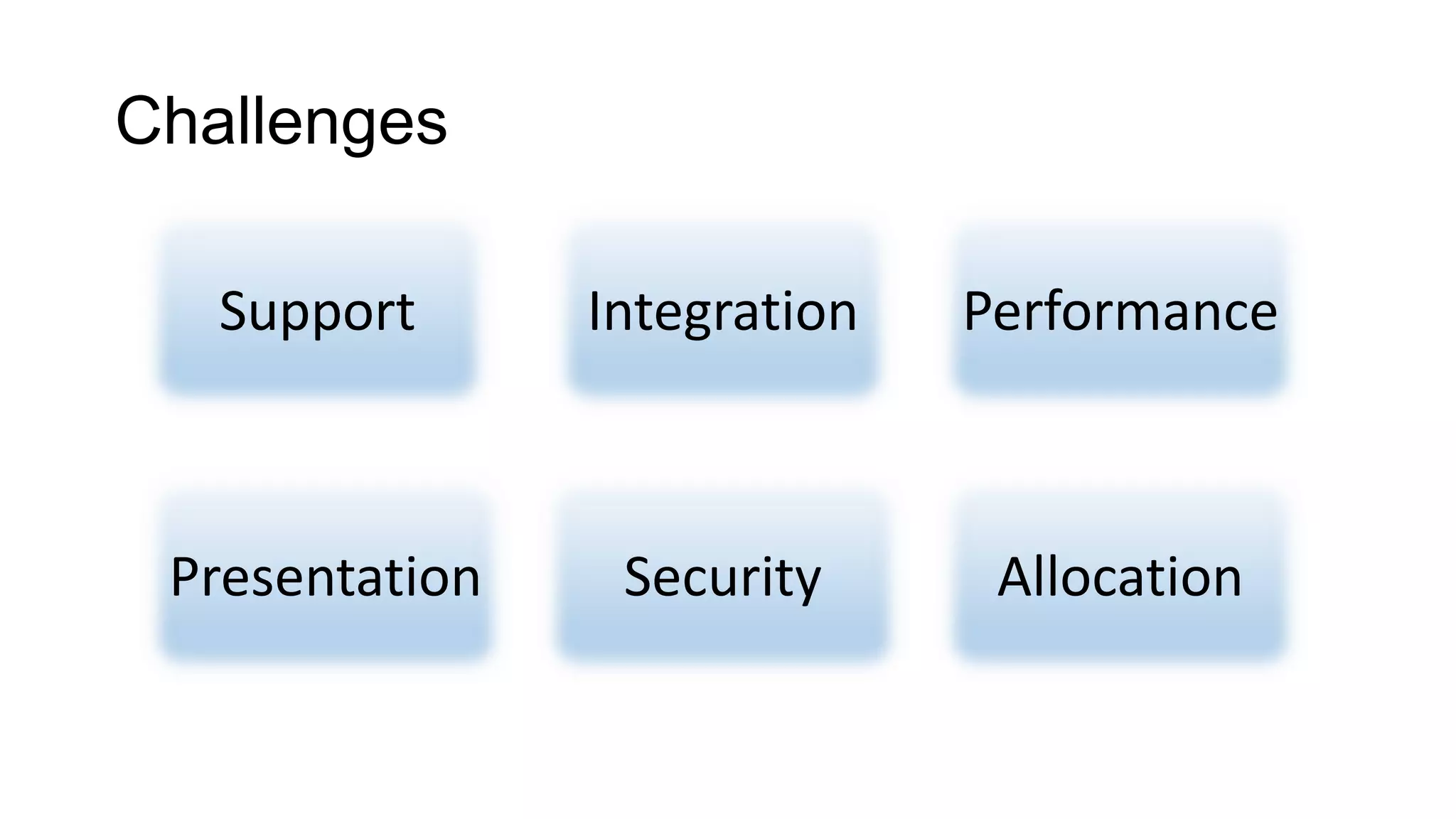

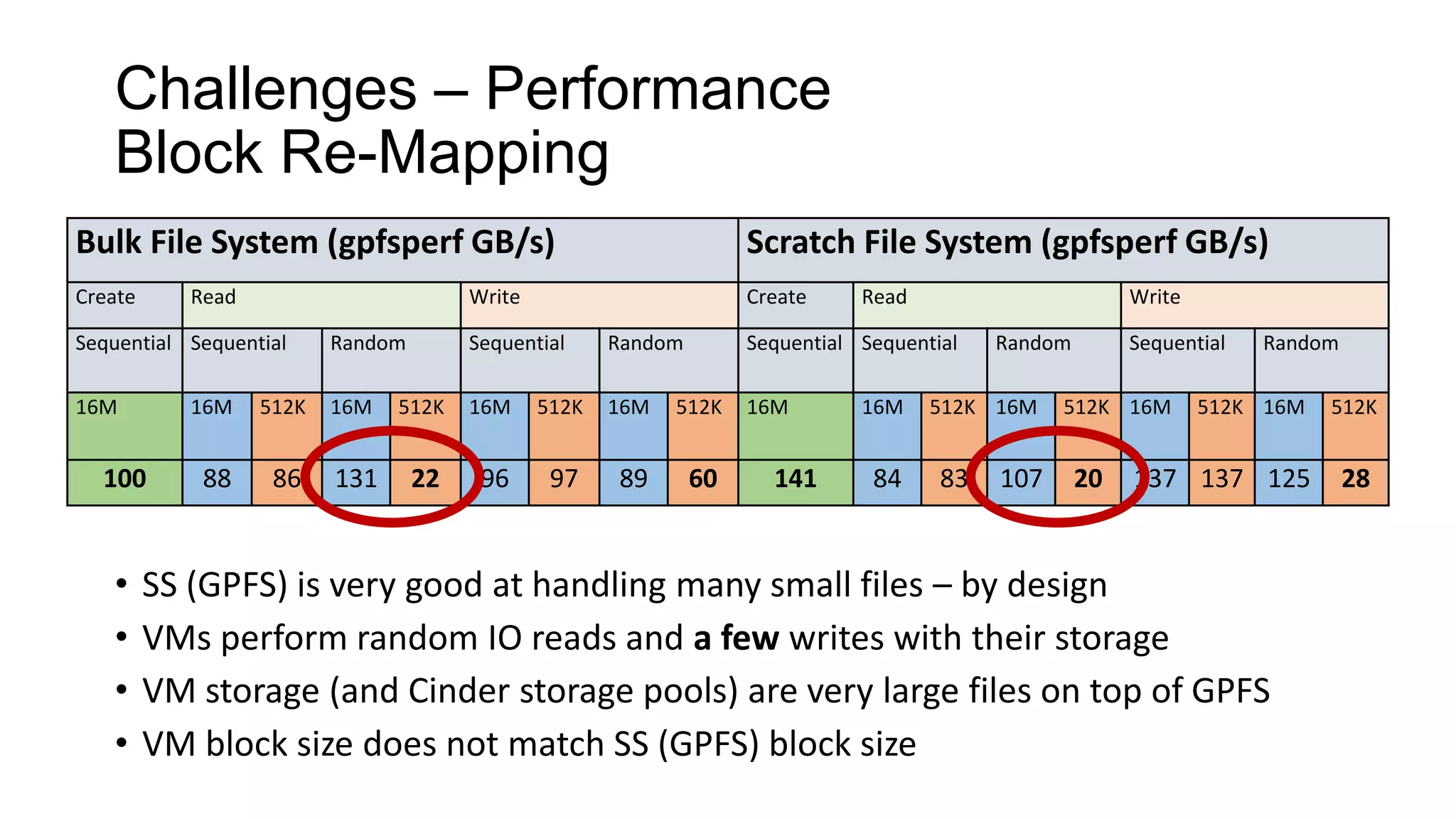

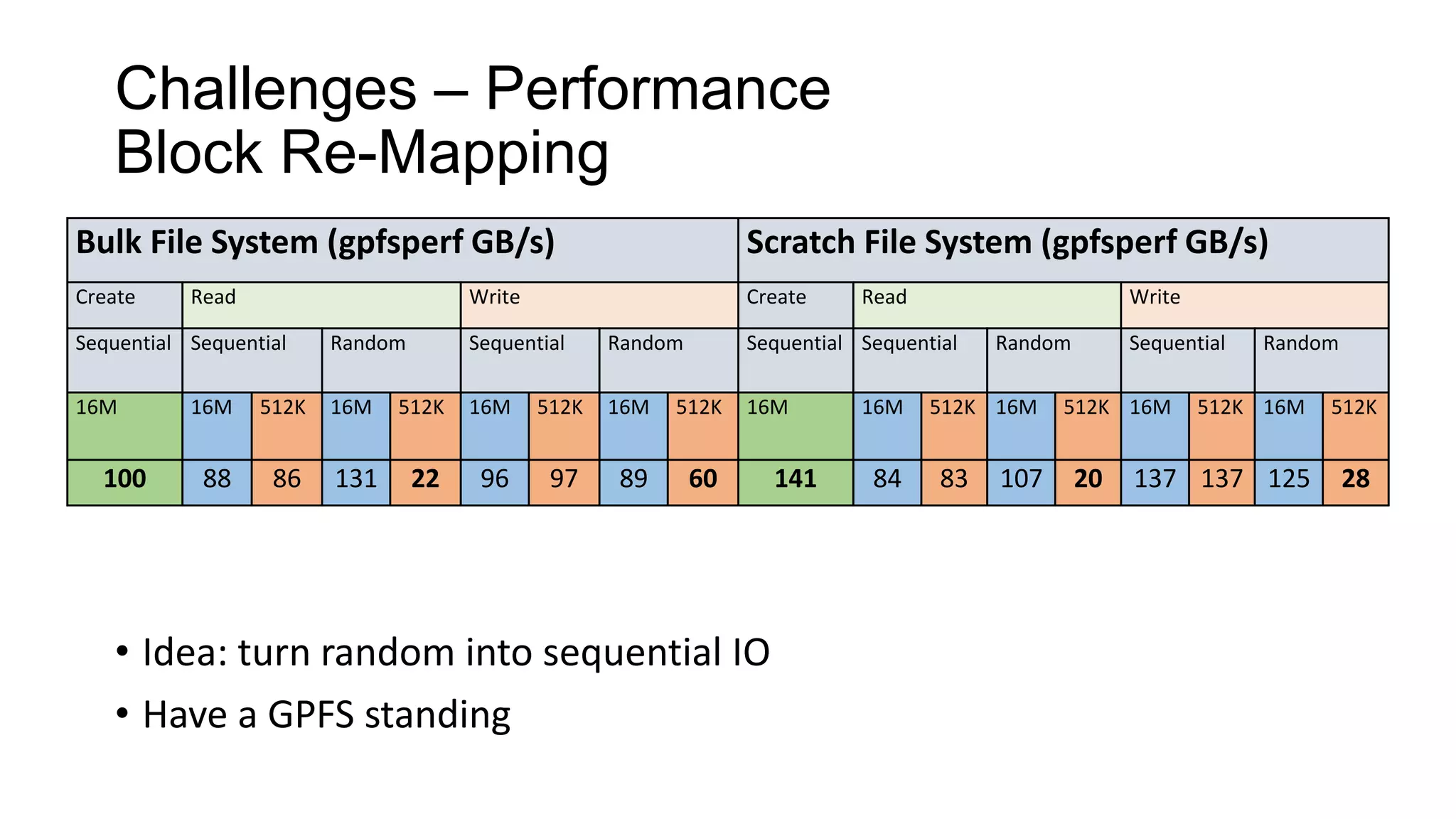

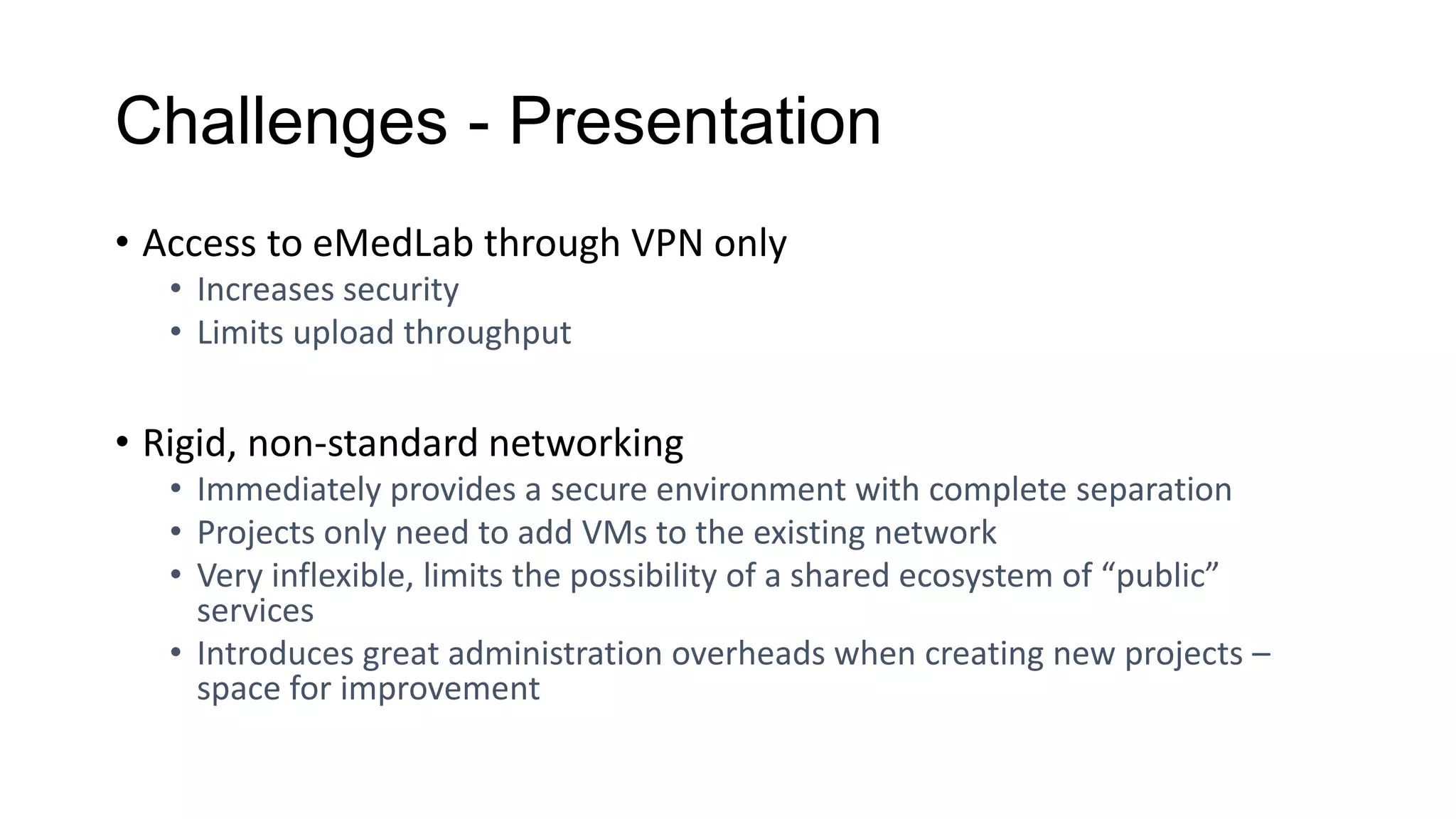

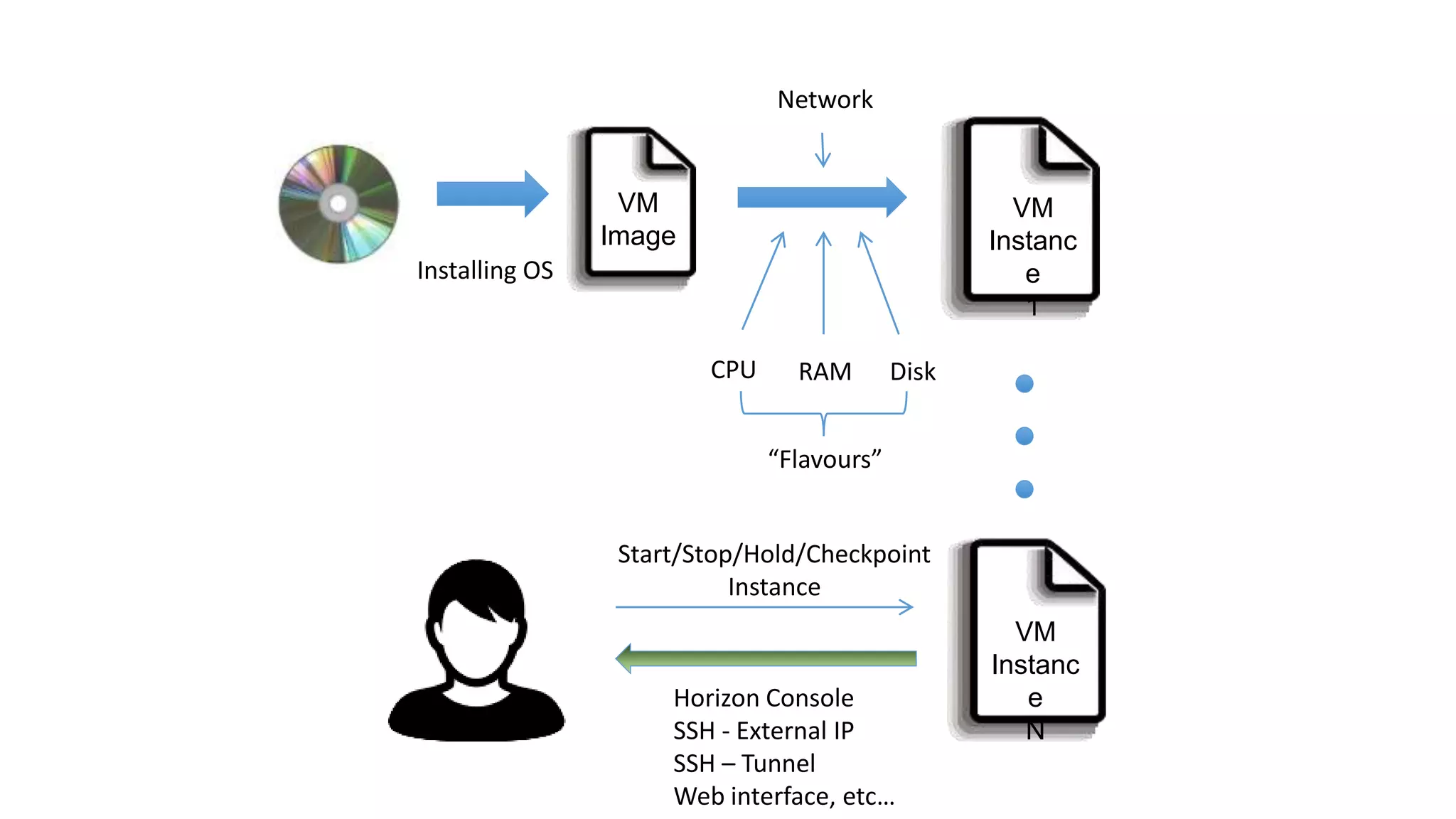

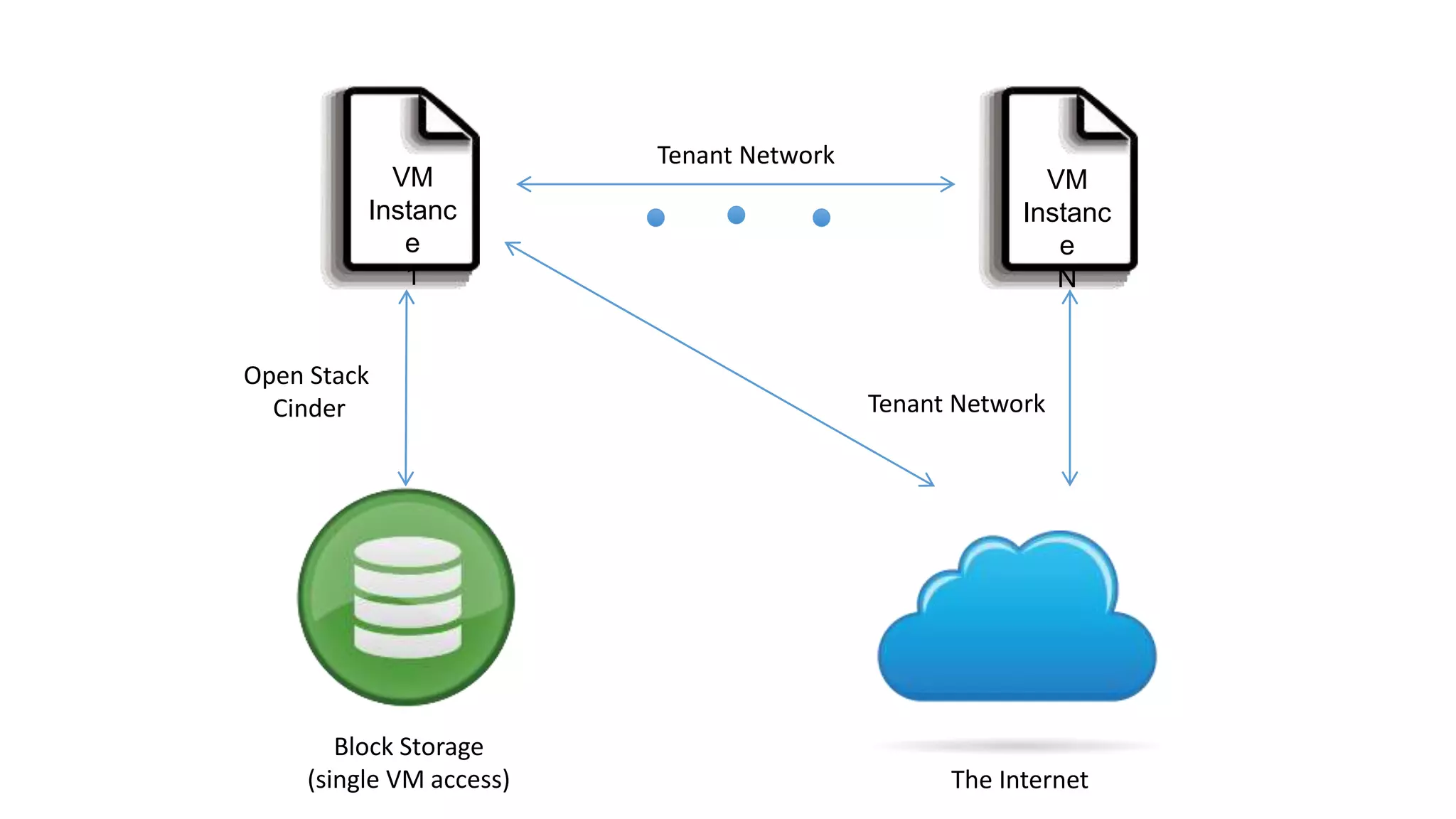

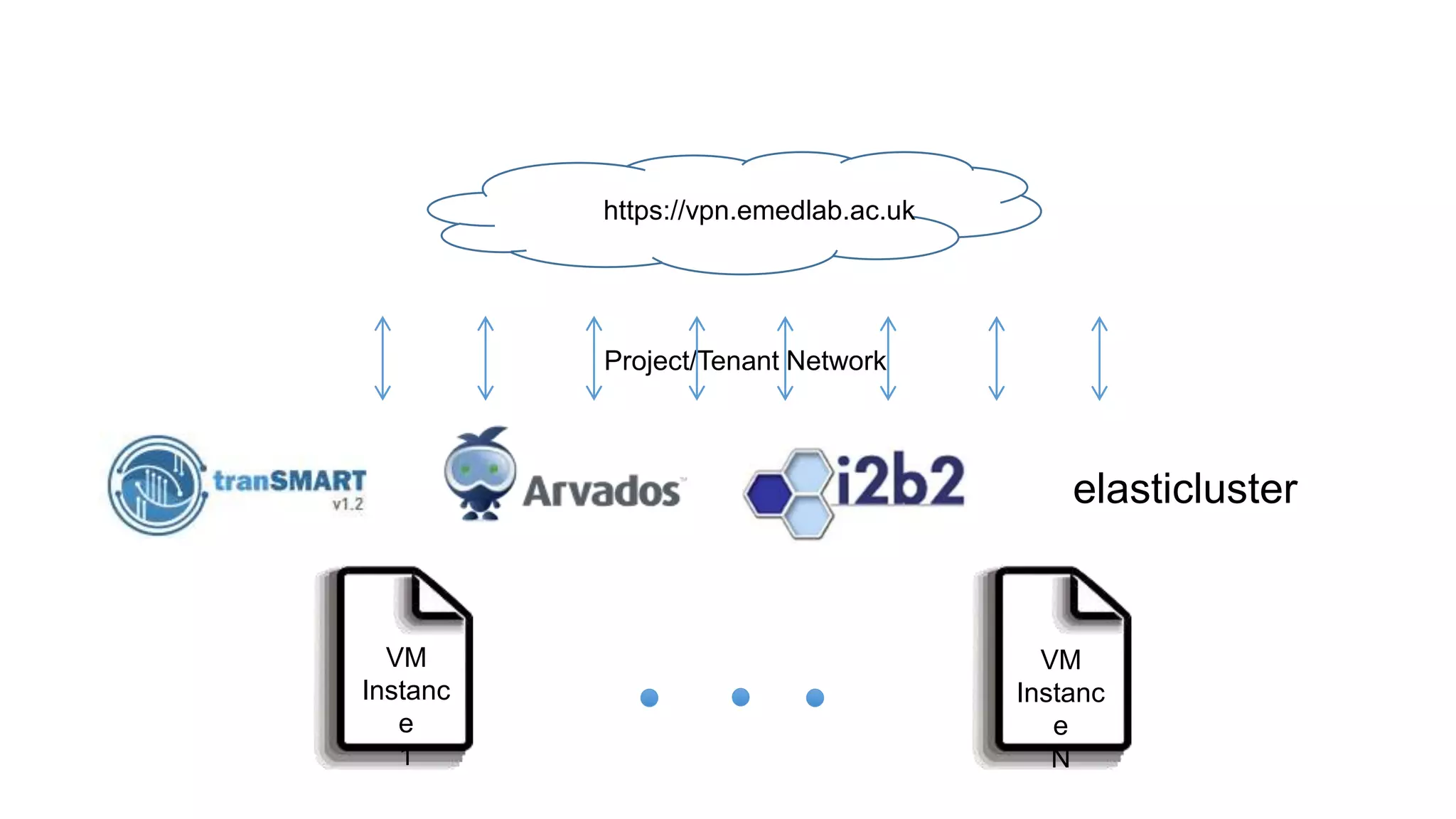

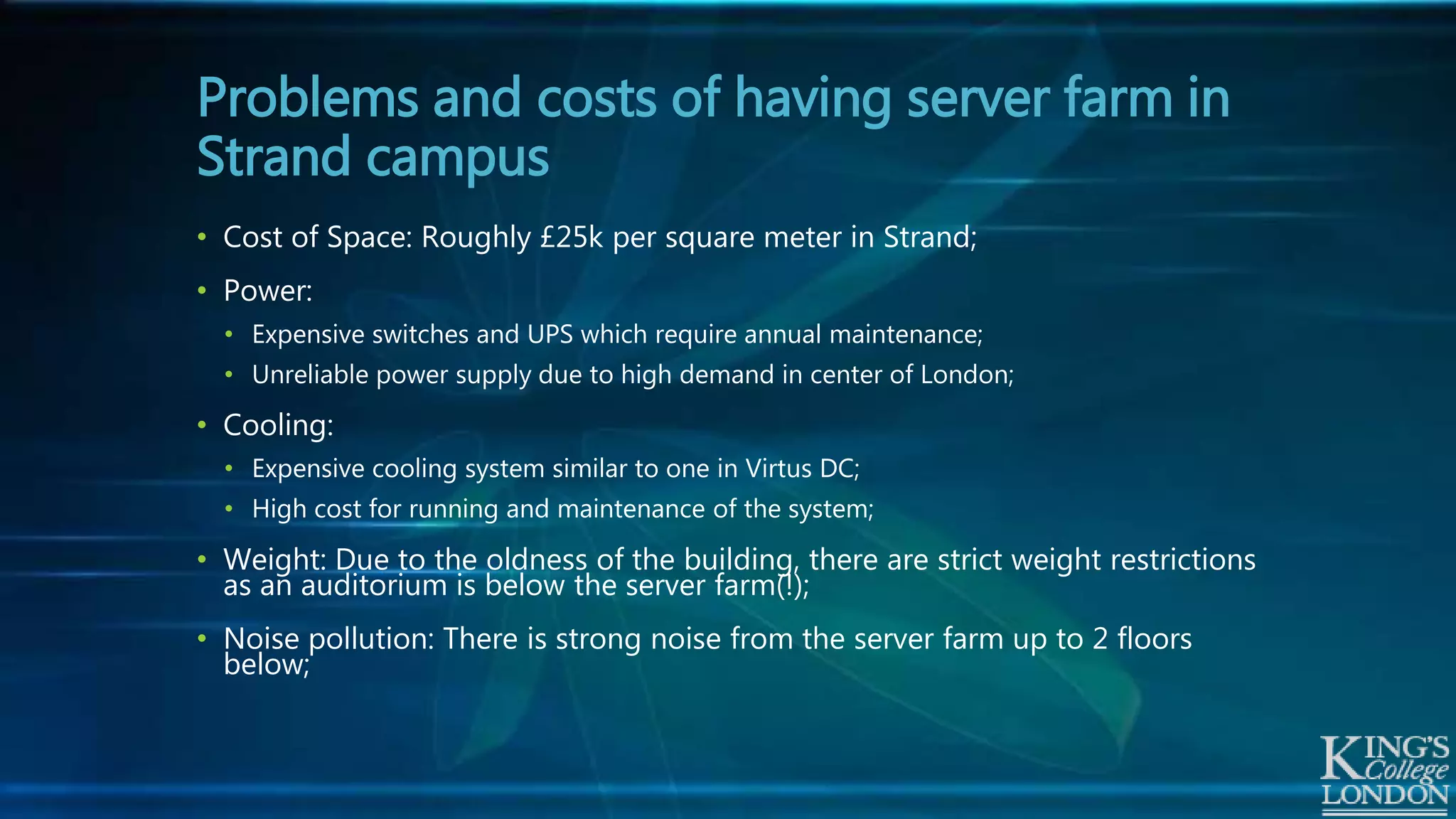

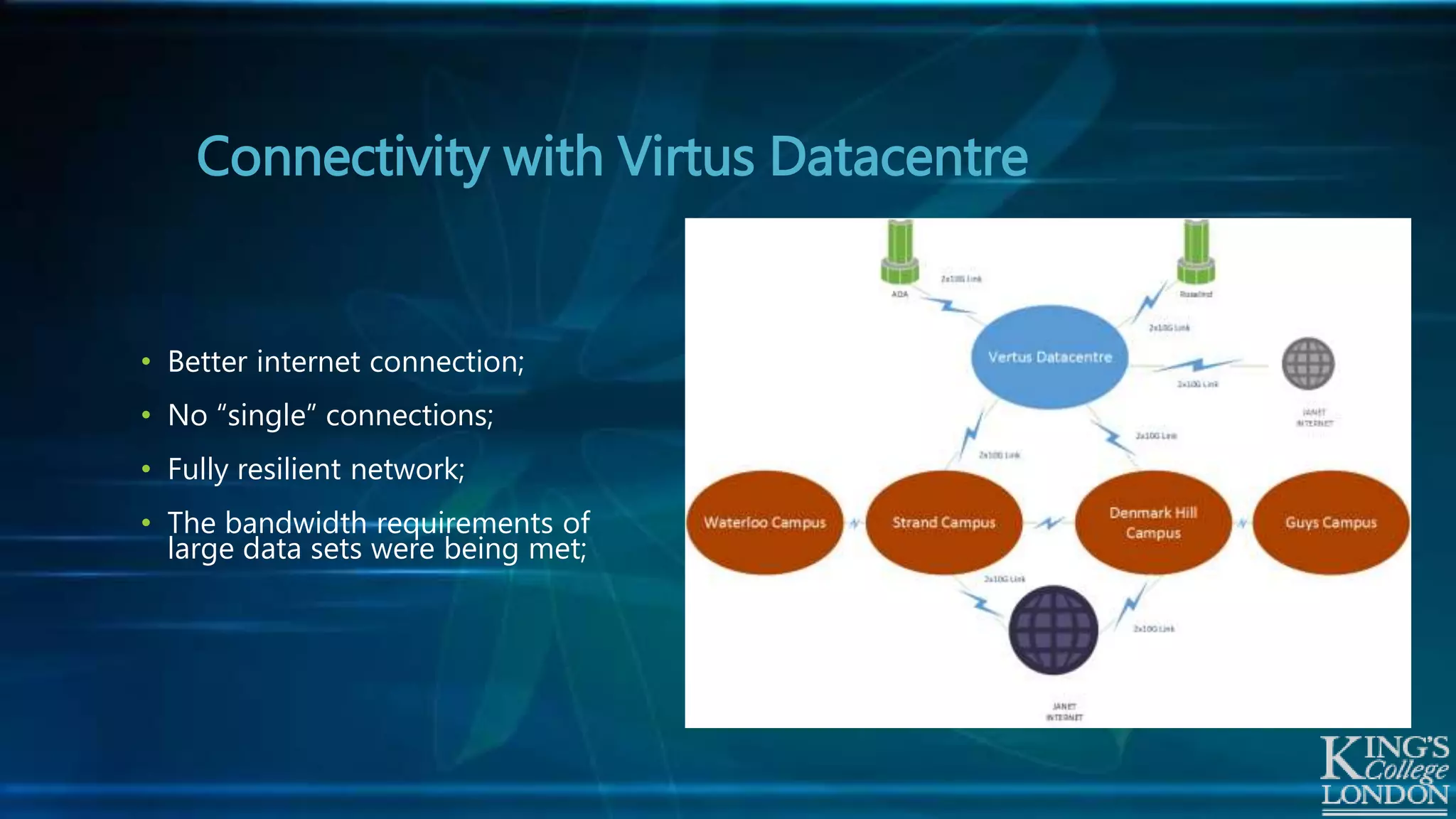

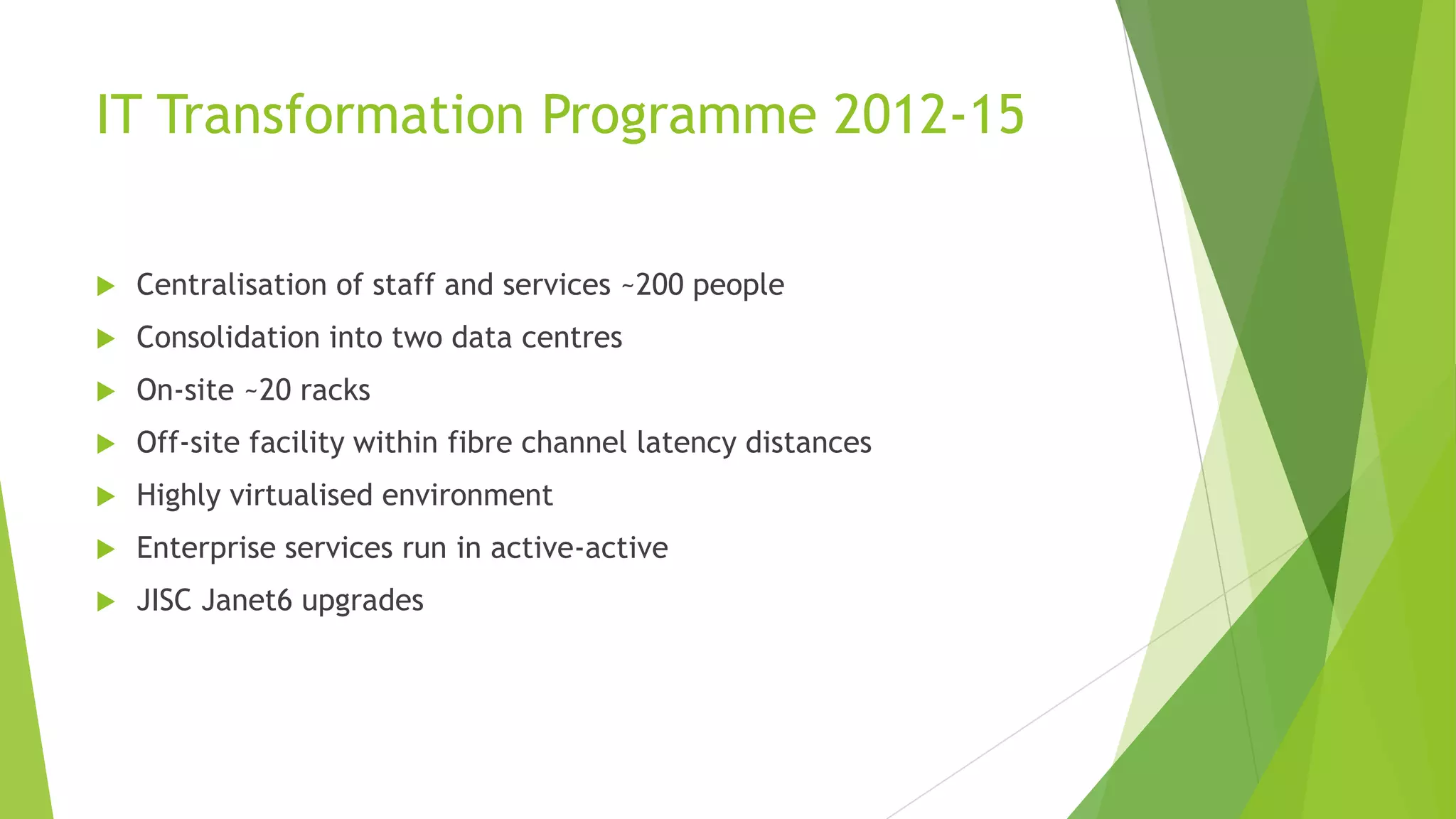

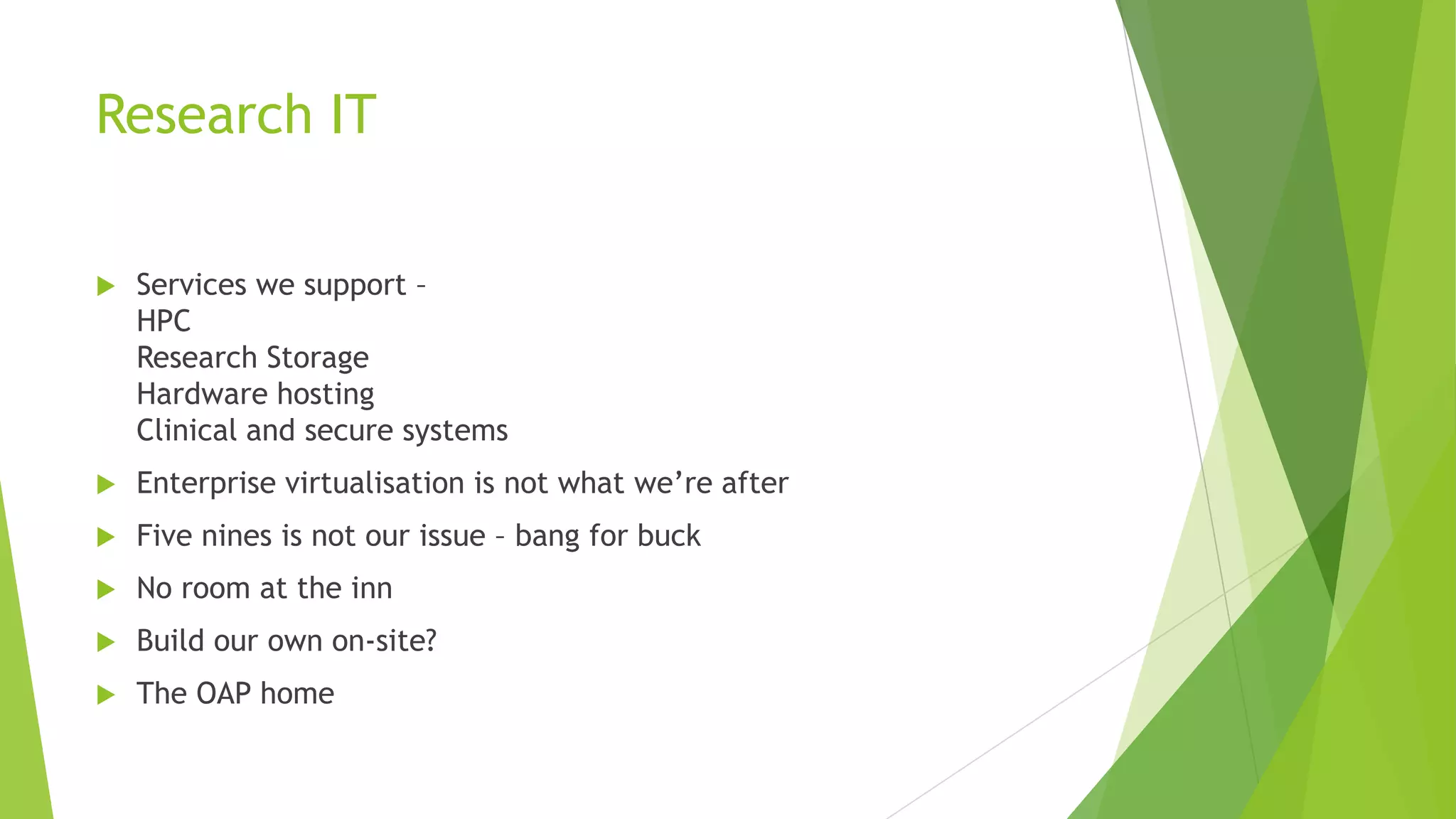

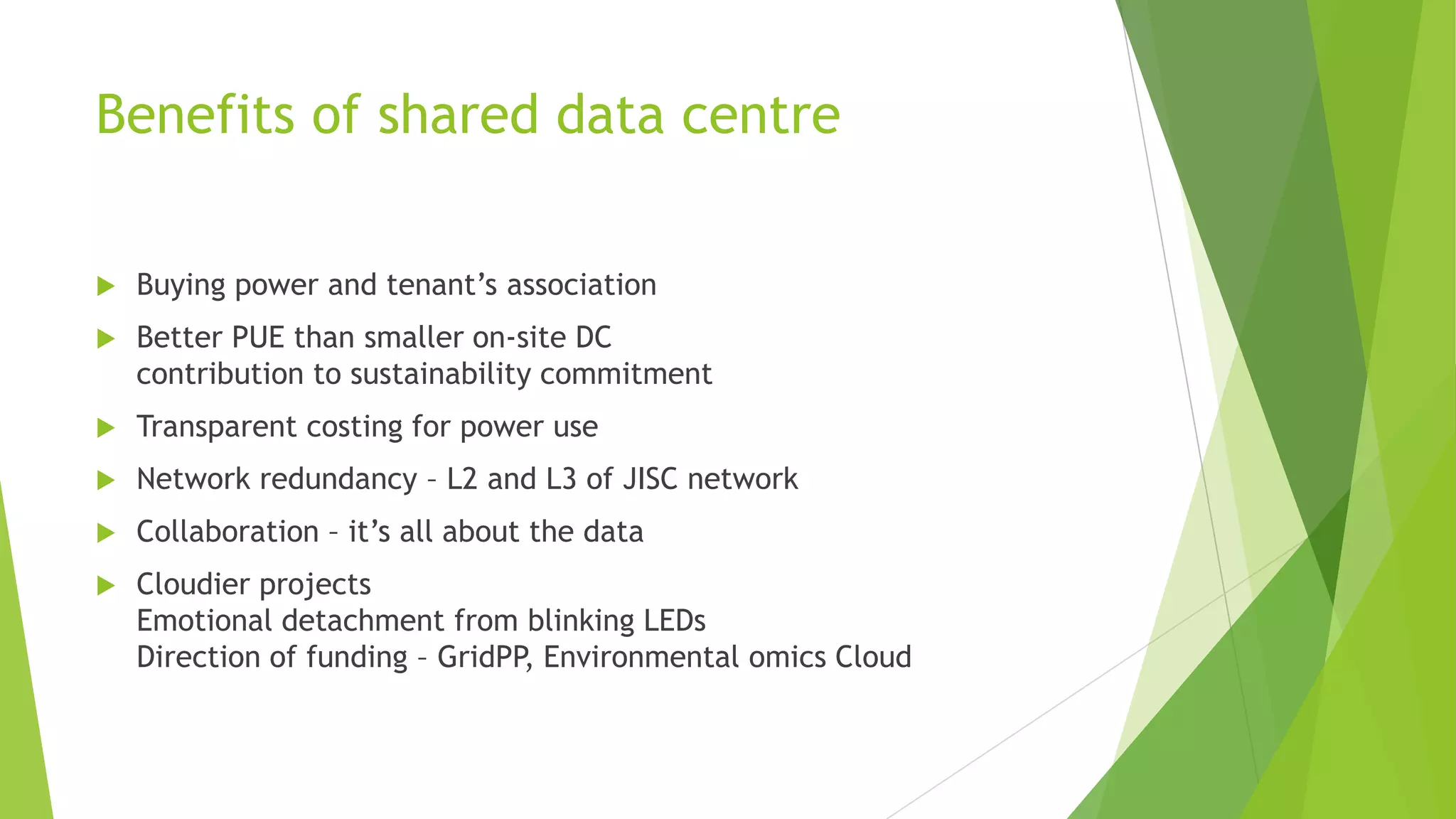

The document discusses shared services and advances in high-performance computing (HPC) and big data facilities for UK research, emphasizing the role of Jisc in enabling collaboration among institutions. Key topics include recent developments in HPC, data management, and equipment-sharing initiatives, along with insights from panel discussions involving leaders from universities and research institutions. It also outlines the challenges of integrating technologies and the potential for improving research efficiency through better infrastructure and support systems.

![1. About Jisc

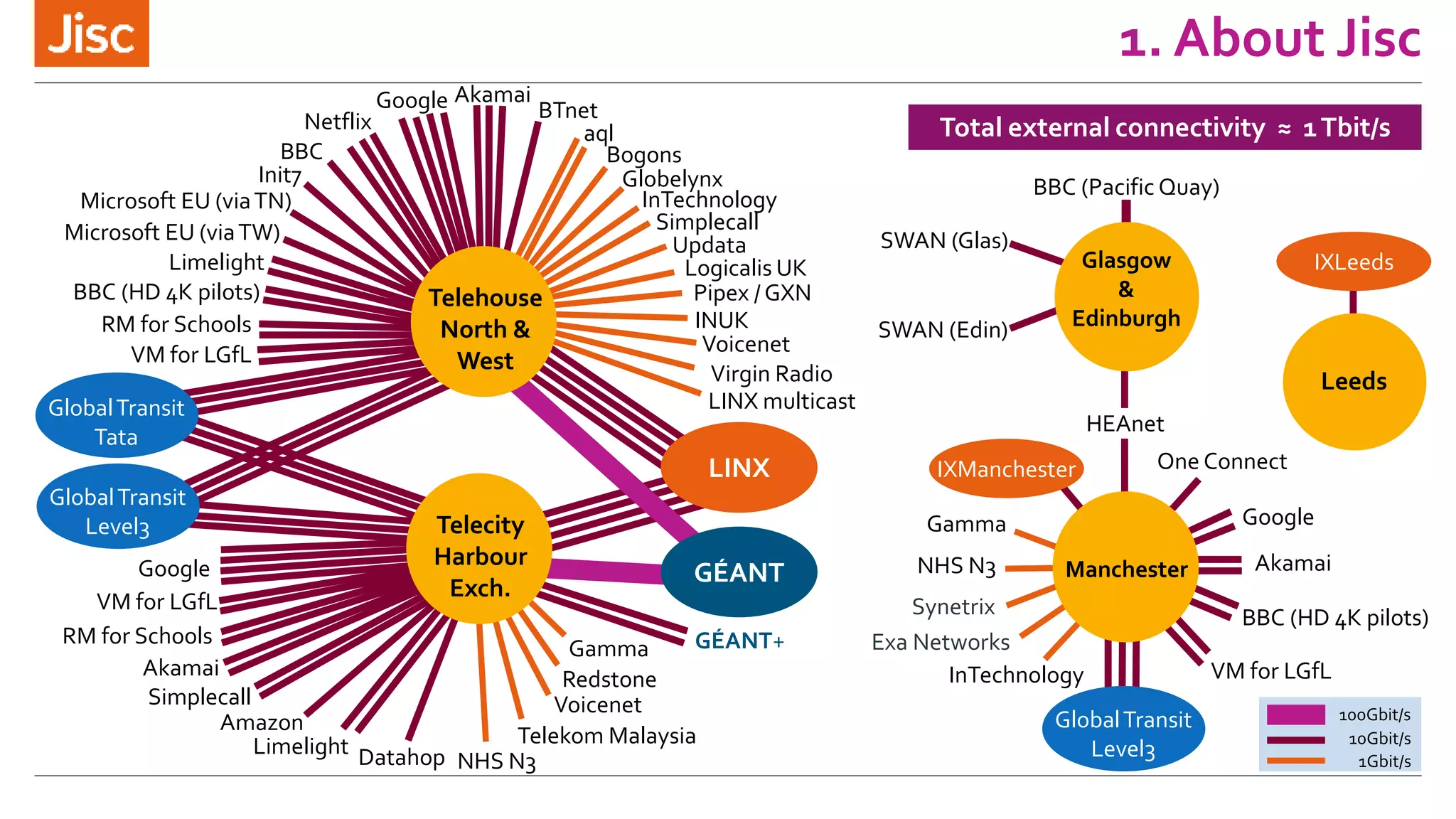

Janet network

[Image credit: Dan Perry]](https://image.slidesharecdn.com/sharedservices-thefutureofhpcandbigdatafaciitiesforukresearch-hpcandbigdata2016-160205100912/75/Shared-services-the-future-of-HPC-and-big-data-facilities-for-UK-research-6-2048.jpg)

![1. About Jisc: Janet network (image credit: Dan Perry)](https://image.slidesharecdn.com/sharedservices-thefutureofhpcandbigdatafaciitiesforukresearch-hpcandbigdata2016-160205100912/75/Shared-services-the-future-of-HPC-and-big-data-facilities-for-UK-research-7-2048.jpg)