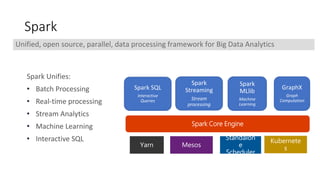

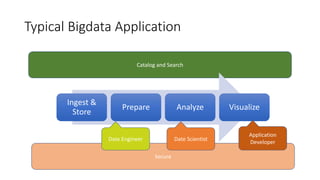

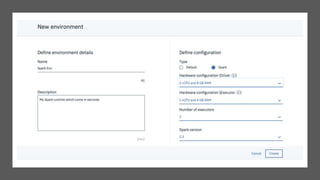

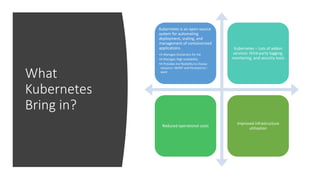

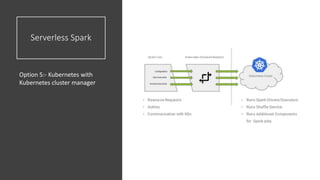

The document discusses the serverless Spark framework and its integration with Kubernetes for big data analytics. It outlines several deployment options, including multi-tenant Spark clusters and function-as-a-service (FaaS), and emphasizes the advantages of Kubernetes: high availability, flexibility, and reduced operational costs. It also highlights how data scientists use Spark for analytics tasks and lists references for further reading.