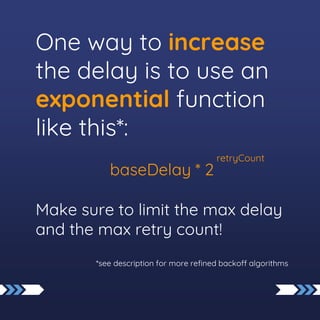

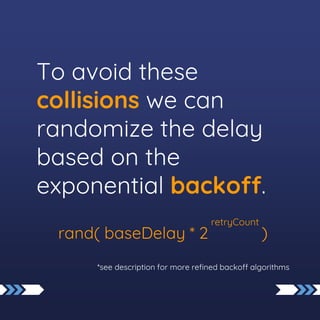

The document discusses lessons learned about using exponential backoff and rate limiting in serverless applications on AWS. It emphasizes the importance of increasing backoff delays to avoid exceeding service rate limits and managing parallel requests effectively by implementing jitter to prevent collisions. Additionally, the AWS SDK provides built-in support for retries and backoff strategies that can be customized as needed.