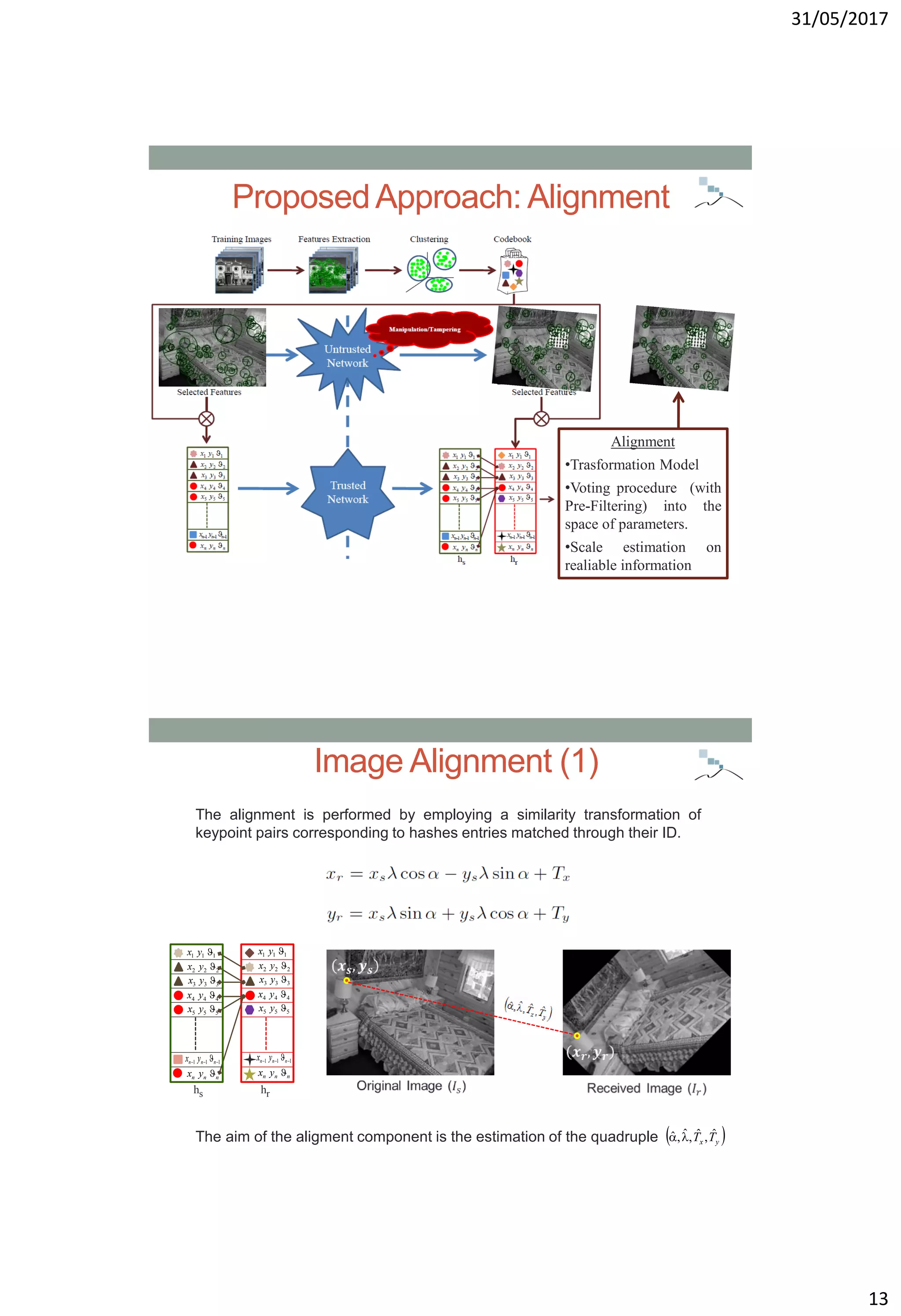

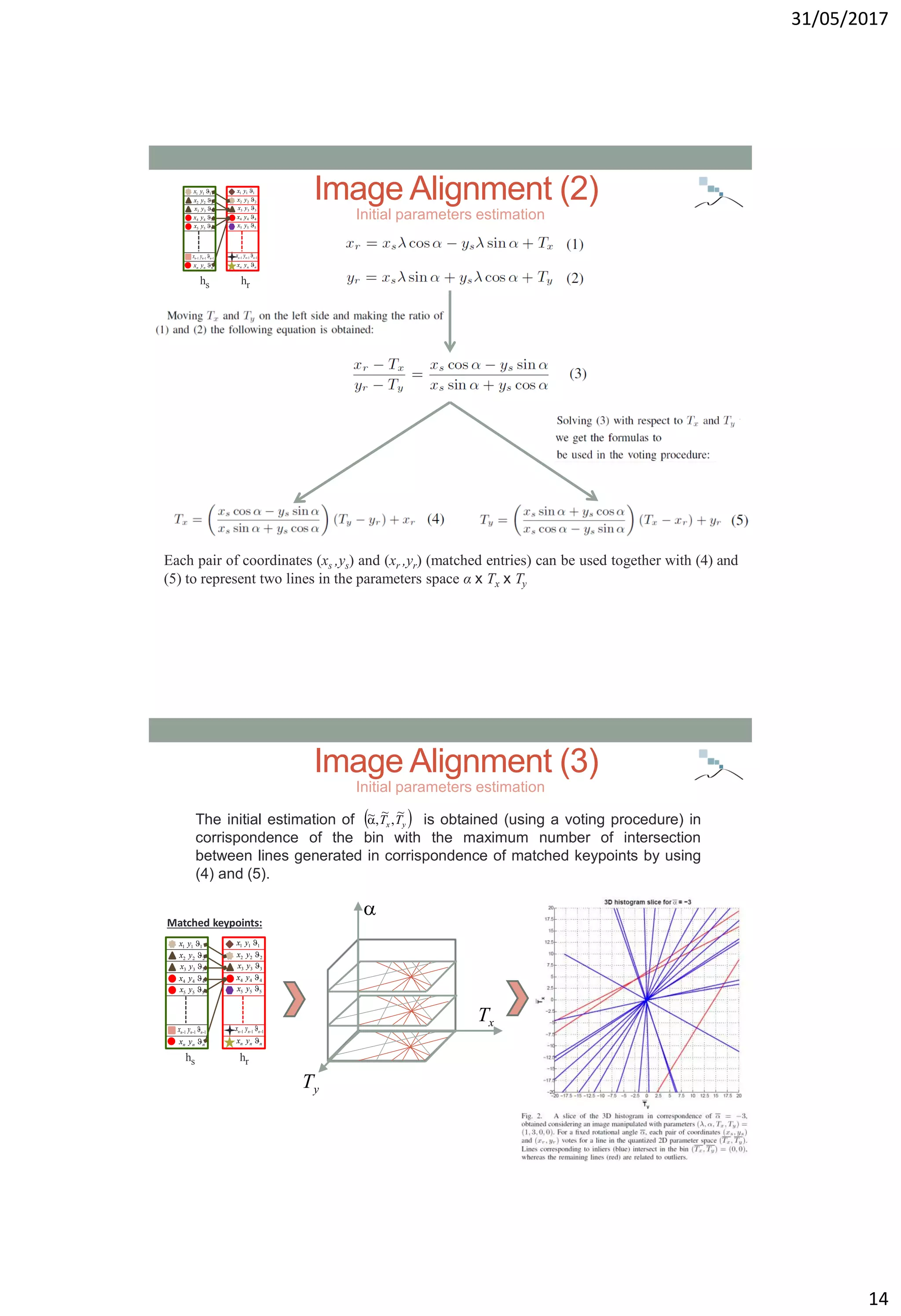

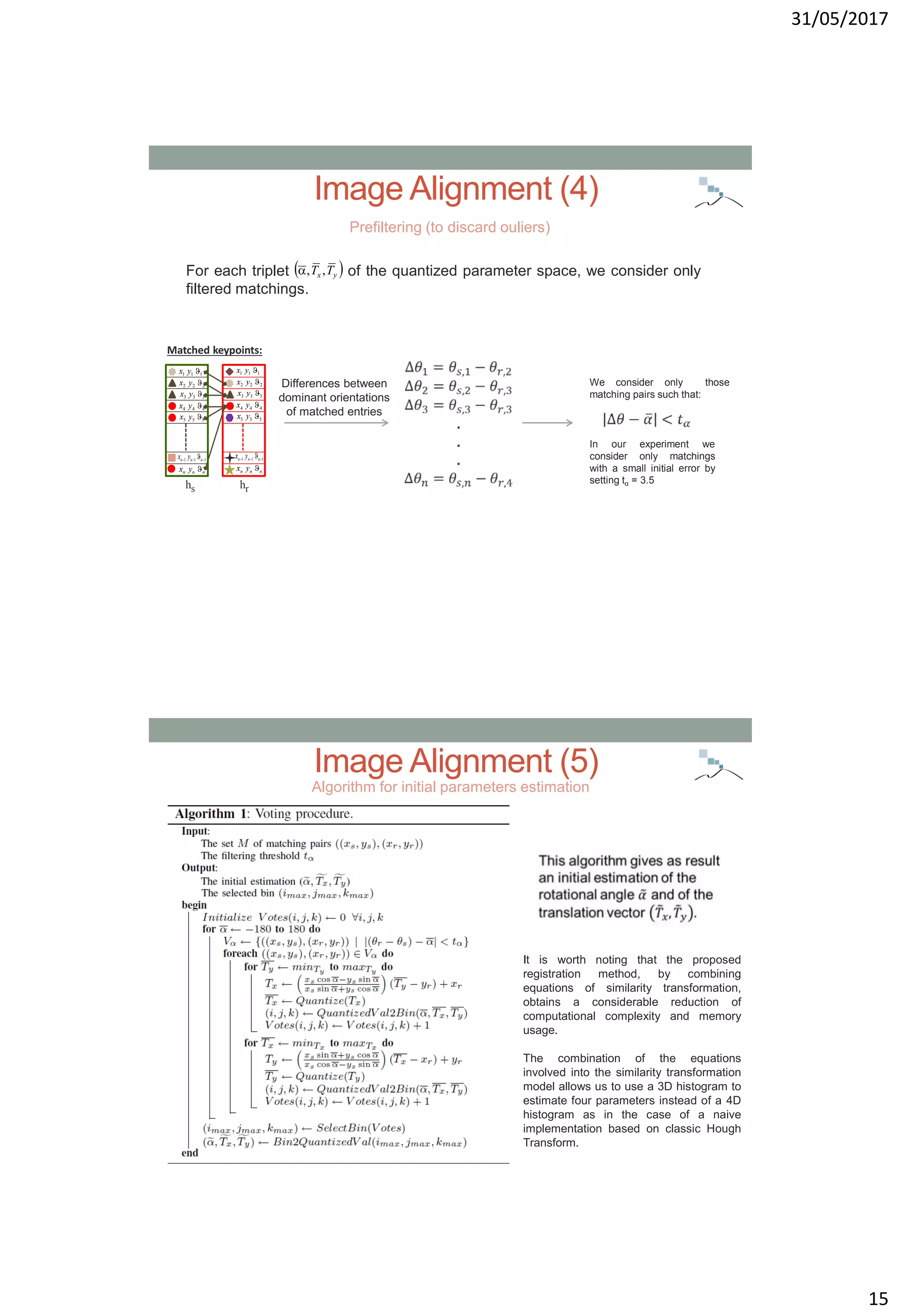

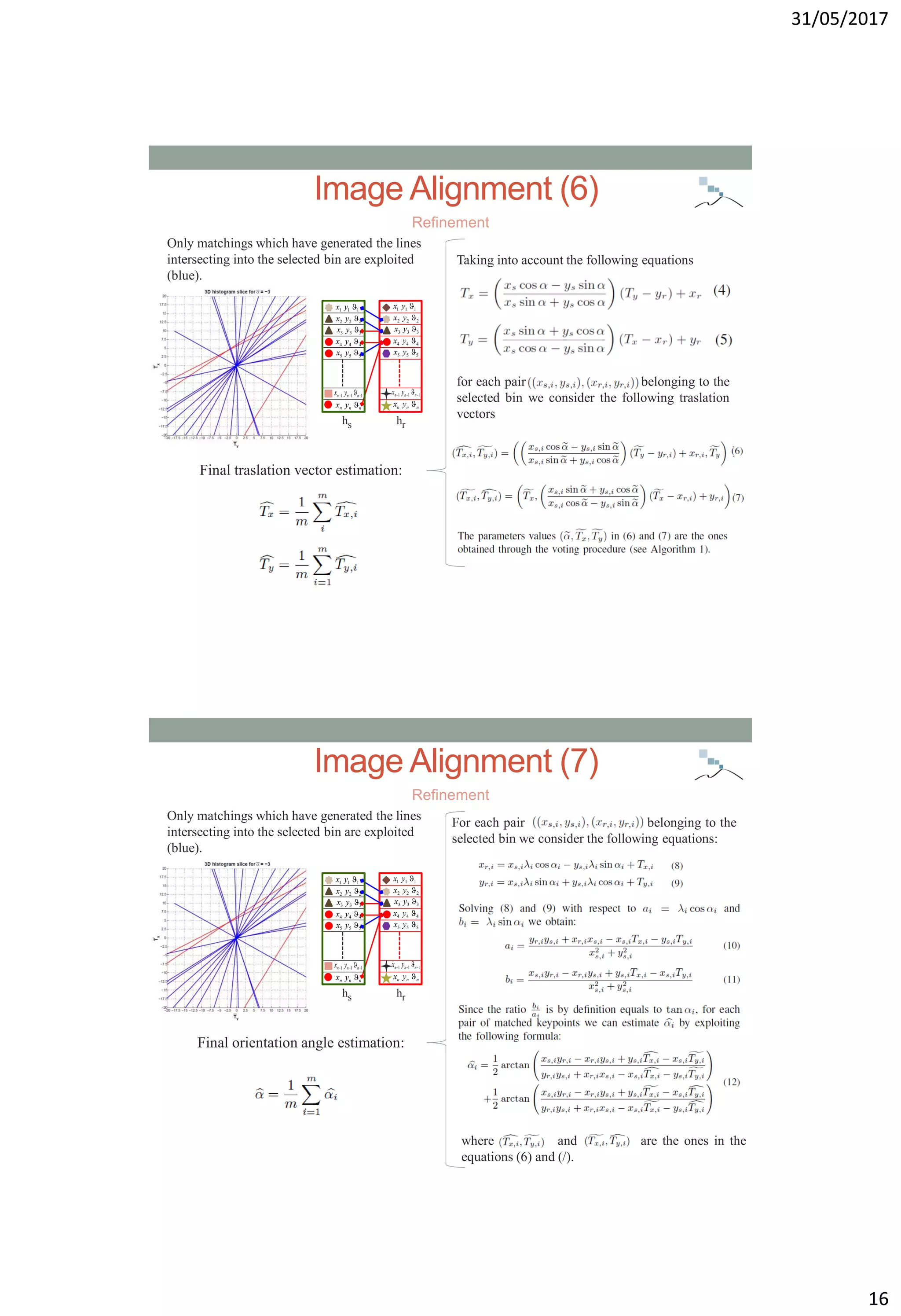

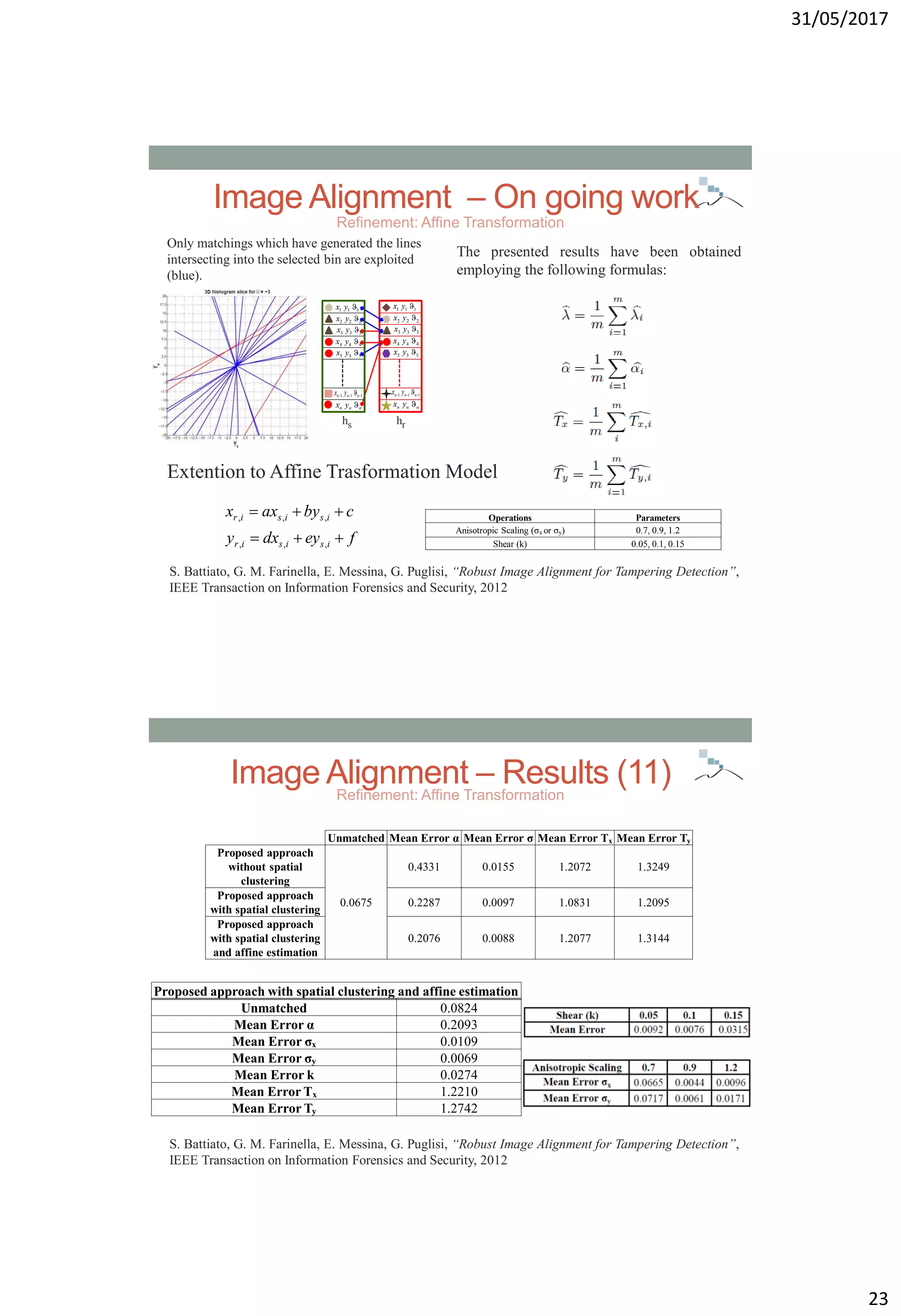

The document discusses a proposed approach for robust image alignment aimed at detecting tampering through forensic hashing. It emphasizes the need for effective tampering localization and robust image signatures that can withstand allowed operations while allowing for the identification of manipulations. The authors further assess previous works and present their methods for enhancing alignment and detection accuracy in images subjected to various geometric transformations.

![31/05/2017

Introduction and Motivations (1)

• Several episodes have called into question the use of visual content as evidence material [PT11, HF06].

Introduction and Motivations (2)

Tampering localization is the process of localizing the regions of an image that have been manipulated for malicious purposes, in order to change the semantic meaning of the visual message.

In order to create a more heroic portrait of himself, Benito Mussolini had the horse handler removed from the original photograph [PT11].

[PT11] “Photo tampering throughout history,” www.cs.dartmouth.edu/farid/research/digitaltampering/

[HF06] H. Farid, “Digital doctoring: how to tell the real from the fake,” Significance, vol. 3, no. 4, pp. 162–166, 2006.

Tampering](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-2-2048.jpg)

![31/05/2017

Related Works: Basic Scheme

[Figure: block diagram of the basic scheme. Labels: Source, Destination, Original Image, Received Image, Image Hash (e.g., Alignment component), Trusted Connection, Untrusted Connection, Authentication Algorithms, Tampering Detection, Reliable Image.]

Related Works (1)

• Fundamental requirement: the image signature should be as “compact” as possible.

Although different robust alignment techniques have been proposed by computer vision researchers, these techniques are unsuitable in the context of forensic hashing.

• To fit the underlying requirements, the authors of [LVW10] proposed to exploit information extracted through the Radon transform and scale space theory in order to estimate the parameters of the geometric transformations.

[LVW10] W. Lu, A. L. Varna, and M. Wu, “Forensic hash for multimedia information,” in Proceedings of the

IS&T-SPIE Electronic Imaging Symposium - Media Forensics and Security, 2010, vol.7541.](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-6-2048.jpg)

![31/05/2017

Related Works (2)

[Figure: Radon transform of an image and of the same image rotated by Θ = 45; the rotation becomes a shift of 45 along the angle axis.]

Related Works (3)

[Figure: Radon transform of an image and of the same image scaled by σ = 0.5.]

[LVW10] W. Lu, A. L. Varna, and M. Wu, “Forensic hash for multimedia information,” in Proceedings of the

IS&T-SPIE Electronic Imaging Symposium - Media Forensics and Security, 2010, vol.7541.](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-7-2048.jpg)
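The two Radon-transform figures rely on standard properties of the transform, which can be written out as a short derivation sketch. The parameterization below (angle θ, offset ρ, integration variable t) is one common convention and is an assumption here, not notation taken from [LVW10]; with a different convention the sign of the shift may flip.

```latex
% Radon transform of an image f (assumed convention)
\mathcal{R}_f(\rho,\theta) = \int_{-\infty}^{\infty}
  f(\rho\cos\theta - t\sin\theta,\; \rho\sin\theta + t\cos\theta)\, dt

% Rotation: if g is f rotated by \theta_0, the transform is shifted along the angle axis
\mathcal{R}_g(\rho,\theta) = \mathcal{R}_f(\rho,\; \theta - \theta_0)

% Scaling: if g(x,y) = f(x/\sigma,\, y/\sigma) with \sigma > 0,
% the transform is rescaled along the \rho axis and in amplitude
\mathcal{R}_g(\rho,\theta) = \sigma\, \mathcal{R}_f(\rho/\sigma,\; \theta)
```

Under these assumptions, a rotation by 45 shows up as a shift of 45 along the angle axis, and a scaling by σ = 0.5 compresses the ρ axis by the same factor.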

![31/05/2017

Related Works (4)

• To make the alignment phase more robust to manipulations such as cropping and tampering, an image hash based on robust invariant features has been proposed in [LW10].

• This technique extends the idea previously proposed in [RS07] by employing the Bag of Features (BOF) model to represent the features used as image hash.

• The BOF representation reduces the space needed for the image signature while maintaining the performance of the alignment component.

[LW10] W. J. Lu and M. Wu, “Multimedia forensic hash based on visual words,” in Proceedings of the IEEE International Conference on Image Processing, 2010, pp. 989–992.

[RS07] S. Roy and Q. Sun, “Robust hash for detecting and localizing image tampering,” in Proceedings of the IEEE International Conference on Image Processing, 2007, pp. 117–120.

Related Works (5)

[Figure: alignment scheme of [LW10]. Sender and receiver each keep the SIFT points with the highest contrast value and label them with IDs from a Shared Vocabulary (obtained by clustering of SIFT points extracted from a training set), producing the hashes hs (sent) and hr (received). Match: same ID and single occurrence. The parameters are then estimated with RANSAC (σ) and RANSAC (θ).]

Ransac (θ)](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-8-2048.jpg)
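The matching rule in the figure ("same ID and single occurrence") can be illustrated with a small sketch. The entry layout `(id, orientation)` and the function name `match_unique_ids` are hypothetical choices for illustration; the actual hash layout of [LW10] is not given in these slides.

```python
from collections import Counter

def match_unique_ids(sender_hash, receiver_hash):
    """Pair hash entries whose codebook id occurs exactly once on both sides."""
    sender_counts = Counter(entry[0] for entry in sender_hash)
    receiver_counts = Counter(entry[0] for entry in receiver_hash)
    receiver_by_id = {entry[0]: entry for entry in receiver_hash}
    matches = []
    for entry in sender_hash:
        word_id = entry[0]
        # Keep the pair only when the id is unambiguous in both hashes.
        if sender_counts[word_id] == 1 and receiver_counts[word_id] == 1:
            matches.append((entry, receiver_by_id[word_id]))
    return matches

# Hypothetical hashes: each entry is (id, orientation in degrees).
h_s = [(3, 10.0), (7, 45.0), (7, 80.0), (12, 5.0)]
h_r = [(3, 55.0), (7, 90.0), (12, 50.0), (12, 20.0)]
pairs = match_unique_ids(h_s, h_r)
print(pairs)
```

In this toy input, id 7 is replicated at the sender and id 12 at the receiver, so only id 3 survives. Discarding replicated ids in this way is the limitation that the later slides address by handling replicated matchings.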

![31/05/2017

Related Works (6)

• In [BS11] a more robust approach based on a cascade of estimators has been introduced.

• The method in [BS11] better handles replicated matchings, which makes the estimation of the orientation parameter more robust.

• Moreover, the cascade of estimators allows a higher precision in estimating the scale factor, outperforming the approach in [LW10].

[BS11] S. Battiato, G. M. Farinella, E. Messina, and G. Puglisi, “Understanding geometric manipulations of images through BOVW-based hashing,” in International Workshop on Content Protection & Forensics (CPAF 2011), 2011.

Related Works (7)

[Figure: alignment scheme of [LW10]. Sender and receiver keep the SIFT points with the highest contrast value and exchange the hashes hs (sent) and hr (received). Match: same ID and single occurrence. The parameters are estimated with RANSAC (σ) and RANSAC (θ).]

Shared Vocabulary

(obtained by clustering

of SIFT points extracted

from a training set)](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-9-2048.jpg)

![31/05/2017

Related Works (8)

[Figure: side-by-side comparison of how the entries of the hashes hs and hr are matched in the approach of [BS11] and in the approach of [LW10], the latter followed by RANSAC (σ) and RANSAC (θ).]

Learned from previous works…

• The BOF representation reduces the space needed for the image signature while maintaining the performance of the alignment component.

• Handling replicated matchings helps to make the parameter estimation phase more robust (especially for the rotation angle estimation).

• A cascade approach (filtering) helps to make the parameter estimation phase more robust (especially for the scale factor estimation).


nn ](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-10-2048.jpg)

![31/05/2017

What we have done more…

• Feature selection problem

• Transformation model (e.g., we wish to estimate translation too)

• Check the robustness on a bigger and more challenging dataset by considering different image transformations

• Consider realistic tampering samples

[Figure: sender and receiver images with labeled features (ID) and the transformation parameters, including Tx and Ty.]

Features Selection: Ordering by Contrast Values

1. SIFT features extraction; each SIFT feature carries [θ, λ, (x, y)].

2. SIFT ordering by contrast values and selection of the top n SIFT.

3. Associate to each selected SIFT the id value (label) of the closest prototype belonging to the shared codebook.

4. The signature h is composed of all the quadruples [id, θ, x, y] associated with the selected SIFT features.

Signature

Generation

Process](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-11-2048.jpg)
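The signature generation steps above can be sketched with NumPy. This is a minimal illustration under stated assumptions: the keypoints, descriptors and contrast values are supplied as plain arrays (no SIFT extractor is called), the 2-D descriptors are toy stand-ins for real SIFT descriptors, and the function name `build_signature` is hypothetical.

```python
import numpy as np

def build_signature(keypoints, descriptors, contrasts, codebook, n):
    """Return the quadruples [id, theta, x, y] of the n highest-contrast features.

    keypoints:   (m, 4) array with rows [theta, lam, x, y]
    descriptors: (m, d) array, one descriptor per keypoint
    contrasts:   (m,) array of contrast values
    codebook:    (k, d) array of shared prototypes
    """
    # Order by decreasing contrast and keep the top n features.
    order = np.argsort(-contrasts)[:n]
    selected = descriptors[order]
    # Squared Euclidean distance from each selected descriptor to each prototype.
    dists = ((selected[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    ids = dists.argmin(axis=1)
    return [(int(i), float(kp[0]), float(kp[2]), float(kp[3]))
            for i, kp in zip(ids, keypoints[order])]

# Toy data (hypothetical values).
keypoints = np.array([[10.0, 1.5, 5.0, 6.0],
                      [20.0, 2.0, 50.0, 60.0],
                      [30.0, 1.0, 15.0, 16.0]])
descriptors = np.array([[0.0, 0.0], [1.0, 1.0], [0.9, 0.8]])
contrasts = np.array([0.2, 0.9, 0.5])
codebook = np.array([[0.0, 0.0], [1.0, 1.0]])

signature = build_signature(keypoints, descriptors, contrasts, codebook, n=2)
print(signature)
```

Note that λ is dropped from the signature, matching the quadruple [id, θ, x, y] on the slide.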

![31/05/2017

Features Selection: Spatial Distribution and Ordering by Contrast Values

1. SIFT features extraction; each SIFT feature carries [θ, λ, (x, y)].

2. Spatial clustering of the extracted SIFT features.

3. Cluster-based SIFT ordering by contrast values and selection of the top one for each cluster.

4. Associate to each selected SIFT the id value (label) of the closest prototype belonging to the shared codebook.

5. The signature h is composed of all the quadruples [id, θ, x, y] associated with the selected SIFT features.

Signature Generation Process

Features Selection: Example

[Figure: original image and pattern, with sender/receiver matches for the two selection strategies. Previous approaches (ordering by contrast values): all are wrong matches. Proposed solution (spatial distribution and ordering by contrast values): different matches are preserved.]

OrderingbyContrastValues](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-12-2048.jpg)
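The difference between the two selection strategies can be sketched as follows. The slides do not say which spatial clustering algorithm is used, so the plain k-means with farthest-point initialization below is an assumption, and the function names are hypothetical.

```python
import numpy as np

def nearest_center(points, centers):
    """Index of the closest center for every point."""
    dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)

def kmeans_labels(points, k, iterations=20):
    """Plain k-means on (x, y) positions with deterministic farthest-point init."""
    centers = [points[0].astype(float)]
    while len(centers) < k:
        d = np.min([((points - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(points[int(np.argmax(d))].astype(float))
    centers = np.array(centers)
    for _ in range(iterations):
        labels = nearest_center(points, centers)
        for c in range(k):
            members = points[labels == c]
            if len(members) > 0:
                centers[c] = members.mean(axis=0)
    return nearest_center(points, centers)

def select_top_per_cluster(xy, contrasts, k):
    """Keep the highest-contrast feature of each spatial cluster."""
    labels = kmeans_labels(xy, k)
    selected = []
    for c in range(k):
        members = np.flatnonzero(labels == c)
        if members.size:
            selected.append(int(members[np.argmax(contrasts[members])]))
    return sorted(selected)

# Two spatial groups; the three strongest features all sit in the first group.
xy = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
               [100.0, 100.0], [101.0, 100.0], [100.0, 101.0]])
contrasts = np.array([0.9, 0.8, 0.7, 0.1, 0.3, 0.2])

by_contrast_only = sorted(np.argsort(-contrasts)[:2].tolist())
with_clustering = select_top_per_cluster(xy, contrasts, k=2)
print(by_contrast_only, with_clustering)
```

Selecting by contrast alone concentrates the signature in one region, whereas the cluster-based choice spreads it over the image, so a local manipulation is less likely to wipe out every selected feature.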

![31/05/2017

Experimental Dataset (1)

In order to cope with scene variability, the tests have been performed considering a subset of the fifteen scene category benchmark dataset [LSP06] and the dataset DBForgery 1.0 [BM09].

• Fifteen scene category dataset [LSP06]: ten images have been randomly sampled from each scene category (average size: 244x272 pixels).

• DBForgery 1.0 [BM09]: 29 different images tampered with different parameter settings (average size: 470x500 pixels).

• The training set used in the experiments is built through a random selection of 179 images from the previously mentioned datasets.

• The test set consists of 21330 images generated through the application of different manipulations on the training images.

[LSP06] S. Lazebnik, C. Schmid, and J. Ponce, “Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2006, pp. 2169–2178.

[BM09] S. Battiato, G. Messina, “Digital Forgery Estimation into DCT Domain - A Critical Analysis,” ACM Multimedia 2009, Multimedia in Forensics (MiFor'09), October 2009, Beijing, China.

Experimental Dataset (2)

| Operations | Parameters |
|---|---|
| Rotation (α) | 3, 5, 10, 30, 45 degrees |
| Scaling (σ) | factor = 0.5, 0.7, 0.9, 1.2, 1.5 |
| Horizontal Translation (Tx) | 5, 10, 20 pixels |
| Vertical Translation (Ty) | 5, 10, 20 pixels |
| Cropping | 19%, 28%, 36% of entire image |
| Tampering | block size 50x50 |
| Malicious Tampering | block size 50x50 |
| Linear Photometric Transformation (a*I+b) | a = 0.90, 0.95, 1, 1.05, 1.10; b = -10, -5, 0, 5, 10 |
| Compression | JPEG Q=10 |
| Seam Carving | 10%, 20%, 30% |
| Realistic Tampering | [BM09] |

Various combinations of above operations](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-18-2048.jpg)
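As a small illustration of one row of the table, the linear photometric transformation a*I+b can be applied to an 8-bit image as sketched below. Rounding and clipping to [0, 255] are assumptions made here for 8-bit storage; the slides do not specify how out-of-range values were handled.

```python
import numpy as np

def linear_photometric(image, a, b):
    """Apply I' = a * I + b to an 8-bit image (rounding and clipping assumed)."""
    out = a * image.astype(np.float64) + b
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)

# Parameter values taken from the table above.
gains = [0.90, 0.95, 1.0, 1.05, 1.10]
offsets = [-10, -5, 0, 5, 10]

image = np.array([[0, 100, 250]], dtype=np.uint8)
brightest = linear_photometric(image, gains[-1], offsets[-1])
identity = linear_photometric(image, 1.0, 0)
print(brightest.tolist(), identity.tolist())
```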

![31/05/2017

Image Alignment – Results (1)

Proposed approach

| Number of SIFT | 15 | 15 | 30 | 30 | 45 | 45 | 60 | 60 |
|---|---|---|---|---|---|---|---|---|
| Unmatched Images | 5.18% | | 1.90% | | 1.12% | | 0.83% | |
| Spatial Clustering | without | with | without | with | without | with | without | with |
| Mean Error α | 1.3826 | 1.9911 | 0.8986 | 0.8627 | 0.6661 | 0.6052 | 0.5658 | 0.4518 |
| Mean Error σ | 0.0462 | 0.0593 | 0.0306 | 0.0302 | 0.0241 | 0.0200 | 0.0208 | 0.0164 |
| Mean Error Tx | 2.7672 | 3.3191 | 1.8621 | 1.9504 | 1.5664 | 1.5626 | 1.4562 | 1.4227 |
| Mean Error Ty | 2.6650 | 3.2428 | 1.9409 | 2.0750 | 1.7009 | 1.7278 | 1.6008 | 1.5944 |

Image Alignment – Results (2)

Unmatched Images

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Lu et al. [LW10] | 7.87% | 2.77% | 1.52% | 1.16% |
| Battiato et al. [BS11] | 0.86% | 0.48% | 0.25% | 0.08% |
| Proposed approach without spatial clustering | 3.00% | 1.35% | 0.87% | 0.73% |
| Proposed approach with spatial clustering | 2.53% | 0.64% | 0.18% | 0.10% |

](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-19-2048.jpg)

![31/05/2017

Image Alignment – Results (3)

Mean Error α

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Unmatched Images | 10.99% | 3.85% | 2.02% | 1.56% |
| Lu et al. [LW10] | 7.3311 | 7.9970 | 7.8600 | 7.4125 |
| Battiato et al. [BS11] | 3.4372 | 2.4810 | 2.4718 | 1.9581 |
| Proposed approach without spatial clustering | 1.1591 | 0.8206 | 0.5485 | 0.4634 |
| Proposed approach with spatial clustering | 1.7933 | 0.8288 | 0.5735 | 0.4318 |

Mean Error σ

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Unmatched Images | 10.99% | 3.85% | 2.02% | 1.56% |
| Lu et al. [LW10] | 0.0619 | 0.0680 | 0.0625 | 0.0592 |
| Battiato et al. [BS11] | 0.0281 | 0.0229 | 0.0197 | 0.0179 |
| Proposed approach without spatial clustering | 0.0388 | 0.0281 | 0.0214 | 0.0183 |
| Proposed approach with spatial clustering | 0.0541 | 0.0287 | 0.0195 | 0.0161 |

Image Alignment – Results (5)

[LW10] W. J. Lu and M. Wu, “Multimedia forensic hash based on visual words,” in Proceedings of the IEEE

International Conference on Image Processing, 2010, pp. 989–992.

[BS11] S. Battiato, G. M. Farinella, E. Messina, and G. Puglisi, “Understanding geometric manipulations of images

through BOVW-based hashing,” in International Workshop on Content Protection & Forensics (CPAF 2011), 2011.](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-20-2048.jpg)

![31/05/2017

Image Alignment – Results (6)

Malicious Manipulation, patch size 50x50

Unmatched Images

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Lu et al. [LW10] | 90.50% | 87.71% | 81.01% | 73.74% |
| Battiato et al. [BS11] | 68.72% | 54.19% | 29.61% | 9.50% |
| Proposed approach without spatial clustering | 87.15% | 86.03% | 74.86% | 64.25% |
| Proposed approach with spatial clustering | 0% | 0% | 0% | 0% |

Mean Error α

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Lu et al. [LW10] | 85.6844 | 79.9884 | 88.4555 | 97.4700 |
| Battiato et al. [BS11] | 86.9447 | 92.0451 | 92.5144 | 91.8478 |
| Proposed approach without spatial clustering | 35.6087 | 33.6800 | 42.5111 | 38.5156 |
| Proposed approach with spatial clustering | 1.2458 | 0.0000 | 0.0000 | 0.0000 |

Mean Error σ

| Number of SIFT | 15 | 30 | 45 | 60 |
|---|---|---|---|---|
| Lu et al. [LW10] | 0.2868 | 0.2934 | 0.2920 | 0.3482 |
| Battiato et al. [BS11] | 0.3141 | 0.3453 | 0.3505 | 0.3493 |
| Proposed approach without spatial clustering | 0.8249 | 0.7891 | 0.9284 | 0.7706 |
| Proposed approach with spatial clustering | 0.0193 | 0.0005 | 0.0002 | 0.0006 |

Malicious Manipulation, patch size 25x25 (only the columns for 45 and 60 SIFT survive in the transcript; row labels follow the row order of the 50x50 tables)

| Number of SIFT | Unmatched Images (45) | Unmatched Images (60) | Mean Error α (45) | Mean Error α (60) | Mean Error σ (45) | Mean Error σ (60) |
|---|---|---|---|---|---|---|
| Lu et al. [LW10] | 20.67% | 21.79% | 14.2848 | 13.4975 | 0.0538 | 0.0564 |
| Battiato et al. [BS11] | 1.68% | 1.12% | 34.3832 | 36.8093 | 0.1288 | 0.1608 |
| Proposed approach without spatial clustering | 14.53% | 13.97% | 7.0392 | 9.6364 | 0.1141 | 0.1815 |
| Proposed approach with spatial clustering | 0.00% | 0.00% | 0.0000 | 0.0000 | 0.0003 | 0.0004 |

Image Alignment – Results (7)

Malicious Manipulation (50x50)

| Number of SIFT | 45 | 45 | 60 | 60 |
|---|---|---|---|---|
| Unmatched Images | 92.74% | | 89.39% | |
| Mean Error | α | σ | α | σ |
| Lu et al. [LW10] | 81.1994 | 0.2750 | 88.2215 | 0.3126 |
| Battiato et al. [BS11] | 96.5480 | 0.4163 | 88.3088 | 0.3058 |
| Proposed approach without spatial clustering | 32.3846 | 0.7285 | 34.9474 | 0.6213 |
| Proposed approach with spatial clustering | 0.0000 | 0.0001 | 0.0000 | 0.0009 |

](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-21-2048.jpg)

![31/05/2017

Image Alignment – Results (8)

Realistic Tampering (DBForgery1.0) [BM09]

| Number of SIFT | 15 | 15 | 30 | 30 | 45 | 45 | 60 | 60 |
|---|---|---|---|---|---|---|---|---|
| Unmatched | 3.45% | | 0.00% | | 0.00% | | 0.00% | |
| Spatial Clustering | without | with | without | with | without | with | without | with |
| Mean Error α | 0.1071 | 0.0000 | 0.0690 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| Mean Error σ | 0.0065 | 0.0002 | 0.0014 | 0.0002 | 0.0006 | 0.0006 | 0.0003 | 0.0003 |
| Mean Error Tx | 0.5675 | 0.0171 | 0.1699 | 0.0104 | 0.0106 | 0.0140 | 0.0092 | 0.0130 |
| Mean Error Ty | 0.3718 | 0.0270 | 0.0283 | 0.0219 | 0.0178 | 0.0172 | 0.0144 | 0.0138 |

Image Alignment – Results (9)

Cropping

| Number of SIFT | 15 | 15 | 30 | 30 | 45 | 45 | 60 | 60 |
|---|---|---|---|---|---|---|---|---|
| Unmatched | 2.05% | | 0.74% | | 0.00% | | 0.00% | |
| Spatial Clustering | without | with | without | with | without | with | without | with |
| Mean Error α | 1.5494 | 1.5399 | 0.2758 | 0.2720 | 0.1750 | 0.1266 | 0.1695 | 0.0782 |
| Mean Error σ | 0.0416 | 0.0427 | 0.0143 | 0.0089 | 0.0078 | 0.0064 | 0.0088 | 0.0061 |
| Mean Error Tx | 2.3241 | 2.1522 | 0.6974 | 0.6862 | 0.5512 | 0.4864 | 0.5444 | 0.4527 |
| Mean Error Ty | 1.9857 | 1.9840 | 0.7036 | 0.6820 | 0.5474 | 0.4773 | 0.5158 | 0.4660 |

](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-22-2048.jpg)

![31/05/2017

Alignment - Complexity Comparison

[LW10] W. J. Lu and M. Wu, “Multimedia forensic hash based on visual words,” in Proceedings of the IEEE

International Conference on Image Processing, 2010, pp. 989–992.

[BS11] S. Battiato, G. M. Farinella, E. Messina, and G. Puglisi, “Understanding geometric manipulations of images

through BOVW-based hashing,” in International Workshop on Content Protection & Forensics (CPAF 2011), 2011.

Proposed Approach: Tampering

[Figure: after alignment, the two hashes for tampering are compared (= ?) to perform Tampering Detection.]

Alignment

• Transformation model

• Voting procedure (with pre-filtering) in the space of parameters

• Scale estimation on reliable information

](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-24-2048.jpg)
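The slide only names a voting procedure in the space of parameters, without details. The sketch below shows one simple way such a vote could work for the rotation angle alone: every matched pair votes with the difference of its orientations, and the most populated histogram bin wins. The bin width, the function name `vote_rotation` and the use of a 1-D histogram are all assumptions for illustration; the pre-filtering step and the scale and translation estimates are not covered.

```python
import numpy as np

def vote_rotation(theta_sender, theta_receiver, bin_width=5.0):
    """Estimate a rotation angle (degrees) by histogram voting over matched pairs."""
    diffs = (np.asarray(theta_receiver) - np.asarray(theta_sender)) % 360.0
    bins = np.floor(diffs / bin_width).astype(int)
    counts = np.bincount(bins, minlength=int(np.ceil(360.0 / bin_width)))
    best = int(np.argmax(counts))
    # Pairs that fall in the winning bin are treated as reliable votes.
    inliers = bins == best
    return float(diffs[inliers].mean()), inliers

# Hypothetical orientations of five matched features; the last match is wrong.
theta_s = [10.0, 100.0, 200.0, 350.0, 45.0]
theta_r = [40.5, 130.0, 231.0, 20.0, 170.0]

alpha, inliers = vote_rotation(theta_s, theta_r)
print(alpha, inliers.tolist())
```

A fixed binning like this can split votes that straddle a bin edge, so a practical version would likely need overlapping bins or a finer search around the winning bin.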

![31/05/2017

Tampering Detection (1)

The representation of each block is based on a histogram of oriented gradients (HOG):

• Create the gradient image and divide it into blocks of 32x32 pixels.

• For each pixel of the block, the magnitude ρ and the orientation θ are computed: (ϑ1,ρ1), (ϑ2,ρ2), …, (ϑL,ρL).

• Each pixel of the block votes for a bin (4 bins) using its magnitude.

• Finally, the histogram is normalized and quantized.

Tampering Detection (2)

Each block representation is part of the image signature, so it has to be as small as possible. We compared two different solutions:

• Uniform quantization: each bin is quantized using a fixed number of bits. In our tests, we used 3 bits, so 12 bits are required to encode a single histogram. For each image with N blocks, 12*N bits are needed to encode the hash.

• Non-uniform quantization: it uses a precomputed shared vocabulary of histograms of oriented gradients, obtained by clustering (through k-means) all the histograms of gradients extracted from the whole scene category dataset. In this case the histogram centroids are not quantized. Each block is hence associated with the ID of the closest centroid. The sequence of ids is the image hash representation.

[id1,id2,…idk]](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-25-2048.jpg)
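The block representation and the two quantization options can be sketched in NumPy. Several details are assumptions not stated on the slides: orientations are taken as unsigned (folded into [0, π)), histograms are normalized to sum to 1, and the uniform quantizer maps [0, 1] linearly onto 3-bit levels. Function names are hypothetical.

```python
import numpy as np

def block_histograms(image, block=32, bins=4):
    """Magnitude-weighted orientation histogram for each block x block tile."""
    img = image.astype(np.float64)
    gy, gx = np.gradient(img)                        # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    orientation = np.mod(np.arctan2(gy, gx), np.pi)  # unsigned orientation (assumed)
    bin_index = np.minimum((orientation / np.pi * bins).astype(int), bins - 1)
    rows, cols = img.shape[0] // block, img.shape[1] // block
    hists = np.zeros((rows * cols, bins))
    for r in range(rows):
        for c in range(cols):
            tile = (slice(r * block, (r + 1) * block),
                    slice(c * block, (c + 1) * block))
            # Each pixel votes for its orientation bin with its gradient magnitude.
            hists[r * cols + c] = np.bincount(bin_index[tile].ravel(),
                                              weights=magnitude[tile].ravel(),
                                              minlength=bins)
    totals = hists.sum(axis=1, keepdims=True)
    return np.divide(hists, totals, out=np.zeros_like(hists), where=totals > 0)

def quantize_uniform(hists, bits=3):
    """Uniform quantization: every bin is stored with a fixed number of bits."""
    levels = 2 ** bits - 1
    return np.rint(hists * levels).astype(np.uint8)

def nearest_centroid_ids(hists, centroids):
    """Non-uniform option: replace each histogram with the id of its closest centroid."""
    d = ((hists[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

# Toy 64x64 image with a horizontal intensity ramp (4 blocks of 32x32).
image = np.tile(np.arange(64, dtype=np.float64), (64, 1))
hists = block_histograms(image)
codes = quantize_uniform(hists)
bits_per_image = codes.size * 3                      # 12 bits per block
centroids = np.array([[0.25, 0.25, 0.25, 0.25], [1.0, 0.0, 0.0, 0.0]])  # hypothetical vocabulary
ids = nearest_centroid_ids(hists, centroids)
print(codes.tolist(), bits_per_image, ids.tolist())
```

With uniform quantization the hash grows as 12 bits per block, while the vocabulary-based option stores a single id per block whose bit cost depends on the vocabulary size k.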

![31/05/2017

Tampering Detection (3)

[Equation (1): similarity measure; the formula is missing from this transcript.]

Tampering Detection – Results (1)

[LW10] W. J. Lu and M. Wu, “Multimedia forensic hash based on visual words,” in Proceedings of the IEEE

International Conference on Image Processing, 2010, pp. 989–992.

[BS11] S. Battiato, G. M. Farinella, E. Messina, and G. Puglisi, “Understanding geometric manipulations of images

through BOVW-based hashing,” in International Workshop on Content Protection & Forensics (CPAF 2011), 2011.](https://image.slidesharecdn.com/multimediasecurity-robustimagealignmentfortamperingdetection-170601090506/75/Robust-image-alignment-for-tampering-detection-26-2048.jpg)