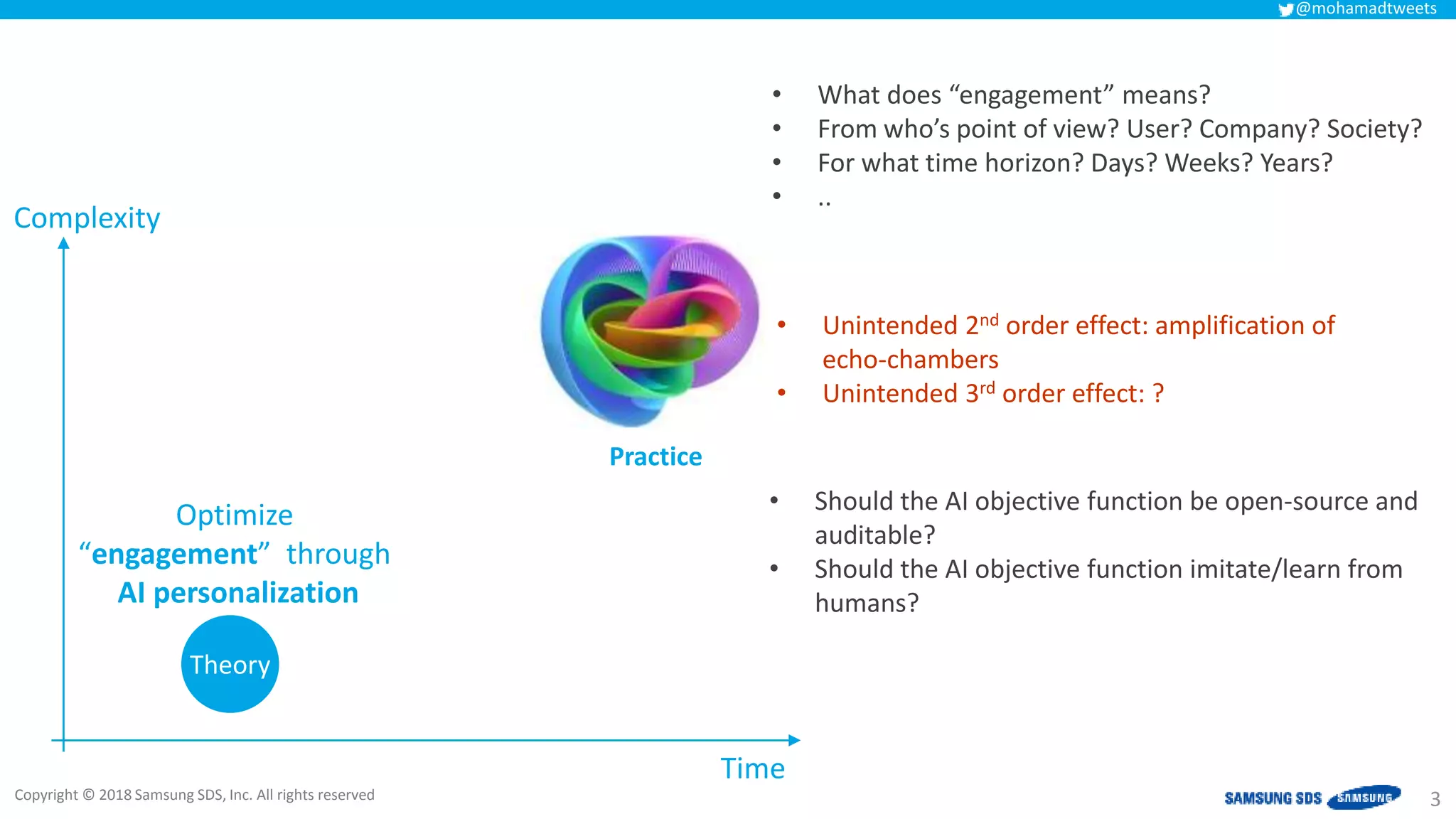

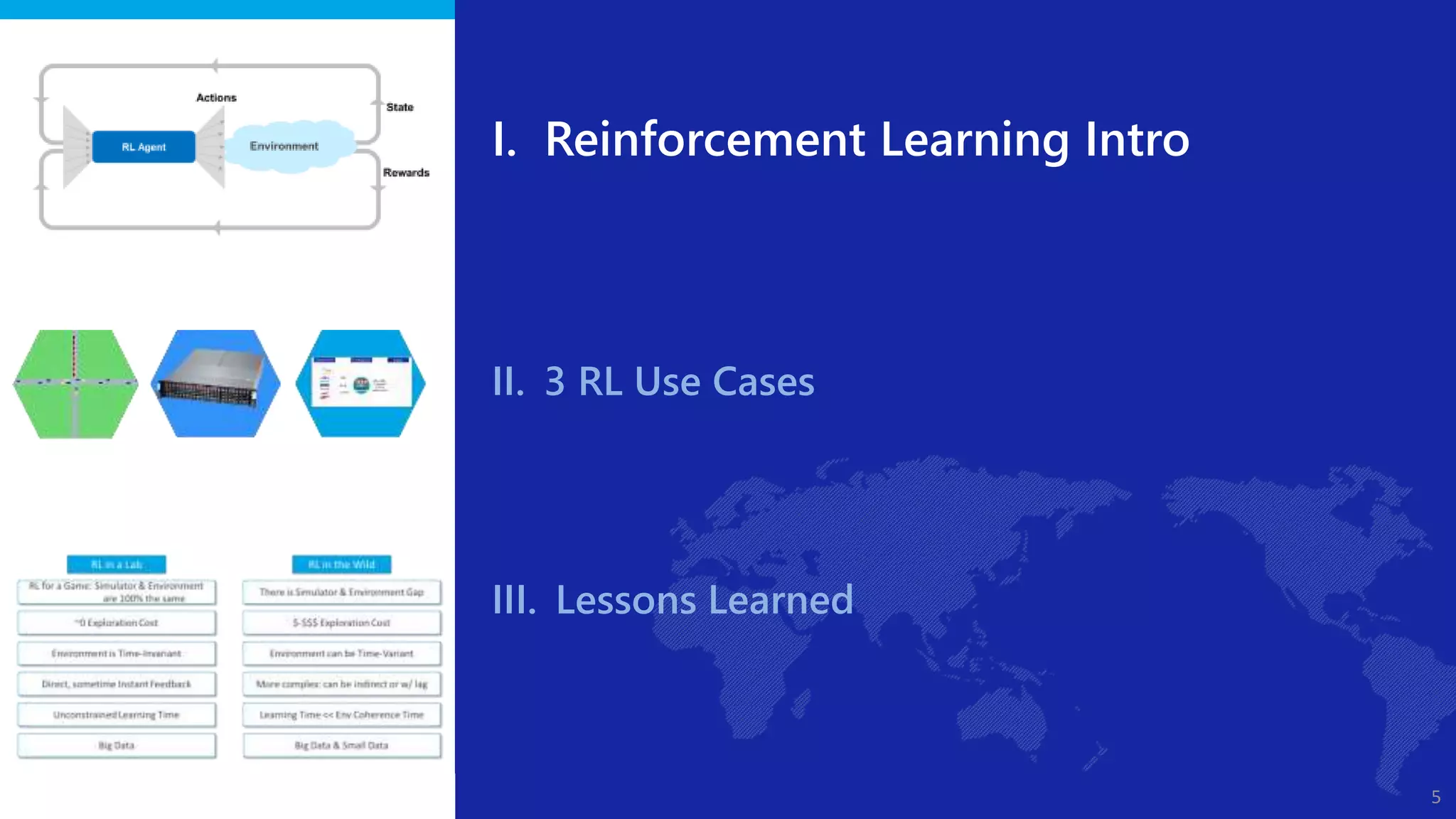

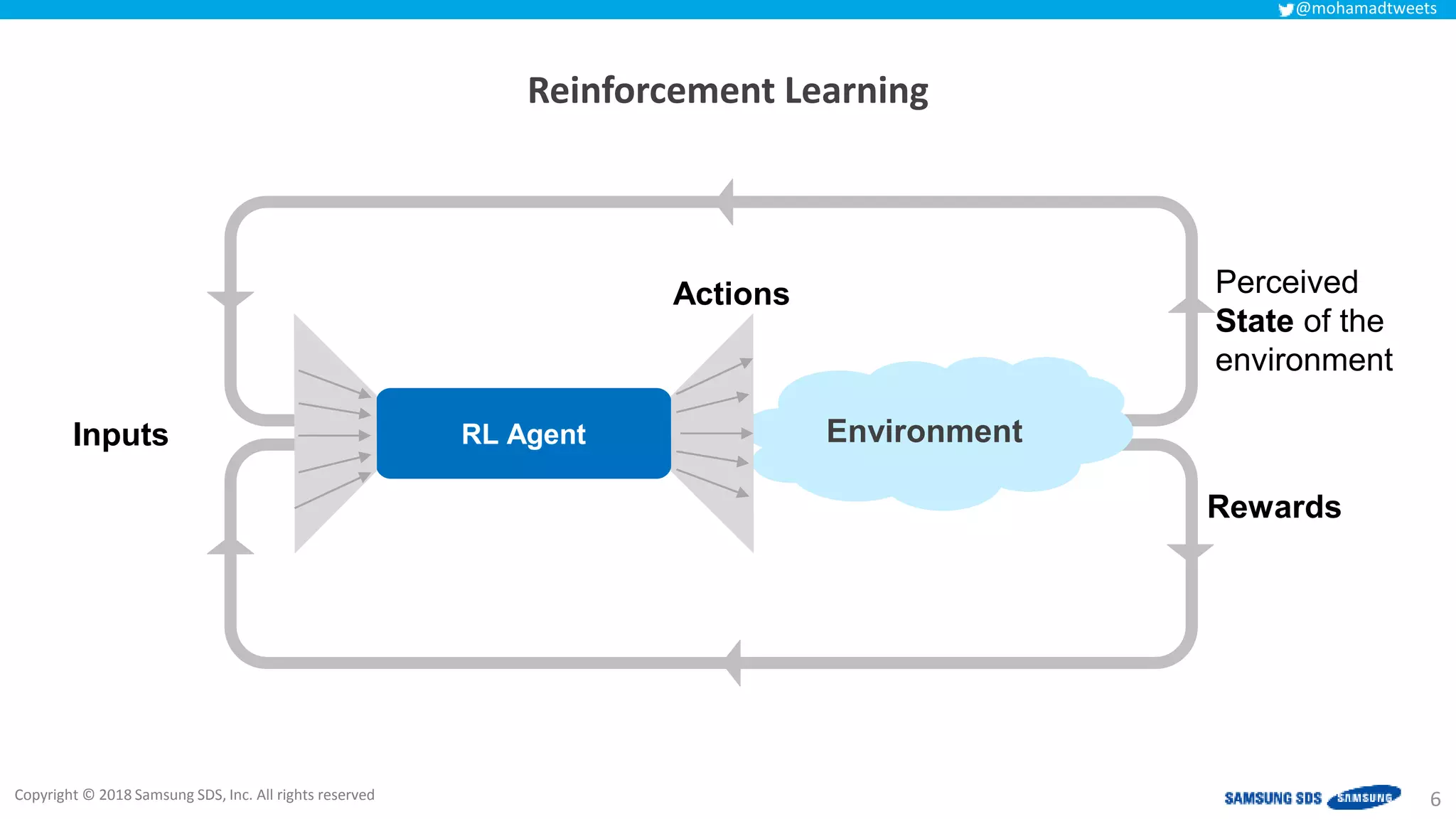

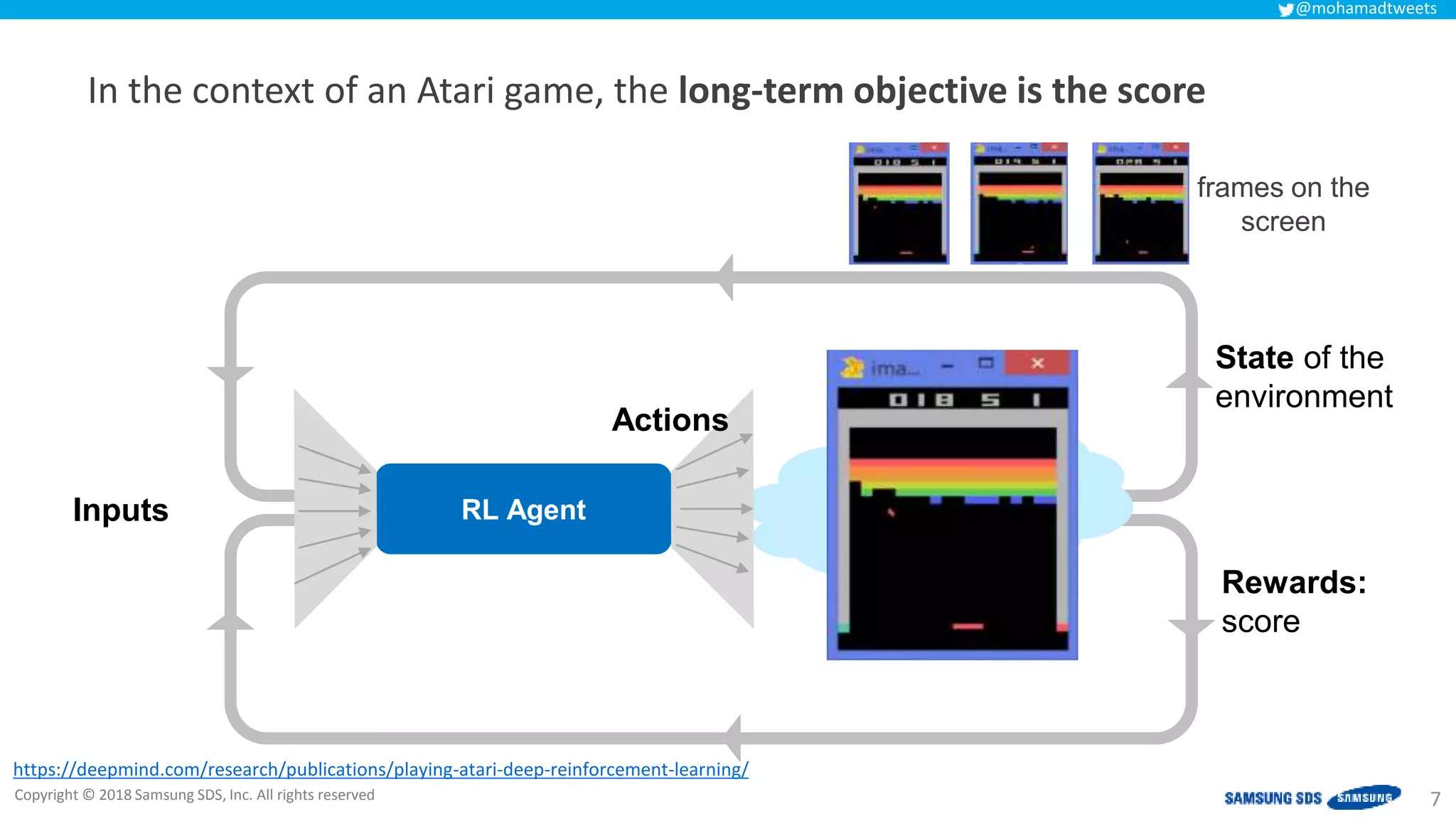

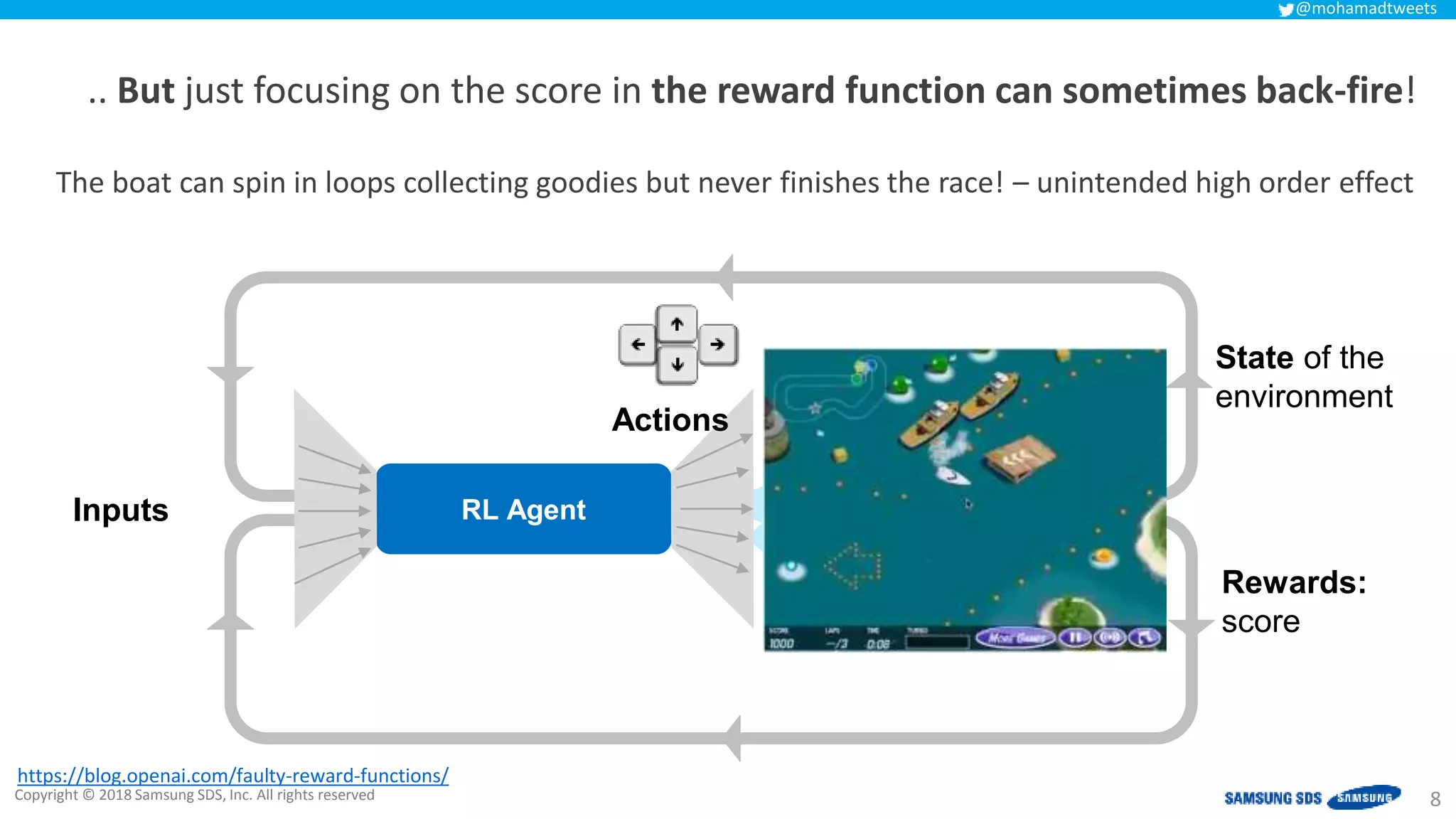

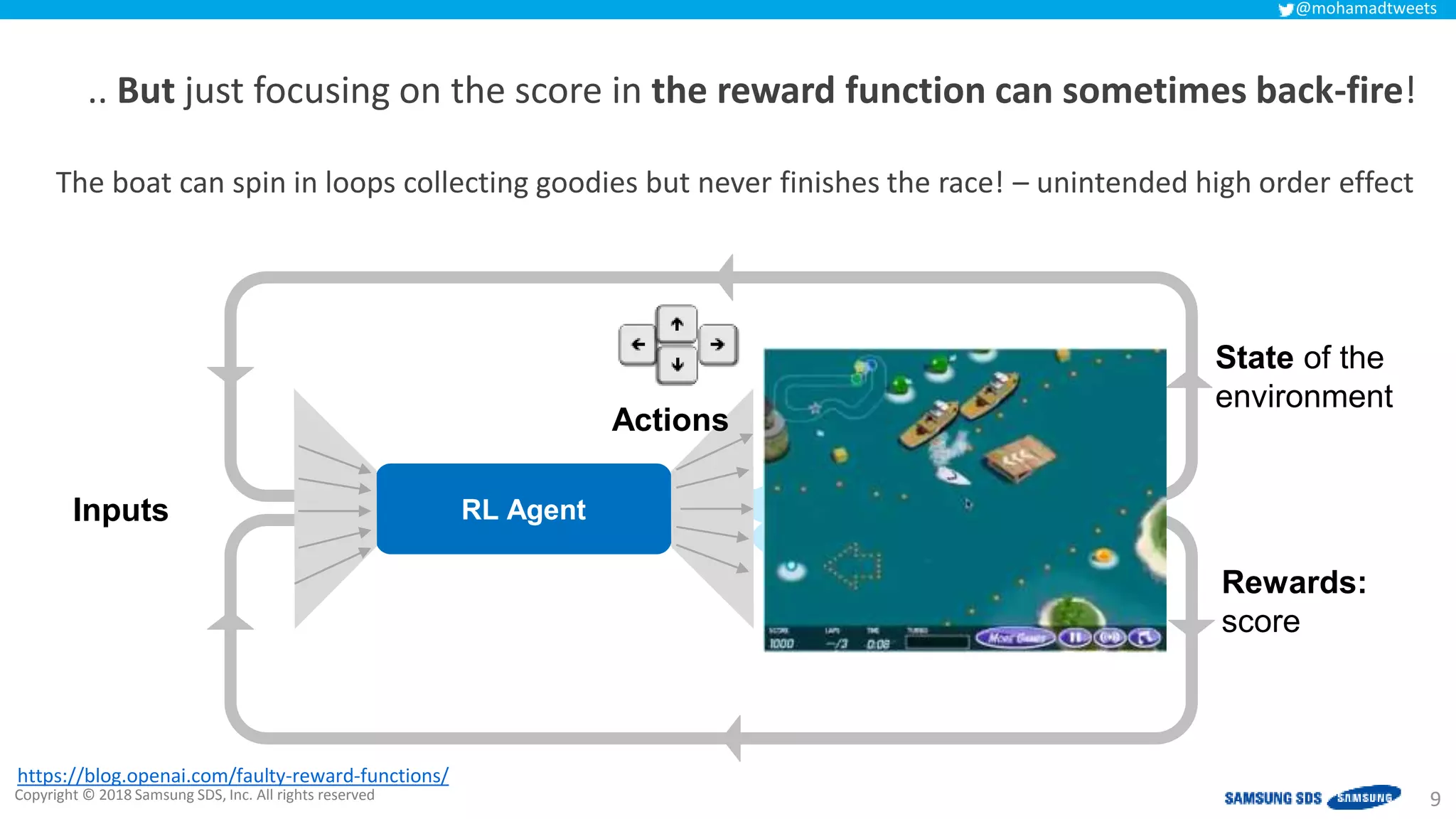

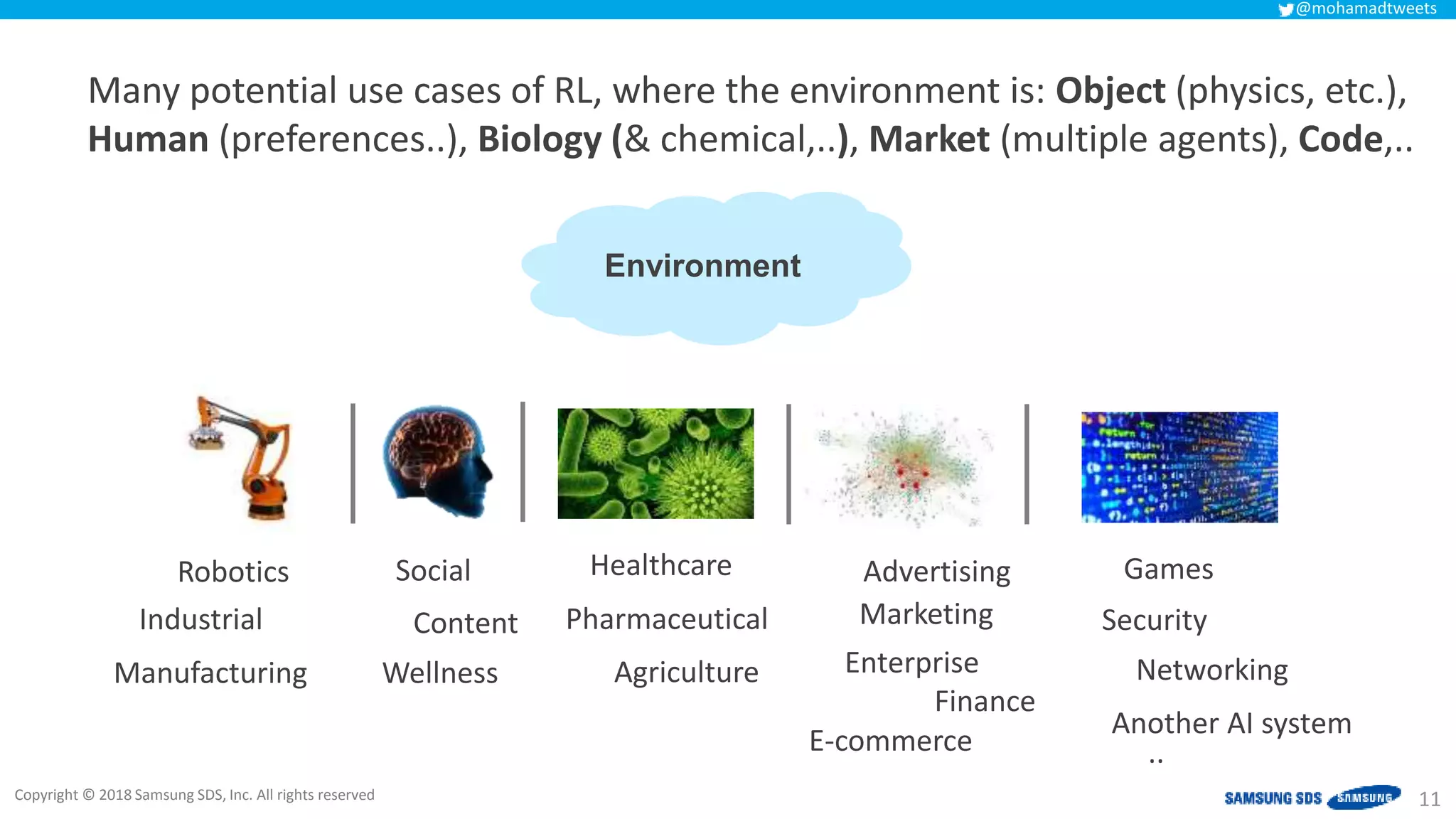

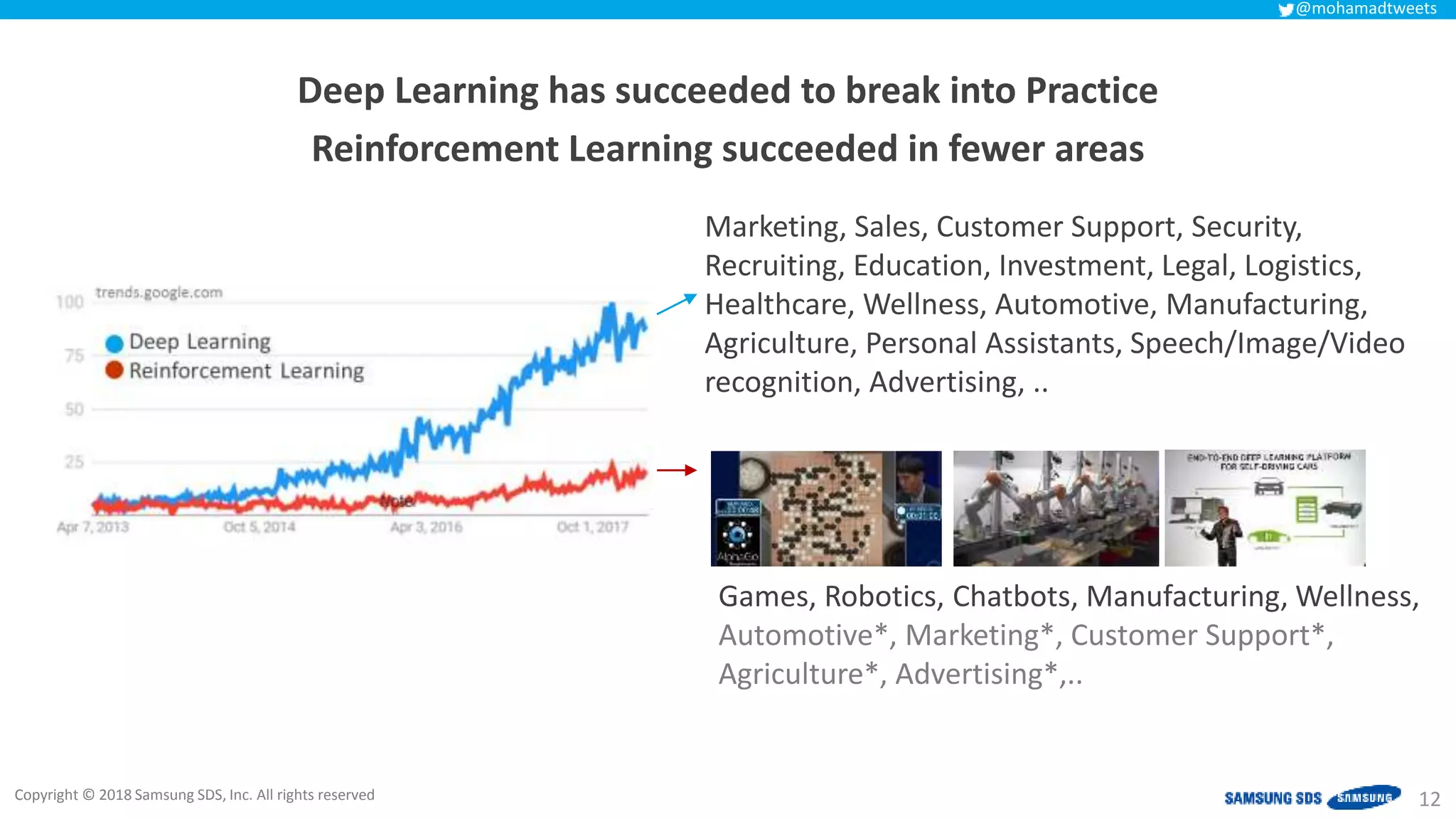

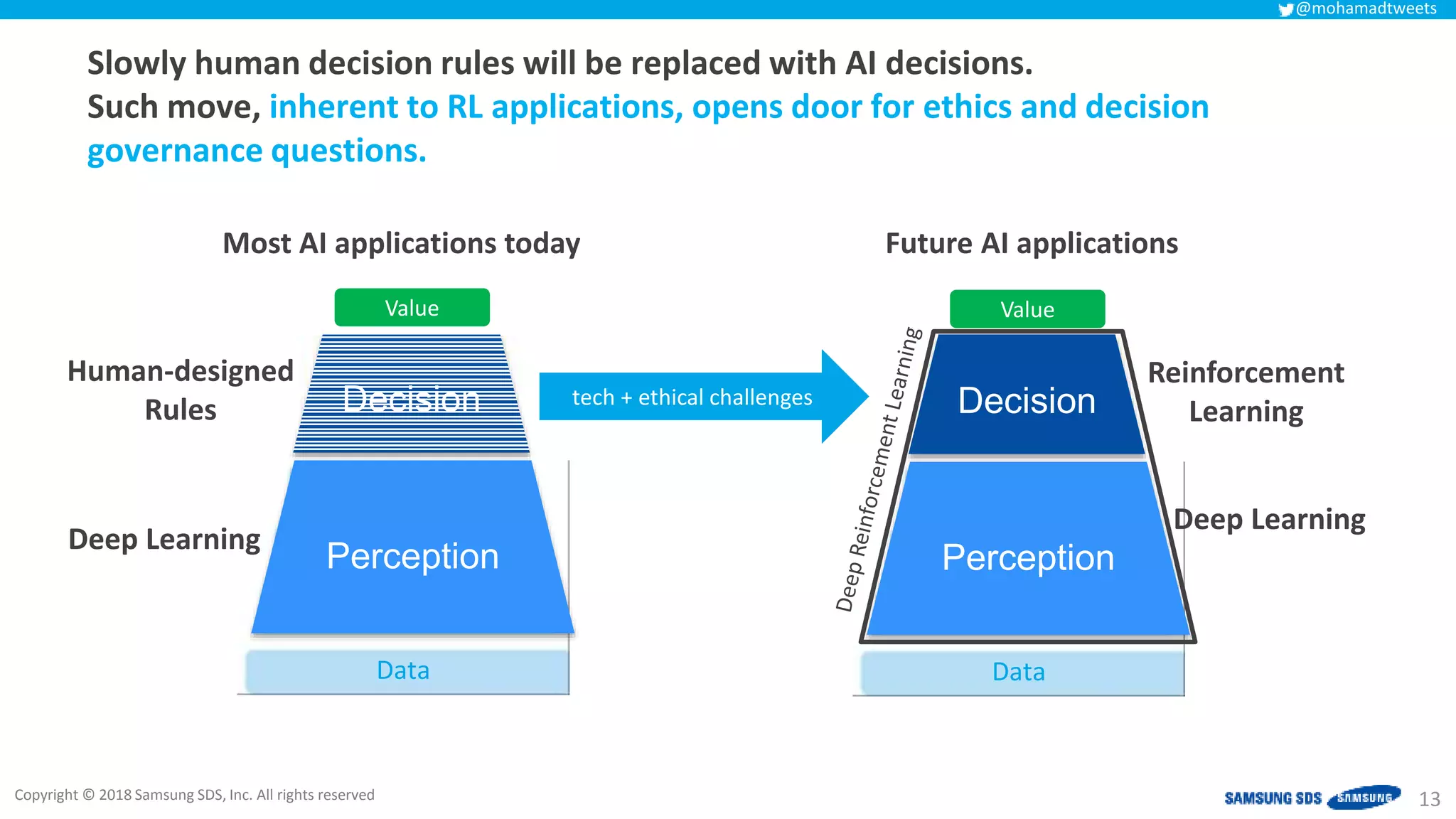

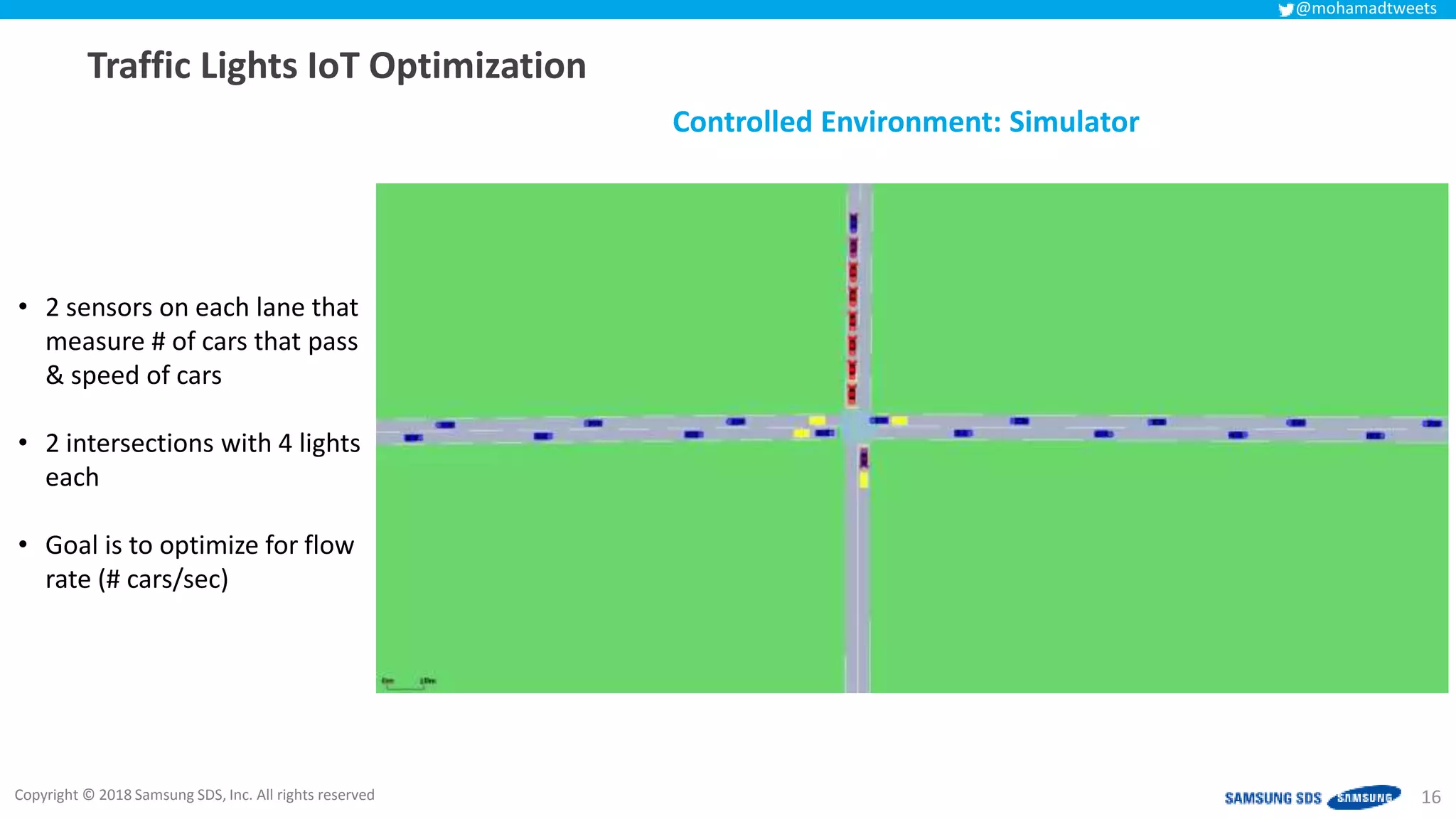

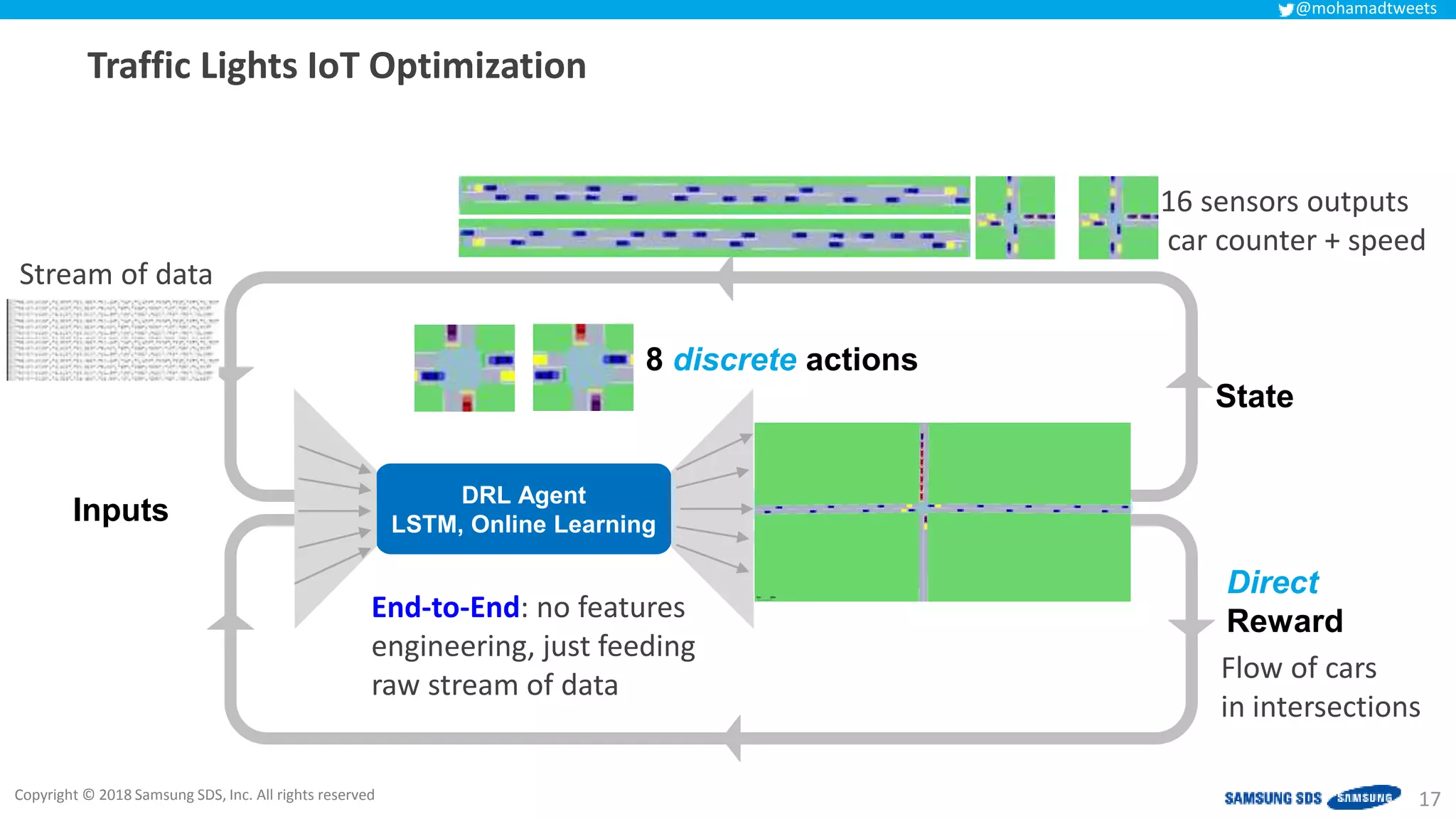

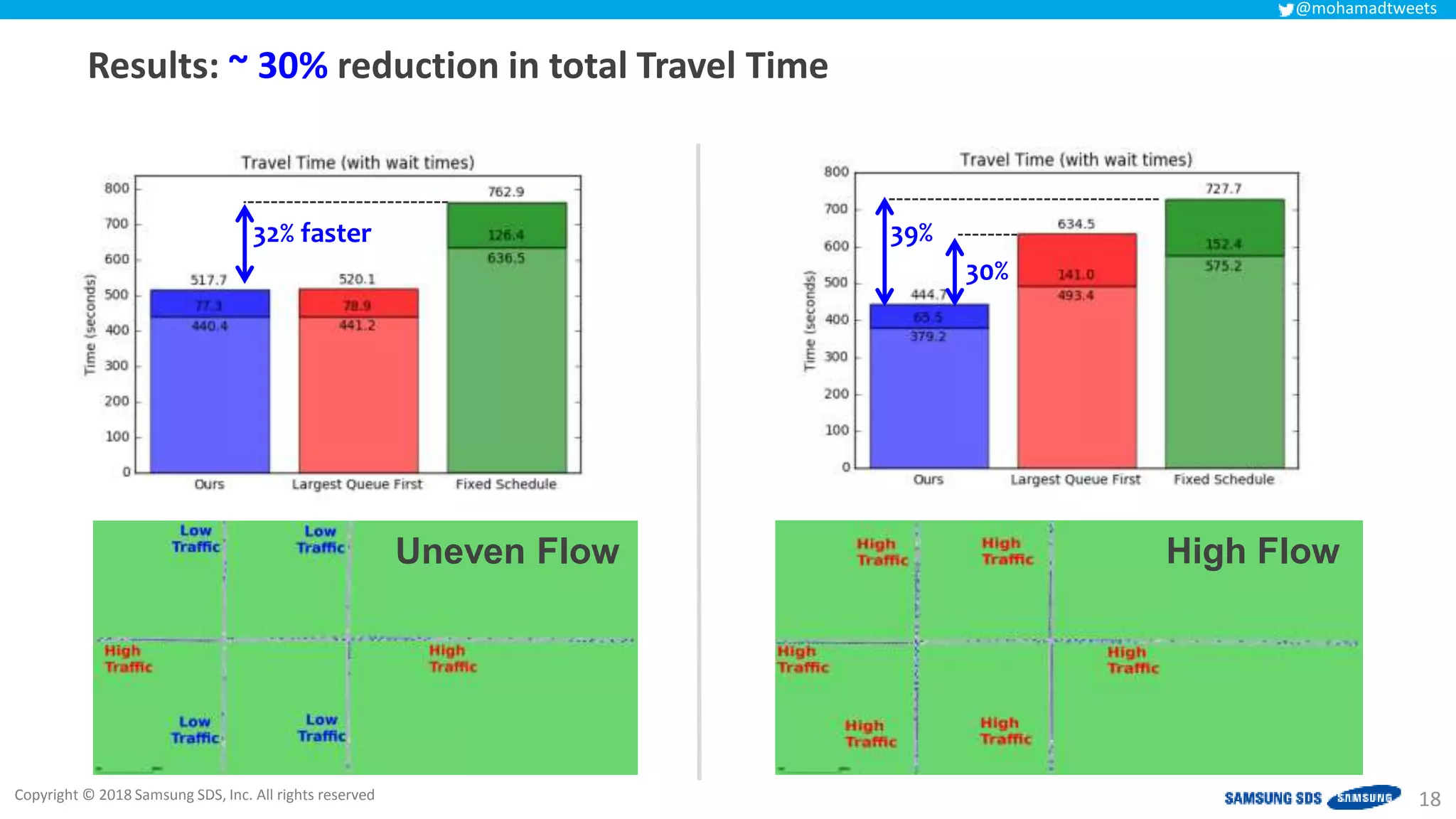

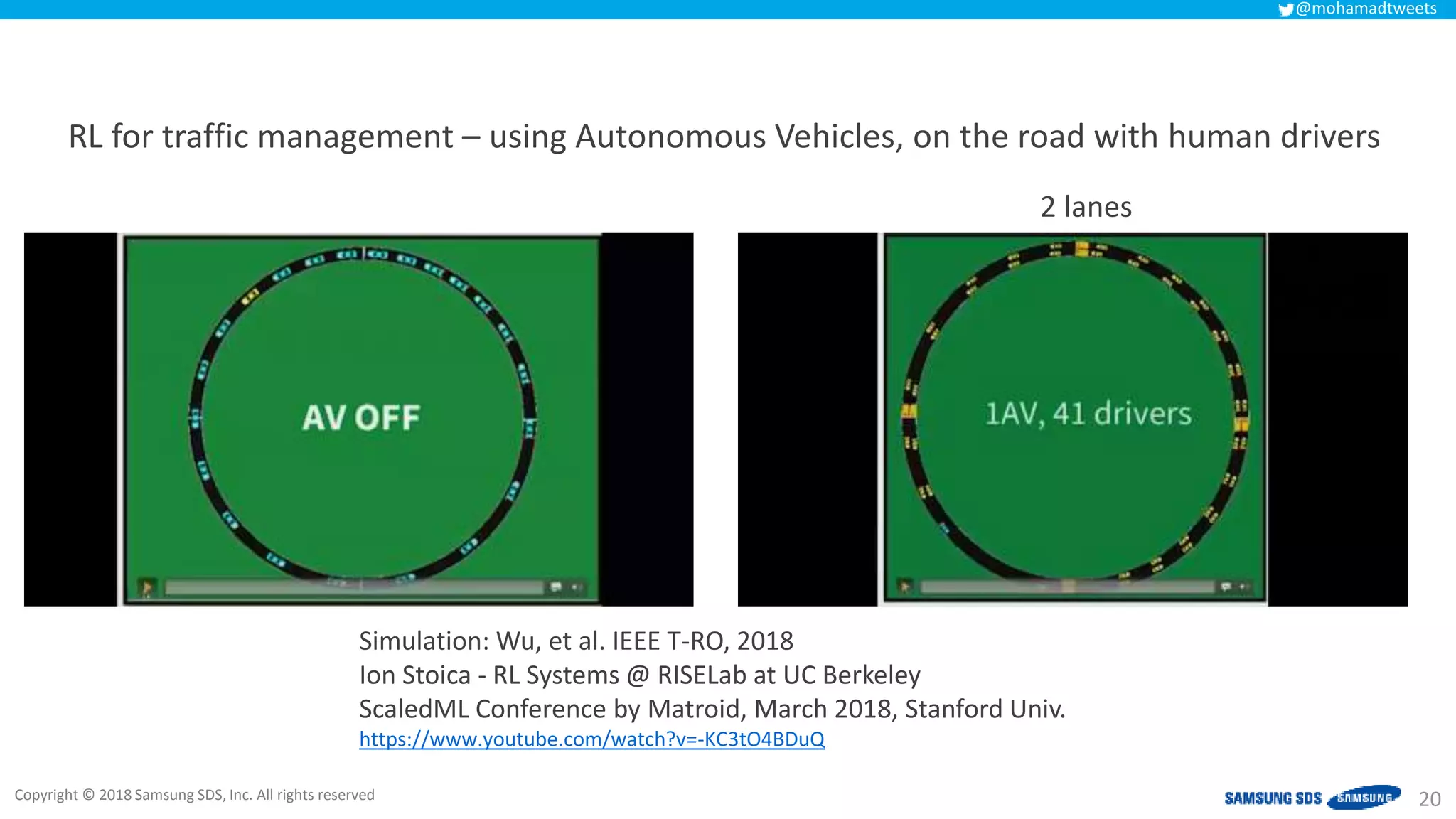

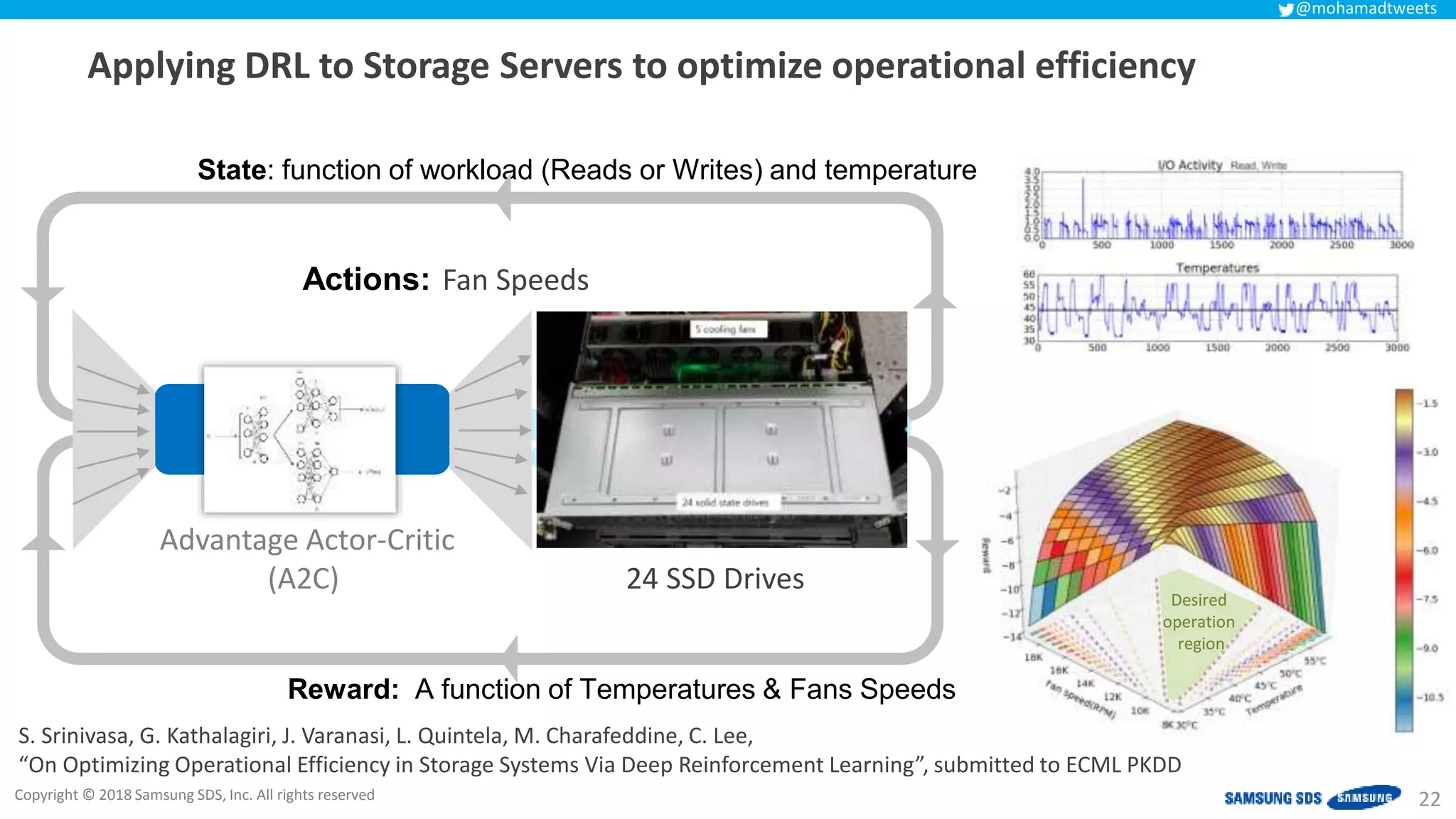

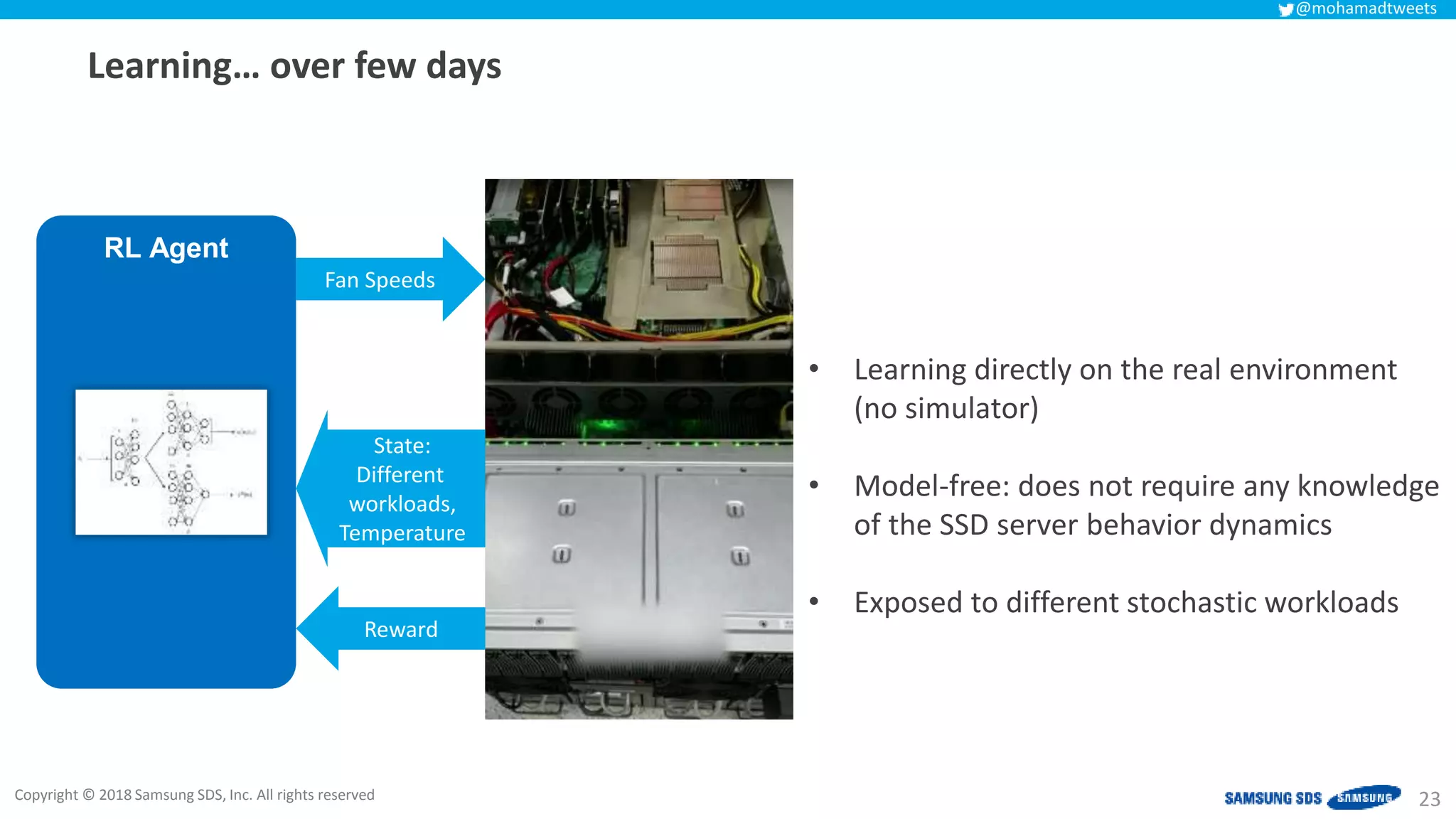

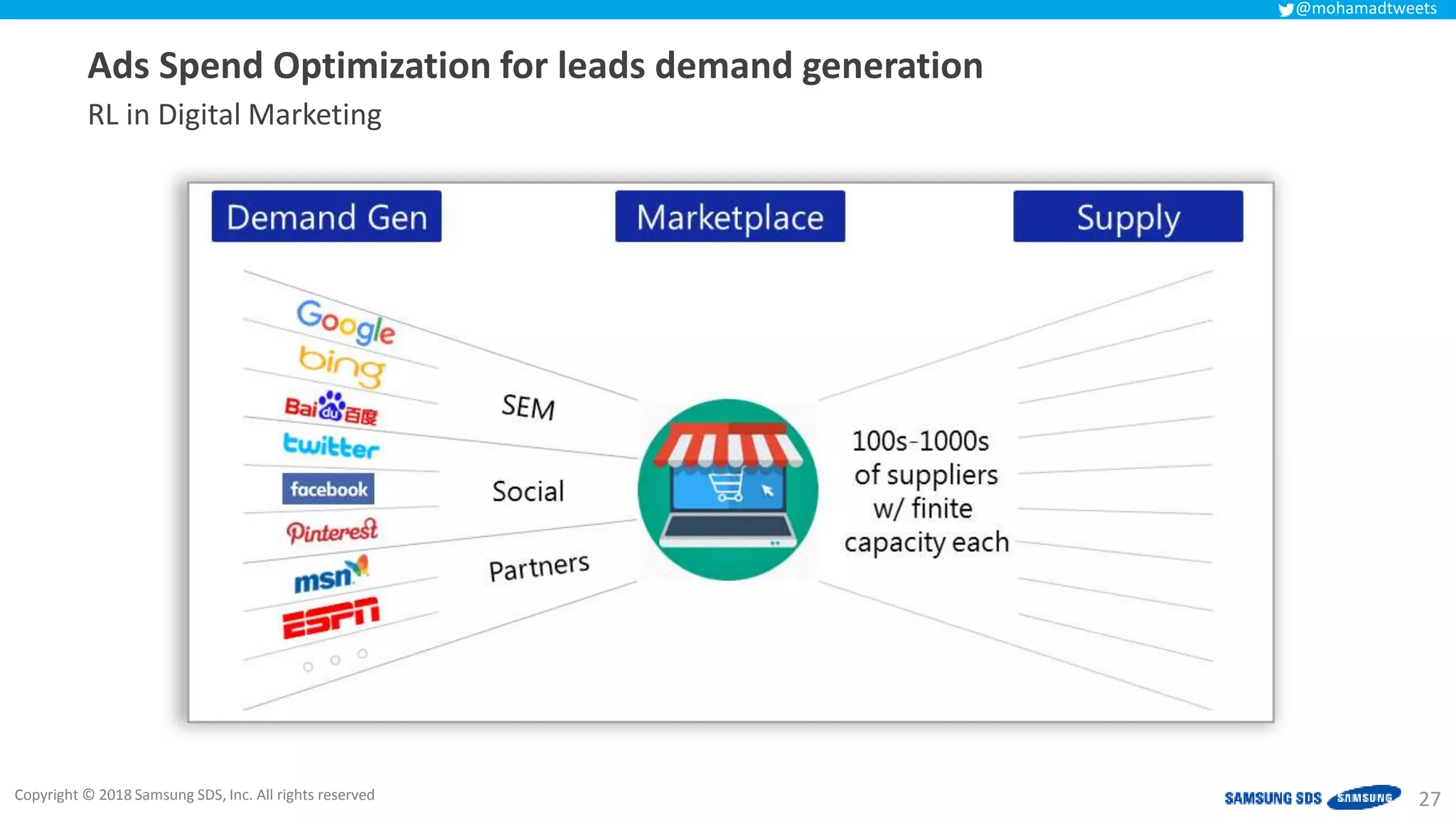

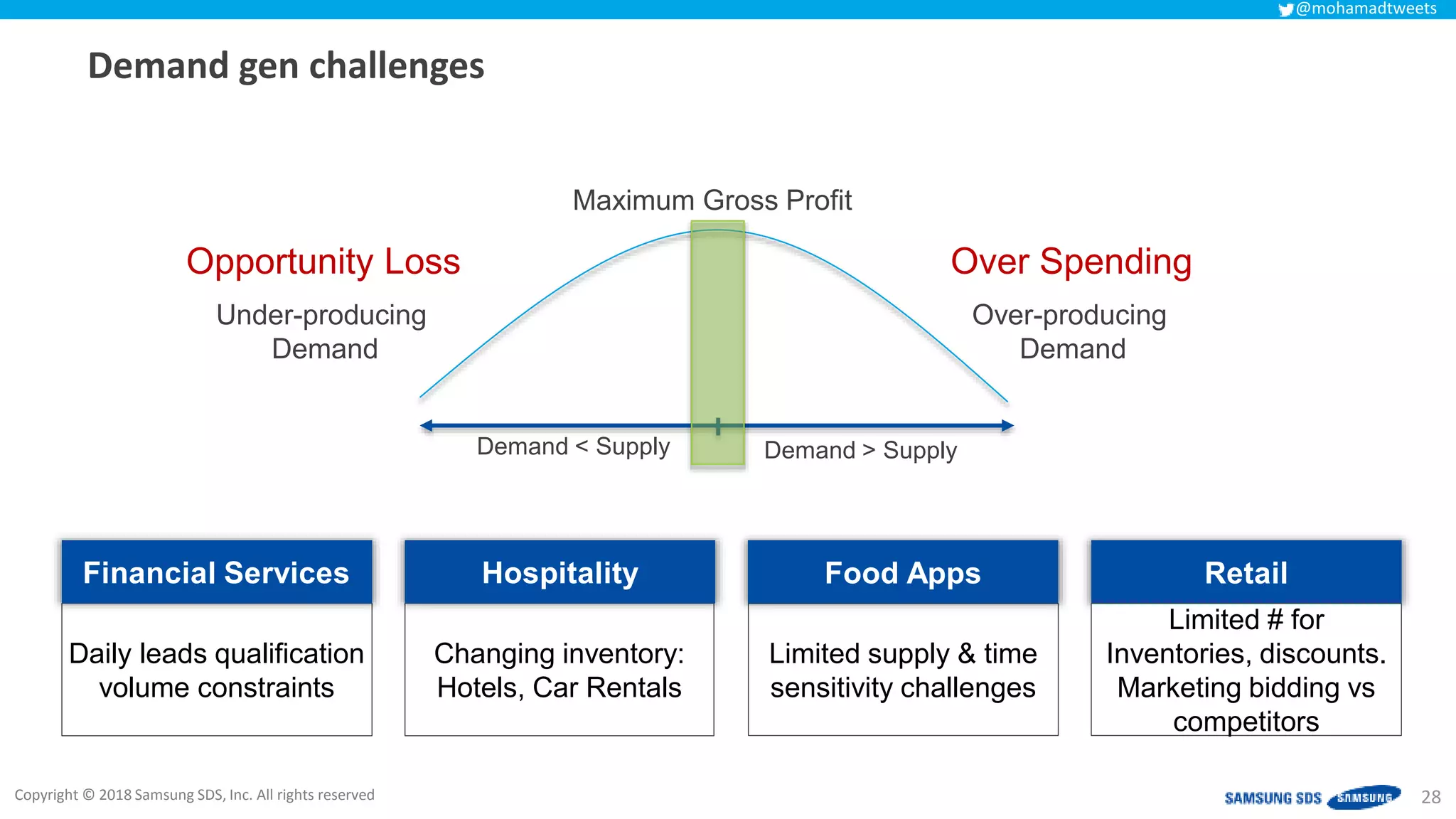

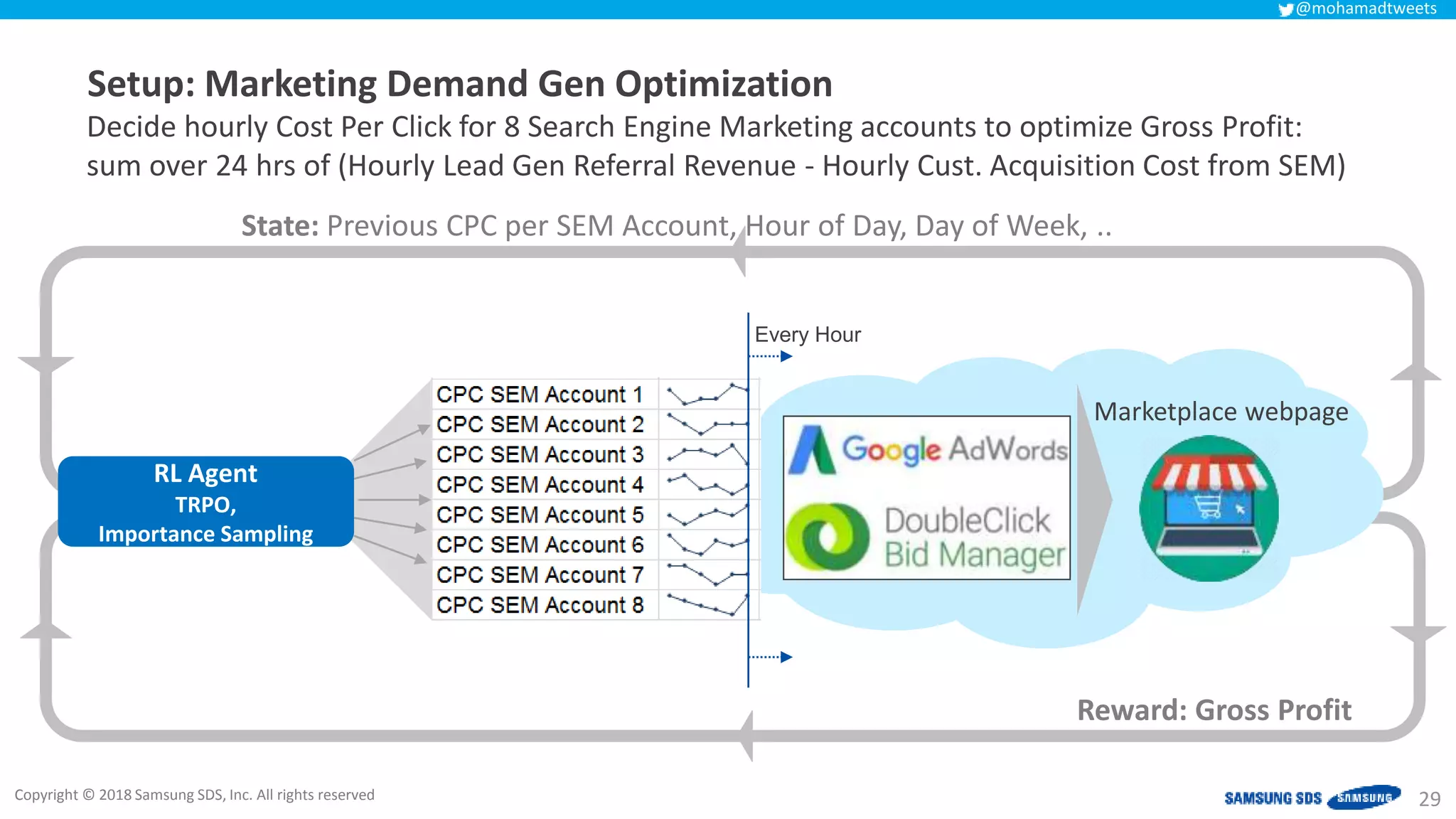

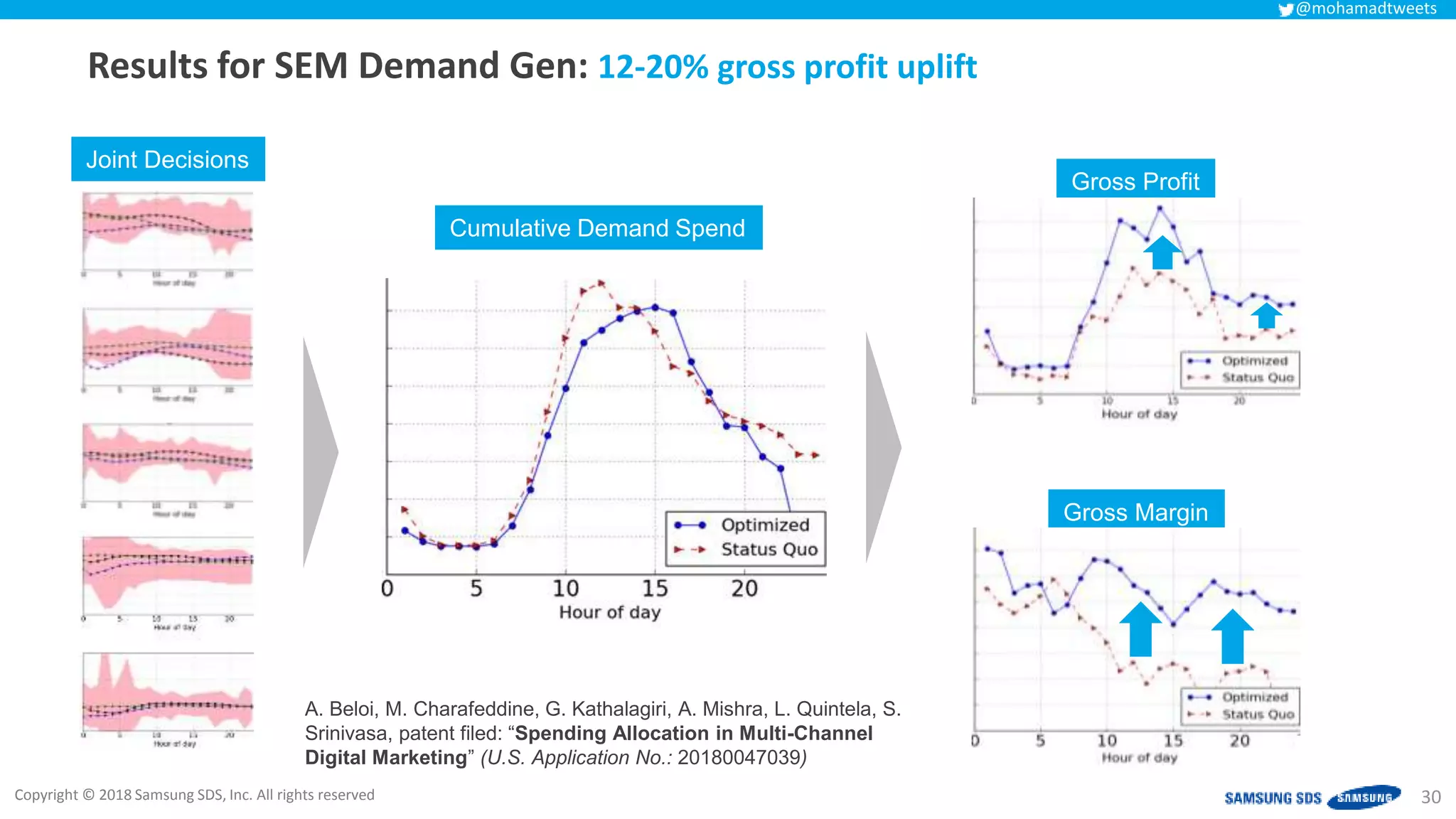

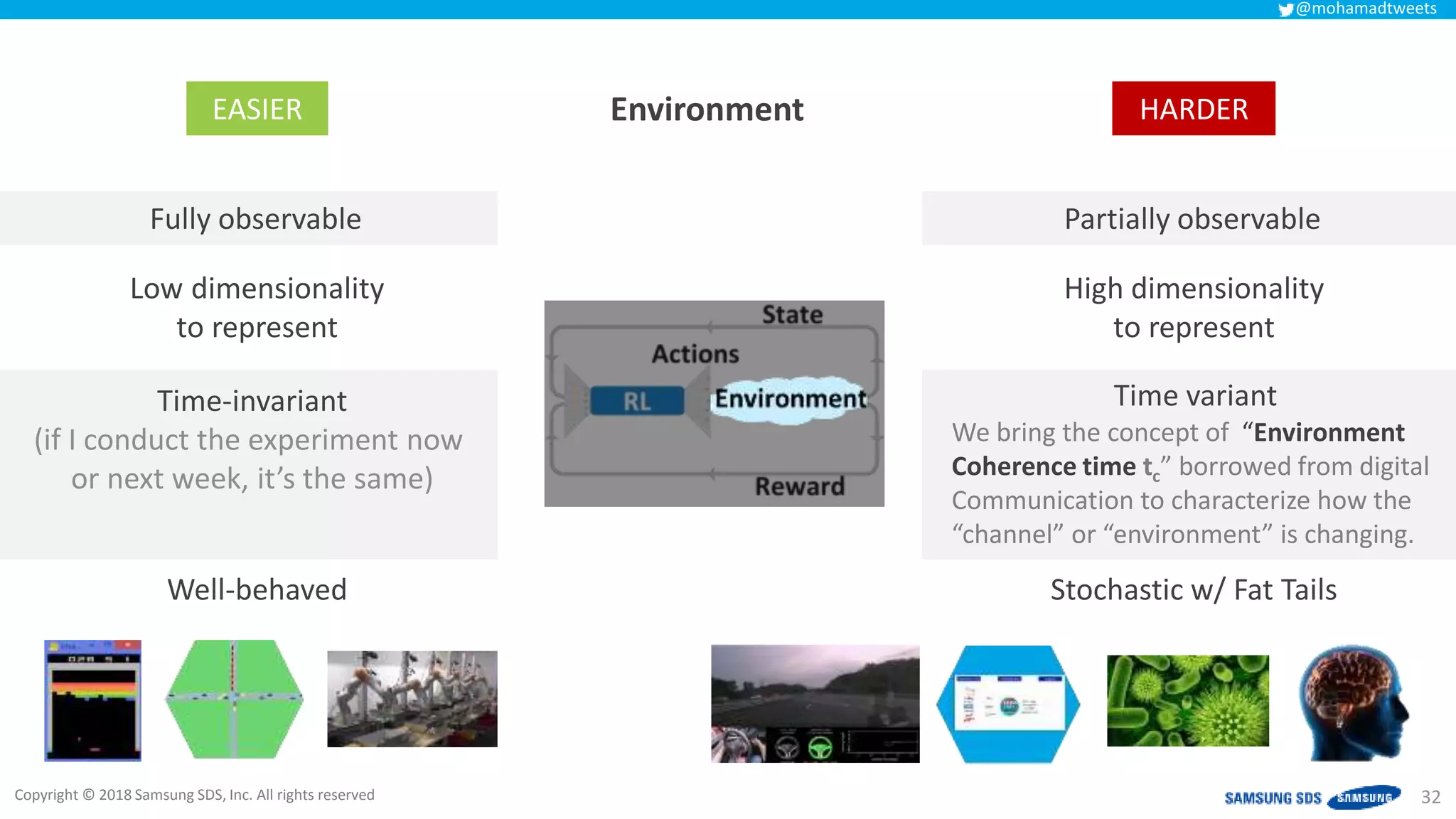

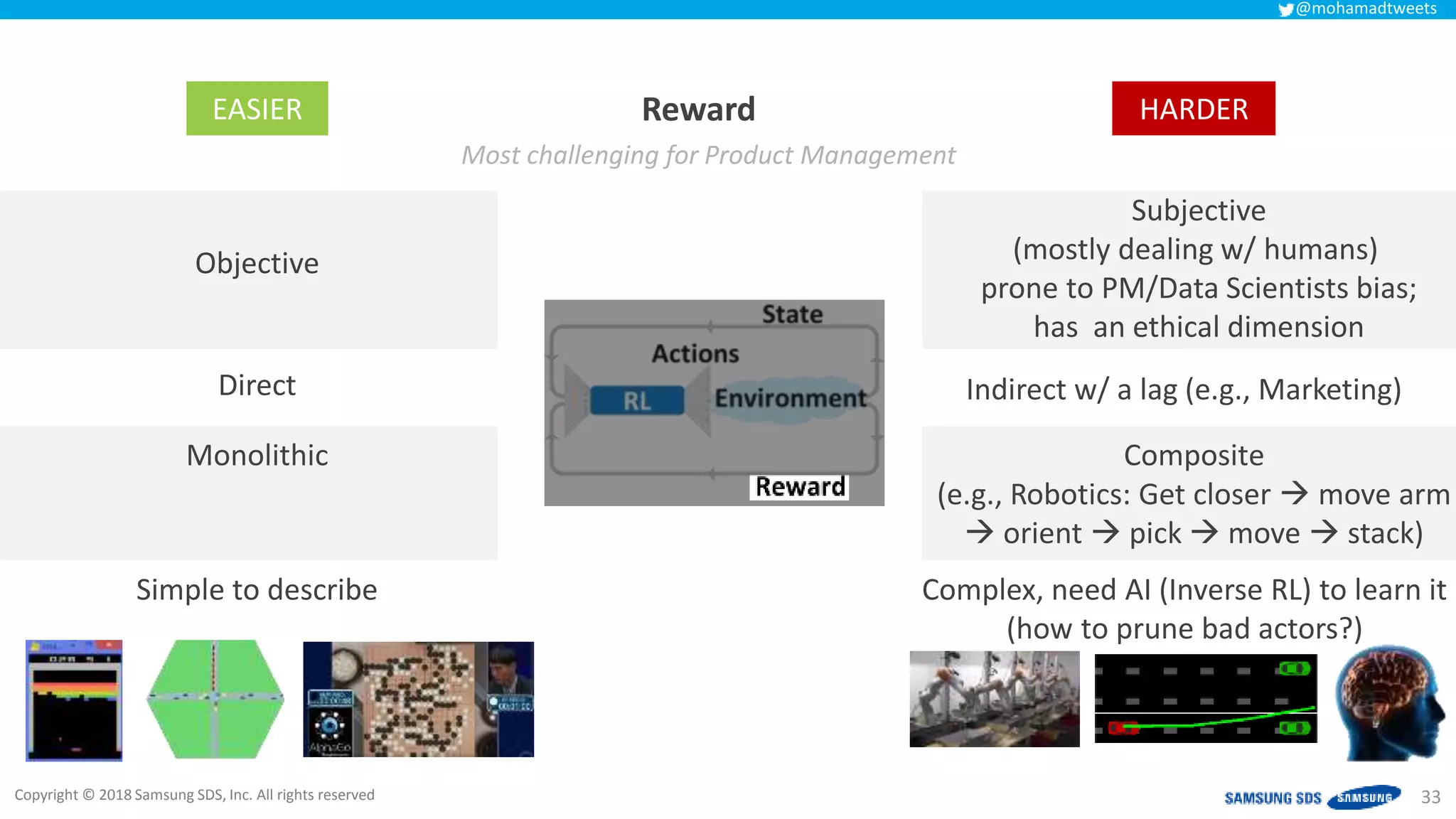

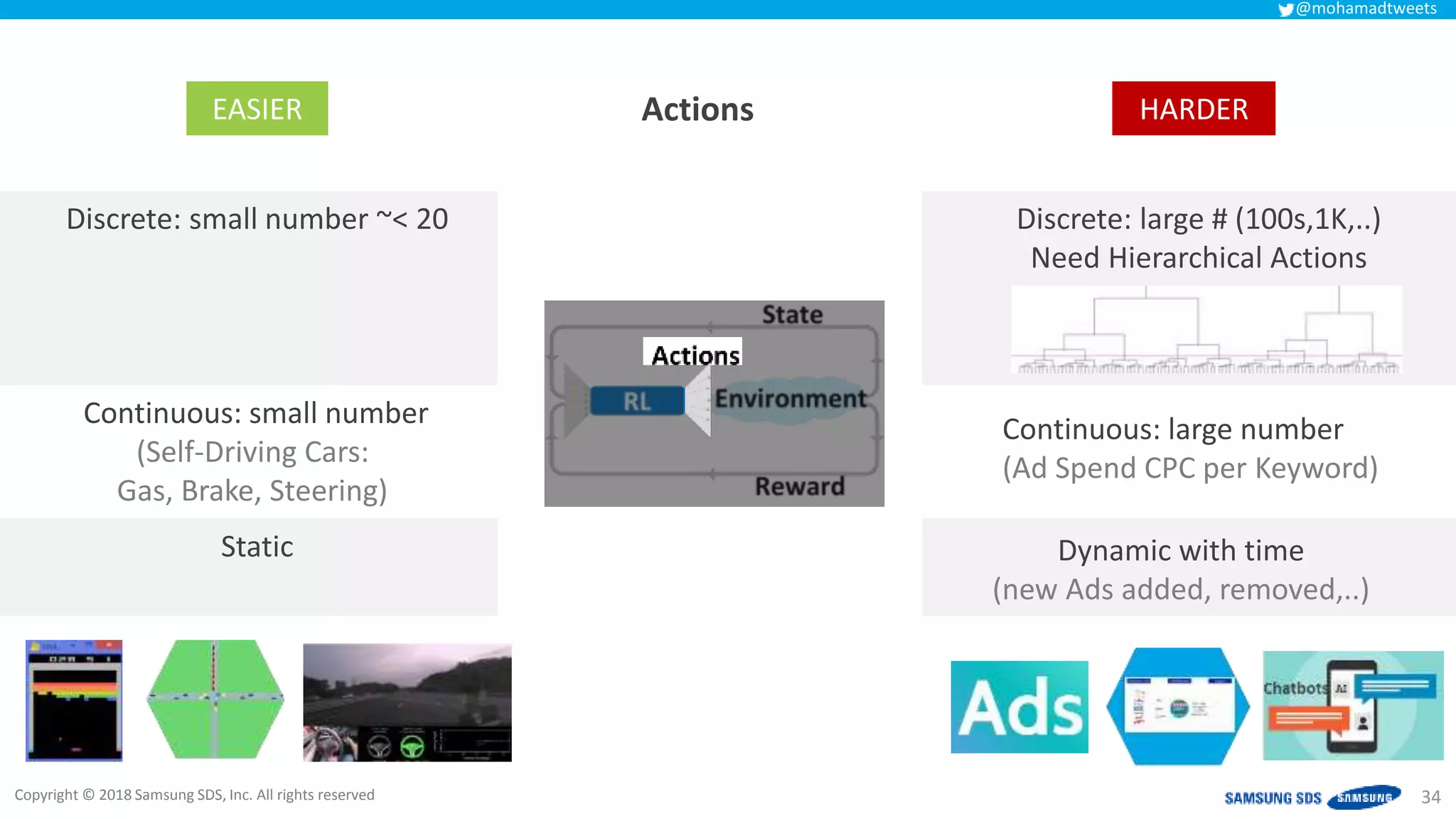

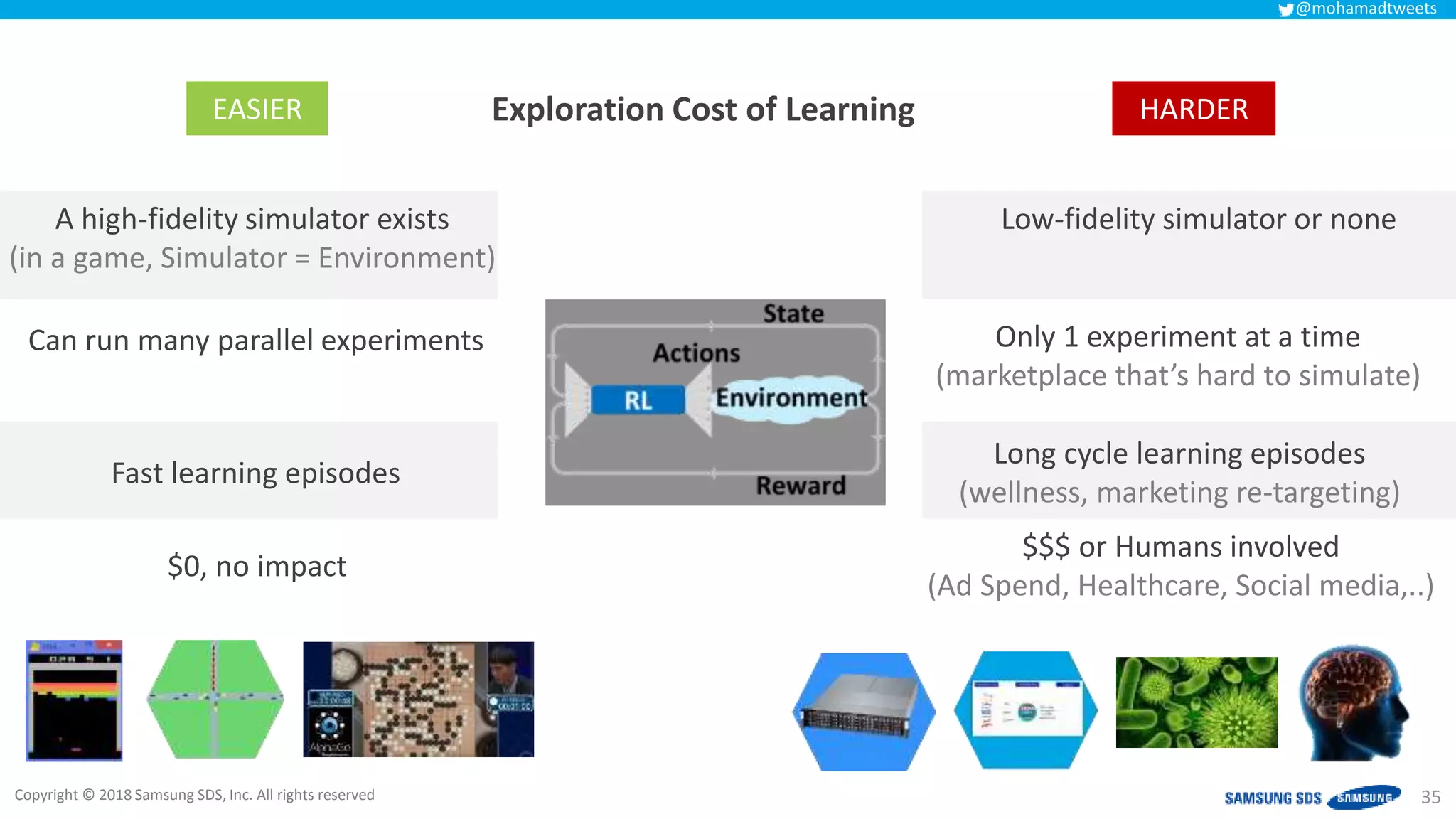

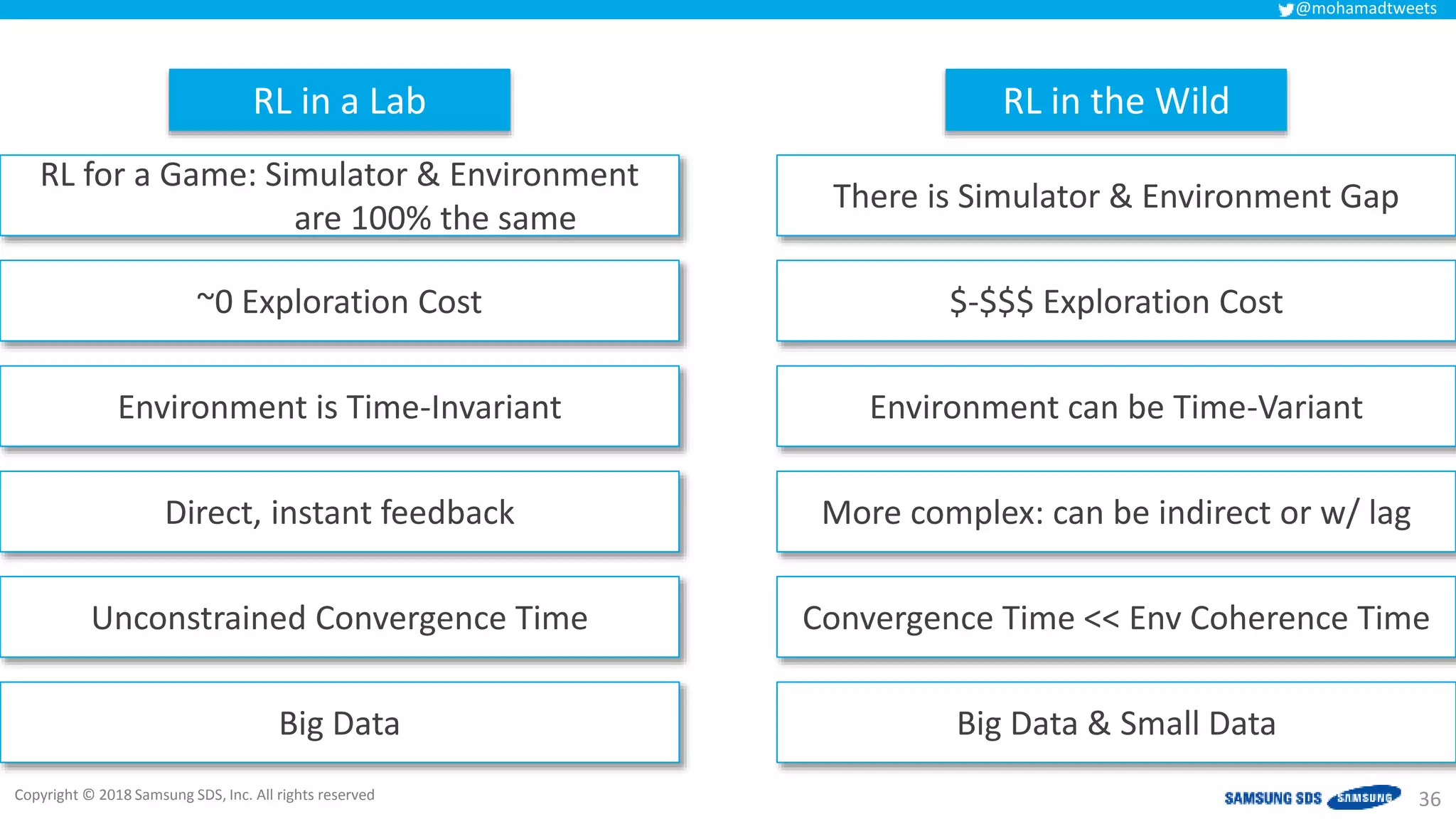

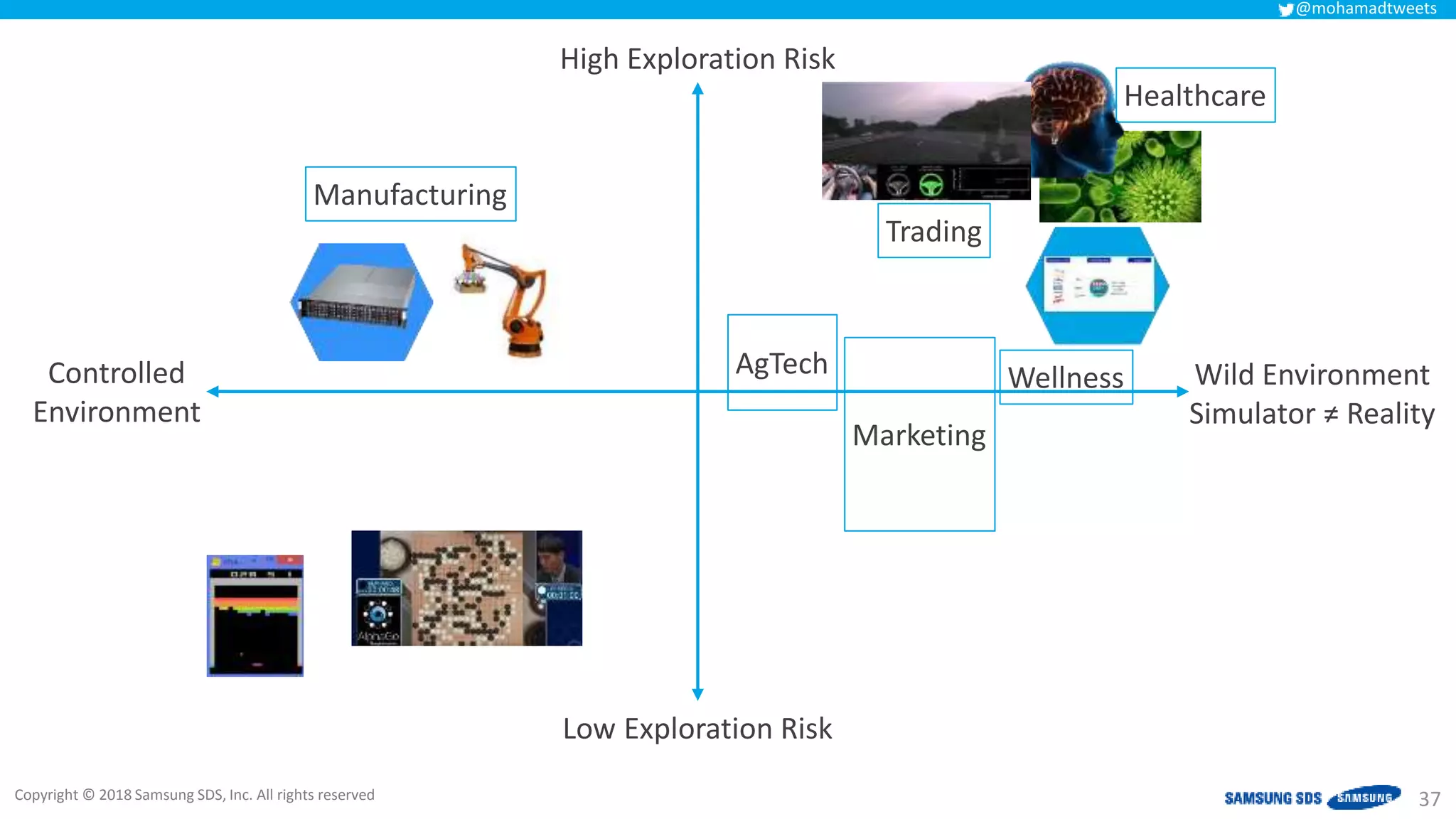

The document discusses applications of reinforcement learning (RL) and examines its potential across fields including marketing, healthcare, and robotics. It emphasizes the importance of evaluating the risks and challenges of RL implementations and illustrates the underlying concepts with practical examples. It also addresses ethical considerations and the impact of RL on decision-making in complex environments.