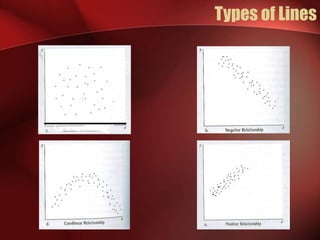

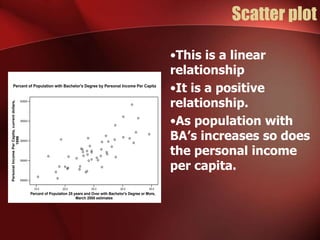

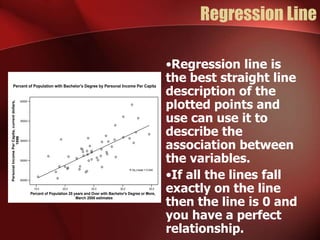

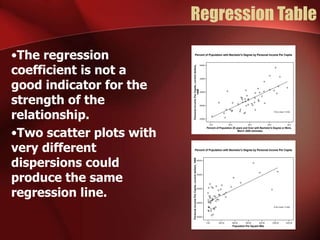

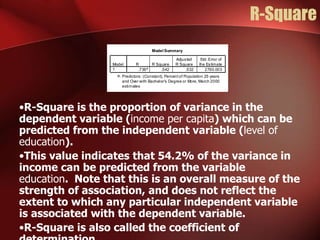

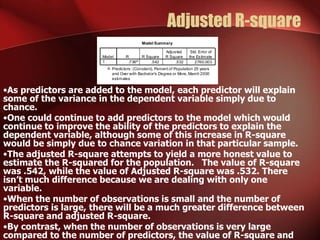

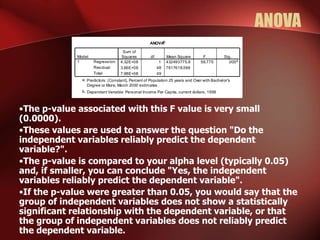

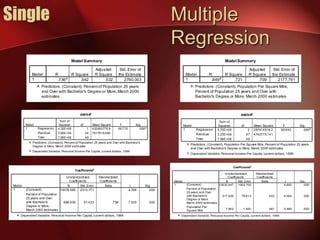

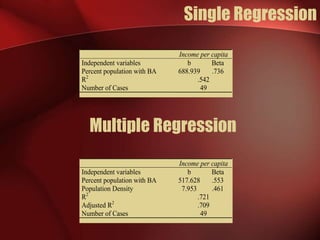

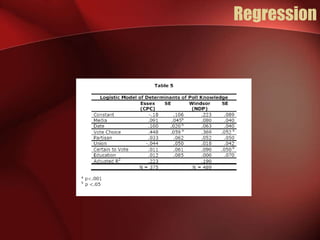

Regression analysis models the relationship between variables. Before fitting a model, create a scatter plot to check whether the relationship looks roughly linear, since linear regression assumes a linear relationship. The regression line is the line that best describes that relationship, typically fitted by least squares, and the R-squared value is the proportion of variance in the dependent variable that the line explains. Multiple regression extends this to one dependent variable and several independent variables, and estimates how much each independent variable contributes to explaining the variance in the dependent variable.
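
The fitting steps summarized here can be sketched in a few lines of Python. This is a minimal illustration using NumPy and ordinary least squares, not the method from the slides. The function names (`fit_line`, `r_squared`, `fit_multiple`) and the sample data are made up for the example, and the scatter-plot check is left out because it needs a plotting library.

```python
import numpy as np


def fit_line(x, y):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # A degree-1 polynomial fit returns the coefficients as [slope, intercept].
    slope, intercept = np.polyfit(x, y, 1)
    return float(slope), float(intercept)


def r_squared(y, y_pred):
    """Proportion of the variance in y explained by the predictions."""
    y = np.asarray(y, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y - y_pred) ** 2)    # unexplained (residual) variation
    ss_tot = np.sum((y - y.mean()) ** 2)  # total variation around the mean
    return float(1.0 - ss_res / ss_tot)


def fit_multiple(X, y):
    """Multiple regression: returns [intercept, b1, b2, ...]."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept.
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


# Hypothetical sample data, invented purely for illustration.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(x, y)
y_pred = slope * np.asarray(x, dtype=float) + intercept
print("slope:", slope, "intercept:", intercept)
print("R-squared:", r_squared(y, y_pred))
```

In `fit_multiple`, each coefficient after the intercept estimates the change in the dependent variable for a one-unit change in that independent variable, holding the others fixed. That is one common way to read the individual contributions, although coefficients on differently scaled variables are not directly comparable.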