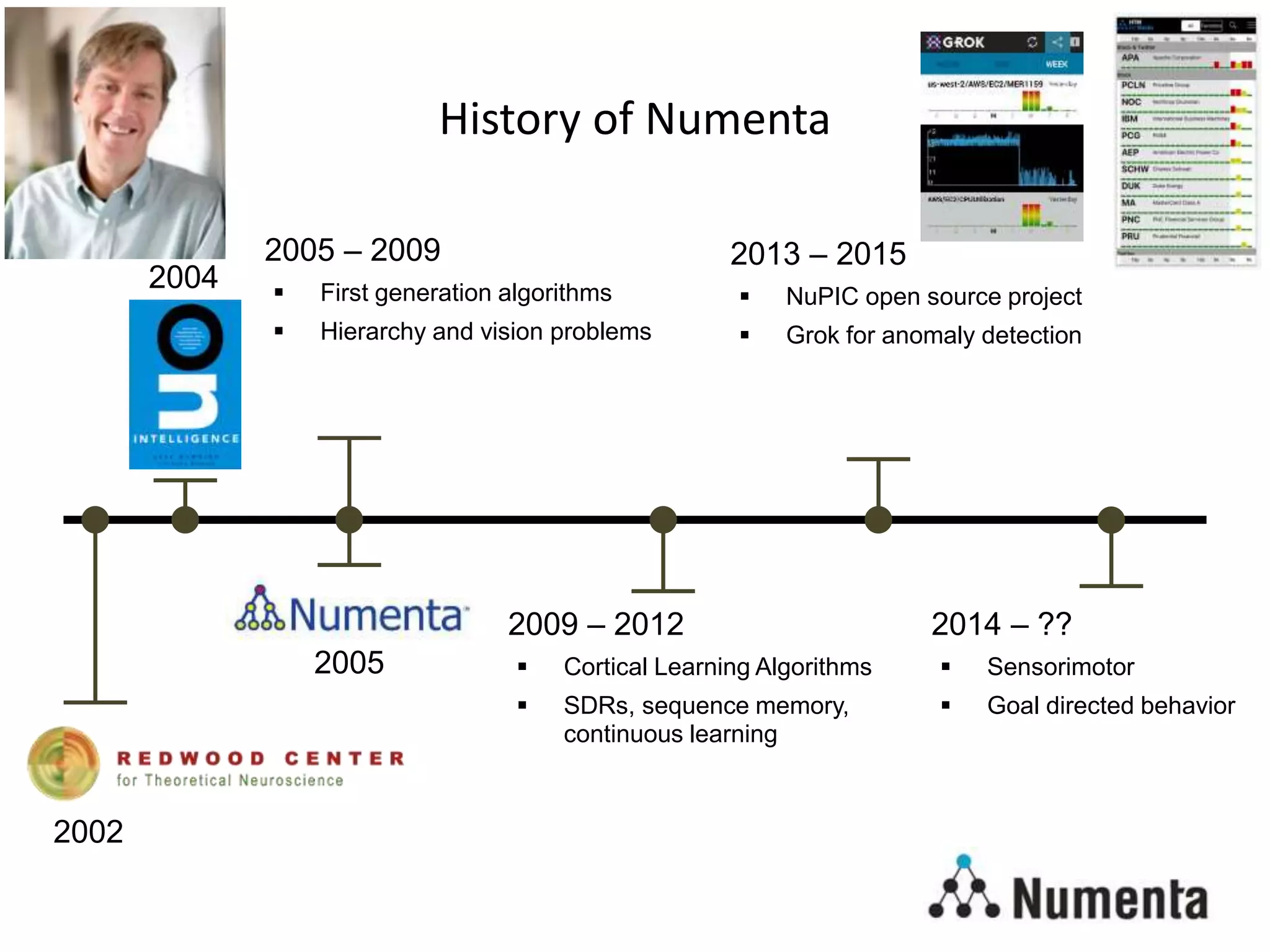

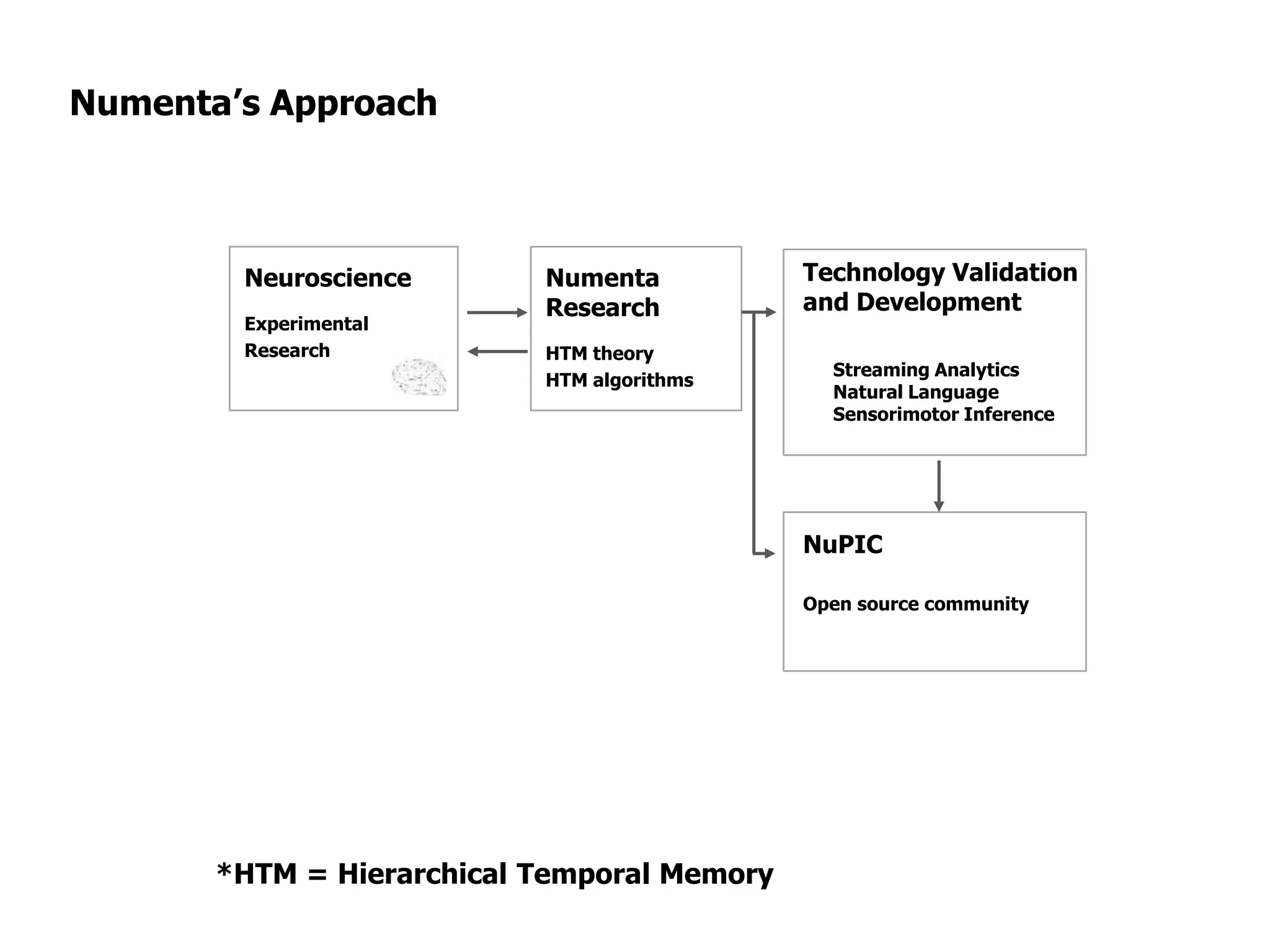

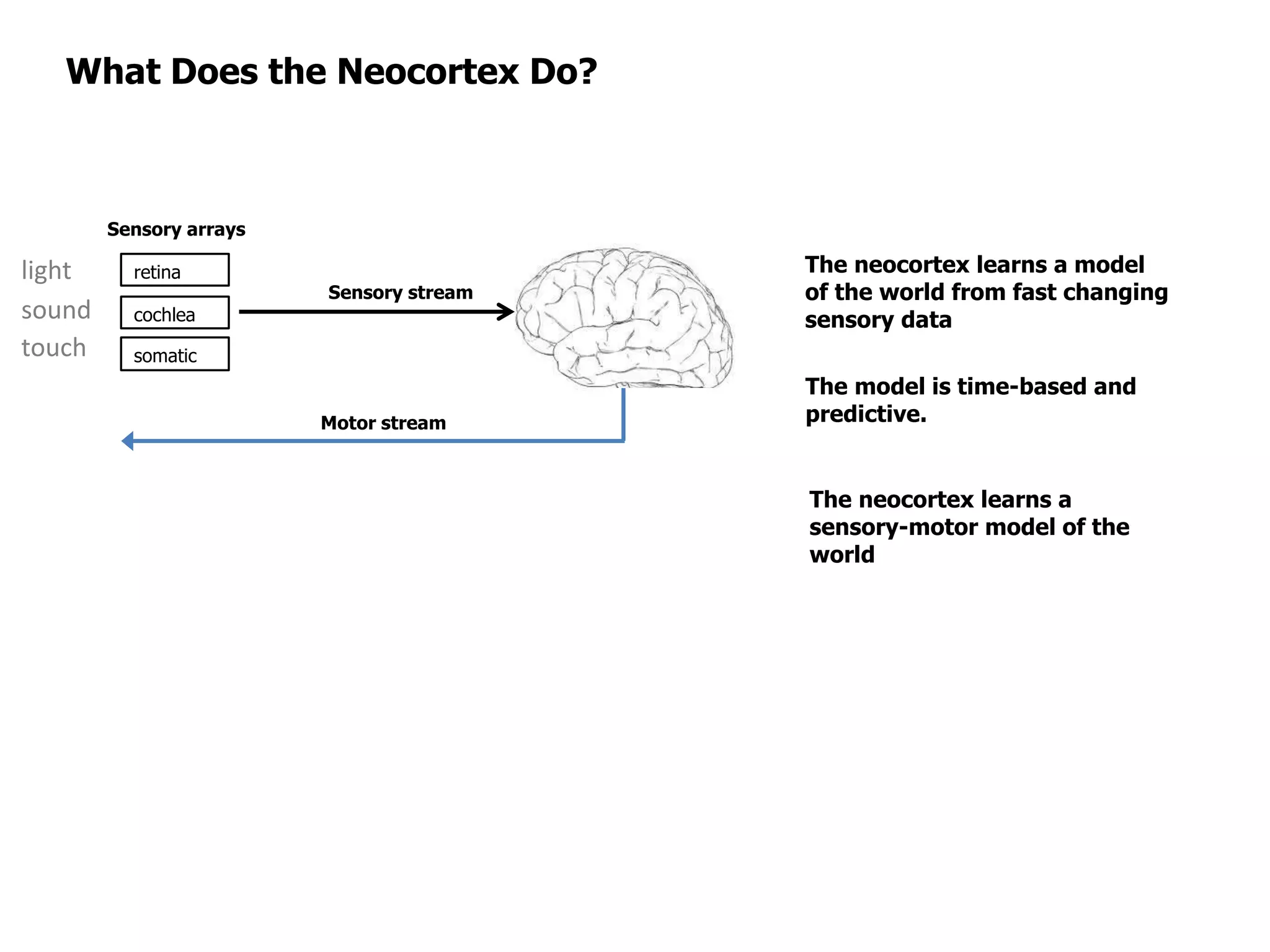

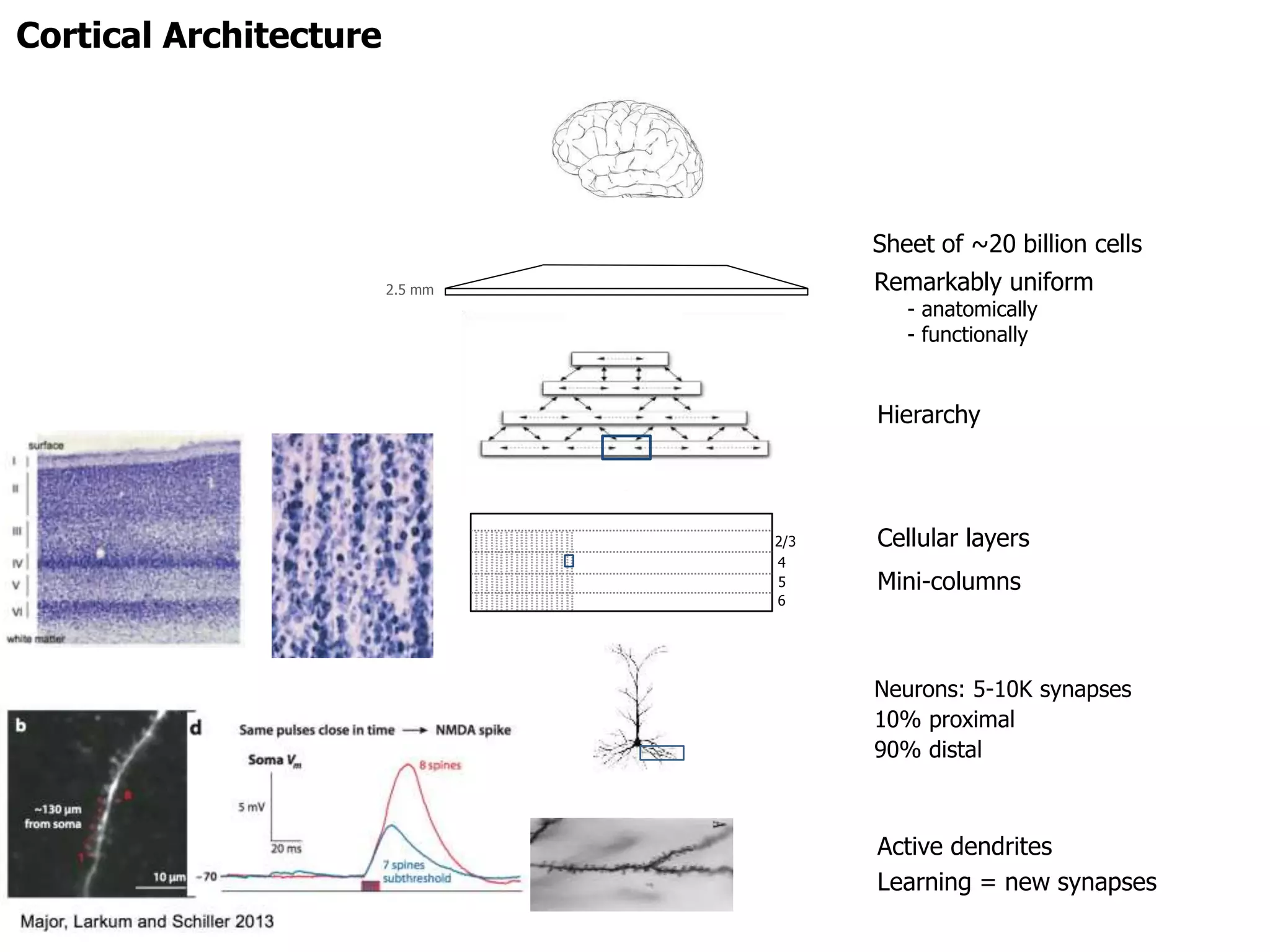

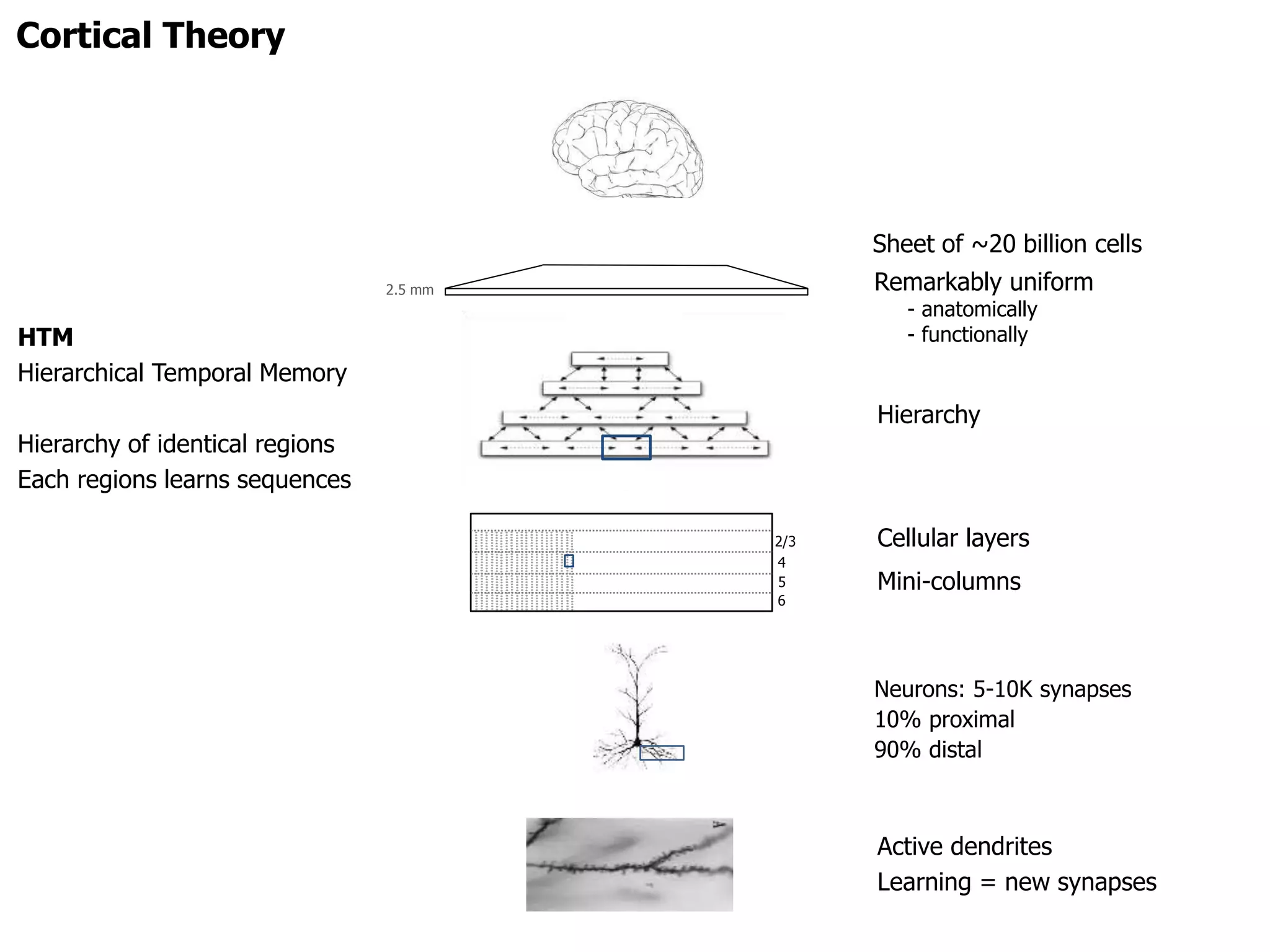

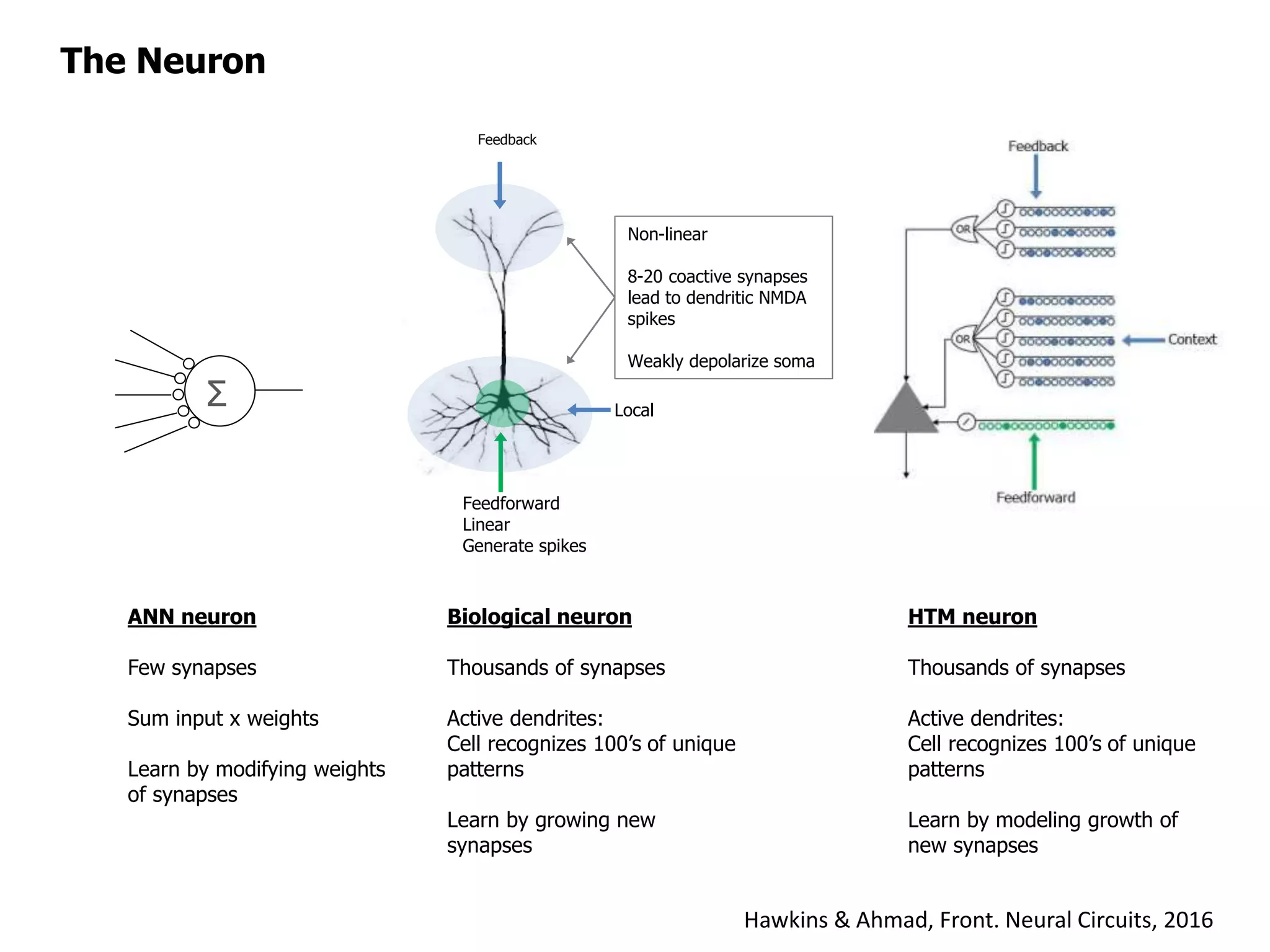

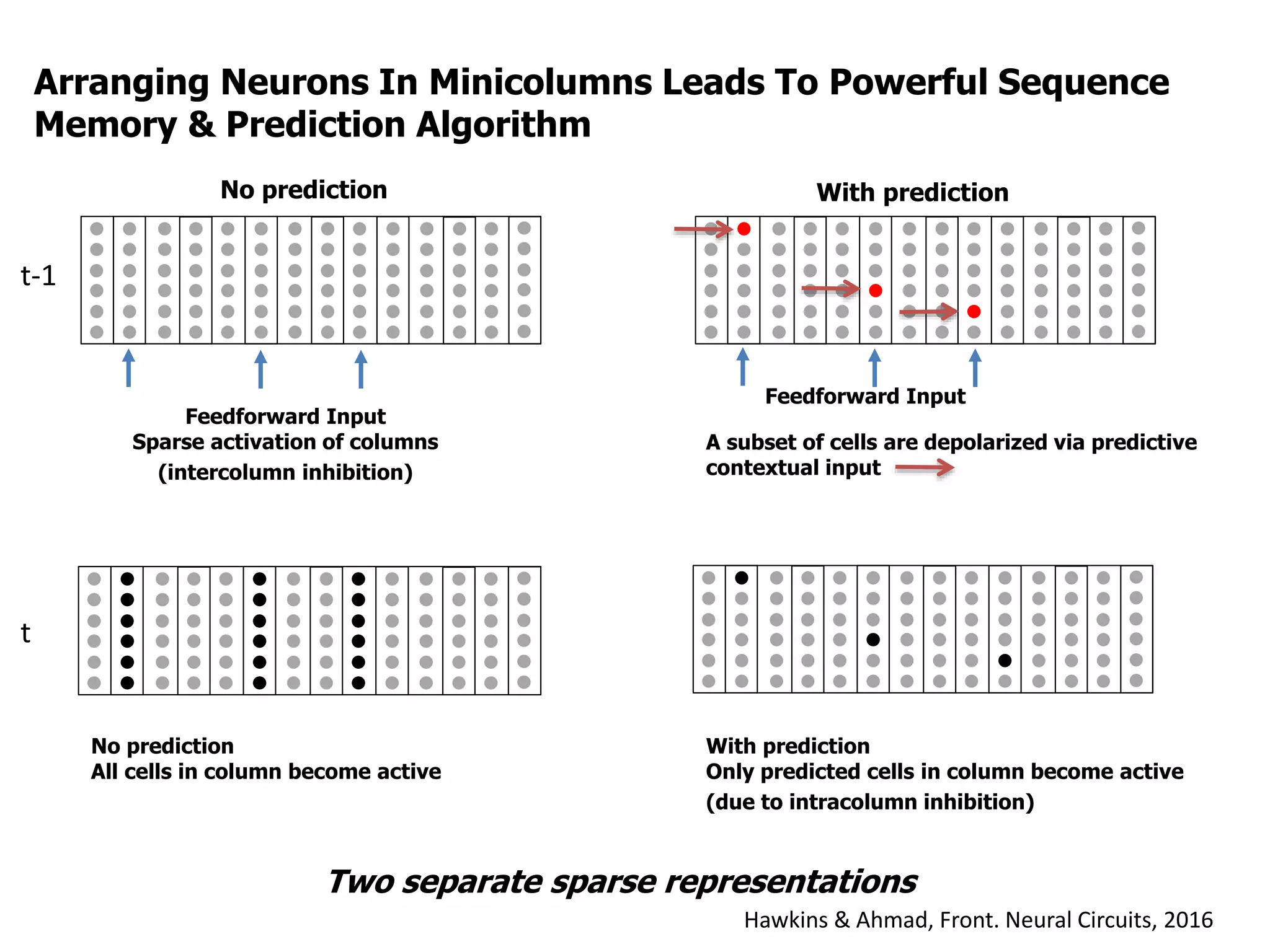

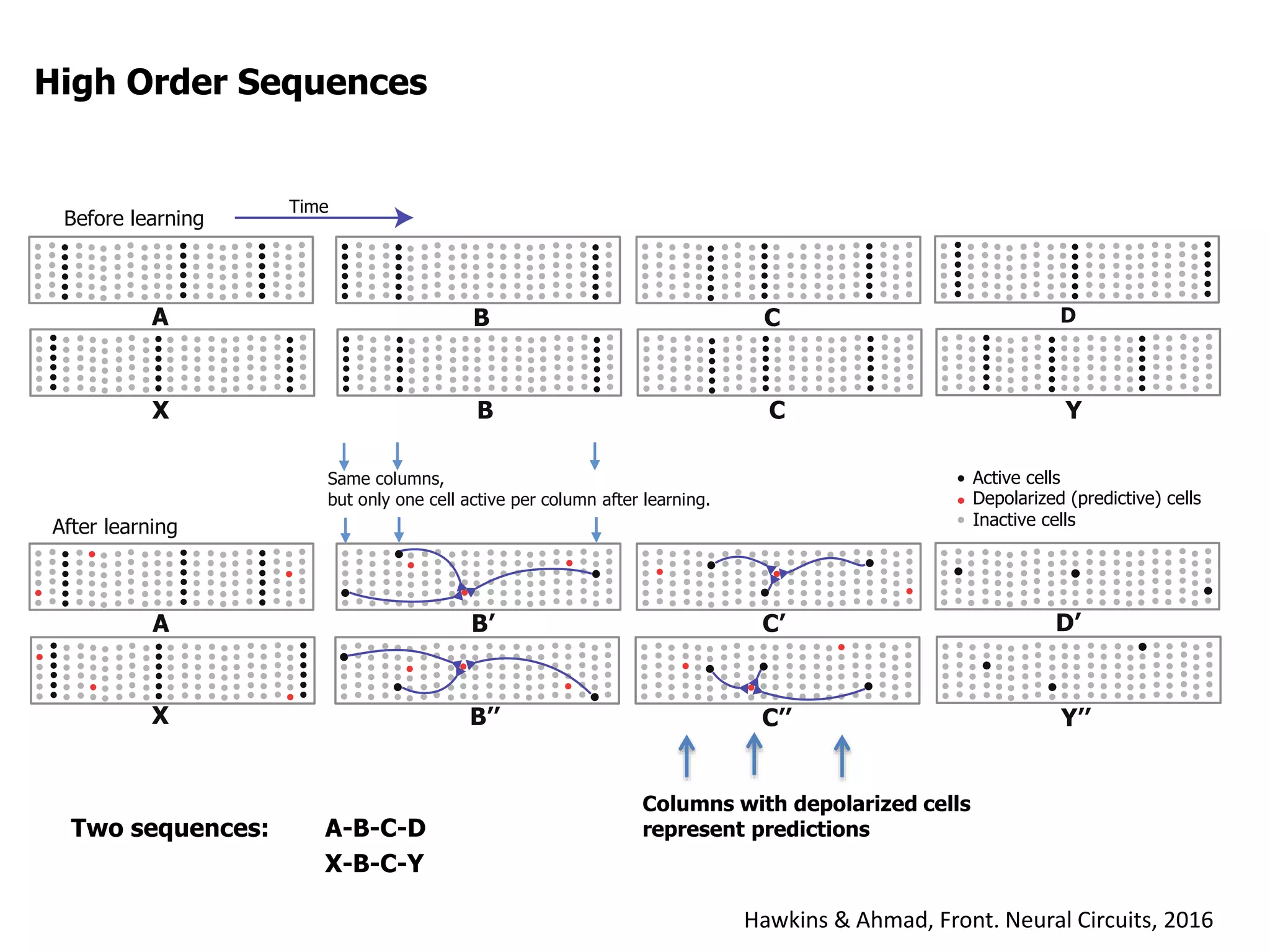

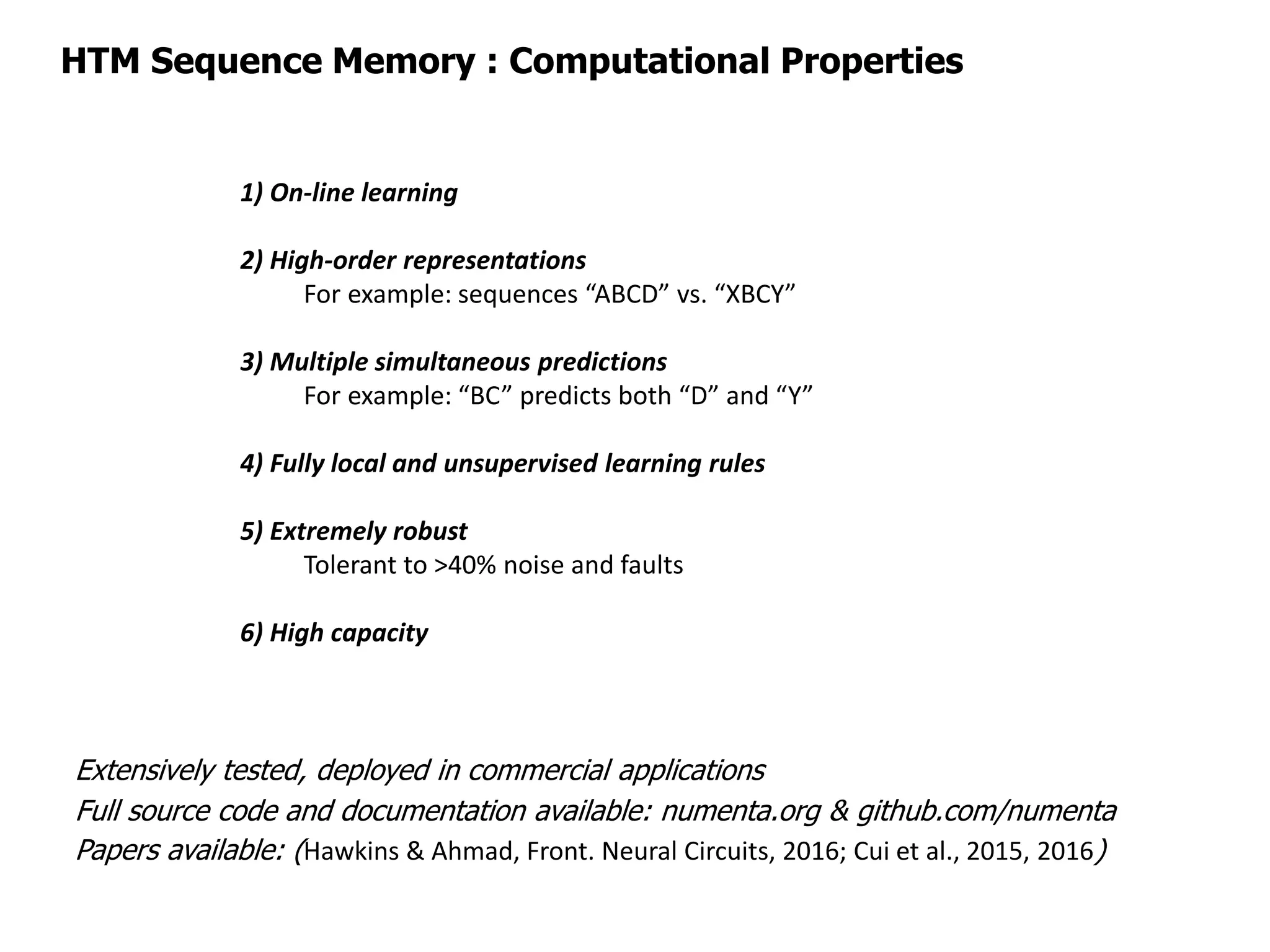

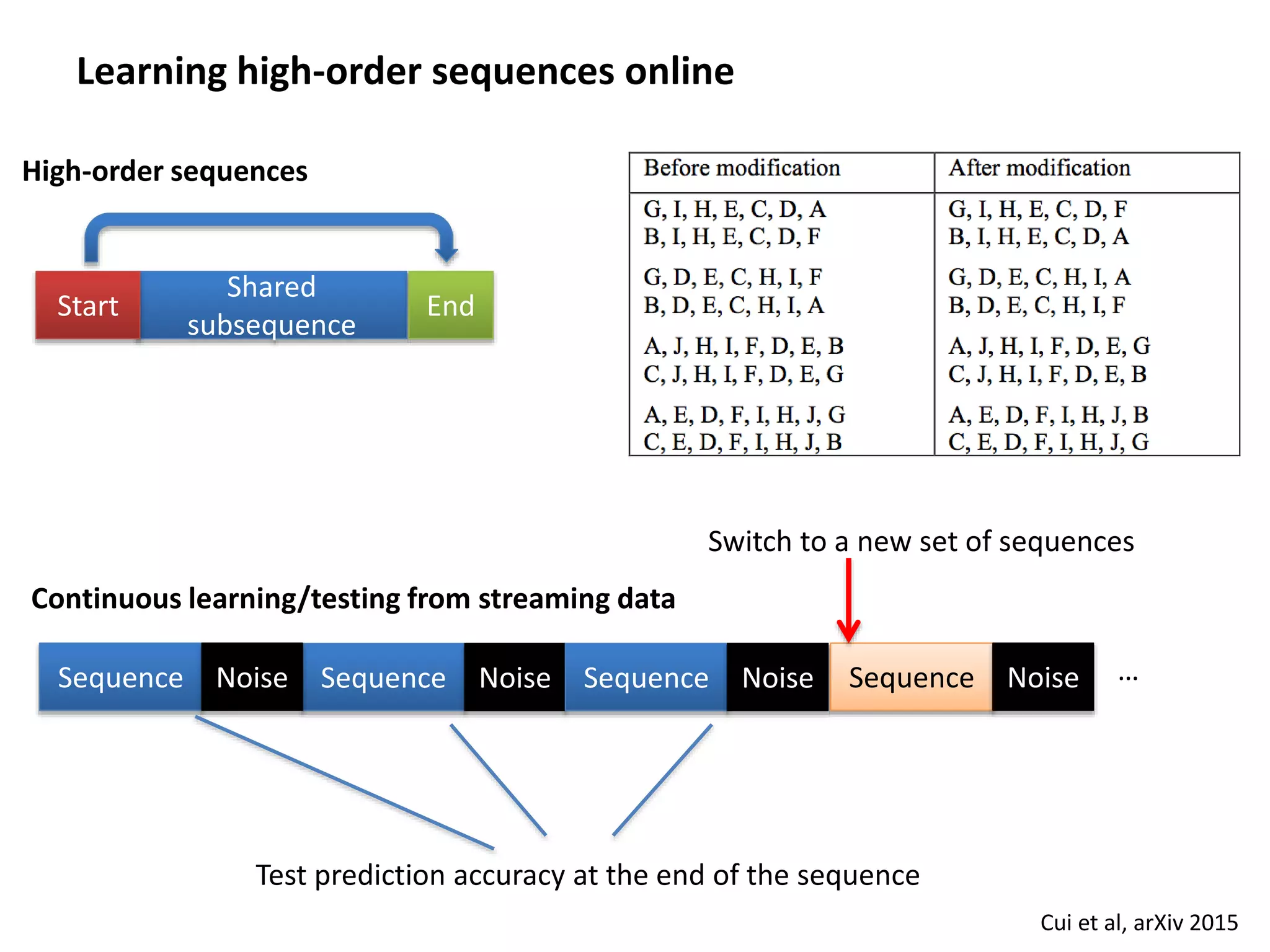

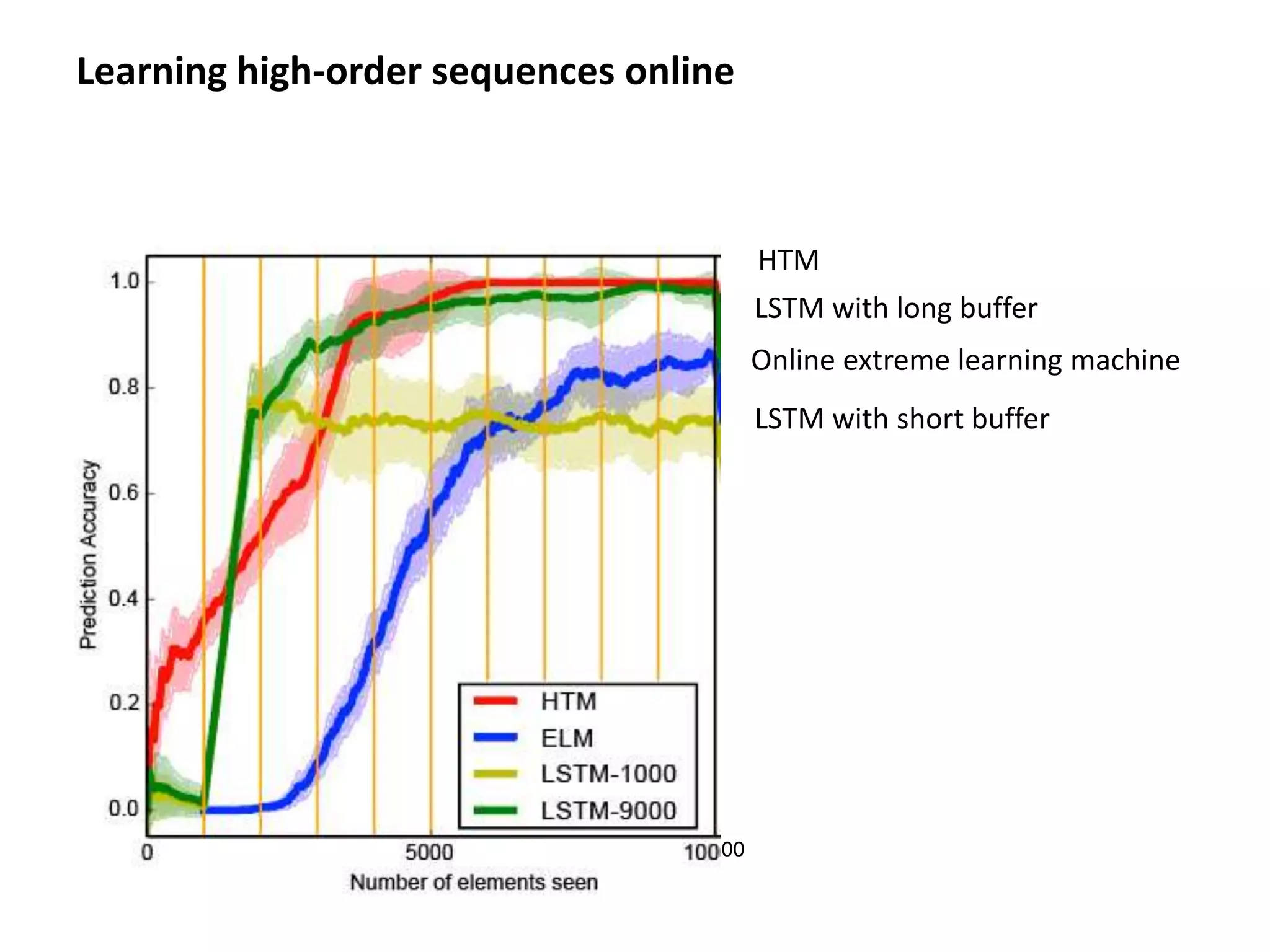

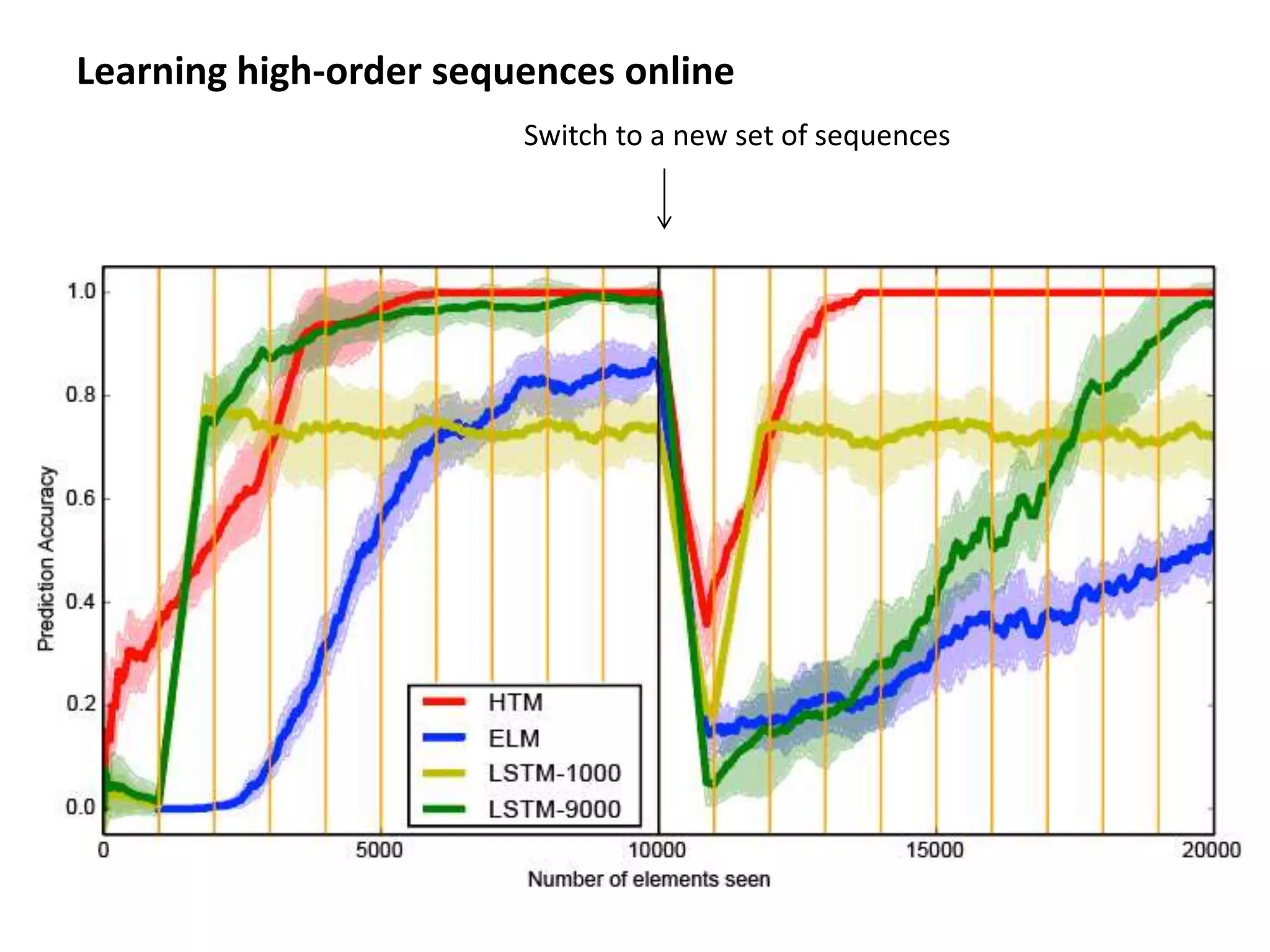

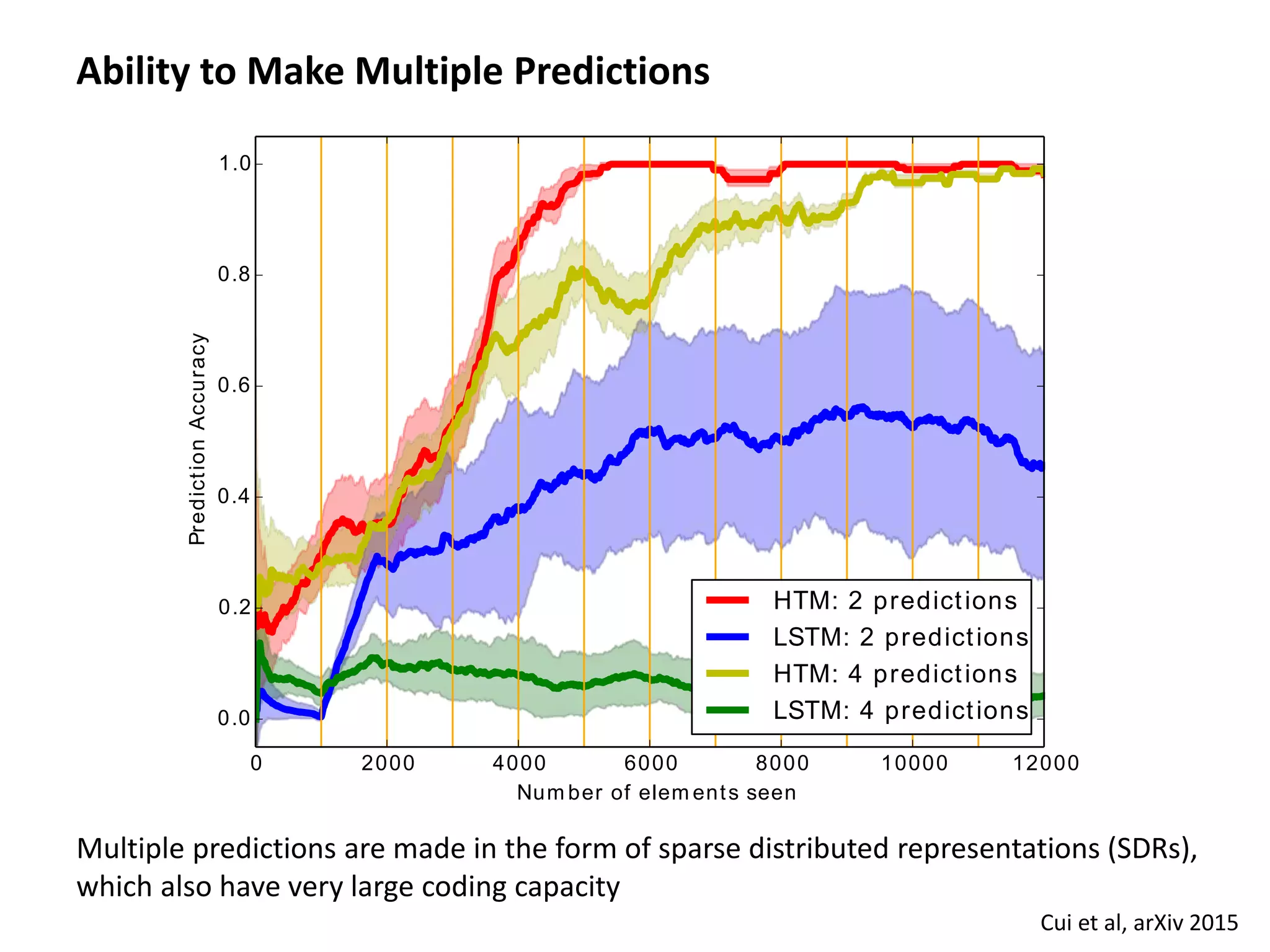

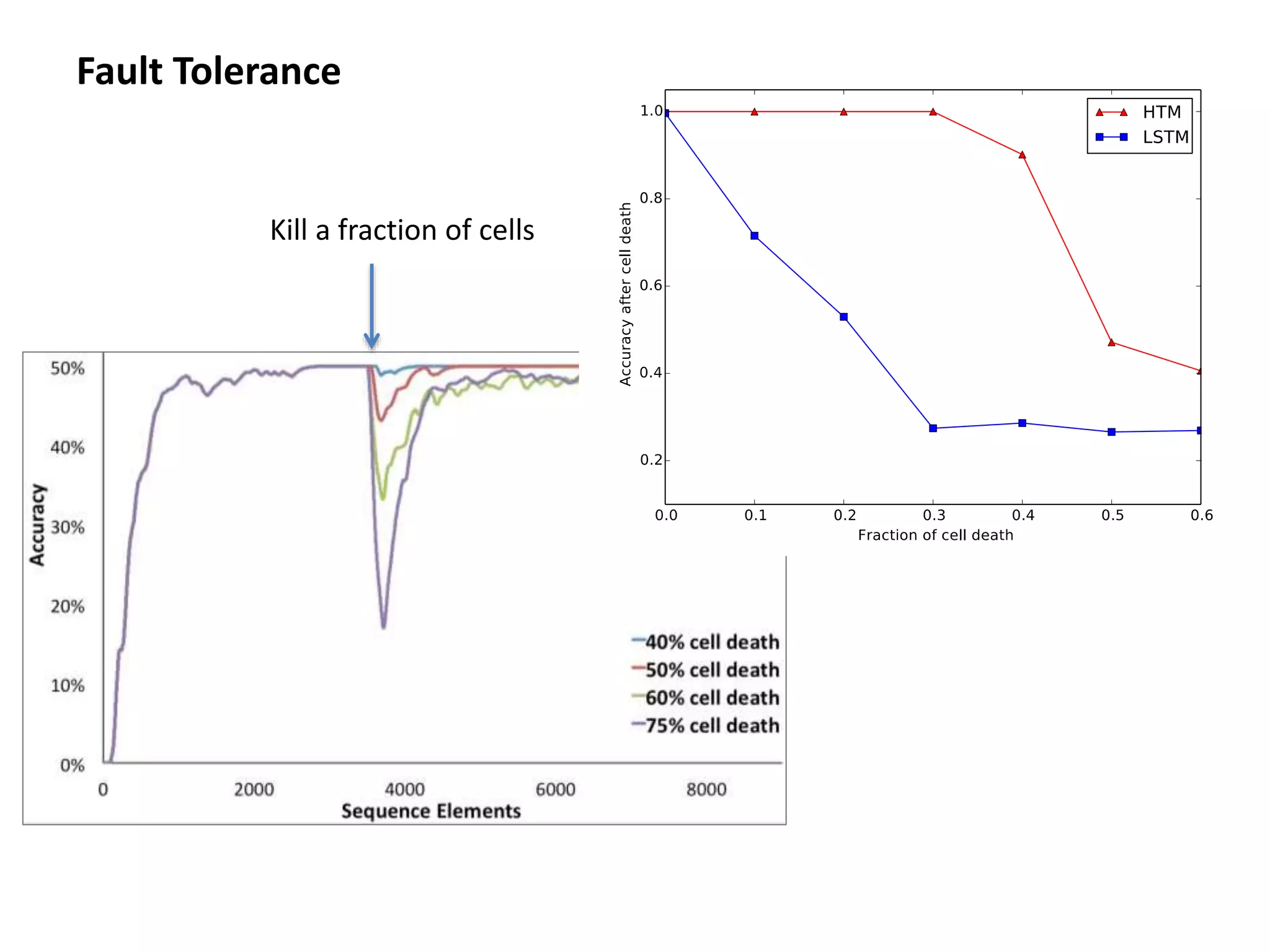

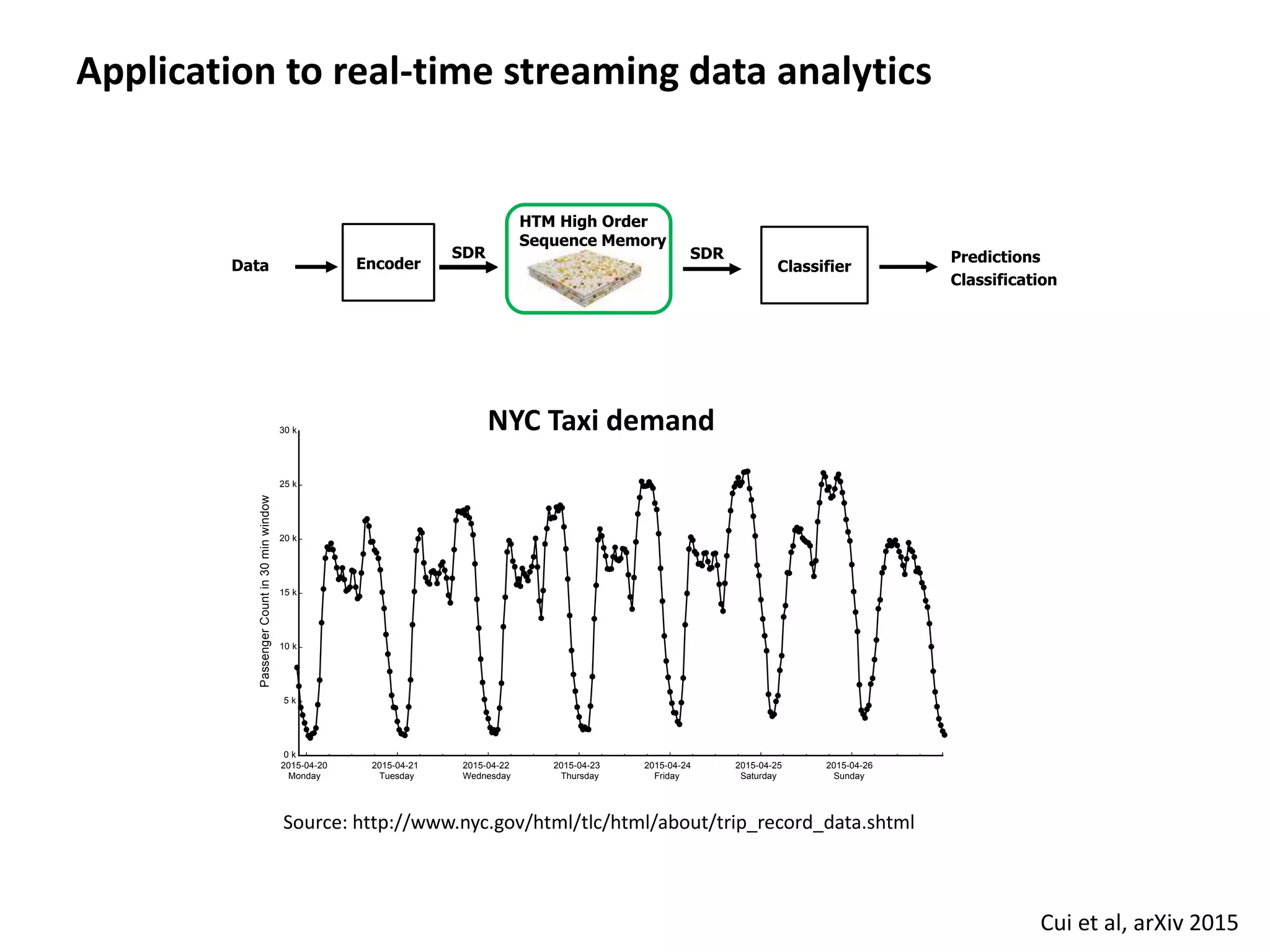

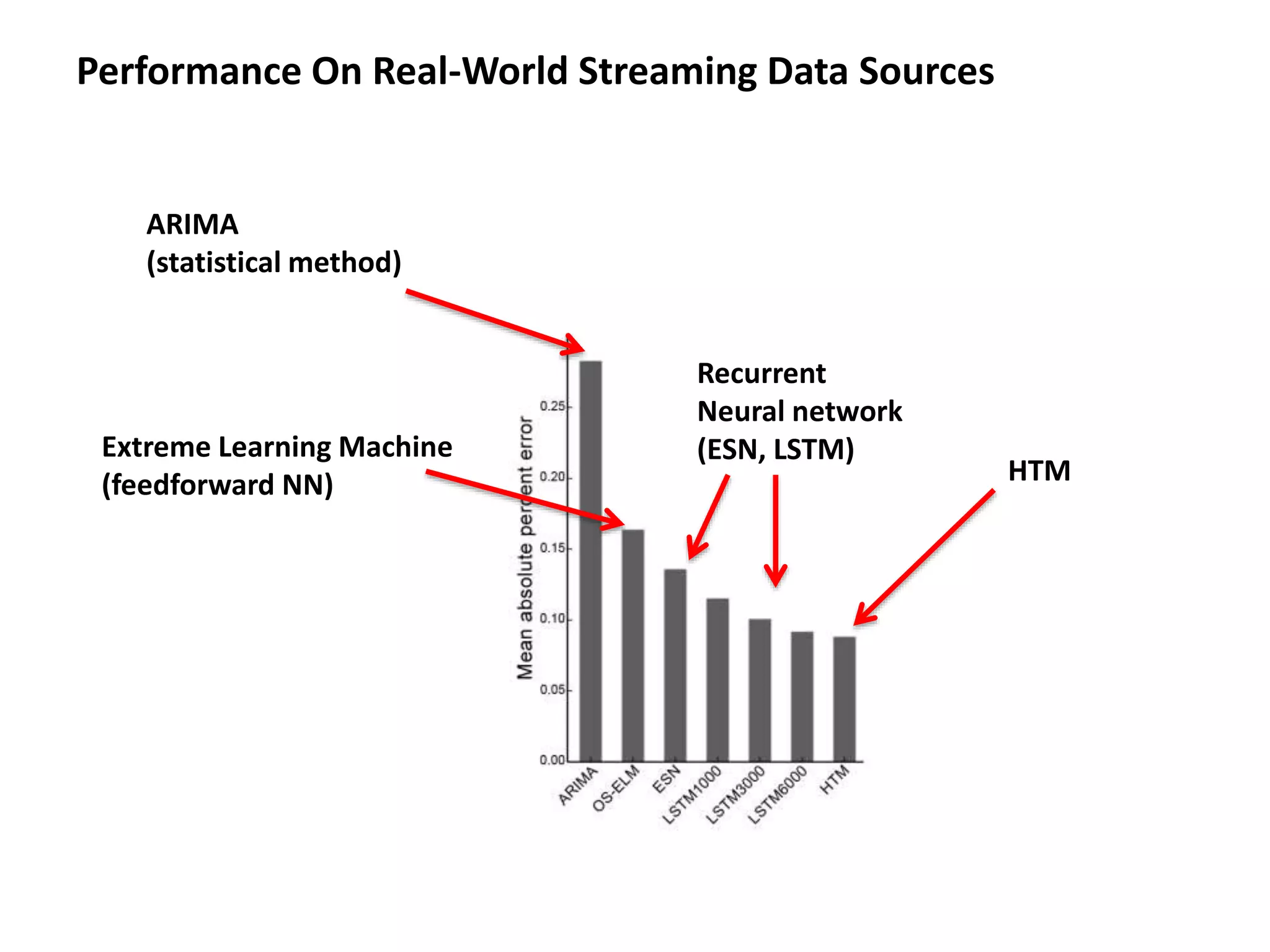

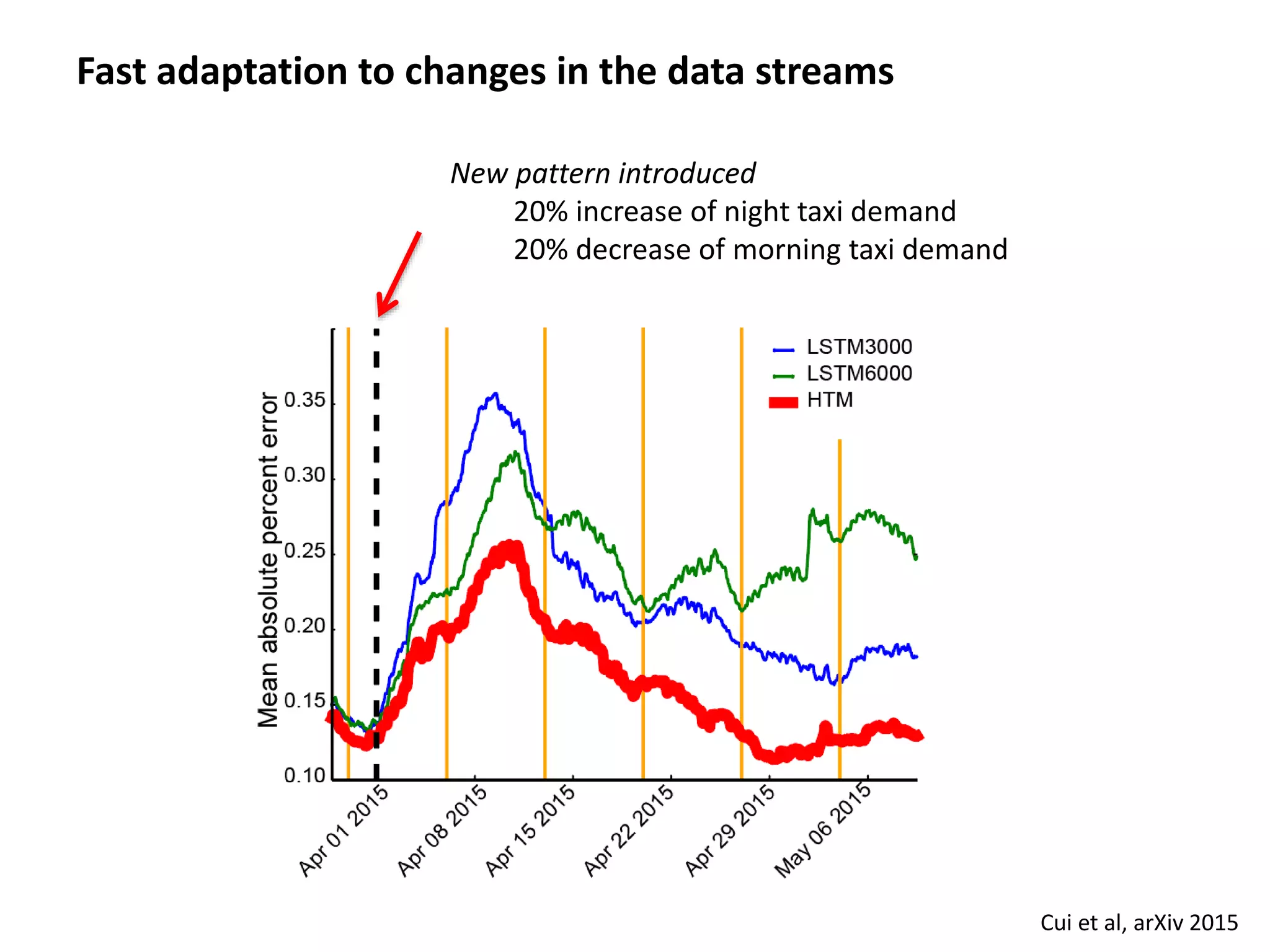

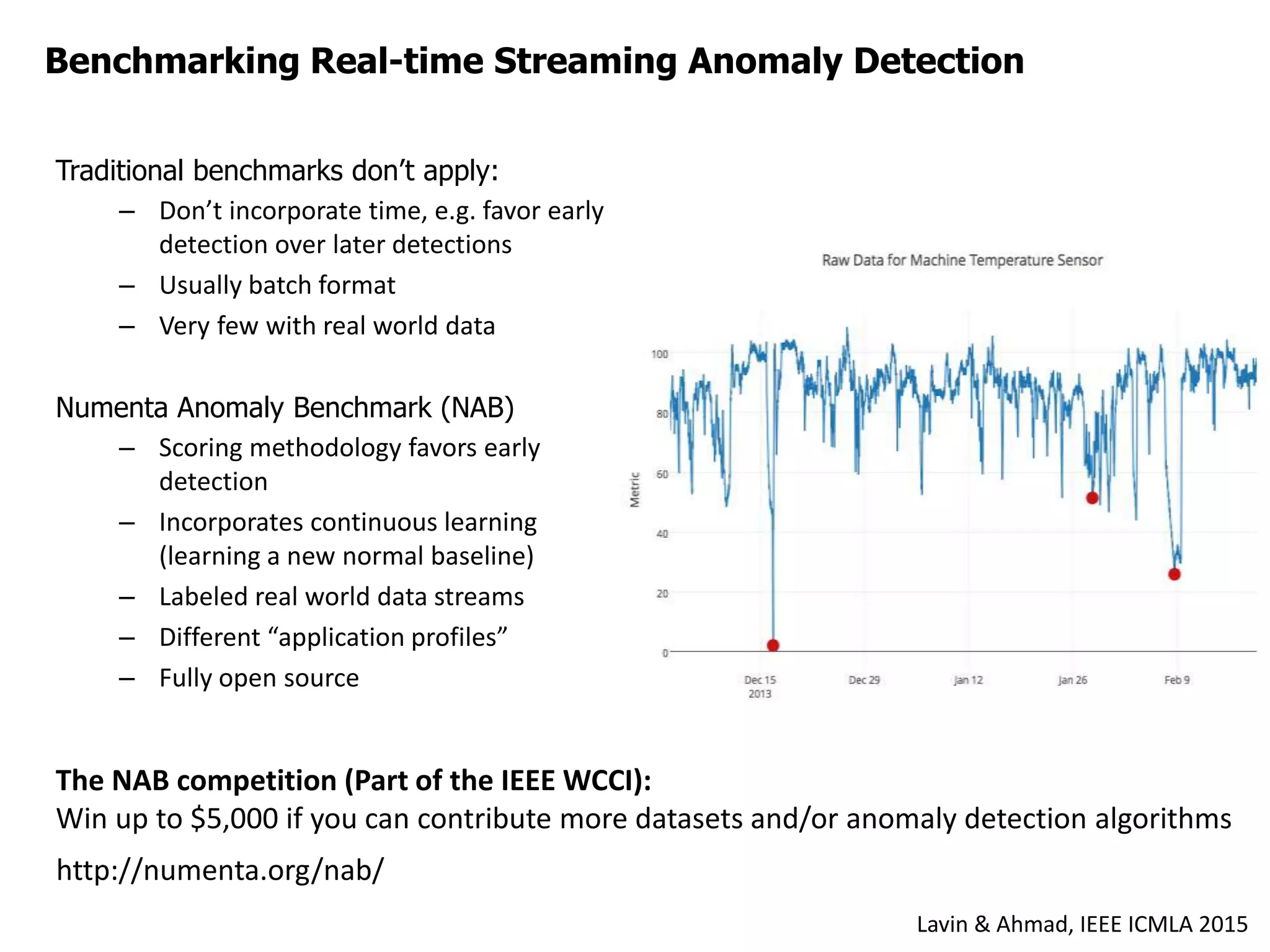

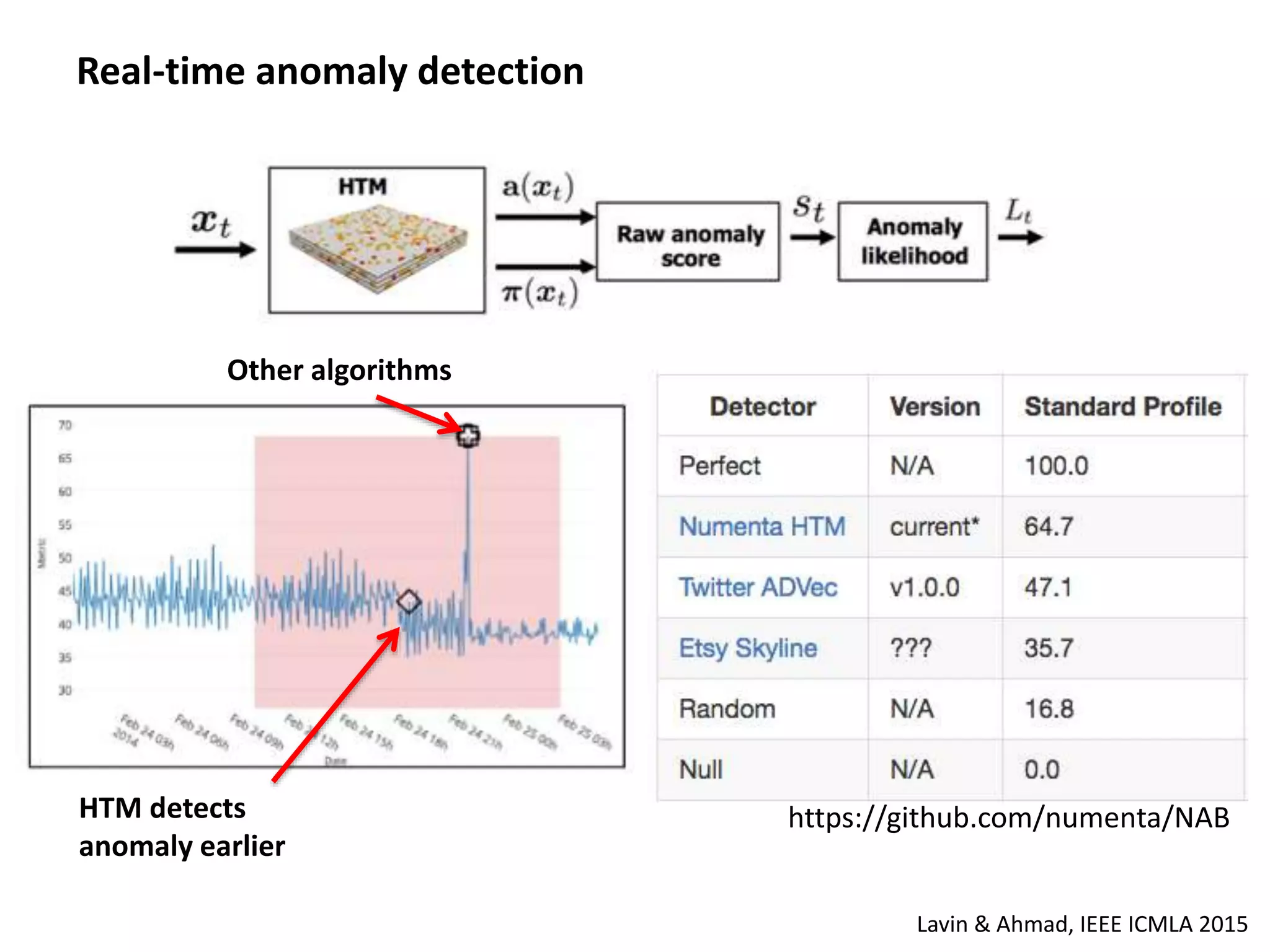

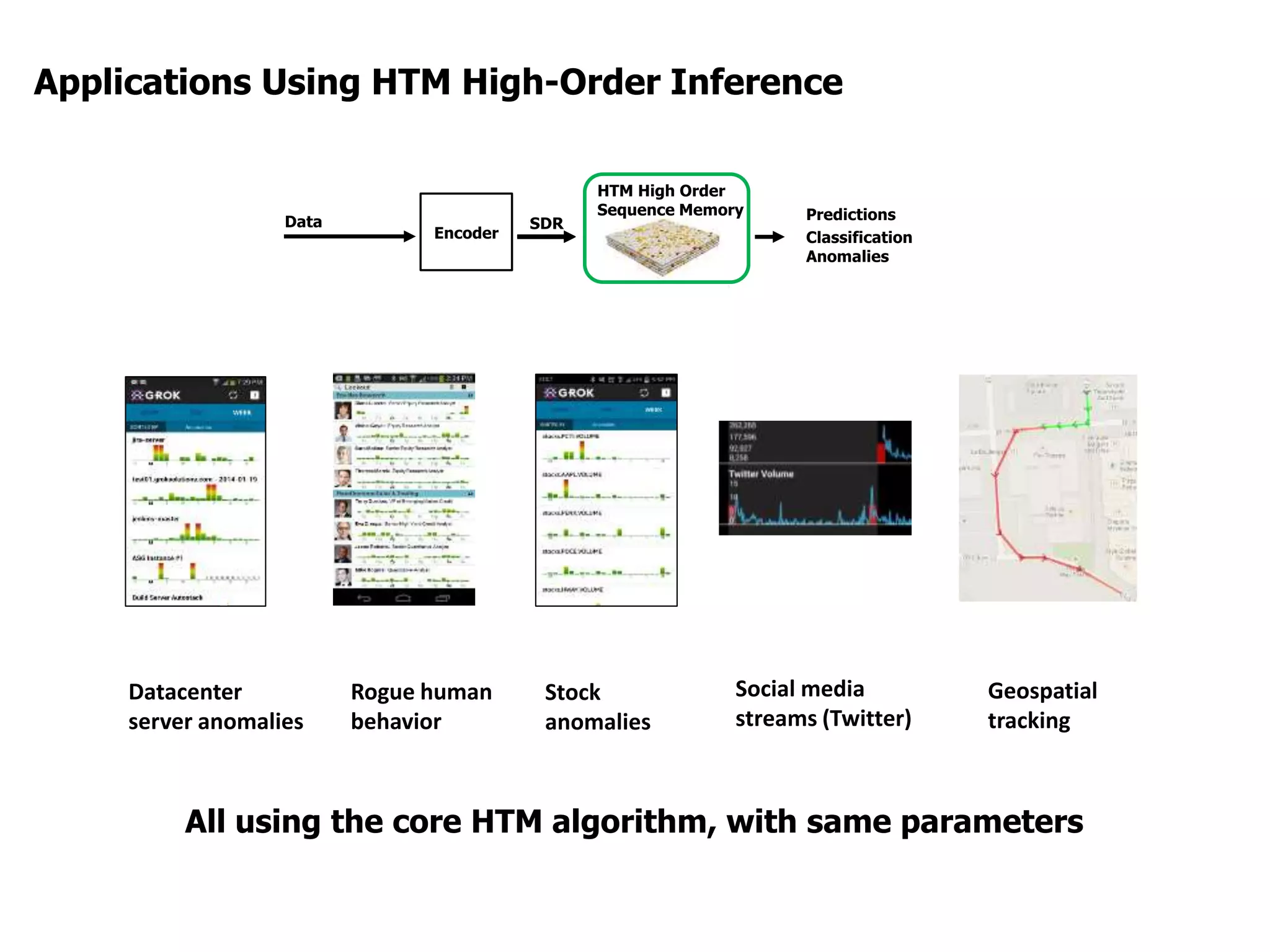

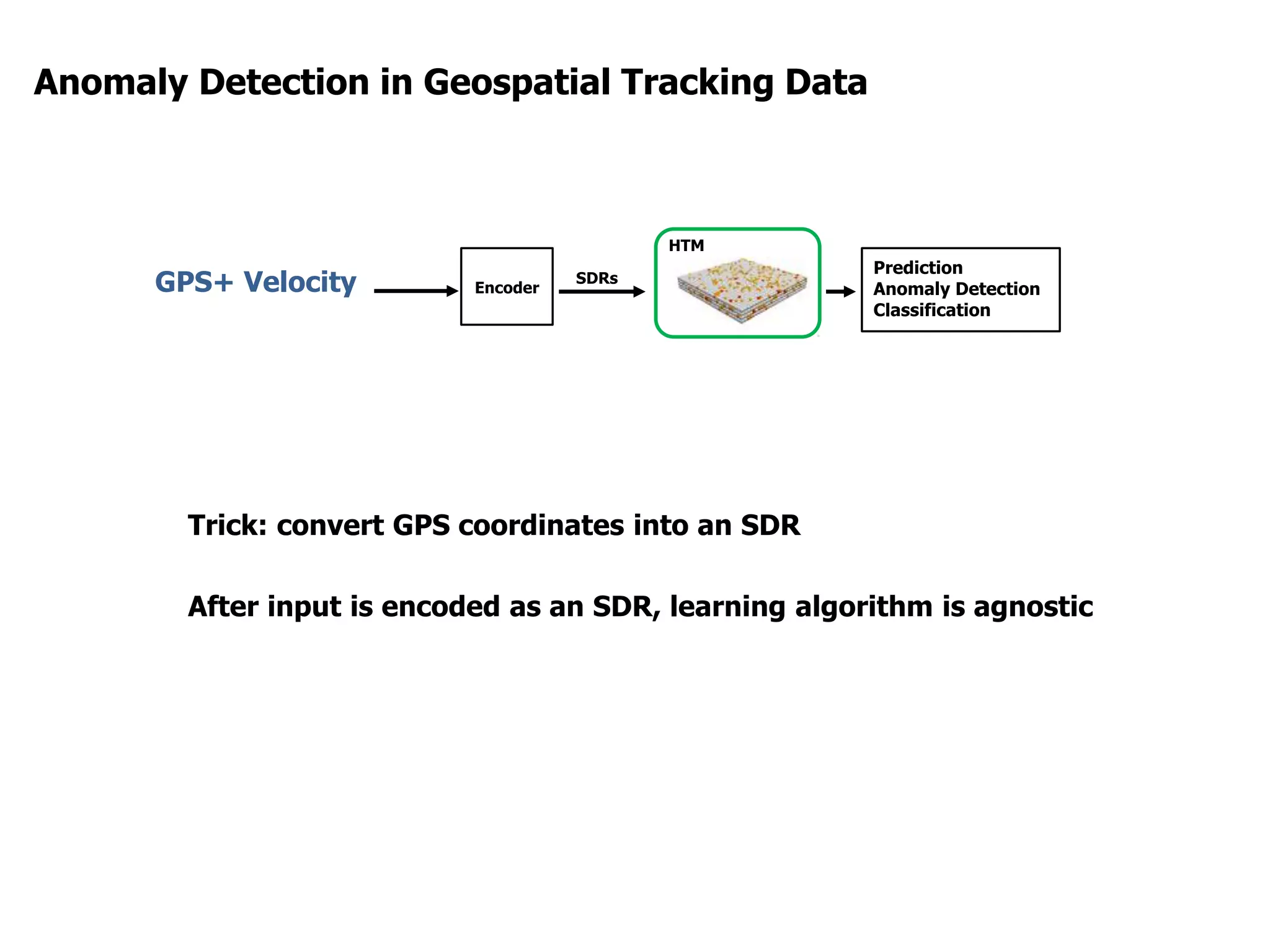

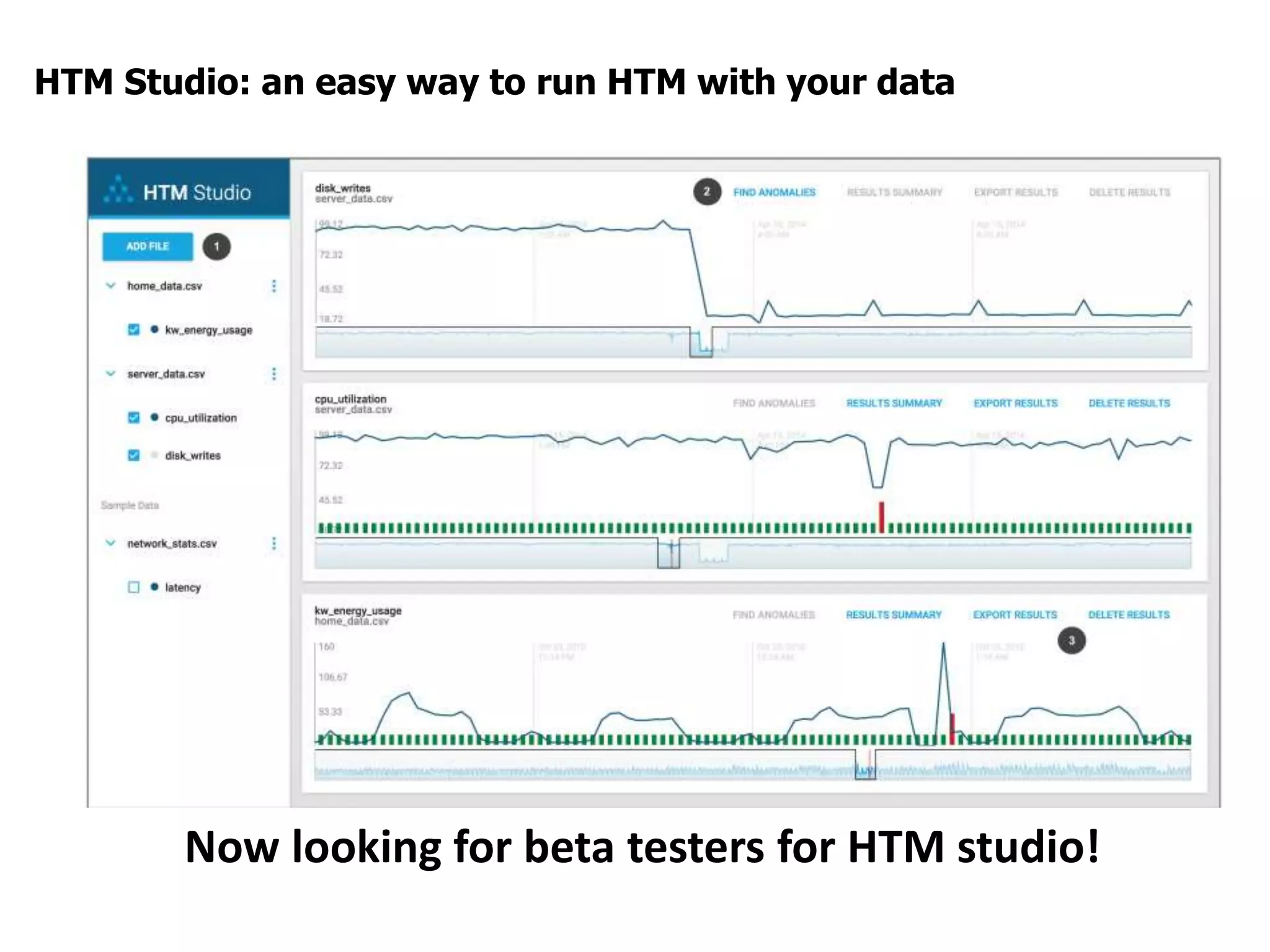

Yuwei Cui from Numenta gave a presentation on real-time analysis of streaming data using Hierarchical Temporal Memory (HTM). HTM is based on principles of the neocortex and supports online learning of high-order sequences from streaming data. HTM can make multiple predictions simultaneously and is fault tolerant. It has been applied successfully to problems such as detecting anomalies in data center servers and in geospatial tracking data. Numenta is working to deepen its understanding of the neocortex and to build more biologically accurate models that continue to advance machine intelligence.
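The anomaly detection use case can be illustrated with the raw anomaly score from Numenta's published HTM anomaly detection work. This score is the fraction of currently active columns that were not predicted at the previous time step. The sketch below is a minimal illustration and makes several assumptions. Sparse distributed representations (SDRs) are modeled as plain Python sets of active column indices. The inputs "B", "C", and "D" and their column indices are hypothetical. HTM learning is not implemented here. Because the prediction is the union of several column sets, the example also shows how HTM can hold more than one prediction at a time.

```python
def raw_anomaly_score(active_columns, predicted_columns):
    """Fraction of currently active columns that were NOT predicted
    at the previous time step (sketch of the HTM raw anomaly score)."""
    active = set(active_columns)
    if not active:
        # Assumption: an empty input is treated as not anomalous.
        return 0.0
    predicted = set(predicted_columns)
    return 1.0 - len(active & predicted) / len(active)


# Hypothetical SDRs: each input activates a small set of column indices.
SDR_B = {1, 5, 9, 14}
SDR_C = {2, 6, 10, 15}
SDR_D = {3, 7, 11, 16}

# After seeing a hypothetical "A", suppose the model predicts both "B" and "C".
# Multiple simultaneous predictions are just the union of their columns.
predicted_after_A = SDR_B | SDR_C

print(raw_anomaly_score(SDR_B, predicted_after_A))         # 0.0: "B" was predicted
print(raw_anomaly_score(SDR_D, predicted_after_A))         # 1.0: "D" was not predicted
print(raw_anomaly_score({1, 5, 3, 7}, predicted_after_A))  # 0.5: half the columns were predicted
```

Because SDRs are sparse, a union of a few predictions still overlaps very little with an unrelated input. This keeps the score near 1.0 for genuinely unexpected inputs even while several predictions are active at once.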