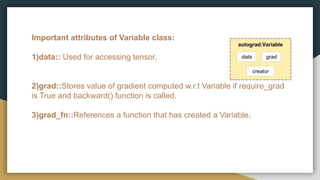

PyTorch's autograd package automatically computes gradients for tensor computations. It records operations on tensors in a computational graph as they execute, then uses reverse-mode differentiation to compute gradients by backpropagating from the root of the graph (the output) to its leaves (the inputs). The Variable class stores tensor data and gradients, and references the function that created it via grad_fn; since PyTorch 0.4, Variable has been merged into Tensor, so plain tensors carry these attributes, as in the example here. The example sets requires_grad, tracks operations to build the graph, computes gradients with backward(), and prints the resulting gradients.
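To make the graph structure concrete, the sketch below walks the recorded graph from the root back to the leaves by following `grad_fn` and its `next_functions` attribute. The helper name `backward_node_names` is hypothetical and introduced only for illustration; the exact node names printed can vary between PyTorch versions.

```python
import torch


def backward_node_names(fn):
    """Collect backward-node names depth-first, starting from the root."""
    if fn is None:  # constants such as the scalar 2 or 3 have no node
        return []
    names = [type(fn).__name__]
    for next_fn, _ in fn.next_functions:
        names.extend(backward_node_names(next_fn))
    return names


x = torch.ones(2, 2, requires_grad=True)  # leaf tensor created by the user
y = x + 2
z = y * y * 3
out = z.mean()                            # root of the graph

print(x.is_leaf, x.grad_fn)               # a leaf has no grad_fn
names = backward_node_names(out.grad_fn)
print(names)
```

The traversal starts at the node that produced `out` and ends at the nodes that accumulate gradients into the leaf `x`, which is the direction `backward()` follows.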

![Pytorch auto grad, slide 4](https://image.slidesharecdn.com/pytorchautograd-190822181020/85/Pytorch-auto-grad-4-320.jpg)

Example illustration:

Create tensor and set requires_grad=True::

    import torch
    x = torch.ones(2, 2, requires_grad=True)
    print(x)

Out::

    tensor([[1., 1.],
            [1., 1.]], requires_grad=True)

Do tensor operation::

    y = x + 2
    print(y)

Out::

    tensor([[3., 3.],
            [3., 3.]], grad_fn=<AddBackward0>)
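The slide relies on `requires_grad=True` being set when the tensor is created. As a complementary sketch, the snippet below shows what happens when it is not set, and two standard ways to switch tracking on or off afterwards. The variable names `a`, `b`, `c`, and `d` are chosen here for illustration only.

```python
import torch

a = torch.ones(2, 2)               # requires_grad defaults to False
b = a + 2
print(b.requires_grad, b.grad_fn)  # nothing is recorded for b

a.requires_grad_(True)             # switch tracking on in place
c = a + 2
print(c.requires_grad, c.grad_fn)  # c now has a grad_fn

with torch.no_grad():              # temporarily disable tracking
    d = a + 2
print(d.requires_grad)
```

Only results computed from at least one tensor with `requires_grad=True`, outside a `torch.no_grad()` block, become part of the graph.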

![Pytorch auto grad, slide 5](https://image.slidesharecdn.com/pytorchautograd-190822181020/85/Pytorch-auto-grad-5-320.jpg)

Since y was created as the result of an operation, it has a grad_fn::

    print(y.grad_fn)

Out::

    <AddBackward0 object at 0x7f100c04f2b0>

More operations on y::

    z = y * y * 3
    out = z.mean()
    print(z, out)

Out::

    tensor([[27., 27.],
            [27., 27.]], grad_fn=<MulBackward0>) tensor(27., grad_fn=<MeanBackward0>)

Let's compute the gradients by calling backward()::

    out.backward()

Print the gradients d(out)/dx::

    print(x.grad)

Out::

    tensor([[4.5000, 4.5000],
            [4.5000, 4.5000]])
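The value 4.5 can be checked by hand. Since out is the mean over four elements of 3 * (x_i + 2)^2, the derivative is d(out)/dx_i = (3/2) * (x_i + 2), which equals 4.5 at x_i = 1. The sketch below repeats the example and compares autograd's result with this formula; the name `expected` is introduced here for illustration.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
y = x + 2
z = y * y * 3
out = z.mean()
out.backward()  # fills x.grad with d(out)/dx

# Analytic gradient: d(out)/dx_i = (3/2) * (x_i + 2)
expected = 1.5 * (x.detach() + 2)
print(torch.allclose(x.grad, expected))
```

Comparing against a hand-derived formula like this is a simple way to sanity-check a gradient on a small input.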