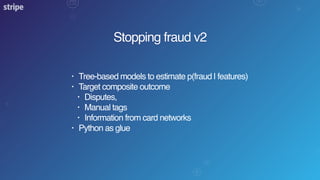

- Machine learning models are used to detect fraud by estimating the probability of fraud given transaction features.

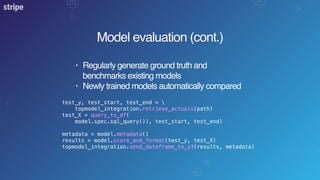

- Building and updating fraud detection models involves significant work in feature engineering, model training, evaluation, and monitoring in production.

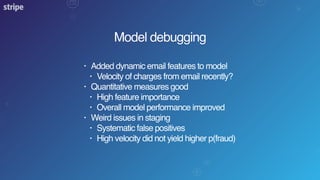

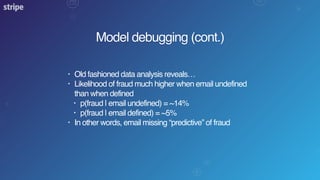

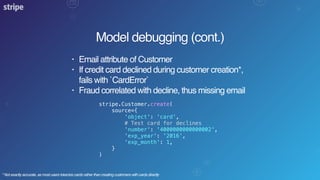

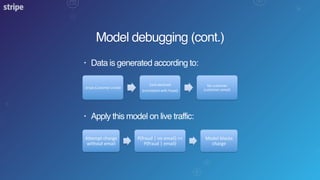

- Debugging a model that was performing poorly revealed an important predictive feature - whether a customer's email address was provided - that improved the model once incorporated.

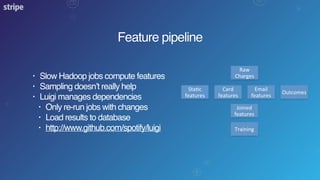

![Feature pipeline (cont.)

@redshift('transactionfraud.features')

class JoinFeatures(luigi.WrapperTask):

def requires(self):

components = [

'static_features',

'dynamic_card_features',

'dynamic_email_features',

'outcomes',

]

return [FeatureTask(c) for c in components]

def job(self):

return ScaldingJob(

job='JoinFeatures',

output=self.output().path,

**self.requires()

)](https://image.slidesharecdn.com/pydata-london-2015-150621183255-lva1-app6891/85/Detecting-fraud-with-Python-and-machine-learning-13-320.jpg)

)

val historicalCounts = getHistoricalCounts(charges)

historicalCounts

.map { case (chargeId, counts) =>

IpFeatures(

chargeId = chargeId,

feature1 = counts.feature1,

feature2 = counts.feature2,

...

)

}

.save

}](https://image.slidesharecdn.com/pydata-london-2015-150621183255-lva1-app6891/85/Detecting-fraud-with-Python-and-machine-learning-14-320.jpg)