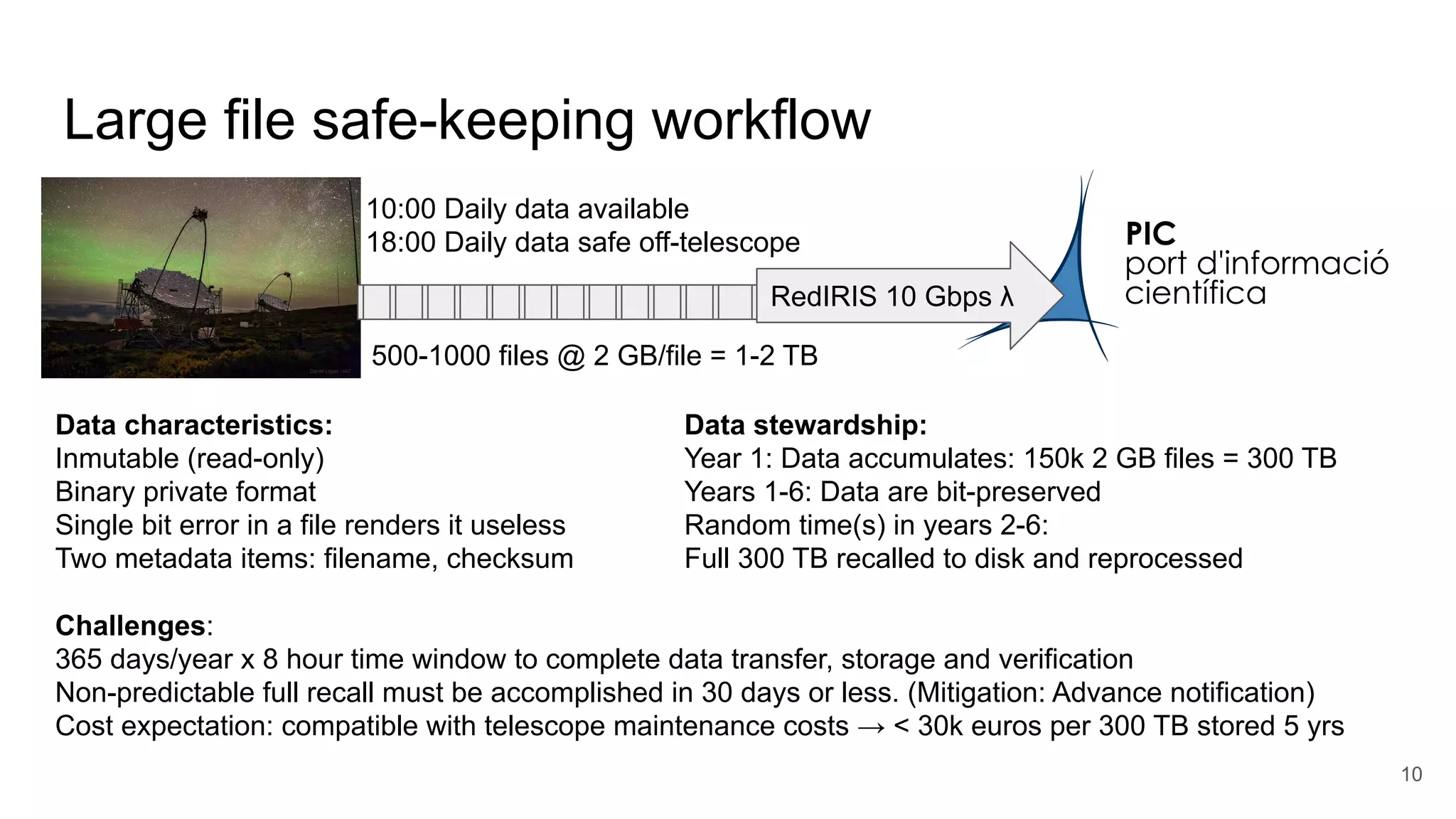

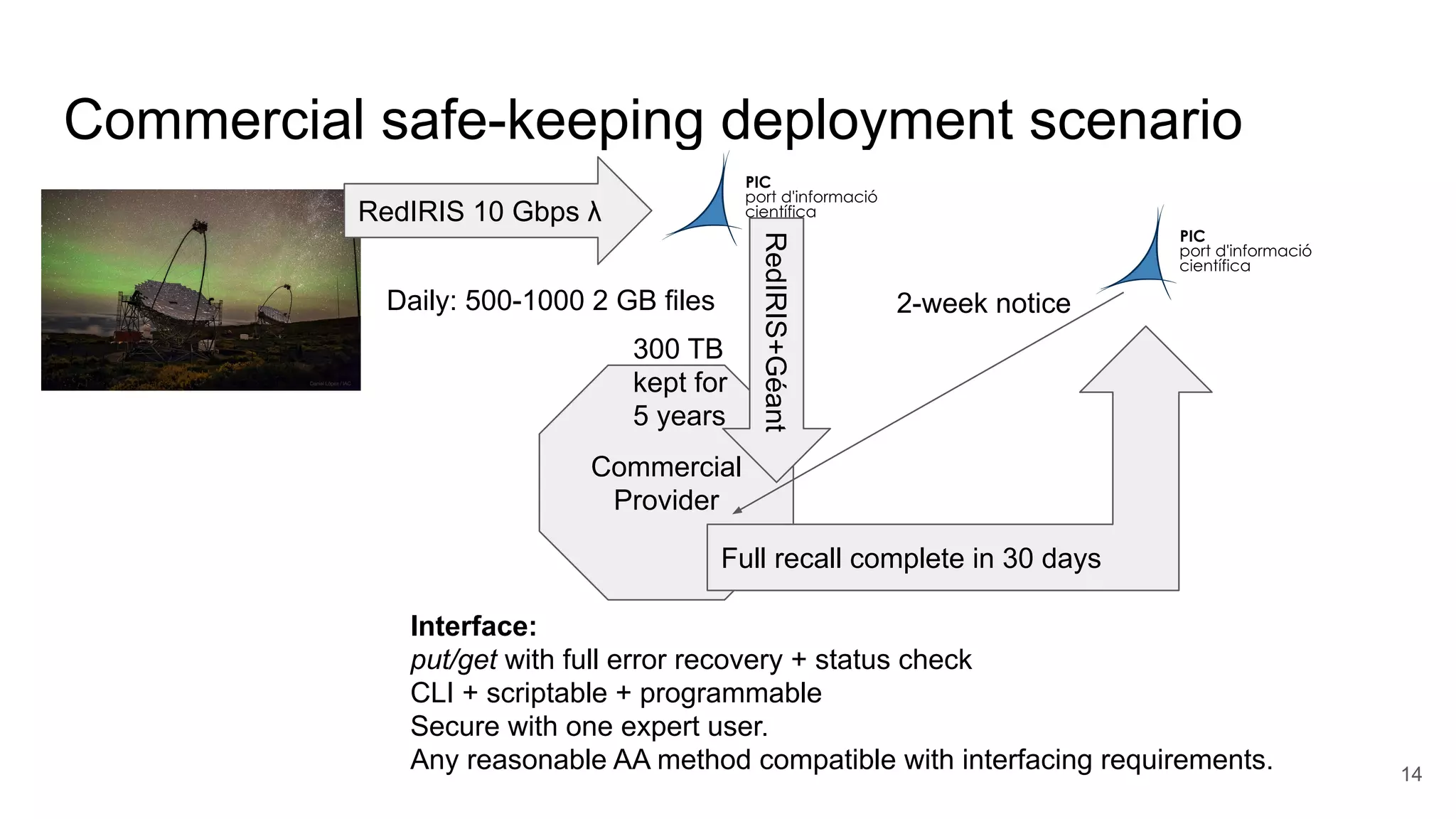

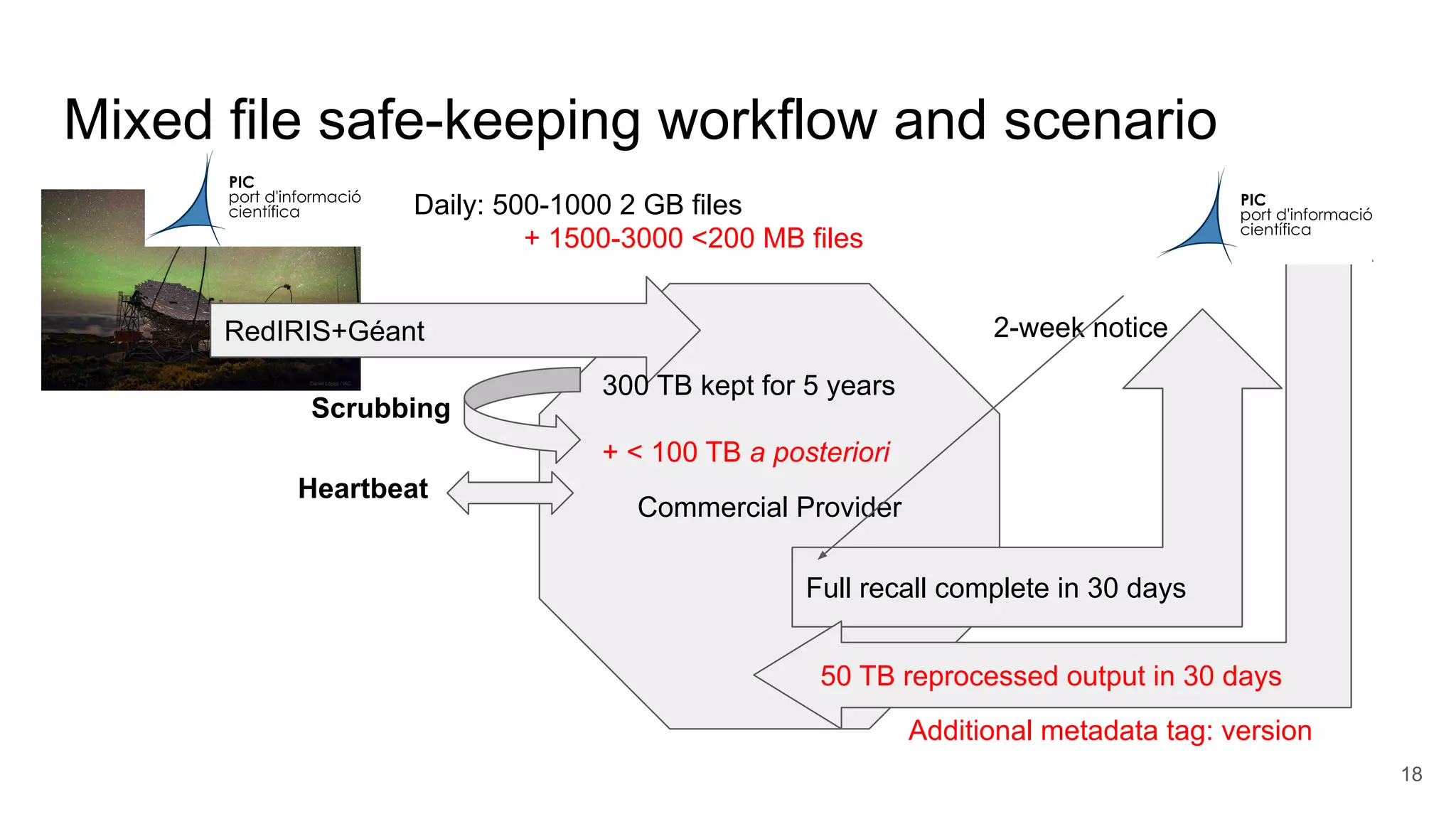

The document describes several deployment scenarios for storing and processing scientific data from instruments like the MAGIC Telescopes.

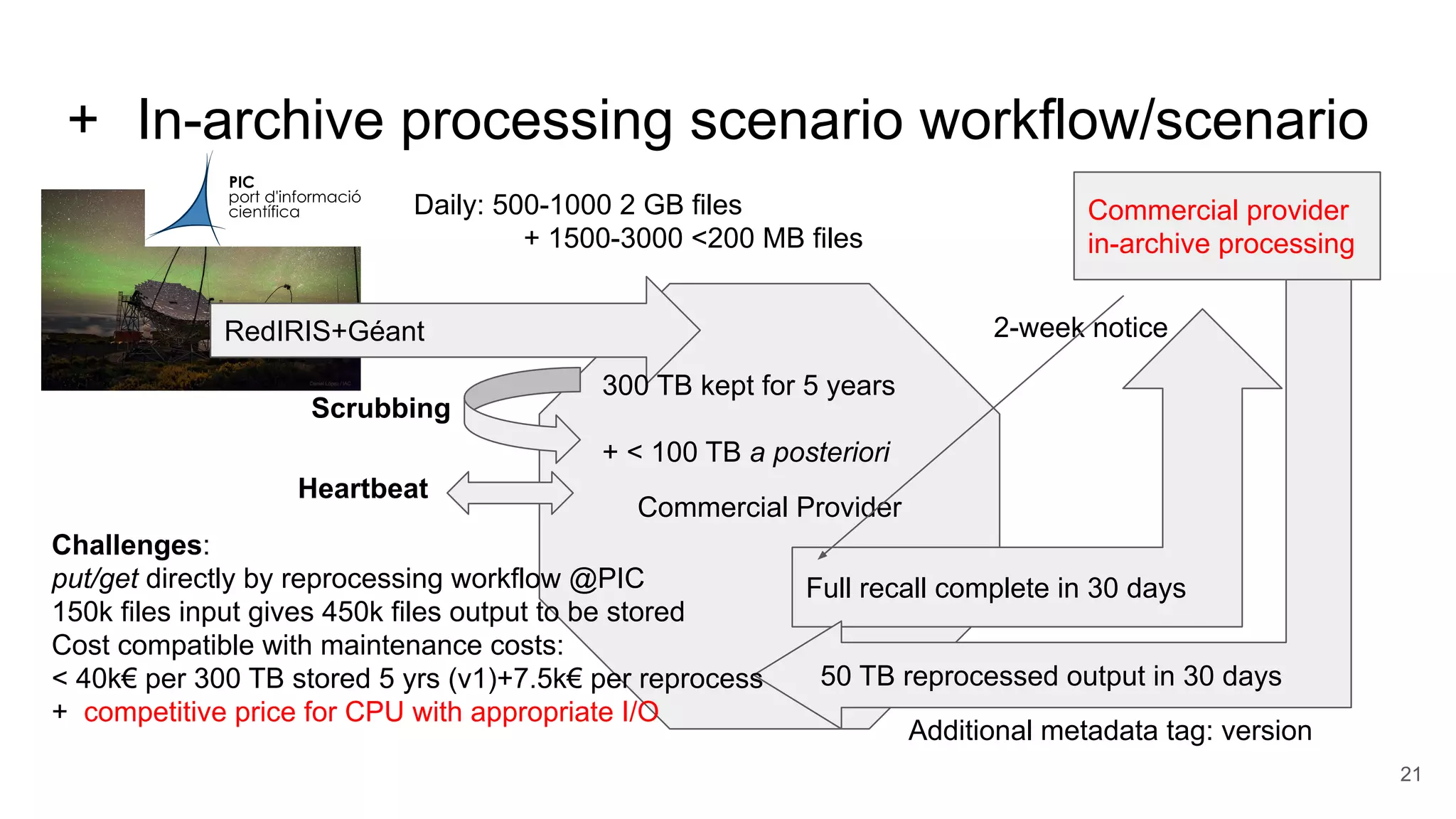

It outlines five scenarios: 1) large file safe-keeping, 2) mixed file safe-keeping, which adds smaller files and reprocessing outputs, 3) in-archive data processing, 4) distribution of data to instrument analysts, and 5) external user access.

Challenges include meeting data transfer timelines, ensuring data integrity over long periods, and providing flexible access and metadata tools to support analysis and discovery. Commercial providers could offer solutions, but the document also discusses the difficulty of establishing trust in them and of meeting customization needs.
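
As an illustration of the long-term integrity challenge, the following is a minimal sketch of a periodic fixity check, assuming the archive keeps a manifest of SHA-256 digests recorded at ingest. The function names (`sha256_of`, `verify_fixity`) and the manifest layout are hypothetical and are not taken from the document; a real archive may use a different checksum algorithm or storage-level verification.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks so that
    large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_fixity(manifest):
    """Check files against digests recorded at ingest.

    `manifest` is a hypothetical mapping of file path -> expected hex digest.
    Returns the list of paths that are missing or whose content has changed.
    """
    failed = []
    for path, expected in manifest.items():
        p = Path(path)
        if not p.is_file() or sha256_of(p) != expected:
            failed.append(path)
    return failed
```

Running `verify_fixity` on a schedule and comparing the result with an empty list gives a simple signal that stored files still match what was ingested; it does not by itself repair damaged files, which would require a second copy.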