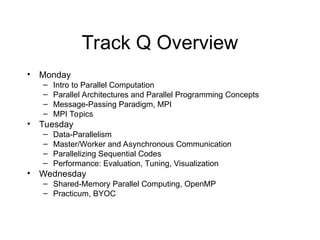

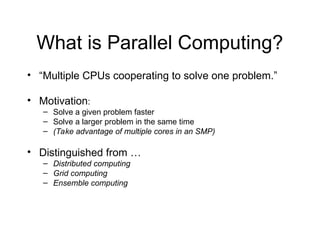

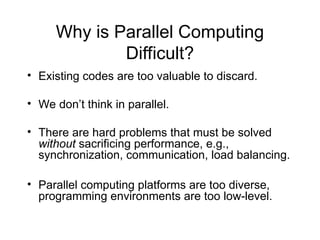

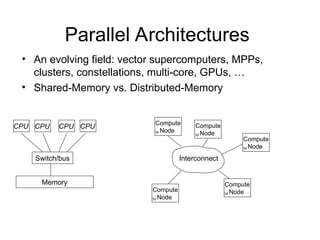

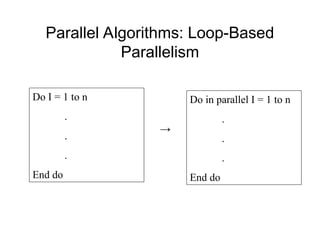

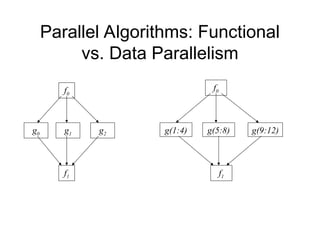

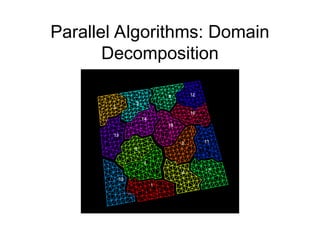

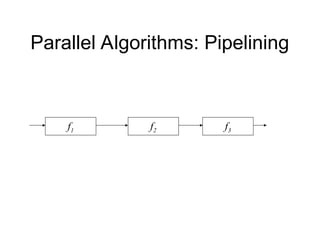

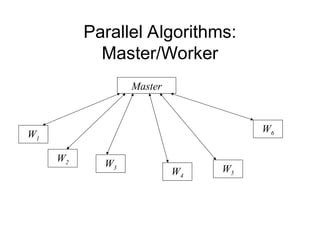

The document introduces parallel computing, covering its motivation, its challenges, and the main programming paradigms, technologies, and architectures. It discusses strategies such as loop-based parallelism, data parallelism, and the master/worker model, as well as methods for evaluating performance. Practical programming approaches include relying on parallelizing compilers, using languages extended with parallel constructs, or using libraries and APIs such as OpenMP (compiler directives plus a runtime library) for shared-memory systems.
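For performance evaluation, speedup and efficiency are standard measures. These definitions are assumed here, and the slides may state them differently. Let $T_1$ be the run time on one processor and $T_p$ the run time on $p$ processors. Amdahl's law bounds the achievable speedup when only a fraction $f$ of the serial run time can be parallelized:

$$
S_p = \frac{T_1}{T_p}, \qquad E_p = \frac{S_p}{p}, \qquad S_p \le \frac{1}{(1 - f) + f/p}
$$

For example, with $f = 0.9$ and $p = 8$, the bound is $S_8 \le 1/(0.1 + 0.1125) = 1/0.2125 \approx 4.71$, so $E_8 \approx 0.59$. Even as $p \to \infty$, the speedup cannot exceed $1/(1 - f) = 10$.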