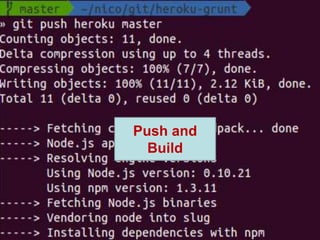

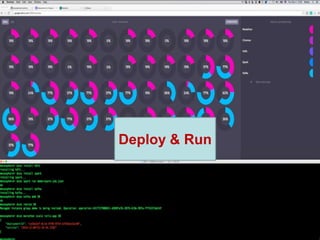

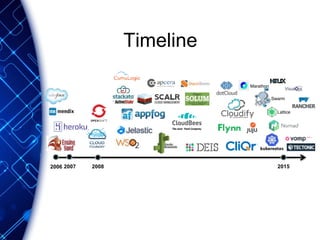

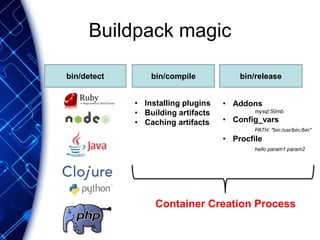

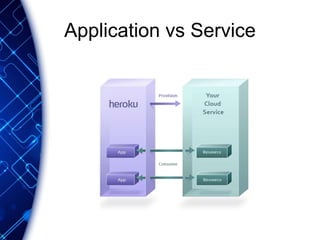

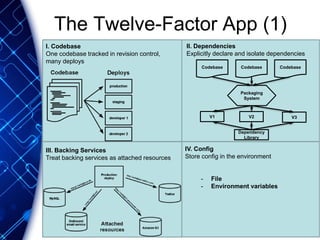

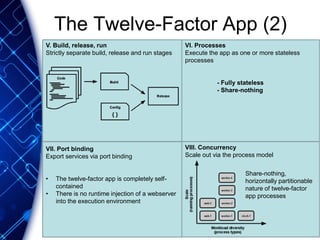

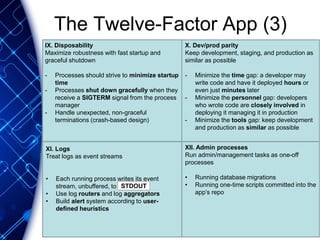

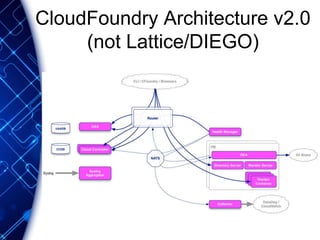

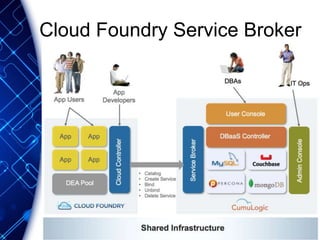

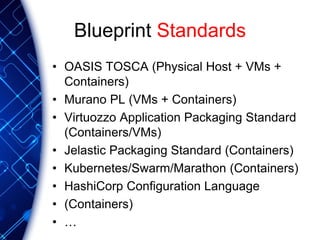

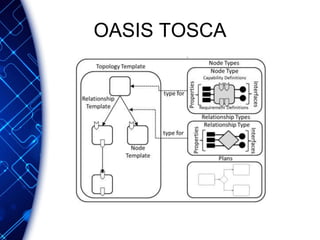

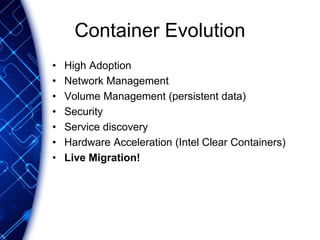

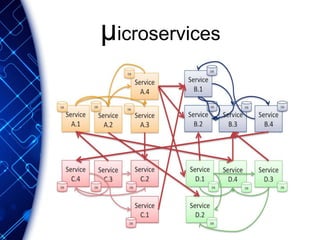

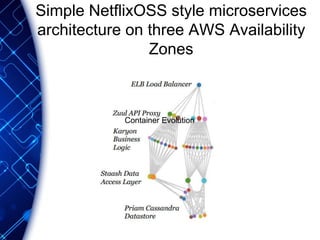

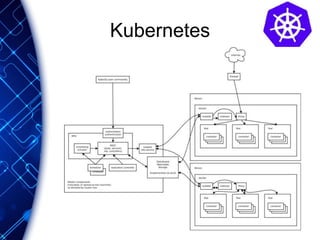

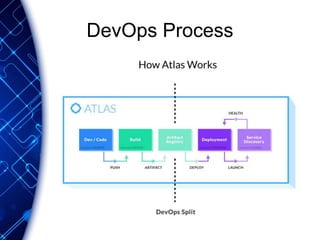

The document gives an overview of the platform-as-a-service (PaaS) ecosystem. It traces how PaaS has evolved, introduces key concepts such as the twelve-factor app, and surveys platforms including Cloud Foundry and Heroku. It explains why the build, release, and run stages matter, and how to manage dependencies and configuration. It also highlights trends toward containerization and microservices architectures, and suggests that organizations consider building their own platform solutions.