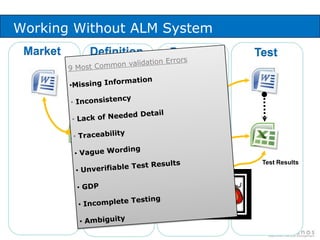

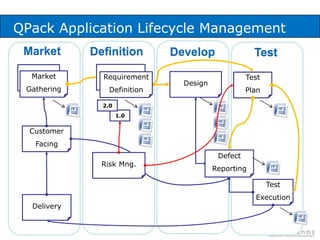

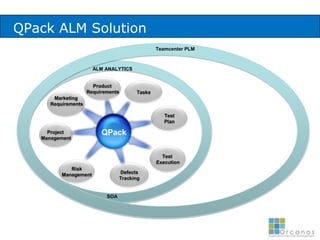

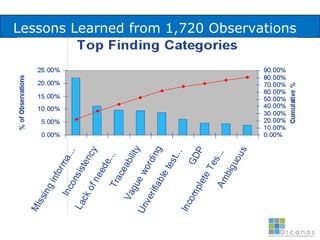

The document discusses common validation errors found in software development projects and how QPack, an Application Lifecycle Management (ALM) system, can help address them. It identifies the nine most frequent errors as missing information, inconsistency, lack of detail, poor traceability, vague wording, unverifiable test results, non-compliance with good documentation practices, incomplete testing, and ambiguity. QPack provides an integrated platform for managing requirements, testing, defects, and reporting across the entire software development and validation process. Customers report that its standardized workflows and reporting capabilities give them better control over, and visibility into, their projects.